JAX vs PyTorch: Ultimate Deep Learning Framework Comparison

NovitaAI

Introduction

Deep learning has become a popular field in machine learning, and there are several frameworks available for building and training deep neural networks. Two of the most popular deep learning frameworks are JAX and PyTorch.

JAX, built on functional programming principles and designed for high-performance numerical computing, offers composable function transformations such as automatic differentiation, JIT compilation, and vectorization. PyTorch, favored for its ease of use and dynamic computation graph, is popular for implementing neural networks. Both frameworks have extensive community support and cover a diverse range of deep learning tasks and algorithms. Let's delve deeper into their key differences and strengths.

Understanding JAX and PyTorch

JAX and PyTorch are renowned deep learning frameworks. Both frameworks leverage powerful tools for neural network implementation, with PyTorch offering simplicity and JAX emphasizing functional programming principles. Understanding the nuances of these frameworks is crucial for selecting the ideal solution for specific machine learning tasks and projects.

Origins of JAX and Its Evolution in Deep Learning

Originally developed by Google Research, JAX emerged as a powerful framework that implements automatic differentiation for machine learning and deep learning tasks. It is built on functional programming principles, offering unique features such as function transformations and scalability. JAX's NumPy-compatible API has helped make it one of the popular options for deep learning.

Over time, JAX has gained traction in the community due to its functional programming model and faster performance, especially when handling complex neural networks.

The Emergence of PyTorch and Its Impact on AI Research

PyTorch emerged as a powerful framework, transforming AI research with its ease of use and extensive community support. Its unique features, like dynamic computation graphs and imperative programming model, made it a go-to choice for deep learning tasks. PyTorch's popularity surged due to its Pythonic syntax and seamless integration with hardware accelerators. Researchers found PyTorch easy for experimentation, debugging, and scaling deep learning models. Its impact on AI research continues to grow, shaping the landscape of deep learning algorithms and applications.
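To make the idea of a dynamic computation graph concrete, here is a minimal sketch. The function `f` and its two branches are purely illustrative assumptions, not taken from any PyTorch tutorial. The point is that ordinary Python control flow decides which operations run, and autograd records only those.

```python
import torch

def f(x):
    # Plain Python branching on a tensor value: the graph is built as the
    # code runs, so each call may record a different set of operations.
    if x.item() > 0:
        return x ** 2
    return -3.0 * x

x_pos = torch.tensor(2.0, requires_grad=True)
f(x_pos).backward()          # differentiates the x**2 branch

x_neg = torch.tensor(-1.0, requires_grad=True)
f(x_neg).backward()          # differentiates the -3*x branch

print(x_pos.grad, x_neg.grad)
```

Because the graph is rebuilt on every call, a debugger or a `print` statement placed inside `f` works exactly as it would in any other Python function.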

Key Features Comparison

Automatic differentiation and hardware accelerator support are central to the JAX vs PyTorch comparison. JAX stands out with its functional programming model and its use of the XLA compiler for high-performance computing. PyTorch, on the other hand, has a gentler learning curve and builds its computation graph dynamically as the code runs. Both frameworks offer unique features and extensive community support, making them top choices for deep learning tasks.
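As a rough illustration of how JAX hands a function to the XLA compiler, the sketch below wraps a small element-wise function with `jax.jit`. The function `selu` and its constants are illustrative assumptions chosen for this example.

```python
import jax
import jax.numpy as jnp

def selu(x, alpha=1.67, lam=1.05):
    # A small element-wise function written with the NumPy-like jnp API.
    return lam * jnp.where(x > 0, x, alpha * jnp.exp(x) - alpha)

# jax.jit traces the function once per input shape/dtype and compiles it
# with XLA; later calls with matching inputs reuse the compiled version.
selu_jit = jax.jit(selu)

x = jnp.arange(-3.0, 3.0, 0.5)
eager = selu(x)         # runs operation by operation
compiled = selu_jit(x)  # runs the compiled version

print(jnp.allclose(eager, compiled, atol=1e-6))
```

The compiled and uncompiled versions compute the same values. Any speed difference depends on the hardware and the size of the workload, so it is worth measuring rather than assuming.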

- Syntax and Flexibility:

JAX is a relatively new framework that was built with the goal of providing a simple and flexible way to write high-performance code for machine learning models. Its syntax is similar to NumPy, which makes it easy to learn for those already familiar with the popular numerical computing library. PyTorch, on the other hand, has a larger object-oriented API (modules, optimizers, and tensors that carry gradient state) that can take some time to get used to, but it offers a great deal of flexibility for building complex neural network architectures.

- Performance and Speed:

JAX, leveraging XLA and JIT compilation, handles complex computations efficiently and was designed with TPUs in mind. PyTorch is efficient on GPUs; it can also target TPUs through the separate PyTorch/XLA package, but that is not its primary execution path. For TPU-based workloads JAX is therefore often the more natural fit, which can give it an edge in scalability and performance for those specific requirements. Actual results depend on the model and hardware, so benchmark your own workload before choosing a framework.

- Ecosystem and Community Support:

Both frameworks have active communities and offer a wide range of tools and libraries for deep learning tasks. However, PyTorch has been around longer and has a larger user base, which means that there are more resources available for beginners and more established libraries for specific tasks like computer vision or natural language processing.
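Returning to the syntax point in the list above, the following sketch places NumPy and `jax.numpy` side by side. The array values are arbitrary and chosen only for illustration. It also shows one difference that newcomers often meet first: JAX arrays are immutable.

```python
import numpy as np
import jax.numpy as jnp

a_np = np.linspace(0.0, 1.0, 5)
a_jx = jnp.linspace(0.0, 1.0, 5)

# The same function names and broadcasting rules apply in both libraries.
mean_np = np.mean(np.sin(a_np) ** 2)
mean_jx = jnp.mean(jnp.sin(a_jx) ** 2)

# NumPy allows in-place assignment...
a_np[0] = 10.0
# ...while JAX uses a functional update that returns a new array
# and leaves the original untouched.
b_jx = a_jx.at[0].set(10.0)

print(mean_np, mean_jx, a_jx[0], b_jx[0])
```

The functional update style follows from JAX's functional programming model: transformed functions are expected to be free of side effects.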

Differences in Ecosystem and Community Support

The ecosystem and community support for JAX and PyTorch differ significantly.

PyTorch boasts a larger community with extensive support for beginners and advanced users alike, making it a good choice for those with specific requirements.

JAX has a more niche community but offers unique features and is built on top of a NumPy-style API, leveraging functional programming principles.

While PyTorch excels in practicality, JAX shines in its functional programming model, catering to users with more complex needs.

The Developer Community: A Comparative Analysis

The developer community surrounding JAX and PyTorch plays a crucial role in the evolution and adoption of these deep learning frameworks.

While PyTorch boasts a larger community due to its earlier release and backing by Meta (formerly Facebook), JAX is gaining momentum within the machine learning community. Developers appreciate PyTorch for its extensive community support and resources. On the other hand, JAX's unique features and functional programming paradigm attract those looking for a more specialized approach in deep learning development.

Available Libraries and Extensions

When considering available libraries and extensions, both JAX and PyTorch offer a rich ecosystem to support machine learning tasks.

While PyTorch boasts a wide array of pre-built modules for neural networks, JAX excels in leveraging XLA for high-performance computations. PyTorch's repository contains numerous community-developed extensions for diverse functionalities, whereas JAX's functional programming model allows for convenient function transformations. Depending on specific requirements, users can explore the libraries and extensions provided by both frameworks to enhance their deep learning projects.
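As a small illustration of what "pre-built modules" means in practice, the sketch below assembles a two-layer network from `torch.nn` building blocks. The layer sizes and batch size are arbitrary assumptions.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Stack ready-made layers; each module owns and registers its parameters.
model = nn.Sequential(
    nn.Linear(4, 8),
    nn.ReLU(),
    nn.Linear(8, 1),
)

batch = torch.randn(16, 4)   # 16 examples with 4 features each
out = model(batch)

# Parameters are discoverable through the module tree.
n_params = sum(p.numel() for p in model.parameters())
print(out.shape, n_params)
```

JAX itself does not ship a layer library of this kind. In the JAX ecosystem, that role is typically filled by separate libraries built on top of it.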

Use Cases and Success Stories

Real-world applications showcase JAX's versatility in scientific computing, quantum algorithms, and neural networks. Conversely, PyTorch finds extensive use in image classification, natural language processing, and computer vision tasks within industry and academia.

Real-World Applications of JAX

JAX finds real-world applications in diverse fields like machine learning, where its automatic differentiation capabilities empower developers to implement efficient deep learning models. Its ability to interface seamlessly with GPUs and TPUs makes it a powerful choice for projects requiring hardware accelerators. JAX's functional programming paradigm and functional transformations enable users to build and scale complex deep neural networks for various deep learning tasks. Its performance and ease of use position it as a top contender for cutting-edge deep learning algorithms in real-world scenarios.
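The way JAX's transformations compose can be sketched with a toy linear regression. Everything here (the data, the learning rate, and the helper names `predict`, `loss`, and `step`) is a hypothetical example rather than a recommended training setup.

```python
import jax
import jax.numpy as jnp

def predict(params, x):
    w, b = params
    return x @ w + b

def loss(params, x, y):
    return jnp.mean((predict(params, x) - y) ** 2)

@jax.jit  # compile the whole update step with XLA
def step(params, x, y, lr=0.1):
    # jax.grad differentiates with respect to the first argument (params).
    grads = jax.grad(loss)(params, x, y)
    # Parameters are a tuple (a pytree); update each leaf functionally.
    return jax.tree_util.tree_map(lambda p, g: p - lr * g, params, grads)

key = jax.random.PRNGKey(0)
x = jax.random.normal(key, (64, 3))
y = x @ jnp.array([1.0, -2.0, 0.5]) + 0.3   # synthetic targets

params = (jnp.zeros(3), jnp.array(0.0))
initial_loss = loss(params, x, y)
for _ in range(200):
    params = step(params, x, y)
final_loss = loss(params, x, y)

print(initial_loss, final_loss)
```

Note that `step` returns new parameters instead of modifying them in place, which is what allows `jax.jit` and `jax.grad` to be stacked freely.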

PyTorch in Industry and Academia

PyTorch has made significant inroads in industry and academia, being widely adopted for various deep learning applications. Its flexibility, scalability, and ease of use have propelled it to the forefront of deep learning frameworks. Industries across sectors such as healthcare, finance, and technology leverage PyTorch for research, production models, and innovative projects. In academia, PyTorch is a staple tool for researchers and students due to its robust support for experimentation and implementation of cutting-edge deep learning algorithms.

Code Examples and Tutorials

Both frameworks provide tutorials and code examples that cater to beginners and experts, making them popular options in the realm of deep learning tasks and algorithms. Engage with JAX code or PyTorch code to explore the power of these frameworks.

Example of JAX

Here is a simple example of using JAX and Python to calculate the derivative of the function y = x^2 at the point x = 2:

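    import jax

    def f(x):
        return x**2

    # Hand-written derivative of f, kept for comparison; jax.grad does not use it.
    def grad_f(x):
        return 2*x

    # jax.grad requires a floating-point input, so write 2.0 rather than 2.
    x = 2.0

    dy = jax.grad(f)(x)

    print(dy)

Here is a breakdown of what each step of the code does:

- The first step imports the jax module.
- The second step defines the function f.
- The third step defines the function grad_f, which is the hand-written derivative of f.
- The fourth step assigns the value 2.0 to the variable x. The value must be a float, because jax.grad rejects integer inputs.
- The fifth step calculates the derivative of f at the point x using the jax.grad function.
- The sixth step prints the value of the derivative.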
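The example above defines grad_f but never calls it. A natural extension, sketched here, is to compare the automatic derivative with the hand-written one and to nest `jax.grad` to obtain a second derivative.

```python
import jax

def f(x):
    return x ** 2

def grad_f(x):
    # Analytical derivative of f, used only as a reference value.
    return 2 * x

x = 2.0
auto = jax.grad(f)(x)               # derivative computed by JAX
manual = grad_f(x)                  # derivative computed by hand
second = jax.grad(jax.grad(f))(x)   # grad of grad: the second derivative

print(auto, manual, second)
```

Because `jax.grad` returns an ordinary Python function, it can be applied again or combined with other transformations such as `jax.jit`.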

PyTorch Example

Let’s explore the derivative example from above, but this time with PyTorch. Here is a simple example of using PyTorch to calculate the derivative of the function y = x^2 at the point x = 2:

    import torch

    def f(x):
        return x**2

    # Only floating-point tensors can require gradients, so write 2.0 rather than 2.
    x = torch.tensor(2.0, requires_grad=True)

    y = f(x)

    y.backward()

    print(x.grad)

Here is a breakdown of what each step of the code does:

- The first step imports the torch module.
- The second step defines the function f.
- The third step creates a tensor x with the value 2.0 and sets the requires_grad flag to True. The value must be a float, because PyTorch only tracks gradients for floating-point (or complex) tensors.
- The fourth step calculates the value of y = f(x).
- The fifth step calculates the gradient of y with respect to x using the backward method.
- The sixth step prints the value of the gradient.
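One behavior worth knowing when using backward is that gradients accumulate in x.grad across calls. The sketch below demonstrates this and also shows `torch.autograd.grad`, which returns the gradient directly instead of storing it on the tensor. The variable names are illustrative.

```python
import torch

x = torch.tensor(2.0, requires_grad=True)

(x ** 2).backward()
first = x.grad.clone()         # gradient after one backward pass

(x ** 2).backward()            # a second pass adds to x.grad
accumulated = x.grad.clone()

x.grad.zero_()                 # reset before the next computation

# Functional alternative: returns a tuple of gradients and
# leaves x.grad untouched.
(dy_dx,) = torch.autograd.grad(x ** 2, x)

print(first, accumulated, dy_dx, x.grad)
```

This accumulation is why PyTorch training loops usually reset gradients before each backward pass.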

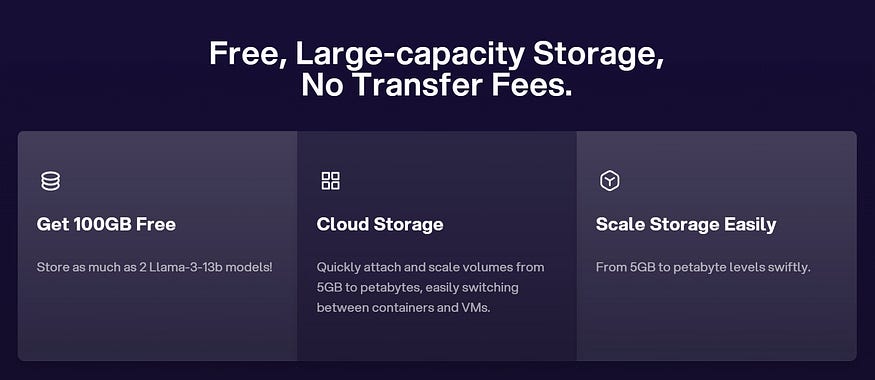

Use GPU Cloud to Accelerate Deep Learning

Novita AI GPU Instance, a cloud-based solution, stands out as an exemplary service in this domain. This cloud is equipped with high-performance GPUs such as the NVIDIA A100 SXM and RTX 4090, which is particularly beneficial for PyTorch users who need additional computational power without investing in local hardware.

Novita AI GPU Instance has key features like:

1. GPU Cloud Access:

Novita AI provides a GPU cloud that users can leverage while using the PyTorch Lightning Trainer. This cloud service offers cost-efficient, flexible GPU resources that can be accessed on-demand.

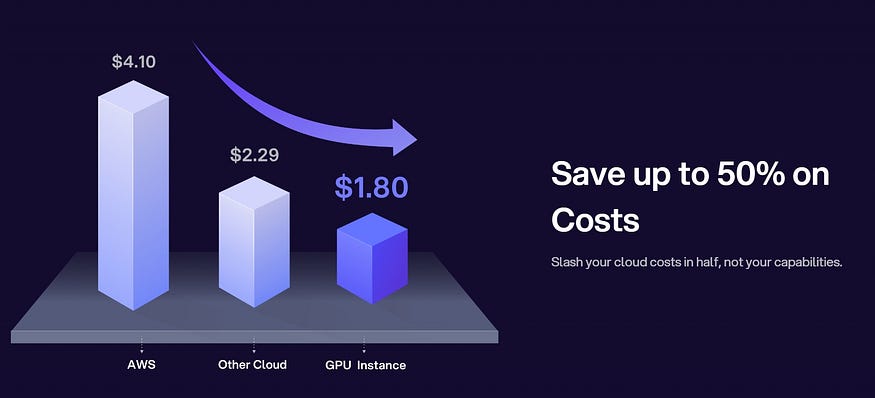

2. Cost-Efficiency:

Users can expect significant cost savings, with the potential to reduce cloud costs by up to 50%. This is particularly beneficial for startups and research institutions with budget constraints.

3. Instant Deployment:

Users can quickly deploy a Pod, which is a containerized environment tailored for AI workloads. This deployment process is streamlined, ensuring that developers can start training their models without any significant setup time.

4. Customizable Templates:

Novita AI GPU Pods come with customizable templates for popular frameworks like PyTorch, allowing users to choose the right configuration for their specific needs.

5. High-Performance Hardware:

The service provides access to high-performance GPUs such as the NVIDIA A100 SXM, RTX 4090, and A6000, each with substantial VRAM and RAM, ensuring that even the most demanding AI models can be trained efficiently.

Future Directions and Developments

Exciting developments lie ahead for both JAX and PyTorch.

JAX is focusing on enhancing its performance and expanding its support for hardware accelerators. Future updates may also address memory usage optimization for large-scale models.

PyTorch is expected to continue its growth by incorporating more advanced features for deep learning tasks. The community eagerly anticipates new releases from both frameworks, as they strive to push the boundaries of machine learning and deep learning.

Roadmap and Upcoming Features in JAX

JAX is evolving rapidly, with an exciting roadmap ahead. Upcoming features focus on enhancing machine learning capabilities, particularly in deep learning tasks. Improved automatic differentiation methods, expanded support for hardware accelerators like TPUs, and advancements in scalability are on the horizon. JAX's commitment to performance optimization and seamless integration with popular frameworks sets the stage for a promising future. Stay tuned for updates on new functionalities and optimizations, reinforcing JAX as a top choice in the deep learning landscape.

What’s Next for PyTorch? Emerging Trends

Discover the future trends of PyTorch including enhanced model interpretability, improved deployment options, and increased support for mobile and edge computing. Stay updated on advancements in PyTorch to leverage cutting-edge AI technologies effectively.

Conclusion

When deciding between JAX and PyTorch for deep learning projects, consider your specific requirements and the scale of your project. PyTorch excels in ease of use and has a larger community, which makes it ideal for beginners. JAX is a powerful option for those familiar with functional programming and seeking faster performance. Both frameworks offer unique features and extensive community support, catering to different needs. Ultimately, the choice between JAX and PyTorch depends on your project's complexity and your familiarity with functional programming principles.

Frequently Asked Questions

Which framework is better suited for beginners in deep learning?

For beginners in deep learning, PyTorch is often recommended due to its user-friendly interface and vast community support. Beginners might find PyTorch more accessible for starting their deep learning journey.

Can models trained in PyTorch be easily converted to work with JAX?

To migrate PyTorch models to JAX, you need to rewrite the model architecture and convert parameters. While manual conversion is possible, tools like torch2jax can aid in this process, streamlining the transition between the frameworks effectively.
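The manual route (rewrite the architecture, convert the parameters) can be sketched for a single linear layer. This is a minimal illustration with hypothetical names (`torch_layer`, `linear_jax`), not the API of any conversion tool, and it assumes both frameworks are installed in the same environment.

```python
import numpy as np
import torch
from torch import nn
import jax.numpy as jnp

torch.manual_seed(0)
torch_layer = nn.Linear(3, 2)

# Step 1: copy the parameters out of PyTorch as JAX arrays.
params = {
    name: jnp.asarray(tensor.detach().cpu().numpy())
    for name, tensor in torch_layer.state_dict().items()
}

# Step 2: rewrite the forward pass as a pure function of (params, x).
def linear_jax(params, x):
    # nn.Linear stores its weight as (out_features, in_features),
    # hence the transpose.
    return x @ params["weight"].T + params["bias"]

# Step 3: check that both versions agree on the same input.
x_np = np.ones((4, 3), dtype=np.float32)
out_torch = torch_layer(torch.from_numpy(x_np)).detach().numpy()
out_jax = np.asarray(linear_jax(params, jnp.asarray(x_np)))

print(np.allclose(out_torch, out_jax, atol=1e-5))
```

For larger models the same three steps apply layer by layer, and comparing outputs on a fixed input is a simple way to catch layout mismatches such as transposed weights.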

Does OpenAI use JAX or PyTorch?

OpenAI primarily utilizes PyTorch in their research and development projects. While JAX offers advantages in certain domains, PyTorch's extensive ecosystem and user-friendly interface make it a preferred choice for OpenAI's AI initiatives.

Originally published at Novita AI.
