Calculating The Size of The Dataflow Gen2 Staging Lakehouses

Sandeep Pawar

Sandeep PawarMy friend Alex Powers (PM, Fabric CAT) wrote a blog post about cleaning the staging lakehouses generated by Dataflow Gen2. Before reading this blog, go ahead and read his blog first on the mechanics of it and the whys. Note that these are system generated lakehouses so at some time in the future, they will be automatically purged but until then the users will be paying the storage cost of these lakehouses. If you want to read more about how dataflow gen2 works and whether you should stage or not , read this and this blog.

A question was asked on Reddit, how do you get the size of these lakehouses to determine the impact. Here is how you do that :

import sempy.fabric as fabric

import pandas as pd

def get_staging_lakehouse_size():

"""

Fabric.Guru | Sandeep Pawar | 07/19/2024

Get the size of each DataflowsStagingLakehouse in the capacity in MB

"""

ppu_capacities = fabric.list_capacities().query('Sku == "PP3"') # Exclude PPU workspaces

ws = fabric.list_workspaces().query('`Is On Dedicated Capacity`==True') # Only Premium

ws = ws[~ws['Capacity Id'].isin(ppu_capacities.Id)]

results = []

for _, row in ws.iterrows():

try:

lakehouses = mssparkutils.lakehouse.list(row['Id'])

for lakehouse in lakehouses:

if lakehouse['displayName'] == "DataflowsStagingLakehouse":

abfs_path = lakehouse['properties']['abfsPath']

path = abfs_path + "/Tables"

tables = [f.path for f in mssparkutils.fs.ls(path)]

total_size_mb = max(1, sum(round(sum([f.size for f in mssparkutils.fs.ls(table)]) / (1024 * 1024)) for table in tables))

results.append({'Name': row['Name'], 'Size_MB': total_size_mb})

except:

pass

return pd.DataFrame(results).sort_values('Size_MB', ascending=False)

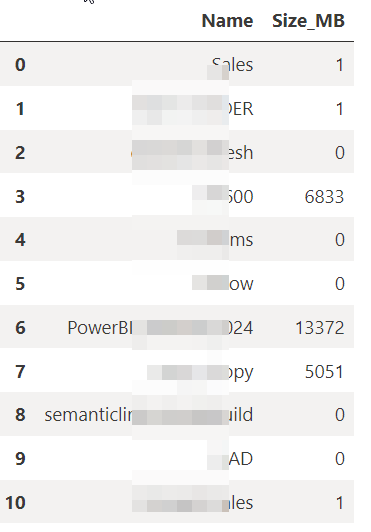

result_df = get_staging_lakehouse_size()

result_df

The pseudo-code is:

Get Premium/Fabric workspaces, excluding PPU

Identify workspaces with staging lakehouses

For each lakehouse, iterate over the tables and calculate the size of the delta tables in MB

I didn't know I had accumulated 25B of gunk in the Onelake that I was paying for. You can use this to decide/automate which workspaces to clean up.

If you want to nuke all the tables in these lakehouses, just adjust the code above to drop the tables. I considered sharing that, but I don't want you to blame me if your dataflows suddenly stopped working :P You do it at your own risk :D

References:

Cleaning the (Staging) Lakeside - It's Not About The Cell (itsnotaboutthecell.com)

Fabric Dataflows Gen2: To stage or not to stage? (crossjoin.co.uk)

Subscribe to my newsletter

Read articles from Sandeep Pawar directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Sandeep Pawar

Sandeep Pawar

Principal Program Manager, Microsoft Fabric CAT helping users and organizations build scalable, insightful, secure solutions. Blogs, opinions are my own and do not represent my employer.