Build & Package Manager tools in DevOps

Suraj

Table of contents

- Introduction to Build & Package Manager Tools:

- Artifact repository:

- What kind of file is in the artifact?

- Install Java and Build tools

- How to build the artifact with dependencies?

- Build tools in Java

- Build Tools for Development (Managing Dependencies)

- Run the application

- Build JavaScript application

- What does the zip/tar file include?

- Package Frontend Code

- What are Build tools for other programming languages

- Publish an artifact into the repository

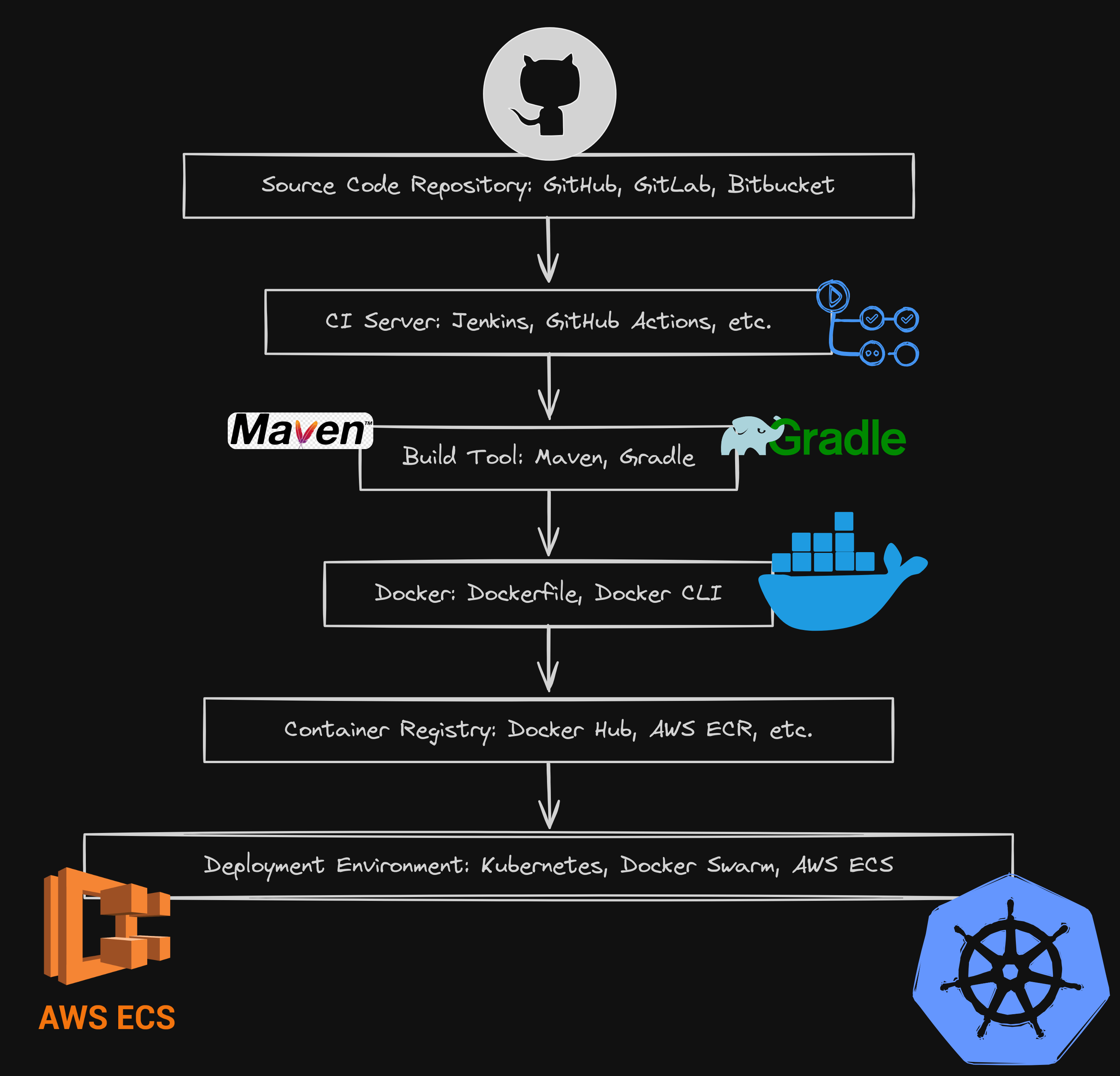

- Build tools & Docker

- Build Tools for DevOps Engineers

Introduction to Build & Package Manager Tools:

What are build and package manager tools?

When developers create an application, it needs to be available to end users. This involves deploying the application on a production server, which requires moving the application code and its dependencies to the server. This is where build and package manager tools come into play.

These tools package the application into a single movable file, which is called an artifact.

Packaging = “building the code”

Building the code involves:

Compiling (turning the source code into a runnable form)

Compressing (hundreds of files into 1 single file)

After the artifact is built, we also keep it in storage, in case we need to deploy it multiple times, keep a backup, etc.

E.g., we deploy the same artifact to the dev server, then to the test environment, and later to the production environment.
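As a rough illustration of "many files into one movable file", the sketch below packs a small project directory into a single compressed archive with `tar`. The project name `my-app`, its two files, and the archive name are hypothetical; a real build tool does more than this (compiling, resolving dependencies), so treat it only as a picture of the packaging step.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical project, created in a temp dir so the sketch is self-contained.
WORKDIR="$(mktemp -d)"
mkdir -p "$WORKDIR/my-app/src"
echo 'console.log("hello");' > "$WORKDIR/my-app/src/index.js"
echo '{"name": "my-app", "version": "1.0.0"}' > "$WORKDIR/my-app/package.json"

# Package: many files become one compressed, movable file (the artifact).
ARTIFACT="$WORKDIR/my-app-1.0.0.tar.gz"
tar -czf "$ARTIFACT" -C "$WORKDIR" my-app

# List the archive contents to confirm what was packaged.
CONTENTS="$(tar -tzf "$ARTIFACT")"
echo "$CONTENTS"
```

The same archive file can then be copied to the dev, test, and production servers without rebuilding it.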

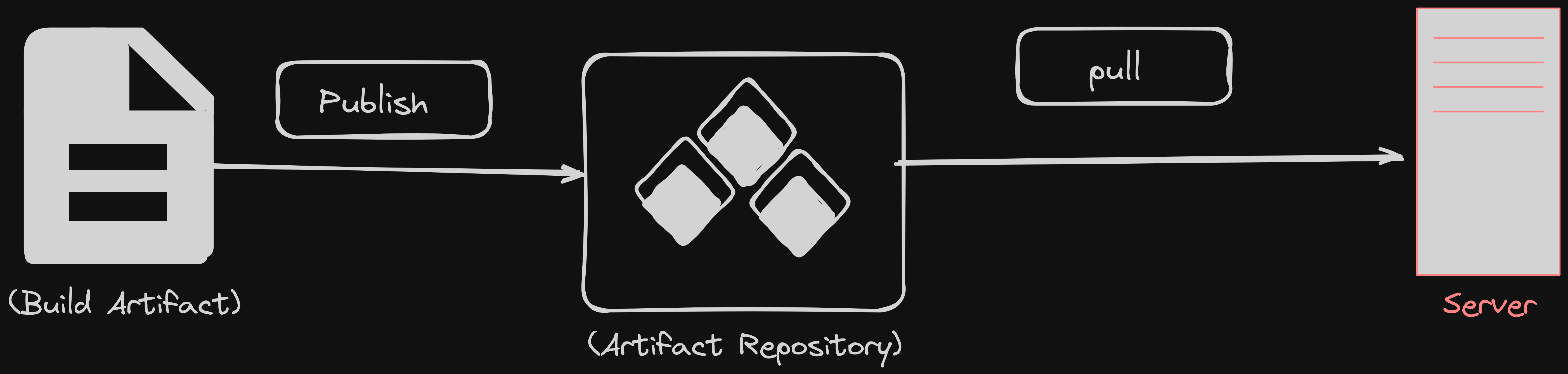

Artifact repository:

The storage location where we keep the artifact once we build it is called the artifact repository. Examples are Nexus and JFrog Artifactory.

What kind of file is in the artifact?

The artifact file looks different for each programming language

E.g., if you have a Java Application the artifact file will be a JAR or WAR file

JAR = Java Archive

It includes the whole application code plus dependencies such as:

Spring Framework

Date/time libraries

PDF processing libraries

Install Java and Build tools

Install Java

Install Maven

Install Node.js + npm

Download IntelliJ or VS Code

How to build the artifact with dependencies?

By using a build tool. There are special tools for building the artifact.

These tools are specific to the programming language

For Java:

- There is Maven and Gradle

For Node.js or JavaScript:

- There are npm and yarn

What these tools do:

Install dependencies

Compile and compress your code

Run various other tasks

Build tools in Java

The artifact is a JAR or WAR file.

Maven: Uses XML for configuration and is widely used for managing dependencies and building projects.

Gradle: Uses Groovy for configuration and is known for its flexibility and performance.

| Tool | Maven | Gradle |
| --- | --- | --- |
| Language used | XML | Groovy |

Groovy is more convenient than XML for scripting and for writing the tasks that build the code, compile it, etc.

Both have command line tools and commands to execute the tasks

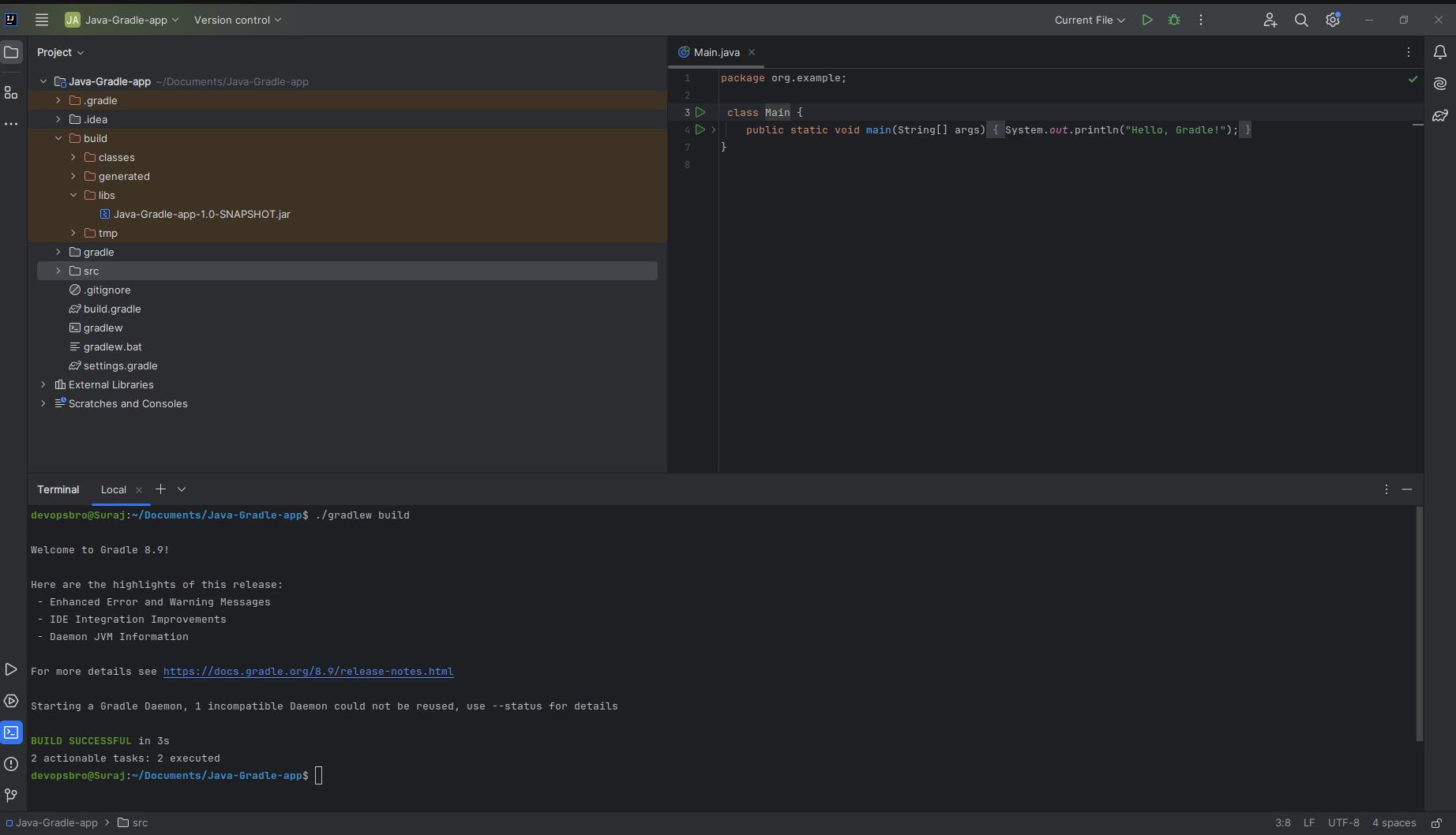

Gradle command to build the Java application (hands-on)

This command will compile the code, resolve dependencies, and package the application into an artifact using Gradle.

./gradlew build

With Gradle, we don't need to configure how the JAR file is built.

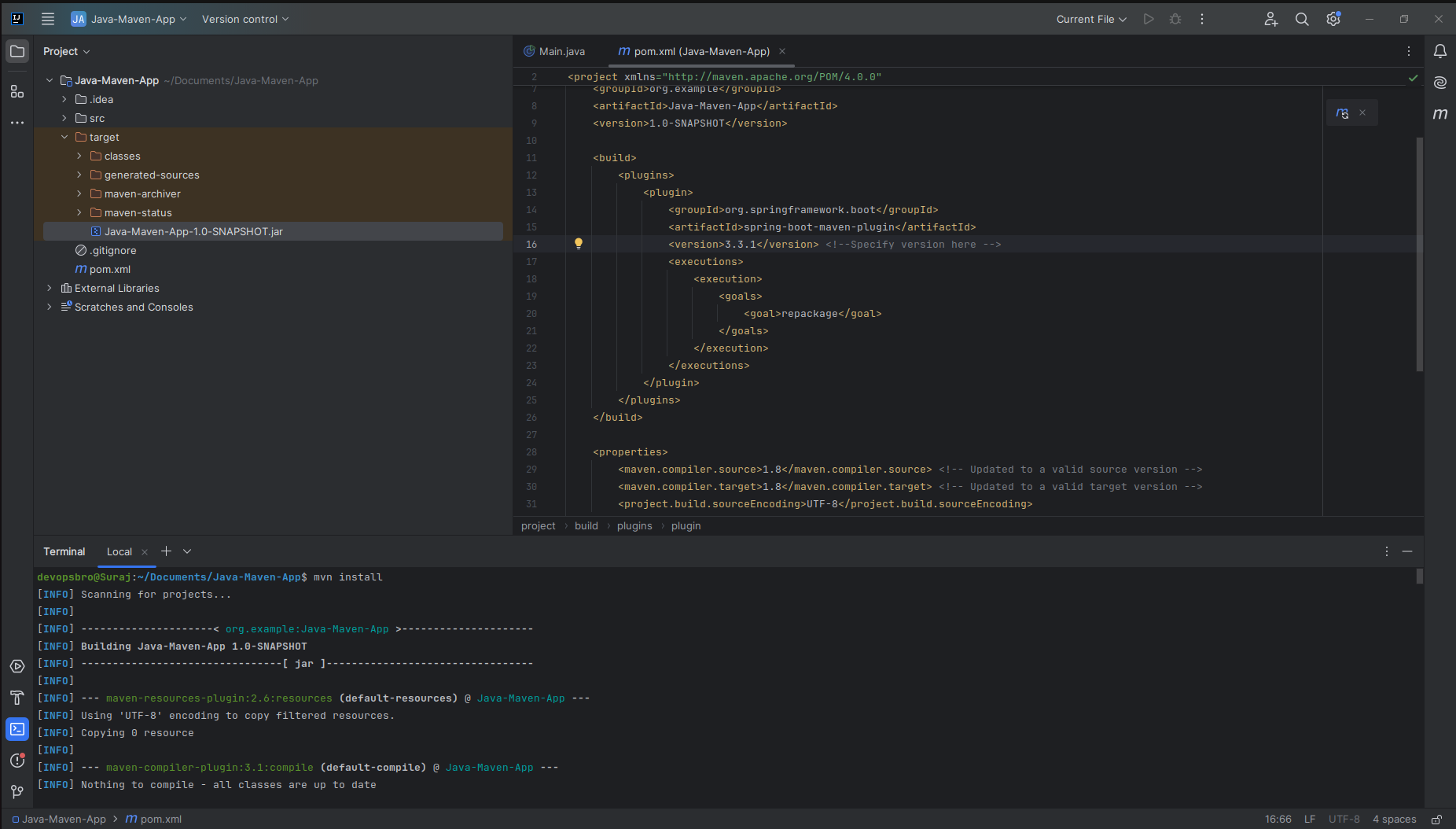

Maven command to build the Java application

Add this plugin to your pom.xml file:

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<version><!-- Specify version here --></version>

<executions>

<execution>

<goals>

<goal>repackage</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

The command will compile the code, run tests, package the application into an artifact, and install it into the local Maven repository.

mvn install

Build Tools for Development (Managing Dependencies)

You also need the build tools locally when developing the app, because you need to:

Run the app locally

Run tests

Maven and Gradle have their own dependency files

- Dependencies file = Managing the dependencies for the project

Where these dependencies files live:

For Maven: pom.xml

For Gradle: build.gradle

Example: you need a library to connect your Java application to Elasticsearch.

Find the dependency with its name and version

Add it to the dependencies file (e.g. pom.xml)

The dependency gets downloaded locally (e.g. into the local Maven repository)

Run the application

Now let's say we've built an artifact, stored it in the artifact repository, and also copied it to the server.

Now the question is: how do you run the application from the artifact?

This is the command to execute the .jar file for example:

General form:

java -jar <name of jar file>

Gradle example:

java -jar build/libs/java-app-1.0-SNAPSHOT.jar

Maven example:

java -jar target/java-maven-app-1.0-SNAPSHOT.jar

So if you are on a fresh server with Java installed, you can download the artifact from the repository and start the application using the commands above.

Build JavaScript application

What about a JavaScript application?

JS doesn't have a special artifact type

So it can be packaged as a ZIP or TAR file

So how do we build a JS artifact file as a zip or tar file?

These are the JavaScript alternatives to Gradle and Maven:

npm (much more widely used)

yarn

Both use a package.json file for dependencies

npm and yarn are package managers and NOT build tools

These package managers install dependencies, but they are not used for transpiling JavaScript code.

npm repository for dependencies: this refers to the npm registry, which is a public repository of JavaScript packages. It is used to find and install the dependencies (libraries or modules) required for JavaScript projects.

Command Line Tools - npm

npm start: start the application

npm stop: stop the application

npm test: run the tests

npm publish: publish the artifact

What does the zip/tar file include?

- application code, but not the dependencies

When you run the app on the server, you must:

Unpack the zip/tar file

Install the dependencies

Run the app

The server needs both the application code and the package.json file to install the dependencies and run the application. A tarball created with npm pack already contains package.json.

To create an artifact file from our Node.js application:

npm pack
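The server-side steps above (unpack, install dependencies, run) could be sketched as a small shell function. The function name `deploy_node_artifact`, the tarball name, and the target directory are hypothetical, and the sketch assumes the tarball was created by `npm pack` (which places the files under a top-level `package/` folder) and that `package.json` defines a `start` script.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Sketch: deploy a tarball created by `npm pack` on a server.
# Arguments (both hypothetical examples):
#   $1 = path to the tarball, e.g. my-app-1.0.0.tgz
#   $2 = directory to install into, e.g. /opt/my-app
deploy_node_artifact() {
  local artifact="$1"
  local target_dir="$2"

  mkdir -p "$target_dir"

  # 1. Unpack. `npm pack` puts all files under a top-level "package/" folder,
  #    so --strip-components=1 drops that folder while extracting.
  tar -xzf "$artifact" -C "$target_dir" --strip-components=1

  # 2. Install the runtime dependencies listed in package.json
  #    (the tarball normally does not contain node_modules).
  (cd "$target_dir" && npm install --omit=dev)

  # 3. Run the app via the "start" script from package.json.
  (cd "$target_dir" && npm start)
}
```

On the server it would be called as, for example, `deploy_node_artifact my-app-1.0.0.tgz /opt/my-app`.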

The JavaScript world is much more flexible than the Java world, but not as structured and standardized.

The above covers a Node.js backend application. What about packaging a frontend application?

Package Frontend Code

Different ways to package this

Package frontend and backend code separately

Common artifact file

For example, if we have a React and a Node.js application, both in JavaScript, then we can have:

Separate package.json files for frontend and backend

Common package.json file

Frontend/react code needs to be transpiled!

Browsers don't support the latest JS versions or other fancy code decorations, like JSX

The code needs to be compressed/minified!

Separate tools for that - Build Tools/Bundler!

e.g.: webpack

Build Tools - Webpack

Webpack is a build tool that bundles, transpiles, and minifies JavaScript and other assets for frontend applications.

It transpiles, minifies, bundles, and compresses the code.

// install the dependencies listed in package.json, including webpack

npm install

// run the build script, which bundles and compresses the JavaScript code

npm run build

Dependencies for Frontend Code

npm and yarn for dependencies

Separate package.json vs. common package.json with backend code

Can be an advantage to have the same build and package management tools

In case a React frontend has a Java backend:

Bundle the frontend app with webpack

Manage dependencies with npm or yarn

Package everything into a WAR file

What are Build tools for other programming languages

These concepts are similar in other languages

E.g., pip is the package manager for Python

Publish an artifact into the repository

Once you build the artifact, you need to push it to the artifact repository

Build tools have commands for that

Then you can download it (with curl, wget) anywhere

NOTE: Nowadays we usually don't fetch or push the artifact like that, because of Docker.

Two important things to understand in relation to the package managers and build tools used for different programming languages are Docker and CI/CD pipelines (e.g. Jenkins).

Build tools & Docker

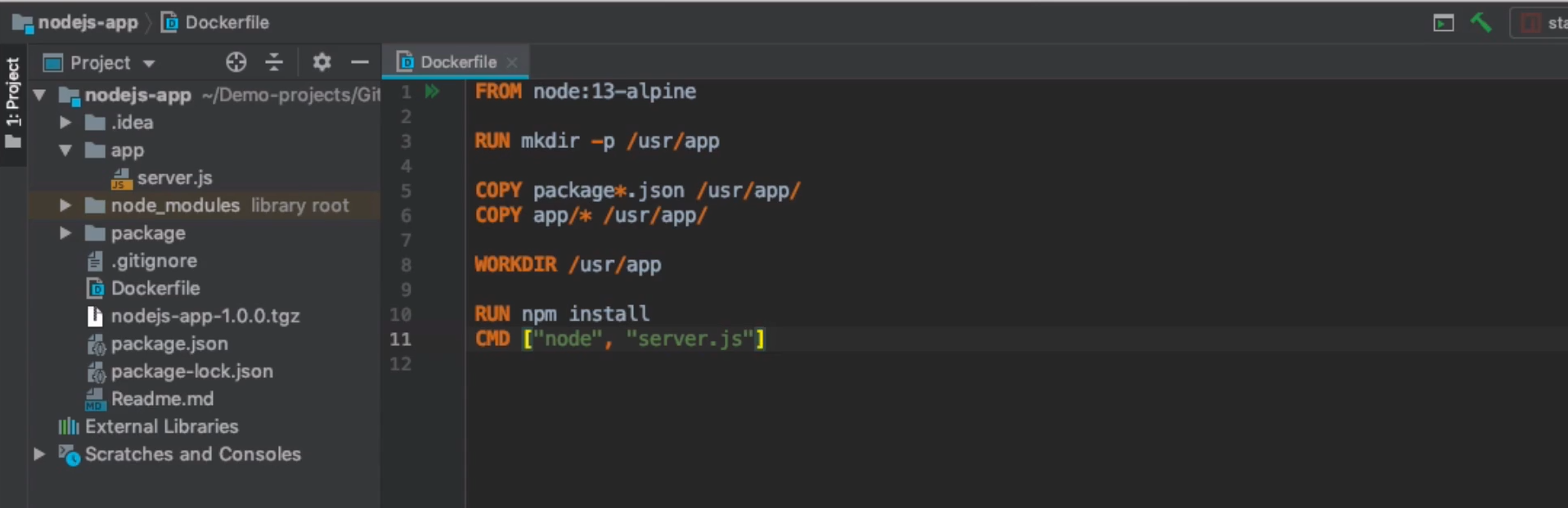

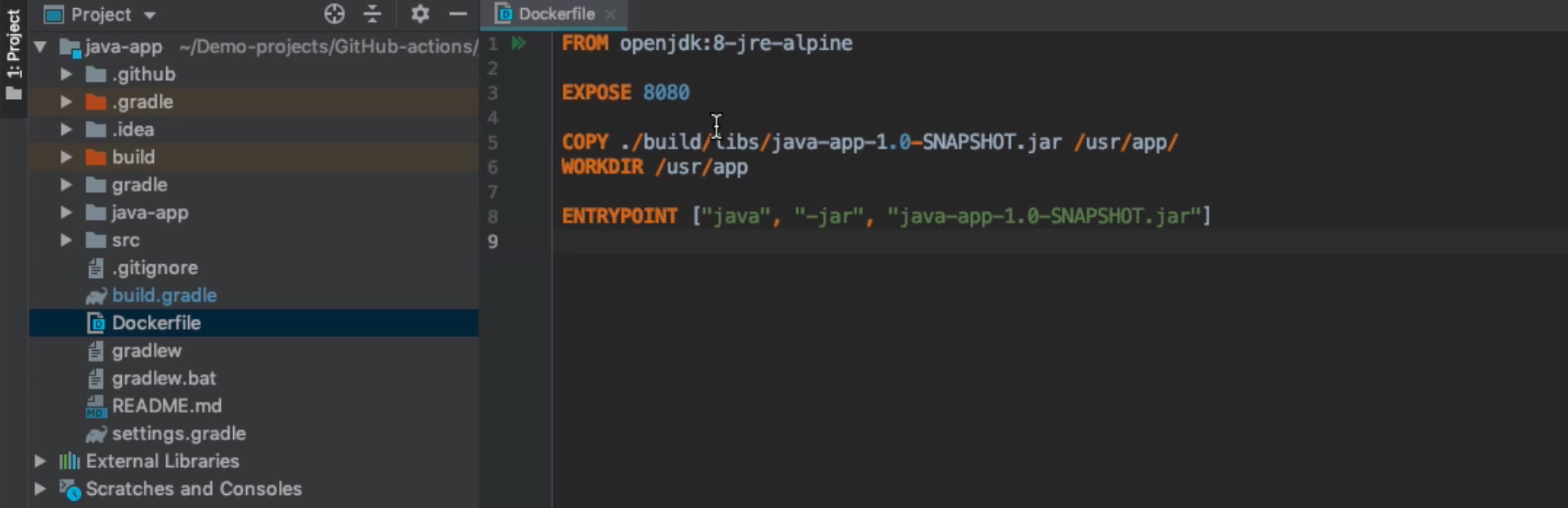

With Docker, the need to create zip or tar files is reduced. Docker images can directly contain the application code, simplifying the deployment process.

With Docker, we don't need to build and move different artifact types.

There is just one artifact type: the Docker image.

We build a Docker image from the application, so we don't need a repository for each file type, we don't need to move multiple files such as package.json to the server, and we don't need to zip anything. We just copy everything into the Docker image's filesystem and run the application from the image.

A Docker image is also an artifact.

To start the application, you don't need npm or Java on the server. Everything (the command to run the JAR or Node application) is executed inside the Docker image.

NOTE: For Java and JavaScript applications, we no longer need to create zip or tar files, because we can copy the JS code files directly into the Docker image. We still have to build the application, though.

Dockerfile for JavaScript

Dockerfile for Java
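The original Dockerfiles are not reproduced here, so the following is only a minimal sketch of what they might look like, written out by a shell script so it can be run as-is. The base image tags (`eclipse-temurin:17-jre`, `node:20-alpine`) and the file names `Dockerfile.java` and `Dockerfile.node` are assumptions; the JAR path is the Gradle output path used earlier in this article.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Write both example Dockerfiles into a temp dir (nothing is built here).
BUILD_DIR="$(mktemp -d)"

# Java: the JAR must already be built (e.g. with ./gradlew build).
cat > "$BUILD_DIR/Dockerfile.java" <<'EOF'
# Assumption: base image tag is illustrative.
FROM eclipse-temurin:17-jre
WORKDIR /app
COPY build/libs/java-app-1.0-SNAPSHOT.jar app.jar
CMD ["java", "-jar", "app.jar"]
EOF

# JavaScript: copy the code, install dependencies inside the image.
cat > "$BUILD_DIR/Dockerfile.node" <<'EOF'
# Assumption: base image tag is illustrative.
FROM node:20-alpine
WORKDIR /app
COPY package*.json ./
RUN npm install --omit=dev
COPY . .
CMD ["npm", "start"]
EOF

echo "Wrote Dockerfiles to $BUILD_DIR"
# To build an image (requires Docker; run from the project directory):
#   docker build -f "$BUILD_DIR/Dockerfile.java" -t java-app:1.0 .
```

In both cases the run command (`java -jar` or `npm start`) lives inside the image, which is why the server itself needs neither Java nor npm.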

Build Tools for DevOps Engineers

Why should you know these Build Tools?

DevOps engineers need to understand build tools to assist developers, automate builds, and manage CI/CD pipelines. This includes:

Developers install dependencies locally and run the application, but don’t build it locally

You need to execute tests on the build servers

npm/yarn test

gradle/mvn test

Help developers with building the application

Because you know where and how it will run

Build Docker image ⇒ Push to Repo ⇒ Run on Server

You need to configure the build automation tool (CI/CD pipeline)

Install dependencies ⇒ run tests ⇒ build/bundle app ⇒ push to repo
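The pipeline stages above could be sketched as a shell script for a Node.js project that is shipped as a Docker image. The image name `registry.example.com/my-app:1.0.0` and the `DRY_RUN` switch are assumptions made for this illustration; with `DRY_RUN=1` (the default here) the script only prints each step, so it can be tried without npm or Docker installed. It also assumes the project has a `package-lock.json` (needed by `npm ci`) and a `build` script in package.json.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical image name; replace with your own registry/repository.
IMAGE="${IMAGE:-registry.example.com/my-app:1.0.0}"
# With DRY_RUN=1 the steps are only printed, not executed.
DRY_RUN="${DRY_RUN:-1}"

# Print a command and, unless in dry-run mode, execute it.
run() {
  echo "+ $*"
  if [ "$DRY_RUN" != "1" ]; then
    "$@"
  fi
}

pipeline() {
  run npm ci                      # install dependencies from the lock file
  run npm test                    # run tests
  run npm run build               # build/bundle the app
  run docker build -t "$IMAGE" .  # package the app as a Docker image
  run docker push "$IMAGE"        # push the image to the repository
}

pipeline
```

In a real CI job (for example a Jenkins stage) the script would be run with `DRY_RUN=0`; because of `set -e`, a failing step such as a failed test stops the pipeline before anything is pushed.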

NOTE: You don’t run the app locally
