Best Practices: Snowflake Search Optimisation Services

John Ryan

John Ryan

The fact is that Snowflake Search Optimization Service is a superb way to maximize query performance for point-lookup queries. Yet, it’s one of the most misunderstood and poorly deployed Snowflake performance query tuning tools.

It can be a really effective way to spend a lot of money and achieve nothing – which sucks. However, this article will explain what Search Optimization Service is, how it works, and (more importantly) when to use this ground-breaking performance tuning tool.

Finally, we’ll describe a best practices methodology to deploy Search Optimization to help you avoid the pitfalls and make your queries fly.

What is Search Optimization Service?

Also known as SOS, Search Optimisation Service is one of a suite of Snowflake query performance acceleration tools. Unlike Cluster Keys or Snowflake Query Acceleration Service, which are designed to maximize the performance of queries returning thousands or millions of rows, Search Optimization Service aims to maximize queries that return a few highly selective rows from tables containing millions of entries.

This insight is probably the most important feature of Search Optimization Service, leading to the Best Practices later in this article. In my experience, many Snowflake Solution Architects abandon SOS because, if used inappropriately, it can lead to high costs for little or no benefit.

To give you an idea of the potential gains, I used the Search Optimization service to improve query performance from nearly nine minutes to 17 seconds. That’s a 30 times performance improvement without the need to change a single line of code.

How Does Search Optimization Service Work?

The diagram below illustrates how Search Optimization Service builds an “index-like structure” based upon an existing table when the user executes the following ALTER TABLE statement.

alter table STORE_SALES

add search optimization;

Similar to Snowflake Clustering, the alter table creates the index structure using a background process, but don’t be confused assuming this builds an index.

“Whereas a B-Tree index records where the data IS. A bloom filter records where the data ISN’T”

Unlike a traditional B-Tree index which stores a link to each row, the Search Optimization Service builds a set of Bloom Filters to track the partitions where the data isn’t. Using a patented Bloom filter solution, Snowflake automatically prunes (skips) micro partitions which means the fewer matching rows returned, the more extreme the performance gain.

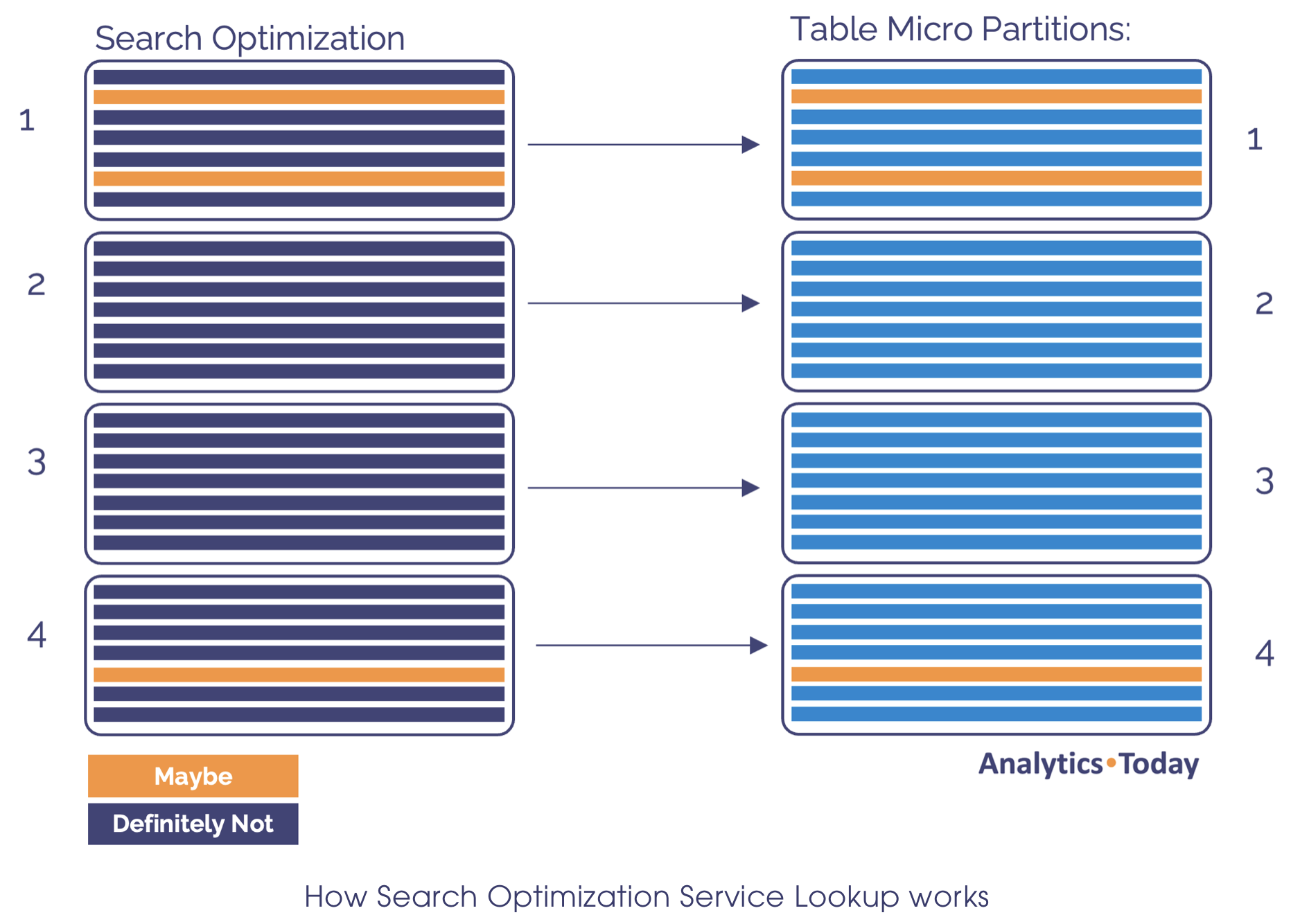

The diagram below illustrates how the Search Optimization Service works. The index structure on the left records for each entry, whether the data may be in the corresponding micro-partition or definitely not. Using this method, Snowflake can skip entire micro-partitions, but this effectively depends mainly on the number of distinct matching rows. The more matching rows, the higher the probability of fetching a micro partition.

In the above diagram, a query searching for the orange entries would only fetch micropartitions 1 and 4, potentially skipping thousands of others.

For example, suppose a STORE_SALES table has 28 billion rows, and you need to fetch data for a single customer from a transaction table with data for 65 million customers. In that case, you’d return an average of 443 sale records per customer.

Average rows per customer =

(28 billion sales / 65 million customers)

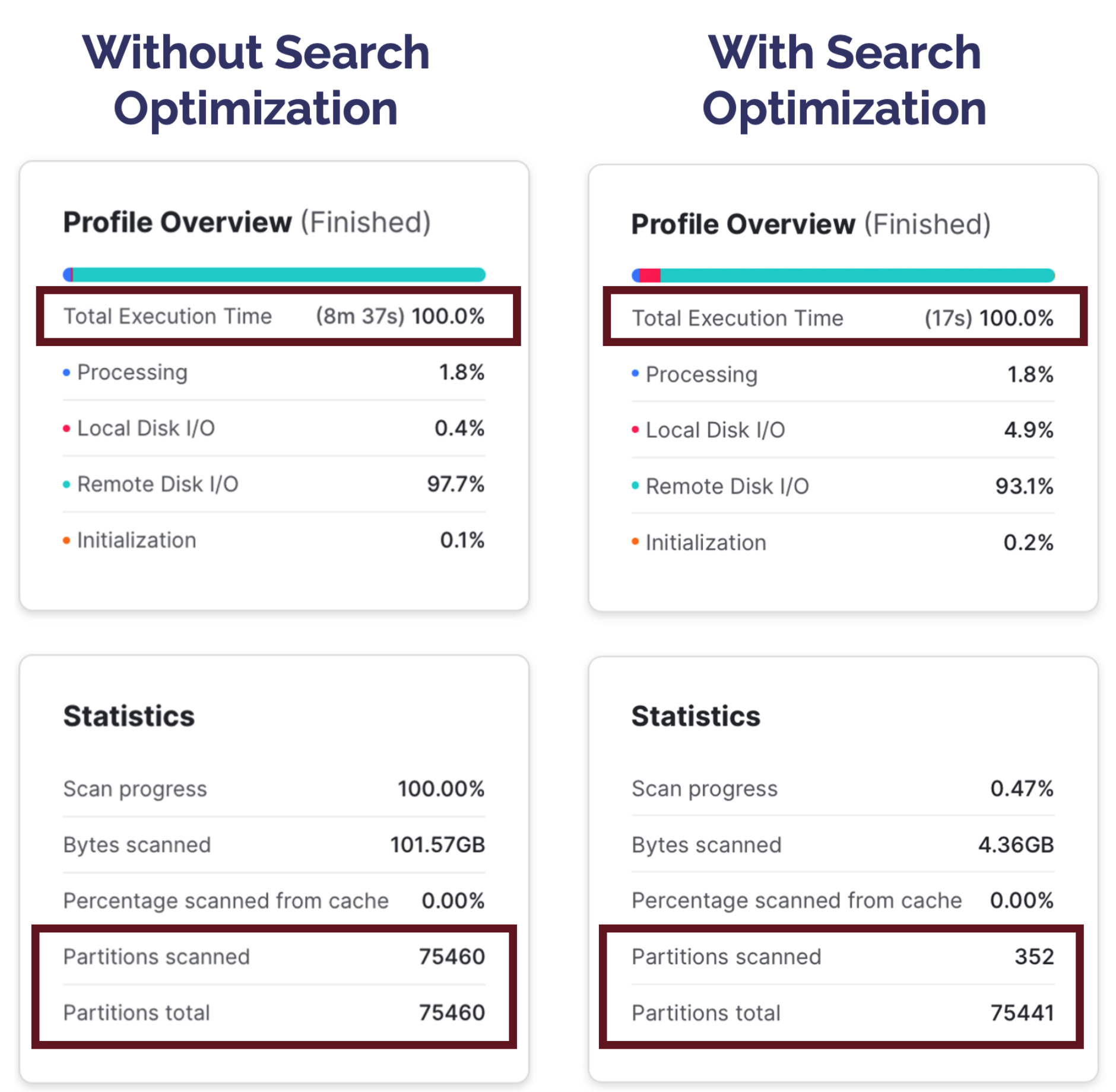

Assuming the STORE_SALES table has 75,441 micro-partitions, it’s reasonable to assume that the data must reside in at most 443 micro-partitions. Even if these rows are distributed across the entire table, with each row in a separate micro-partition, Search Optimization Service can eliminate 99.4% of the data and return results within seconds. Large tables with billions of rows can lead to astonishingly fast query performance.

The key point is performance is almost entirely based on the number of rows returned compared to the number of micro-partitions in the table.

How Fast is Search Optimization Service?

To benchmark the potential performance gains from Search Optimization Service, I created a copy of the STORE_SALES table with 28.8 billion rows and executed the following query.

select *

from store_sales

where ss_cust_sk = 41657562;

The screenshot below demonstrates the performance gain.

The screenshot above shows using Search Optimization produces a 30 times improvement in query performance from around nine minutes to under 20 seconds.

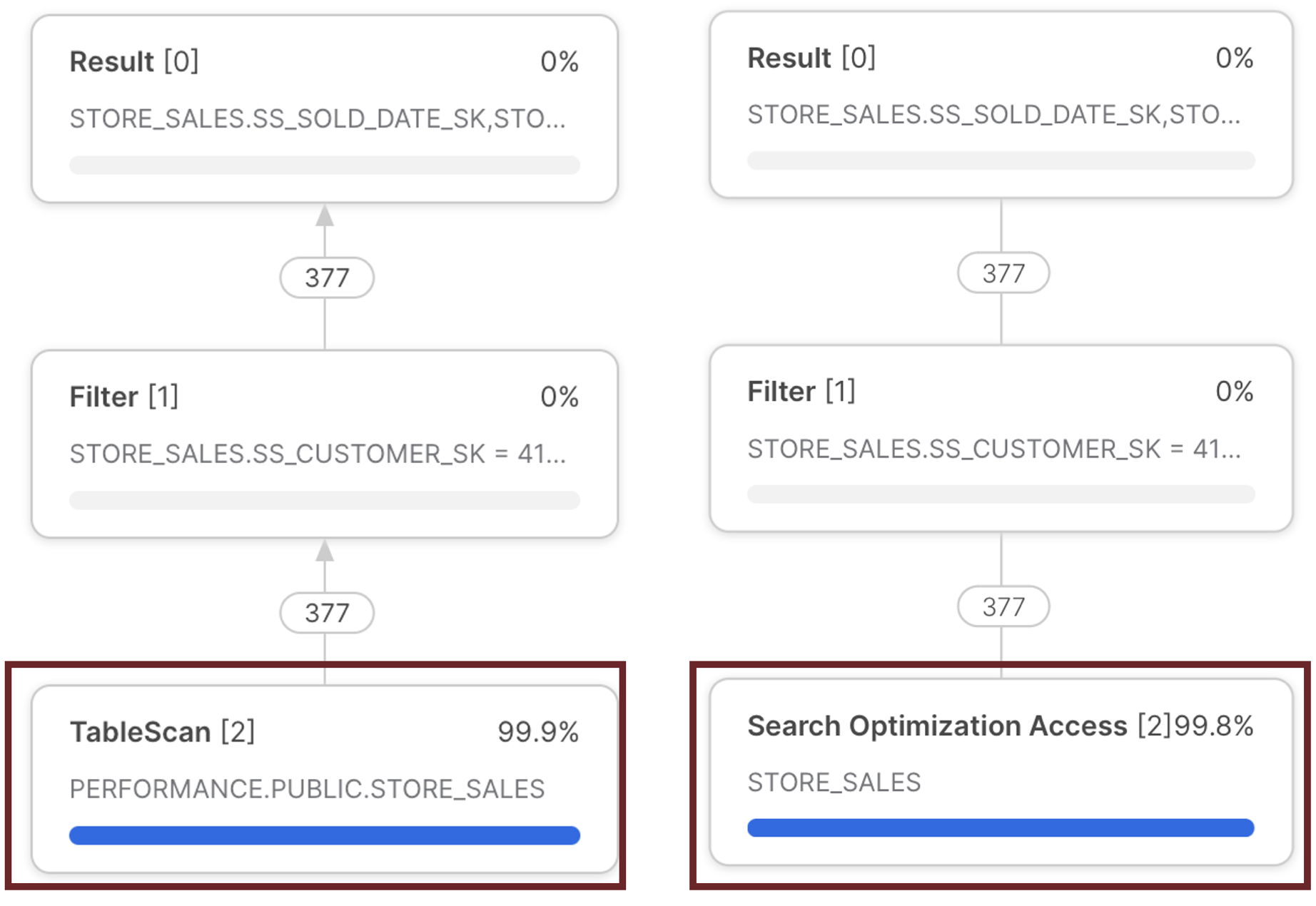

The screenshot above illustrates how to determine if the query used Search Optimization. The query plan on the left performed a full table scan, whereas on the right, the query used the Search Optimization structure to return the entries.

What Does Search Optimization Cost?

Unlike Data Clustering, which has little impact on storage cost, the index structure for SOS can be a significant size, so data storage is a factor to consider. The largest influencing factor is the number of columns indexed and the number of distinct values for each column.

However, the compute cost is likely to be the larger component, which includes:

Initial SOS Build: Which uses Snowflake background (serverless) compute resources to initially build the index structure.

Ongoing Maintenance: As entries are inserted, updated or deleted on the main table, these changes must be applied to the index structure, and this will add to the cost. For this reason, it may not be sensible to deploy Search Optimization on tables with high churn (many updates) on the indexed columns.

Be aware that, although Search Optimization Service charges for compute on a per-second basis, this is a highly sophisticated and specialized query tuning feature and the charge rate is ten times the credit rate. This can be verified in Table 5 of the Snowflake Service Consumption Table.

Performance Vs. Cost

The diagram below illustrates the challenge faced by every Snowflake Data Engineer. It highlights the trade-off between prioritizing high throughput (the ability to fetch and process billions of rows) versus the need for a very fast response time while controlling cost.

As with every performance feature, Snowflake Search Optimization Service is a trade-off of cost Vs. latency. As SOS comes with a cost, you must compare the performance benefits to the additional cost to decide whether it’s worth deploying.

Finally, search optimization works per column; the more columns are indexed, the greater the cost. Later in this article, we will present a methodology to identify the best columns to deploy, which will help reduce costs.

When should I use Search Optimization Service?

The best practices indicating when to use Search Optimization Service should by now be apparent, but to confirm, the critical decision points include:

Low latency is vital: It’s not worth solving a problem you don’t have, and search optimization should only be considered if you need very fast query performance.

Large tables, many partitions: SOS works best when applied to large tables with many micro partitions. The larger the table, the greater the potential performance benefit.

Few Rows Returned: As SOS works by elimination, the fewer rows returned as a percentage of the total micro-partitions in the table, the better. For example, a query that (on average) returns 10,000 entries in a table with 50,000 micro partitions will, on average, fetch 20% of the table and is, therefore, a poor candidate. However, a query that, on average, returns just 200 entries will return a maximum of 200 micro partitions, eliminating 99.6% of the table, an excellent candidate.

Few Updates: Similar to Data Clustering, updates applied to the base table may lead to Search Optimization Structure updates, increasing the overall cost.

Even if you have deployed Search Optimization Service on columns with equality filters, Snowflake may ignore these if it estimates the full table scan would produce results faster.

Which Queries Use Search Optimization?

When first launched, SOS only worked with queries with an equality filter in the WHERE clause. However, after a series of improvements were released, it now includes:

Queries with equality or IN filter: For example, Where ID in (1, 2)

Queries with a Regular Expression in the WHERE clause. This can include a range of predicates, including LIKE, STARTSWITH, REGEXP, or REGEXP_LINK

Queries filtering VARIANT data. This means SOS can be used against data stored in a semi-structured format.

Queries against Geospatial Data: SOS can improve query performance against geographic data queried using geospatial functions, including ST_INTERSECTS, ST_CONTAINS, and ST_COVERS.

What is an Equality Filter?

It’s vital to understand precisely when SOS will be used, or you risk spending considerable credits building and maintaining the search index structure, which queries will ignore.

One situation in which the Search Optimization Service works well is when the WHERE clause includes an equality match against one or more columns – ideally returning a few rows.

For example, the following query would be a potential SOS candidate:

select *

from store_sales

where customer_gender = 'MALE'

and credit_rating in ('A+', 'A', 'B+')

and year_of_birth = 1961;

Assuming the three columns were included in a Search Optimization Service index, all three could potentially be used to improve query performance. Consider the following query, which uses a common Kimball-based Dimensional Design method.

select *

from store_sales s

, customer_demographics cd

where s.demographics_key = cd.demographics_key

and cd.customer_gender = 'MALE'

and cd.credit_rating in ('A+', 'A', 'B+')

and cd.year_of_birth = 1961;

Although both designs achieve the same result, the second query filters columns on the lookup dimension table CUSTOMER_DEMOGRAPHICS. This means deploying Search Optimization Service on the STORE_SALES table will be ignored. You could, however, consider testing it against the dimension table, although risking a full table scan against STORE_SALES.

The only other option is denormalizing the search columns to the fact table, although this might be a prohibitively expensive design change.

How to track the cost of Search Optimization Service?

The following SQL statement can be used to track SOS costs:

select to_char(start_time,'YYYY-Mon') as month

, table_name

, sum(credits_used)

from snowflake.account_usage.search_optimization_history

where table_name = 'STORE_SALES'

group by 1,2;

In addition, you can estimate the cost of deploying search optimization using the function: system$estimate_search_optimization_costs()

Search Optimization Service: Deployment Best Practices

Having decided the SOS is a viable solution, it’s critical you follow the best practices to identify the correct columns to deploy to avoid significant costs with few benefits.

As indicated above, Search Optimization Service works best when few rows are returned as a percentage of the entire table.

As the search optimization cost increases with the number of columns indexed, you should first identify columns which appear in the WHERE clause with an equality search on long running queries against the target table.

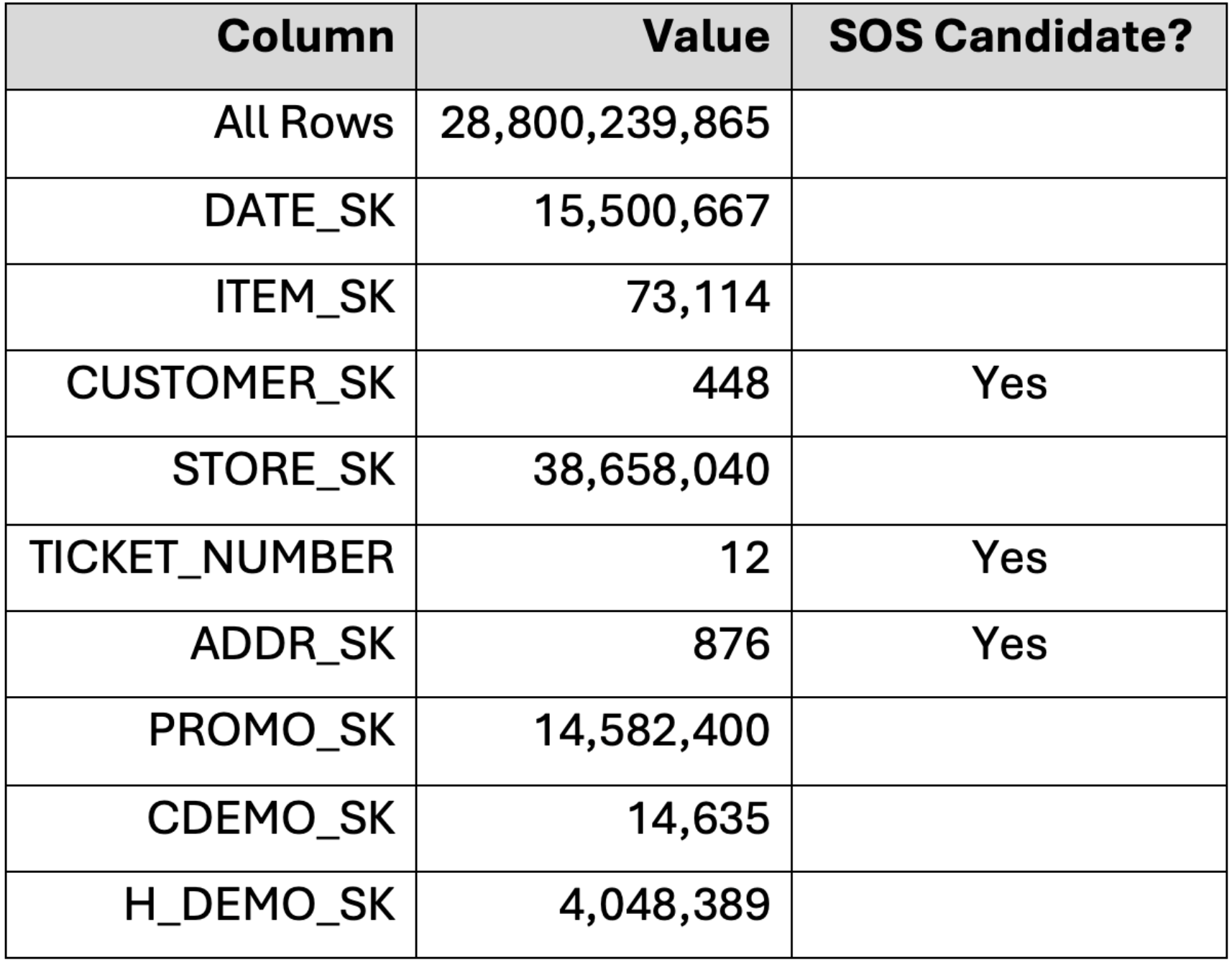

Let’s assume you’ve narrowed down the set to nine columns on the STORE_SALES table with over 28 billion rows.

Execute the following query to return the average number of distinct rows for each column.

select count(*) as all_rows

, round(all_rows/approx_count_distinct(ss_sold_date_sk)) as date_sk

, round(all_rows/approx_count_distinct(ss_item_sk)) as item_sk

, round(all_rows/approx_count_distinct(ss_customer_sk)) as customer_sk

, round(all_rows/approx_count_distinct(ss_store_sk)) as store_sk

, round(all_rows/approx_count_distinct(ss_ticket_number)) as ticket_number

, round(all_rows/approx_count_distinct(ss_addr_sk)) as addr_sk

, round(all_rows/approx_count_distinct(ss_promo_sk)) as promo_sk

, round(all_rows/approx_count_distinct(ss_cdemo_sk)) as cdemo_sk

, round(all_rows/approx_count_distinct(ss_hdemo_sk)) as h_demo_sk

from store_sales;

The table below summarizes the results of the above query:

We can identify the number of partitions in a table by executing the following SQL:

select *

from store_sales;

However, don’t wait for the query to return; simply kill the query after a few seconds and check the query profile. In this case it shows the table has 72,718 micro partitions.

This means, for example, on average, a query WHERE ITEM_SK = 1234567 would probably need to scan the entire table, whereas WHERE ADDR_SK = 1234567 would (at worst) fetch just 876 partitions, eliminating 98.8% of micro partitions.

However, be aware that if the query includes a combination of keys that include candidates like ITEM_SK, this could be considered for indexing, but only if combined with other indexed columns.

The following SQL can be used to deploy Search Optimization on the selected columns:

-- Apply Search Optimization

alter table store_sales

add SEARCH OPTIMIZATION on equality(ss_item_sk, ss_customer_sk, ss_ticket_number, ss_addr_sk);

As the SOS build is executed in the background, use the following SQL to monitor progress:

show tables like 'STORE_SALES';

select "name"

, "database_name" as database

, "schema_name" as schema

, "bytes" as table_size

, "search_optimization" as search_optimization

, "search_optimization_progress" as progress

, "search_optimization_bytes" as search_size

from table(result_scan(last_query_id()));

Finally, when the progress is 100%, start testing query performance to check the performance improvements are worth the additional cost.

Conclusion

You'll now appreciate why Snowflake Search Optimization Service is one of the most misunderstood and poorly deployed tools to maximize query performance. To summarise the best practices, only deploy Search Optimization on tables which:

Have very large numbers of micro-partitions (over 1,000 as a minimum)

Have queries with equality filters in the WHERE clause against a given table

Return a few rows in the result set relative to the number of micro-partitions in the table

Have few updates or delete operations

Need very fast query performance

So, if you have concluded that Search Optimization Service doesn’t deliver incredible query performance, perhaps it's time to reconsider.

Finally, I'd advise that if you have already deployed search optimization, you use the techniques described in this article to verify the deployment is cost-effective. Even removing a few indexed columns can lead to a significant reduction in cost.

Subscribe to my newsletter

Read articles from John Ryan directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

John Ryan

John Ryan

After 30 years of experience building multi-terabyte data warehouse systems, I spent five years at Snowflake as a Senior Solution Architect, helping customers across Europe and the Middle East deliver lightning-fast insights from their data. In 2023, he joined Altimate.AI, which uses generative artificial intelligence to provide Snowflake performance and cost optimization insights and maximize customer return on investment. Certifications include Snowflake Data Superhero, Snowflake Subject Matter Expert, SnowPro Core, and SnowPro Advanced Architect.