Building a blogging website with microservices: log aggregation and monitoring

Evaldas Visockas

Evaldas VisockasTable of contents

Hi. This is the twelfth part of the diary about developing the “Programmers’ diary” blog. The open source code of this project is on https://github.com/TheProgrammersDiary. The Eleventh part: https://medium.com/@vievaldas/programmers-diary-blog-development-adding-2b83f07acce4.

The first diary entry contains explanation on why this project was done: https://medium.com/@vievaldas/developing-a-website-with-microservices-part-1-the-idea-fe6e0a7a96b5.

Next entry:

—————

2023-10-09 to 2023-10-15:

Since the whole website building point is to learn how to construct microservices and one of the most crucial parts is logging and log aggregation let's make it. After that we will add monitoring.

Log aggregation

For log aggregation the Elastic Stack is a popular choice so we will use it.

First, let’s add elasticsearch, logstash and kibana to docker-compose.yaml:

elasticsearch:

container_name: elasticsearch

image: docker.elastic.co/elasticsearch/elasticsearch:7.1.1

environment:

- discovery.type=single-node

**- xpack.security.enabled=false**

ports:

- "9200:9200"

- "9300:9300"

logstash:

container_name: logstash

image: docker.elastic.co/logstash/logstash:7.1.1

depends_on:

- elasticsearch

ports:

- "5044:5044"

command: logstash -f /etc/logstash/conf.d/logstash.conf

volumes:

- ./logstash.conf:/etc/logstash/conf.d/logstash.conf:ro

kibana:

container_name: kibana

image: docker.elastic.co/kibana/kibana:7.1.1

depends_on:

- elasticsearch

ports:

- "5601:5601"

We are setting xpack.security.enabled to false since xpack is a paid feature (ElasticSearch and Kibana once had a free license but it was changed and now there are some paid features).

We need to configure logstash (which will extract and store logs from our microservices) in logstash.conf:

input {

tcp {

port => 5044

codec => json_lines

}

}

filter {

mutate {

add_tag => [ "processed_by_logstash" ]

}

}

output {

elasticsearch {

hosts => "elasticsearch:9200"

}

}

Logstash receives all input via port 5044, mutates it if required, and sends it to ElasticSearch.

We need to write log extraction logging in our microservices. In post and blog microservices, logs are collected similarly.

Let’s see blog modifications:

We have a logback.xml:

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<appender name="stdout" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<pattern> %d{dd-MM-yyyy HH:mm:ss.SSS} [%thread] %-5level %logger{36}.%M - %msg%n </pattern>

</encoder>

</appender>

<appender name="save-to-file" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>logs/blog.log</file>

<encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">

<Pattern> %d{dd-MM-yyyy HH:mm:ss.SSS} [%thread] %-5level %logger{36}.%M - %msg%n </Pattern>

</encoder>

<rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">

<fileNamePattern>logs/archived/blog_%d{yyyy-MM-dd-mm}.%i.log</fileNamePattern>

<maxFileSize>1MB</maxFileSize>

<maxHistory>30</maxHistory>

<totalSizeCap>30MB</totalSizeCap>

<cleanHistoryOnStart>true</cleanHistoryOnStart>

</rollingPolicy>

</appender>

<appender name="logstash" class="net.logstash.logback.appender.LogstashTcpSocketAppender">

<destination>logstash:5044</destination>

<encoder class="net.logstash.logback.encoder.LogstashEncoder" />

</appender>

<root level="INFO">

<appender-ref ref="stdout" />

<appender-ref ref="save-to-file" />

<appender-ref ref="logstash" />

</root>

</configuration>

This file is stored in resources folder. We have three appenders. One writes to console, another writes to file, the third sends logs to logstash. We are writing logstash:5044 instead of localhost since the services are inside containers and logstash is isolated from microservices. Looking at appender → encoder → pattern: 5 logging levels can be displayed: error, warning, info, debug, trace. Also, if logger’s name is very long it will be truncated.

Only the current log is displayed in the top of logs folder. Older logs get archived into folder called logs (as you can see in appender → file) and get the time assigned. Current log gets replaced when it reaches maxFileSize (1MB) limit. If there is lots of activity, there could be more than one log with the same time, so there is .{i}.log format for similar logs, the count just gets incremented as seen in appender → rolingPolicy → fileNamePattern (e.g. blog_2022-01-01_0 and blog_2022-01-01_1).

We also need a pom dependency for logstash communication:

<dependency>

<groupId>net.logstash.logback</groupId>

<artifactId>logstash-logback-encoder</artifactId>

<version>7.4</version>

</dependency>

The setup is prepared. Let’s add some logging. We will intercept HTTP calls:

package com.evalvis.blog.logging;

import com.fasterxml.jackson.databind.ObjectMapper;

import com.fasterxml.jackson.databind.node.ArrayNode;

import com.fasterxml.jackson.databind.node.ObjectNode;

import jakarta.servlet.FilterChain;

import jakarta.servlet.ServletException;

import jakarta.servlet.annotation.WebFilter;

import jakarta.servlet.http.HttpServletRequest;

import jakarta.servlet.http.HttpServletResponse;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.lang.NonNull;

import org.springframework.stereotype.Component;

import org.springframework.web.filter.OncePerRequestFilter;

import org.springframework.web.util.ContentCachingRequestWrapper;

import org.springframework.web.util.ContentCachingResponseWrapper;

import java.io.IOException;

import java.io.UnsupportedEncodingException;

import java.util.Collection;

import java.util.Enumeration;

@Component

@WebFilter(filterName = "HttpLoggingFilter")

public final class HttpLoggingFilter extends OncePerRequestFilter {

private static final Logger log = LoggerFactory.getLogger(HttpLoggingFilter.class);

@Override

protected void doFilterInternal(

@NonNull HttpServletRequest request, @NonNull HttpServletResponse response,

FilterChain filterChain

) throws ServletException, IOException {

ContentCachingRequestWrapper requestWrapper = new ContentCachingRequestWrapper(request);

ContentCachingResponseWrapper responseWrapper = new ContentCachingResponseWrapper(response);

long startTime = System.currentTimeMillis();

try {

filterChain.doFilter(requestWrapper, responseWrapper);

} catch (ServletException | IOException e) {

log.warn(

"While processing this request, exception was caused: "

+ requestLog(request, requestWrapper).toPrettyString()

);

throw e;

}

long timeTaken = System.currentTimeMillis() - startTime;

ObjectNode root = requestLog(request, requestWrapper);

String responseBody = new String(

responseWrapper.getContentAsByteArray(), response.getCharacterEncoding()

);

ObjectNode responseNode = root.withObject("/response");

responseNode

.put("status", response.getStatus())

.put("body", responseBody)

.put("time taken (ms)", timeTaken);

appendResponseHeaders(responseNode, response);

log.info(root.toPrettyString());

responseWrapper.copyBodyToResponse();

}

private ObjectNode requestLog(

HttpServletRequest request, ContentCachingRequestWrapper requestWrapper

) throws UnsupportedEncodingException {

String requestBody = new String(

requestWrapper.getContentAsByteArray(), request.getCharacterEncoding()

);

ObjectNode root = new ObjectMapper().createObjectNode().withObject("/HTTP");

ObjectNode requestNode = root.withObject("/request");

requestNode.put("URI", request.getRequestURI());

requestNode.put("method", request.getMethod());

requestNode.put("body", requestBody);

appendRequestHeaders(requestNode, request);

return root;

}

private void appendRequestHeaders(ObjectNode requestNode, HttpServletRequest request) {

ArrayNode headers = requestNode.putArray("headers");

Enumeration<String> headerNames = request.getHeaderNames();

while(headerNames.hasMoreElements()) {

String headerName = headerNames.nextElement();

ObjectNode header = new ObjectMapper()

.createObjectNode()

.put(headerName, request.getHeader(headerName));

headers.add(header);

}

}

private void appendResponseHeaders(ObjectNode responseNode, HttpServletResponse response) {

ArrayNode headers = responseNode.putArray("headers");

Collection<String> headerNames = response.getHeaderNames();

for(String headerName : headerNames) {

ObjectNode header = new ObjectMapper()

.createObjectNode()

.put(headerName, response.getHeader(headerName));

headers.add(header);

}

}

}

This class basically intercepts HTTP calls and writes request/response data in json format.

Kibana setup

Go to localhost:5601 (make sure Kibana container is built and running).

Press Management (Settings icon).

On Kibana section press Index patterns.

Write logstash* to find the logs index.

Press Next step.

Add additional filter: @timestamp.

Create index pattern.

Now you can press Discover (compass icon) and see all logs.

You can filter then by a specific syntax, however, this is out of scope (at least for now).

Some room for improvement:

Currently all logs are placed on one index. An improvement would be to place each microservice log on different index.

My tests will need some upgrades, since now an integration test tries to connect to Logstash. I could either add Logstash in tests or add config which disables logging in tests.

That’s it for logging aggregation for now. Sam Newmann in his Building Microservices book advised to create a standard for logging, so you could filter all microservices with easy following one standard. However, for now this is out of scope.

Bugfix in frontend

OK, so I added logs and fin a bug in frontend.

When I created an HTTP filter I noticed that my logs are getting spammed from FE.

The issue was, that if you have code:

useEffect(() => {

const effect = async () => {

await fetch(

"<http://localhost:8080/comments/list-comments/>" + postId,

{ method: "GET" }

).then(response => response.json())

.then(response => setComments(

response.map(

comment =>

<li key={comment.id}>

<p>{comment.author}</p>

<p>{comment.content}</p>

</li>

)));

}

effect();

});

This will get called over and over again in a matter of miliseconds. Therefore, additional parameter needs to be added at the end:

effect();

}, **[postId]**);

This ensures that fetch will be only called one time unless postId changes.

I updated all useEffect functions similarly.

OK, logging is complete. Now let’s setup monitoring.

Monitoring

We will use Prometheus and Grafana for that.

Again, let’s start from docker-compose.yaml:

prometheus:

container_name: prometheus

image: prom/prometheus:v2.35.0

restart: unless-stopped

ports:

- "9090:9090"

command:

- "--config.file=/prometheus.yaml"

volumes:

- "./prometheus.yaml:/prometheus.yaml"

grafana:

container_name: grafana

image: grafana/grafana-oss:8.5.2

restart: unless-stopped

ports:

- "3010:3000"

environment:

- GF_SECURITY_ADMIN_PASSWORD=admin

- GF_SERVER_DOMAIN=localhost

For Prometheus, we need a config file prometheus.yaml:

global:

scrape_interval: 15s

evaluation_interval: 15s

scrape_configs:

- job_name: 'blog'

scrape_interval: 5s

metrics_path: '/actuator/prometheus'

static_configs:

- targets: ['host.docker.internal:8080']

- job_name: 'post'

scrape_interval: 50s

metrics_path: '/actuator/prometheus'

static_configs:

- targets: ['host.docker.internal:8081']

- job_name: 'prometheus'

scrape_interval: 5s

static_configs:

- targets: ['prometheus:9090']

In Spring, metrics are exposed on /actuator/prometheus endpoint by default. Therefore, we tell Prometheus to scrape that endpoint every 5 seconds. To experiment with scrape interval post will get scraped every 50 seconds only.

Oh, and Prometheus also can scrape itself and seen in last job_name.

In Spring, we need to update application-docker.yaml:

management:

endpoint:

prometheus:

enabled: true

endpoints:

web:

exposure:

include: '*'

prometheus:

metrics:

export:

enabled: true

Also, pom.xml needs to be updated:

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

<version>3.0.4</version>

</dependency>

<dependency>

<groupId>io.micrometer</groupId>

<artifactId>micrometer-core</artifactId>

</dependency>

<dependency>

<groupId>io.micrometer</groupId>

<artifactId>micrometer-registry-prometheus</artifactId>

</dependency>

The monitoring is complete.

Now we can go to http://localhost:8080/actuator/prometheus and see the list of metrics (dont forget to run containers).

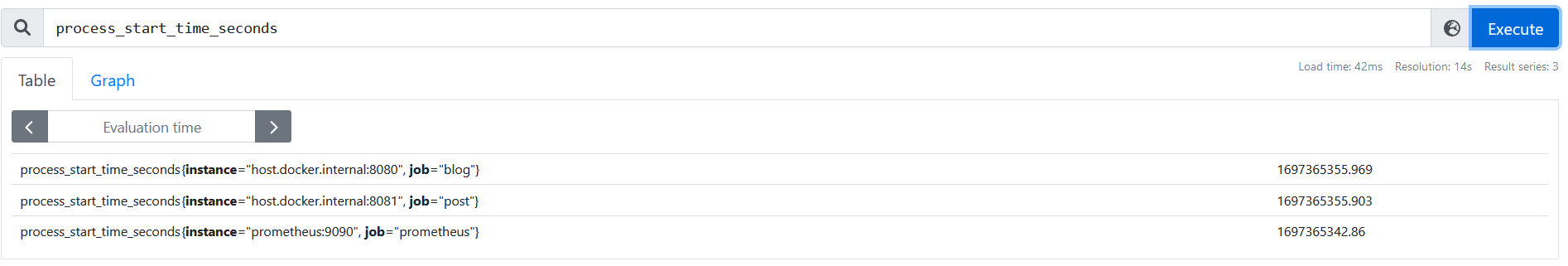

If we go to Prometheus on http://localhost:9090 we can query these metrics. E.g. let’s query process_start_time_seconds:

And we see this metric is present in all our 3 services (blog, post and prometheus itself). There are lot of possibilities to querying data on Prometheus, however, this is currently out of scope. Querying syntax can be found here: https://prometheus.io/docs/prometheus/latest/querying/basics/.

This article describes how you can access Grafana and view metrics visually: https://www.linkedin.com/pulse/monitoring-springboot-prometheus-grafana-vía-docker-few-vera-marañón/. It also has a preset dashboard!

So that was simple log aggregation setup, bugfix and simple monitoring setup. Next, we will probably take care of correlation ID which will help tracking bugs which span more than one microservice (It will allow tracing back the issue). Sam Newmann recommended correlation ID to be prepared early since it’s hard to retrofit into a bigger system yet relatively easy to apply in a small system. After correlation ID is implemented maybe tracing will come soon. For now that is all.

—————

Thanks for reading.

The project logged in this diary is open source, so if you would like to code or suggest changes, please visit https://github.com/TheProgrammersDiary.

Next part already out: https://hashnode.programmersdiary.com/building-a-blogging-website-with-microservices-sign-up.

Subscribe to my newsletter

Read articles from Evaldas Visockas directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by