Mastering Formal Grammars: An Introduction to the Chomsky Hierarchy

arman takmazyan

arman takmazyan

Previous Posts:

Creating TuffScript: Exploring Esoteric Programming Languages and Their Foundations

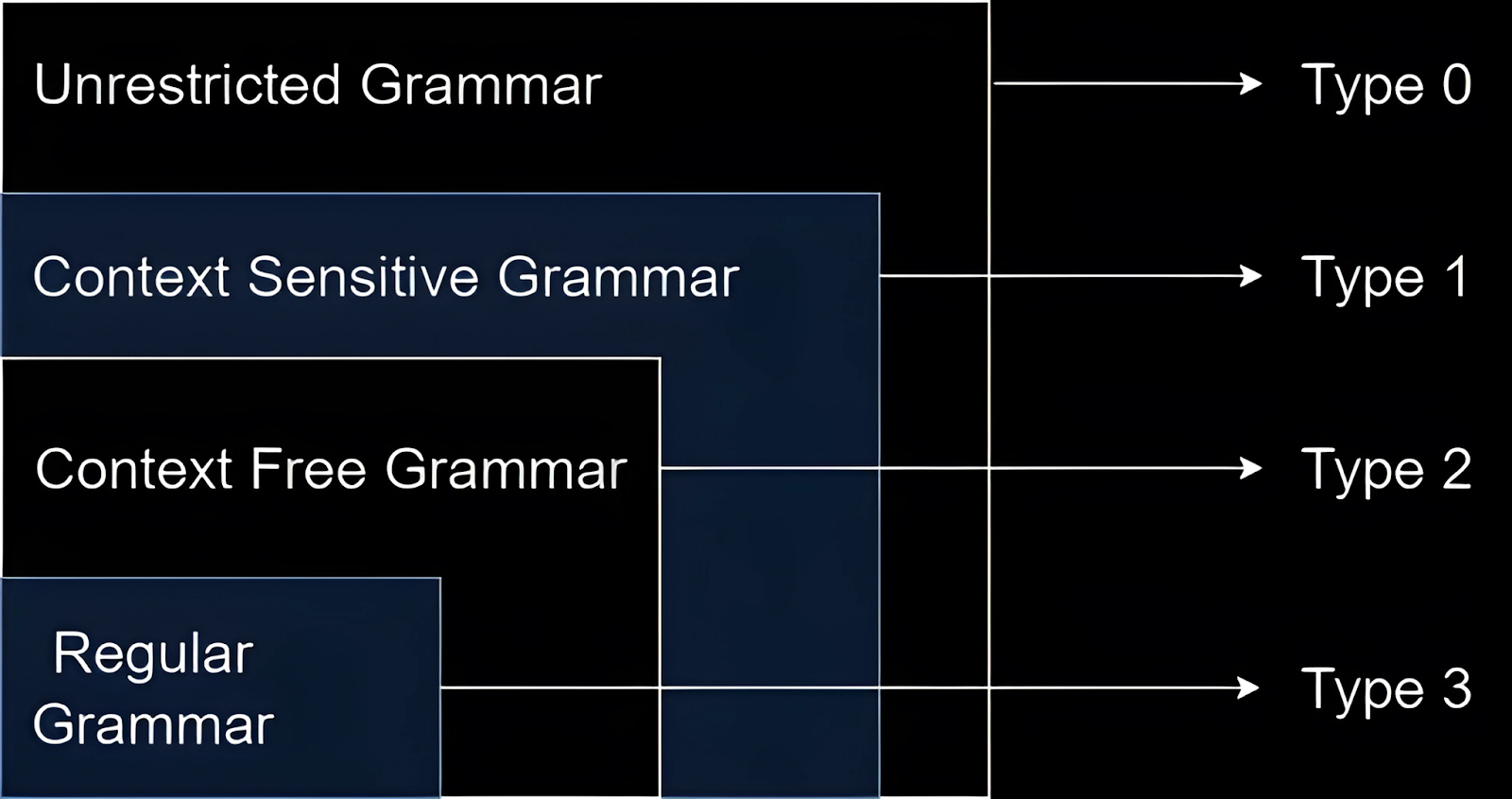

Chomsky hierarchy

When we talk about formal grammars and languages, it's important to discuss the Chomsky Hierarchy. Starting with a broad overview of the main ideas is beneficial. Before delving into the specifics of grammar, let's first consider the big picture we'll be discussing.

Noam Chomsky, the father of modern linguistics, cognitive scientist, and philosopher, developed the Chomsky hierarchy in 1956 to formally classify the complexity of formal languages and grammars. His goal was to better understand the underlying structure and computational complexity of human natural languages.

The Chomsky hierarchy classifies formal grammars into four levels, organized from the simplest to the most complex: regular, context-free, context-sensitive, and unrestricted grammars.

The Chomsky hierarchy highlights the limitations and capabilities of various computing models by distinguishing between different types of grammars and the computational power required to process them.

Regular grammars are used to describe simple constructs. They are effective for straightforward tasks like basic pattern matching, making them great tools for defining search patterns to analyze and manipulate text. For example, they can be used to find identifiers, strings, and constants. However, regular grammars have limitations when it comes to handling complex patterns, such as nested structures like balanced parentheses.

Context-Free Grammars allow for the creation and understanding of more complex patterns. This level can handle nesting, such as parentheses in math equations, enabling the description of correctly structured arithmetic expressions.

Context-Sensitive Grammars allow for the creation of even more sophisticated grammars. They enable the description of natural languages, like English or Spanish, which have complex rules influenced by the surrounding context. However, the whole class of CSGs is much bigger than natural languages.

Unrestricted grammars represent the broadest category within the Chomsky hierarchy. These grammars have no constraints on their production rules, except that each left-hand side must not be empty. This class of grammar is capable of generating any recursively enumerable language without limitation.

We will concentrate on regular and context-free grammars in order to discuss concepts that we will use for creating TuffScript.

Fundamental Definitions

Let's start with some fundamental definitions. I really like how it is done by Robert Sedgewick and Kevin Wayne, so we will not reinvent the wheel, and we will use their definitions. (Computer Science: An Interdisciplinary Approach)

A symbol is our basic building block, typically a character or a digit.

An alphabet is a finite set of symbols.

A string is a finite sequence of alphabet symbols.

A formal language is a set of strings (possibly infinite), all over the same alphabet.

Formal grammar is a set of rules used to construct all syntactically correct strings over the alphabet in a language.

Formal language is a set of all possible strings that can be constructed using its alphabet, following the rules of the language (formal grammar).

Similar to how rules in human languages dictate the structure of sentences, formal grammars provide sets of rules that define how strings in a language can be formed.

The simplest way to specify a formal language is by enumerating its strings, since languages can be large or infinite sets, we can also use informal descriptions.

| English Definition | Example in Language | Example not in Language |

| All sequences of 'a's and 'b's that end with 'a'. | 'abba', 'aa', 'bba' | 'abb', 'bab', 'bbb' |

| All sequences where the number of 'a's equals the number of 'b's. | 'abab', 'baba', 'abba' | 'aab', 'bbbaa', 'abaa' |

| All sequences of 'a's and 'b's where 'a's are not next to each other.' | 'abab', 'bab', 'baba' | 'aa', 'baa', 'aab' |

| English Definition | Example in Language | Example not in Language |

| All sequences that start with 'x' and then alternate between 'y' and 'z'. | 'xyz', 'xyzy', 'xyzyz' | 'xzz', 'yxz', 'xyzx' |

However, the English language is not well-suited for defining formal languages.

We need a way to precisely define formal languages, and this task is known as the specification problem for formal languages.

Also, we need a method to determine if a given string x is part of a language L; this is known as the recognition problem.

That's why formal grammars are usually defined in terms of the following fundamental concepts.

Terminals: Basic symbols from which strings are formed.

Non-terminals: Symbols that can be replaced with sequences of terminals and non-terminals.

Production Rules: Rules specifying how non-terminals can be replaced.

Start Symbol: The special non-terminal symbol from which string construction begins.

Terminal symbols are the actual characters that appear in the final sentence. They are the endpoints of our construction process, making up the content of the language.

Non-terminal symbols act as placeholders. They are not the final characters, but they indicate where and how further replacements should occur. We define them to structure the sentence, guiding its formation step by step.

To illustrate how it works, we will begin with the very simple grammar sets called regular grammars. They really help build a basic understanding of grammatical structures in formal language theory.

Regular grammars

A regular grammar is a type of formal grammar that is used to describe regular languages.

A regular grammar is defined as a grammar that is either left-regular or right-regular. Pay attention that in a regular grammar, at most one non-terminal appears on the right side of any production. When a non-terminal is present on the right side, it always appears either at the beginning or the end of the rule:

Left-regular grammars follow these rules:

A → a

A → Ba

A → ε

Right-regular grammars have production rules in the following forms:

A → a

A → aB

A → ε

Here, A and B are non-terminal symbols, a is a terminal symbol, and ε represents the empty string (a string with zero length).

The language described by a grammar consists of strings made up of terminal symbols and generated from the start symbol (a non-terminal symbol) using these production rules. Two grammars are considered weakly equivalent if they describe the same language.

To create a regular grammar that generates all strings consisting of **'a'**s and **'b'**s, including the empty string, we can define the grammar rules as follows:

Terminal symbols (T): {a, b}

Variables (V): {S}

Production rules (P): {S → aS, S → bS, S → ε}

Here's how it works:

Rule 1 (S → aS) allows us to add 'a' to the beginning of the string.

Rule 2 (S → bS) allows us to add 'b' to the beginning of the string.

Rule 3 (S → ε) allows us to produce an empty string.

By applying these rules, we can systematically construct strings in the following way:

Root Symbol: S

Derived String (applying "S → aS"): S ⇒ aS

Second Derived String (applying "S → bS"): aS ⇒ abS

Third Derived String (applying "S → aS" again): abS ⇒ abaS

Fourth Derived String (applying "S → ε" to terminate the string): abaS ⇒ aba

The final derived string "aba".

This example demonstrates the step-by-step process of building strings from the initial symbol S, following the specific production rules of the grammar. Each step progressively constructs the string until the rule "S → ε" is applied, indicating the completion of the string construction.

We can construct natural numbers using a regular grammar:

S → 0 | 1A | 2A | 3A | 4A | 5A | 6A | 7A | 8A | 9A

A → ɛ | 0A | 1A | 2A | 3A | 4A | 5A | 6A | 7A | 8A | 9A

I also really like an example of how we can construct floating-point numbers. The set of all strings denoting a floating-point number can be described by the following production rules (more details here).

| S → +A | A → 0A | B → 0C | C → 0C | D → +E | E → 0F | F → 0F |

| S → −A | A → 1A | B → 1C | C → 1C | D → −E | E → 1F | F → 1F |

| S → A | A → 2A | B → 2C | C → 2C | D → E | E → 2F | F → 2F |

| A → 3A | B → 3C | C → 3C | E → 3F | F → 3F | ||

| A → 4A | B → 4C | C → 4C | E → 4F | F → 4F | ||

| A → 5A | B → 5C | C → 5C | E → 5F | F → 5F | ||

| A → 6A | B → 6C | C → 6C | E → 6F | F → 6F | ||

| A → 7A | B → 7C | C → 7C | E → 7F | F → 7F | ||

| A → 8A | B → 8C | C → 8C | E → 8F | F → 8F | ||

| A → 9A | B → 9C | C → 9C | E → 9F | F → 9F | ||

| A → .B | C → eD | F → ε | ||||

| A → B | C → ε |

One more interesting thing to note is that regular languages can be recursive. However, when you combine**left regular grammars with right regular grammars, as would be necessary, for example, to match balanced parentheses, the resulting grammar is no longer regular by definition.** For example:

S → Sb (Left-regular production)

S → bS (Right-regular production)

S → ε (Termination)

Context-free grammars

Building upon the concept of regular grammars, context-free grammars are more powerful and expressive. They are used to generate context-free languages.

In CFGs, the production rules are more flexible compared to regular grammars. The production rules can be applied to a non-terminal symbol regardless of its context. In particular, in a context-free grammar, each production rule is of the form:

A → α

A is a non-terminal symbol representing syntax categories and can expand into other symbols. The symbol → denotes "produces" or "can be replaced by." α is a string of both terminal (actual language symbols) and non-terminal (pattern placeholders) symbols that replace A.

An example of a simple context-free grammar rule could be:

S → aSb ∣ ε

In this rule, S can either be replaced by 'a' followed by S and then 'b', or by ε (the empty string), which provides a termination condition for the recursive expansion. This ensures the grammar can generate strings of balanced **'a'**s and **'b'**s and terminate properly.

| Grammar Rule | Example in Language | Example not in Language |

| S → aSb, S → ε | 'ab', 'aabb', 'aaabbb' | 'aab', 'bba', 'abab' |

CFGs can describe languages that regular grammars cannot. For example, they can define languages with nested structures, which are common in programming (like nested parentheses).

In summary, while regular grammars are suitable for simpler, linear languages, context-free grammars are capable of describing more complex language structures, including nested and hierarchical patterns, making them ideal for the syntax of programming languages.

Every regular grammar is context-free, but not all context-free grammars are regular. A context-free grammar can describe all regular languages and more, but they cannot describe all possible languages.

Understanding context-free grammars is crucial for building programming languages, as they provide a structured method for defining the syntax of a language.

Leftmost and Rightmost Derivations

Since we have learned about context-free grammars and can now create more complex grammatical structures, it's important to discuss the different methods of deriving the input string by replacing non-terminal symbols.

Leftmost Derivation is the process of deriving the input string by expanding the leftmost non-terminal at each step.

Rightmost Derivation is the process of deriving the input string by expanding the rightmost non-terminal at each step.

Let's see how we can generate the string "aacbb" by sequentially applying the following production rules:

Terminal symbols: {a, b}

Variables: {S, A, B}

Production rules:

S → ASB

A → a

B → b

S → c

Using the leftmost derivation, the process will look like this:

Start with S.

Apply S→ASB to introduce both A and B with another S in the middle.

- Derivation: S⇒ASB

Apply A→a to replace the leftmost non-terminal A.

- Derivation: ASB⇒aSB

Apply S→ASB again to expand the leftmost S, aiming for more As and Bs.

- Derivation: aSB⇒aASBB

Apply A→a to replace the next leftmost non-terminal A.

- Derivation: aASBB⇒aaSBB

Apply S→c to replace the leftmost non-terminal S.

- Derivation: aaSBB⇒aacBB

Apply B→b twice to replace both Bs with bs.

- Derivation: aacBB⇒aacbB⇒aacbb

Using rightmost derivation, the process will look like this:

Start with S.

Apply S→ASB to introduce both A and B with another S in the middle.

- Derivation: S⇒ASB

Apply B→b to the rightmost B, focusing on generating the last 'b' of 'aabb'.

- Derivation: ASB⇒ASb

Apply S→ASB to the rightmost S, preparing to generate the second 'a' and 'b'.

- Derivation: ASb⇒AASBb

Apply B→b to the rightmost B, creating the second 'b' of 'aabb'.

- Derivation: AASBb⇒AASbb

Apply S→c to the rightmost S, as we now have the correct number of **'a'**s and **'b'**s.

- Derivation: AASbb⇒AAcbb

Apply A→a to the rightmost A, converting it into the first 'a'.

- Derivation: AAcbb⇒Aacbb

Apply A→a again to the next rightmost A, converting it into the second 'a'.

- Derivation: Aacbb⇒aacbb

Both leftmost and rightmost derivations are top-down strategies. This is because we start from the initial symbol, which represents the root of the parse tree. We then apply production rules to derive the string, moving down towards the leaves, which are the terminals. This method follows a hierarchical process, beginning with non-terminals and systematically applying rules to get the final result. So, we simply choose which rule to use.

Backus-Naur Form

Context-free grammar (CFG) is a concept or a framework that describes the syntax of a language in a theoretical sense. It defines how a language can be structured using rules, but it doesn't specify a particular way to write these rules. This means that the syntax for expressing context-free grammars can vary and can be implemented in different ways.

Backus-Naur Form (BNF) is one specific syntax for expressing context-free grammars. It's a notation that provides a clear, standardized way to write the production rules of a CFG.

While Context-Free Grammar (CFG) is the underlying theory, BNF (Backus-Naur Form) and other similar notations serve as practical tools for expressing that theory in a consistent and standardized way. Other notations similar to BNF, like Extended Backus-Naur Form (EBNF) or Augmented Backus-Naur Form (ABNF), also exist, each with their own slight variations in syntax and features, providing different ways to implement and express the principles of context-free grammar.

In the case of the arithmetic expressions grammar, the production rules define how to construct valid arithmetic expressions using operators, numbers, and parentheses. These rules determine the syntax of the language, ensuring that only correctly structured expressions are formed.

To write a BNF grammar for arithmetic expressions, we will focus on the basic arithmetic operations: addition, subtraction, multiplication, and division. Additionally, we'll include parentheses for controlling the order of operations.

Here's an example of such a grammar:

<expression> ::= <term> | <expression> + <term> | <expression> - <term>

<term> ::= <factor> | <term> * <factor> | <term> / <factor>

<factor> ::= <number> | ( <expression> )

<number> ::= <digit> | <number> <digit>

<digit> ::= 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9

Explanation:

<expression> ::= <term> | <expression> + <term> | <expression> - <term>:

<expression> can be defined as**:**

A <term> (representing a single term) OR

<expression> followed by "+" and another <term> (representing addition) OR

<expression> followed by "-" and another <term> (representing subtraction).

<term> ::= <factor> | <term> * <factor> | <term> / <factor>:

<term> can be defined as:

A <factor> (representing a single factor) OR

<term> followed by "*" and another <factor> (representing multiplication) OR

<term> followed by "/" and another <factor> (representing division).

<factor> ::= <number> | ( <expression> ):

<factor> can be defined as:

A <number> (representing a single number) OR

An opening parenthesis "(", followed by an <expression> (representing an expression enclosed in parentheses), and then a closing parenthesis ")".

<number> ::= <digit> | <number> <digit>:

<number> can be defined as:

A single <digit> (representing a single digit number) OR

<number> followed by another <digit> (allowing for multi-digit numbers).

<digit> ::= 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9:

- <digit> is defined as any one of the digits from 0 to 9.

This BNF grammar defines how arithmetic expressions are structured, starting from the smallest building blocks (<digit>) and gradually combining them into more complex expressions. It allows for the representation of addition, subtraction, multiplication, division, and the use of parentheses to control the order of operations in arithmetic expressions.

For example, the expression (3 + 4) * 5 is valid according to this grammar.

Conclusion

Understanding formal grammars and the Chomsky hierarchy is important for anyone studying computational linguistics, programming languages, and computer science in general. The Chomsky hierarchy helps classify languages based on their complexity and the computational power needed to process them. By learning about regular and context-free grammars, we gain essential knowledge for building our own programming languages. We will discuss more topics in the future, so stay tuned.

Subscribe to my newsletter

Read articles from arman takmazyan directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

arman takmazyan

arman takmazyan

Passionate about building high-performance web applications with React. In my free time, I love diving into the fascinating world of programming language principles and uncovering their intricacies.