Inbound Interface

Gautam Jain

Gautam Jain

Oracle provides flexible tools in the form of Interface programs to import the master and transactional data like Customers, Invoices, and Sales Orders etc. from external systems into Oracle Applications or to transfer the data from one module to another module.

There are 2 types of interfaces:

Inbound Interface: will be used to upload the data from legacy system (Flat files) into Oracle Applications base tables.

Outbound Interface: will be used to extract the data from oracle Database tables into the flat files.

Conversion/Interface Strategy:

Data Mapping

During the data mapping process, list of all the data sets and data elements that will need to be moved into the Oracle tables as part of conversion are identified. Data mapping tables are prepared as part of this activity that show what are the data elements that are needed by the target system to meet the business requirements and from where they will be extracted in the old system.

Download Programs

After the conversion data mapping is complete, download programs are developed that are used to extract the identified conversion data elements from the current systems in the form of an ASCII flat file. The structure of the flat file must match the structure of the Oracle standard interface tables. These flat files generated may be in text form or a comma or space delimited, variable or fixed format data file.

Upload Program

Once the data has been extracted to a flat file, it is then moved to the target file system and the data from the file is loaded into user defined staging tables in the target database using SQL Loader or UTL_FILE utilities. Then programs are written and run which validate the data in the staging tables and insert the same into the Oracle provided standard Interface tables.

Interface Program

Once the interface tables are populated, the respective interface program (each data element interface has a specific interface program to run) is submitted. The interface programs validate the data, derive and assign the default values and ultimately populate the production base tables.

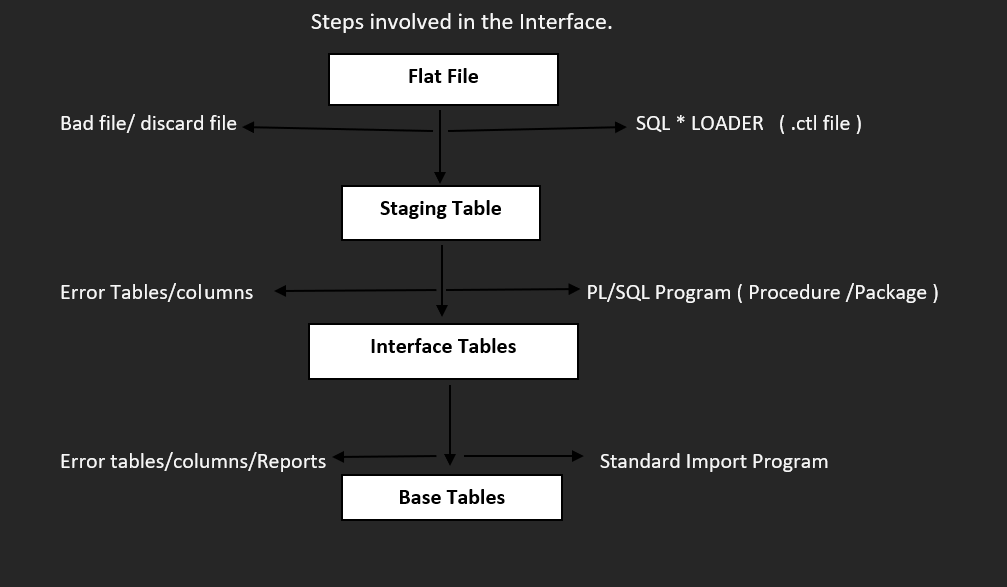

A typical path to transfer the data from Legacy System to Oracle Apps:

Inbound Interface

Inbound Interface will be used to upload the data from legacy system (Flat files) into Oracle Applications base tables.

While Developing the Inbound interface we will use SQL_Loader to import the data into base tables.

Process

Inbound Interface will be used to upload the data from legacy system into oracle application base tables with multiple times.

We will receive the flat file from the client then we will create staging table and stage table column should be mapped with flat file.

Create a .ctl (control file) for upload the data from flat file to stage table.

Develop the package to validate the stag table data weather it is valid or not, If it’s valid then we will insert data into interface table, if it is not valid, we will update the status flag as ’E’ and error messages in stag table.

Validated stag table data will be inserted into the interface tables.

Once the stag table validated data inserted into the interface table submit the standard program from SRS window then the standard program will transfer the data from interface table to base tables.

Execution

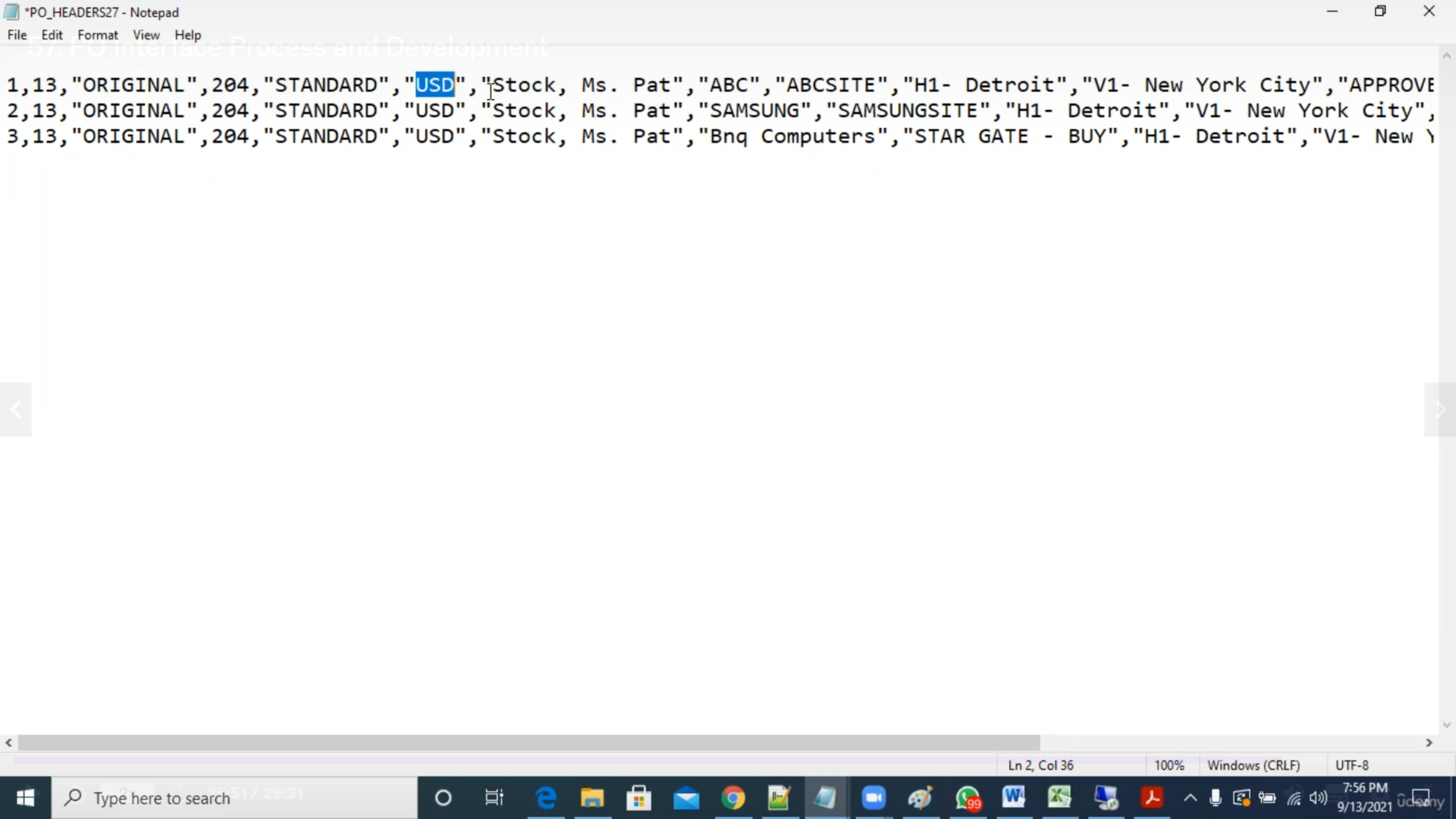

- Flat File Creation

- Move Flat File (txt file) into Server through WinSCP

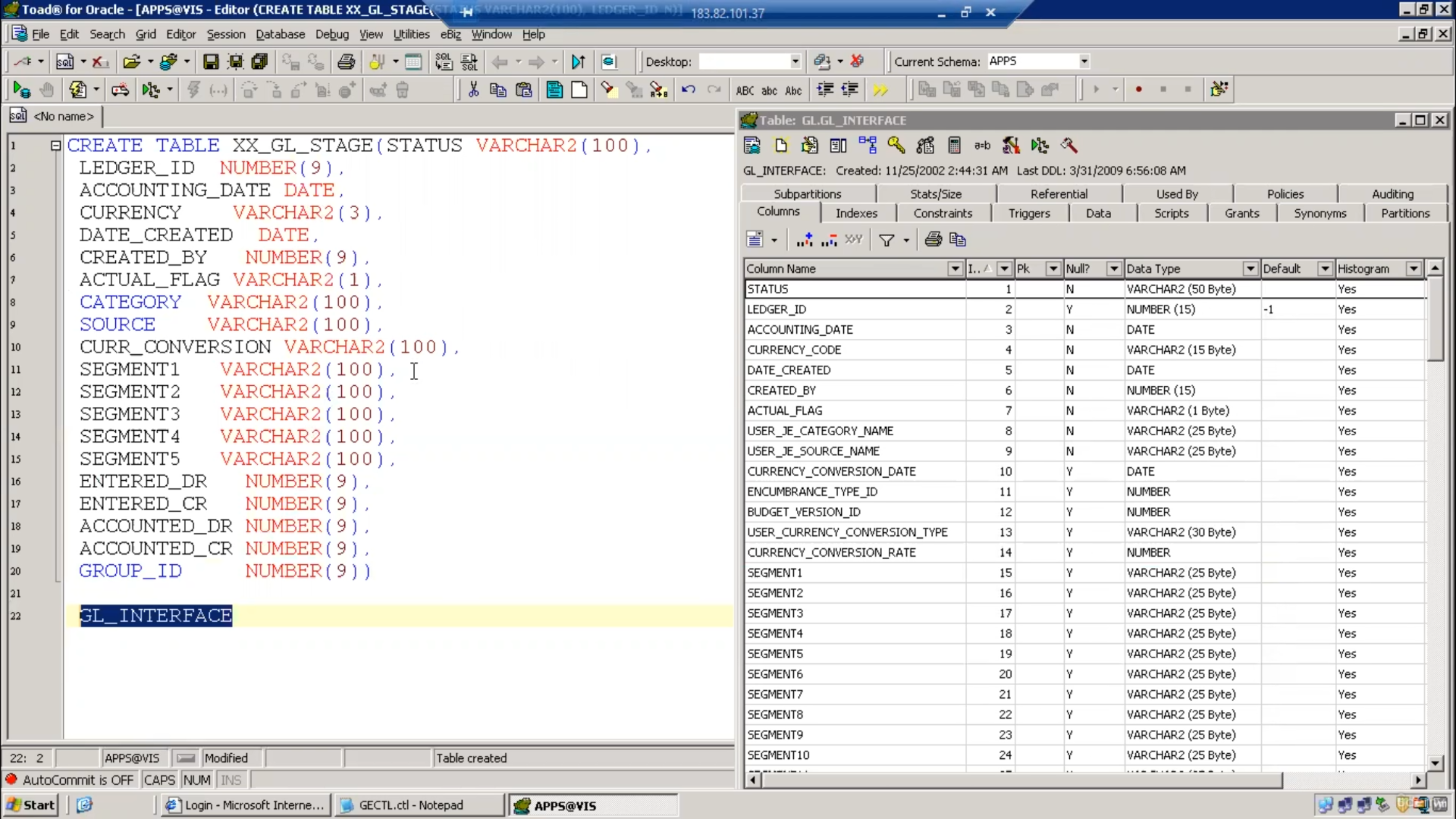

Create Table Structure (check table attribute columns as per associated interface table)

Sample Control File:

LOAD DATA

INFILE *

TRUNCATE INTO TABLE XX_GL_TEMP

FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '"'

TRAILING NULLCOLS

(STATUS,

SET_OF_BOOKS_ID,

ACCOUNTING_DATE,

CURRENCY,

DATE_CREATED,

CREATED_BY,

ACTUAL_FLAG,

CATEGORY,

SOURCE,

CURR_CONVERSION,

SEGMENT1,

SEGMENT2,

SEGMENT3,

SEGMENT4,

SEGMENT5,

ENTERED_DR,

ENTERED_CR,

ACCOUNTED_DR,

ACCOUNTED_CR,

GROUP_ID)

BEGINDATA

"NEW",1,"11-AUG-2002","USD","11-AUG-2002",1318,"A","Inventory","JETFORMS","Corporate","01","110","7730","0000","000",555,555,555,555,66

"NEW",1,"11-AUG-2002","USD","11-AUG-2002",1318,"A","Inventory","JETFORMS","Corporate","01","110","7730","0000","000",554,554,554,554,66

"NEW",99,"11-AUG-2002","USD","11-AUG-2002",1318,"A","Inventory","JETFORMS","Corporate","01","110","7730","0000","000",321,321,321,321,66

"NEW",1,"11-AUG-2002","USD","11-AUG-2002",1318,"A","Inventory","JETFORMS","Corporate","01","110","7730","0000","000",431,431,431,431,66

"NEW",1,"11-AUG-2002","SGD","11-AUG-2002",1318,"K","Inventory","JETFORMS","Corporate","01","110","7730","0000","000",1500,1500,1500,1500,66

- Move CTL To Server (WinSCP):

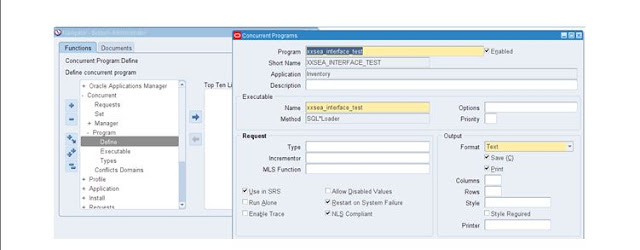

- Register SQL * Loader Concurrent Program:

- Create and run Interface Program

CREATE OR REPLACE PROCEDURE APPS.GL_RTL_LOAD(

Errbuf OUT VARCHAR2,

Retcode OUT VARCHAR2) IS

-- cursor declaration

CURSOR gl_cur IS

SELECT

status,

LEDGER_id,

accounting_date,

currency,

date_created,

created_by,

actual_flag,

category,

source,

curr_conversion,

segment1,

segment2,

segment3,

segment4,

segment5,

entered_dr,

entered_cr,

accounted_dr,

accounted_cr,

group_id

FROM XX_GL_RTL;

l_currencycode VARCHAR2(25);

l_set_of_books_id NUMBER(3);

l_flag VARCHAR2(2);

l_error_msg VARCHAR2(100);

l_err_flag VARCHAR2(10);

l_category VARCHAR2(100);

l_USERID NUMBER(10);

l_count NUMBER(9) default 0;

L_SOURCE varchar2(100);

BEGIN

FOR rec_cur IN gl_cur LOOP

l_count:=l_count+1;

l_flag :='A';

--Category Column Validation

BEGIN

SELECT USER_JE_CATEGORY_NAME

INTO l_CATEGORY

FROM GL_JE_CATEGORIES

WHERE USER_JE_CATEGORY_NAME = REC_CUR.Category;

EXCEPTION

WHEN OTHERS THEN

l_flag:='E';

l_error_msg:='Category does not exist ';

Fnd_File.put_line (Fnd_File.LOG,'Inserting data into the Interface TABLE'||'-'||l_count||' '||l_error_msg);

END;

--End Category Column Validation

--User ID column validation

BEGIN

SELECT USER_ID

INTO L_USERID

FROM FND_USER

WHERE USER_ID = REC_CUR.created_by;

EXCEPTION

WHEN OTHERS THEN

l_flag:='E';

l_error_msg:='User ID does not exist ';

Fnd_File.put_line (Fnd_File.LOG,'Inserting data into the Interface TABLE'||'-'||l_count||' '||l_error_msg);

END;

--End of Created_by OR UserID column Validation

--Set of books Validation

BEGIN

SELECT LEDGER_id

INTO l_set_of_books_id

FROM GL_LEDGERS

WHERE LEDGER_id=rec_cur.LEDGER_id;

EXCEPTION

WHEN OTHERS THEN

l_flag:='E';

l_error_msg:='Ledger ID does not exist ';

Fnd_File.put_line (Fnd_File.LOG,'Inserting data into the Interface TABLE'||'-'||l_count||' '||l_error_msg);

END;

--Set of books Validation

--Cuurency Code Validation

BEGIN

SELECT currency_code

INTO l_currencycode

FROM fnd_currencies

WHERE currency_code=rec_cur.currency

AND currency_code='USD';

EXCEPTION

WHEN OTHERS THEN

l_flag:='E';

l_error_msg:='currency code does not exists';

Fnd_File.put_line (Fnd_File.LOG,'Inserting data into the Interface TABLE'||'-'||l_count||' '||l_error_msg);

END;

--Cuurency Code Validation

--Source Validation

BEGIN

SELECT USER_JE_SOURCE_NAME

INTO L_SOURCE

FROM GL_JE_SOURCES

WHERE USER_JE_SOURCE_NAME=REC_CUR.SOURCE;

exception

WHEN OTHERS THEN

l_flag:='E';

l_error_msg:='SOURCE does not exist ';

Fnd_File.put_line (Fnd_File.LOG,'Inserting data into the Interface TABLE'||'-'||l_count||' '||l_error_msg);

END;

--Source Validation

IF l_flag!='E' THEN

Fnd_File.put_line (Fnd_File.LOG,'Inserting data into the Interface TABLE');

INSERT INTO gl_interface(status,

ledger_id,

accounting_date,

currency_code,

date_created,

created_by,

actual_flag,

user_je_category_name,

user_je_source_name,

user_currency_conversion_type,

segment1,

segment2,

segment3,

segment4,

segment5,

entered_dr,

entered_cr,

accounted_dr,

accounted_cr,

group_id)

VALUES

(rec_cur.status,

rec_cur.ledger_id,

rec_cur.accounting_date ,

rec_cur.currency,

rec_cur.date_created,

rec_cur.created_by ,

rec_cur.actual_flag,

rec_cur.category,

rec_cur.source,

rec_cur.curr_conversion,

rec_cur.segment1,

rec_cur.segment2,

rec_cur.segment3,

rec_cur.segment4,

rec_cur.segment5,

rec_cur.entered_dr,

rec_cur.entered_cr,

rec_cur.accounted_dr,

rec_cur.accounted_cr,

rec_cur.group_id);

END IF;

l_flag:=NULL;

l_error_msg:=NULL;

END LOOP;

END;

Run Standard Oracle import program

Once the data inserted into the associated interface table

Run the oracle standard program

Check the associated base tables for the successful transfer of data.

Some Important Terms

SQL Loader

SQL loader is one of the tools will be used to upload the data from the flat files into oracle stag tables.

Control file

Control file is nothing but SQL loader program we will develop the program then will execute the program while developing we will specify the datafile path, table name and column mapping when we execute data will be transferred from flat file to stag table during the data transfer following files will be generated.

OPTIONS (SKIP=1) (the first row in the data file(.csv) is skipped without loading)

LOAD DATA

INFILE '/mfgomdvl/temp/suppdatafile.csv' (specify the data file path and name)

TRUNCATE (type of loading(INSERT,APPEND,REPLACE,TRUNCATE))

INTO TABLE tst_supp_data_stg (the table to be loaded into)

FIELDS TERMINATED BY "," (specify the delimiter if variable format datafile)

TRAILING NULLCOLS (columns that are not present in the record treated as null)

(

VENDOR_NAME,

SEGMENT1,

TERM_ID,

STATUS_FLAG,

ERROR_MESSAGE

)

Insert: used only when the table is empty

Append: used when we want to add records to an already existing records in table

Replace: All records will be deleted, and new records will be added to table

Truncate: Similar to the replace

Log File

It contains the complete log information no of records rejected, upload we can see that information in the log file.

Bad File

Bad file contains the records which are rejected by the sql loader due to format problems or data type mismatch or any data errors.

Discard file

Discard file contains the records which are rejected by the control file if the record not satisfying the condition which we have specified in the control file.

Subscribe to my newsletter

Read articles from Gautam Jain directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by