Moving Forward: Beyond Gendered AI

Nityaa Kalra


In this final article, we explore recent advancements in AI voice assistants that transcend the traditional male/female dichotomy. This evolution aims to provide more inclusive and diverse options, but it raises important questions. Is simply adding more voices enough? Some recent attempts at gender-neutral voices have not been as successful as hoped, and understanding why they fell short is crucial to making meaningful progress. This article sheds some light on that question. After all, the key to finding effective solutions lies in fully understanding the problem.

To begin, let's examine how we, as humans, perceive and interact with these digital voices.

Anthropomorphization and User Perception

Have you ever noticed how we tend to give human traits to our gadgets? This tendency, known as anthropomorphization, is something most of us do without thinking. The Computers as Social Actors (CASA) paradigm, developed by Clifford Nass and colleagues, explains this behaviour: people mindlessly apply the same social rules and expectations to computers that they apply to other people [1]. We often assign a gender to our technology based on subtle cues like voice tone or communication style, even when the technology isn't explicitly gendered. Because this inclination is shaped by societal norms, it makes eliminating gender bias in AI voice assistants incredibly challenging.

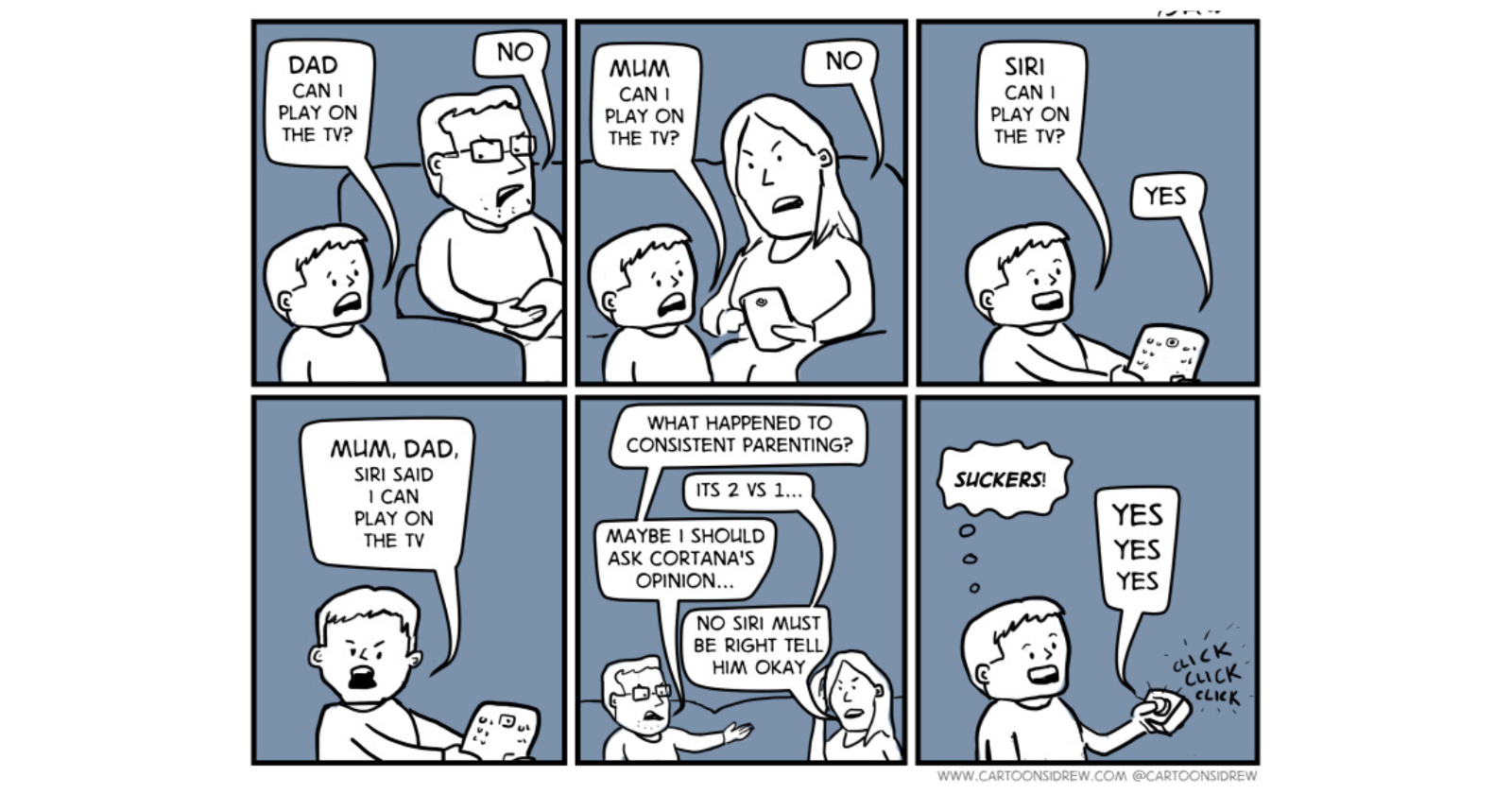

Let's say you start talking to your voice assistant as if it were a new 'female' assistant in your office. You might instinctively refer to it as 'she/her', inadvertently reinforcing stereotypes typically associated with women. This humanising of AI can play a significant role in dehumanising women, a dynamic that Erscoi, Kleinherenbrink, and Guest call Pygmalion displacement [2]. The theory posits that when we routinely associate female voices with subordinate roles such as assistants, we risk reinforcing the stereotype that the ideal voice of authority is one devoid of feminine qualities. Understanding this dynamic is crucial if we want to create AI systems that are truly inclusive and unbiased.

The Roadblocks to a Truly Gender-Neutral Voice Assistant

Recent years have witnessed a concerted effort to dismantle gender bias in these voice assistants. For instance, Apple’s Siri underwent changes to offer multiple voice options, and Google adopted a gender-neutral name to symbolise its inclusivity.

However, despite these advancements, significant challenges remain. When Google introduced its Assistant in 2016, for example, its speech system had been trained primarily on female voice data [3]. Because it performed well only with female voices, Google dropped the idea of launching the Assistant with both a male and a female voice from the outset.

Research suggests that users tend to trust male and female AI voices equally [7]. If a voice does not need a particular gender to earn trust, gender neutrality might be a viable path to reducing bias. But is it really that simple? The rise and fall of 'Q', a gender-neutral voice intended for voice assistants [4], serves as a cautionary tale. Despite its ambitious goal of inclusivity, 'Q' struggled to resonate with users. This stark reality underscores the complexity of achieving true gender equality in AI and suggests that while gender neutrality is a step forward, it may not be sufficient on its own.

Moving Towards Inclusive Design

Drawing inspiration from 'Fair Diffusion' [5], a method that lets users instruct text-to-image models to produce fairer outputs (for example, a more balanced gender distribution in generated images), we can envision similar strategies for voice design. Imagine a system where users can personalise their AI assistant's voice by adjusting pitch, accent, and communication style, creating a truly customised and inclusive experience. This approach holds promise for enhancing user engagement, but it also presents potential pitfalls.

For instance, an emphasis on extensive customisation might lead users to spend excessive time tweaking their assistant's voice, overshadowing its core functionality. Customisation might also simply reproduce existing stereotypes: users might gravitate towards the same 'high-pitched', 'soft-spoken', and 'calming' voices, giving in to these biases rather than challenging them.

In her paper [6], S. J. Sutton suggests engaging with gendering elements beyond voice alone. She advocates moving towards 'gender ambiguity' rather than 'gender neutrality' as a more nuanced framework for designing gender in AI.

Another potential solution is to expand the training data to include not only more female voices but also voices from other marginalised communities. This should go beyond vocal textures and tones to cover different accents and patterns of articulation. But let's be clear: more data alone isn't a magic solution. While essential, it's just one piece of the puzzle (I won't bore you with the reasons here). Overcoming the deep-rooted biases in AI requires a more comprehensive approach, such as ensuring that marginalised groups are represented in the development of these technologies.

As we move forward, the critical question remains: are we ready to confront the power structures that perpetuate bias in AI design? The answer will shape not only the future of human-AI interaction but also the values underlying these technologies. By proactively promoting inclusivity, we can ensure that AI assistants become a catalyst for positive social change rather than a reflection of past inequalities :)

References

[1] C. Nass and Y. Moon, “Machines and mindlessness: Social responses to computers,” J. Soc. Issues, vol. 56, no. 1, pp. 81–103, 2000.

[2] L. Erscoi, A. V. Kleinherenbrink, and O. Guest, “Pygmalion displacement: When humanising AI dehumanises women,” SocArXiv, 2023.

[3] E. Fisher, “Gender bias in AI: Why voice assistants are female,” Adapt. [Online]. Available: https://www.adaptworldwide.com/insights/2021/gender-bias-in-ai-why-voice-assistants-are-female.

[4] “Meet Q: The First Genderless Voice - FULL SPEECH,” [Online video], 2019.

[5] F. Friedrich et al., “Fair diffusion: Instructing text-to-image generation models on fairness,” arXiv preprint, 2023.

[6] S. J. Sutton, “Gender ambiguous, not genderless: Designing gender in voice user interfaces (VUIs) with sensitivity,” in Proceedings of the 2nd Conference on Conversational User Interfaces, 2020.

[7] “Female by default? Exploring the effect of voice assistant gender and pitch on trait and trust attribution.”


Written by

Nityaa Kalra


I'm on a journey to become a data scientist with a focus on Natural Language Processing, Machine Learning, and Explainable AI. I'm constantly learning and an advocate for transparent and responsible AI solutions.