Container Networking Explained (Part II)

Ranjan Ojha

Getting Up to Speed

Last time, we got as far as running a single container inside our host machine. We now continue from there and add another container to the system. If you have reset your system in the meantime, the commands below will get you back to that point.

fedora@localhost:~$ sudo ip netns add container-1

fedora@localhost:~$ sudo ip netns exec container-1 ./echo-server

time=2024-07-24T16:11:17.190+02:00 level=INFO msg="web server is running" listenAddr=:8000

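The echo server stays in the foreground, so the remaining commands are run from a second terminal. The container-1 namespace already exists at this point, so it is not created again.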

fedora@localhost:~$ sudo ip netns exec container-1 ip link set lo up

fedora@localhost:~$ sudo ip link add veth1 type veth peer name vethc1

fedora@localhost:~$ sudo ip link set vethc1 netns container-1

fedora@localhost:~$ sudo ip netns exec container-1 ip a add 172.18.0.2/24 dev vethc1

fedora@localhost:~$ sudo ip a add 172.18.0.1/24 dev veth1

fedora@localhost:~$ sudo ip link set veth1 up

fedora@localhost:~$ sudo ip netns exec container-1 ip link set vethc1 up

fedora@localhost:~$ sudo ip netns exec container-1 ip r add default via 172.18.0.1 dev vethc1 src 172.18.0.2

fedora@localhost:~$ sudo iptables -t nat -A POSTROUTING -s 172.18.0.0/24 ! -o veth1 -j MASQUERADE

This brings us back to where we last left off.

Note: In the last post, I had switched the veth interfaces, with the host having vethc1 and the container having veth1. This time the host has veth1 and the container has vethc1. It doesn't matter which end goes where, except when running commands that specify the device names.

Also, if you have not enabled ip_forward yet, now is a good time to do it.
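
For the ip_forward reminder, here is a minimal sketch of checking the setting before changing it. The helper name forwarding_enabled and its optional path argument are my own additions (the argument only exists so the check can be pointed at another file); the /proc path and the sysctl key are the standard Linux ones.

```shell
#!/bin/sh
# Succeeds (exit code 0) when the given ip_forward file contains 1.
# Defaults to the kernel's IPv4 forwarding switch.
forwarding_enabled() {
    [ "$(cat "${1:-/proc/sys/net/ipv4/ip_forward}")" = "1" ]
}

if forwarding_enabled; then
    echo "IPv4 forwarding is already enabled"
else
    # The change lasts until the next reboot and needs root,
    # so the command is only printed here.
    echo "run: sudo sysctl -w net.ipv4.ip_forward=1"
fi
```

The script only reads the setting; the sysctl command is printed rather than executed so that nothing changes until you run it yourself.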

Setting up Bridge

In principle, adding another container should just mean repeating the steps above with another name, say container-2, but there is a little more to it. Until now we were using a simple point-to-point Ethernet connection. Going back to our physical analogy, we had two physical devices and connected them in the simplest way possible, with a single Ethernet cable. This does not scale. The moment we add a third device, we need 2 more cables just to keep the network fully connected.

To simplify networking we will add another network device, a switch. Now our connections look like this:

With a switch, each new device needs only 1 more Ethernet connection (up to the number of ports on the switch). If you know your OSI model, switches work at Layer 2 of the networking model. This means they forward traffic based on MAC addresses rather than IP addresses, but still allow devices to communicate with each other.
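
To put numbers on the scaling argument, here is a small sketch that counts cables for both layouts. The function names mesh_links and switch_links are made up for this example. A full mesh of n devices needs n(n-1)/2 cables, while a switch needs one cable per device.

```shell
#!/bin/sh
# Cables needed to connect n devices directly to each other (full mesh).
mesh_links() {
    echo $(( $1 * ($1 - 1) / 2 ))
}

# Cables needed when every device plugs into one switch.
switch_links() {
    echo "$1"
}

for n in 2 3 4 10; do
    echo "$n devices: mesh=$(mesh_links "$n") switch=$(switch_links "$n")"
done
```

For 3 devices both layouts come out at 3 cables. From the fourth device onwards the switch needs fewer, and the gap keeps growing because each new device costs n-1 cables in a mesh but only 1 with a switch.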

Back in our container world, just as we had veth pairs standing in for Ethernet cables, we have the bridge interface standing in for a switch. In fact, Docker in its default mode uses a bridge and veth pairs for networking.

Default mode? Does that mean there are other methods besides bridge? Yes, but for now we will focus on bridge networking.

Enough theory, let's get our hands dirty.

Creating Bridge

Creating a network bridge is really simple with our handy iproute2 tool.

fedora@localhost:~$ sudo ip link add cont-br0 type bridge

fedora@localhost:~$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host noprefixroute

valid_lft forever preferred_lft forever

2: enp1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:a5:e0:0e brd ff:ff:ff:ff:ff:ff

inet 192.168.122.240/24 brd 192.168.122.255 scope global dynamic noprefixroute enp1s0

valid_lft 2068sec preferred_lft 2068sec

inet6 fe80::5054:ff:fea5:e00e/64 scope link noprefixroute

valid_lft forever preferred_lft forever

4: veth1@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 92:b2:24:76:93:41 brd ff:ff:ff:ff:ff:ff link-netns container-1

inet 172.18.0.1/24 scope global veth1

valid_lft forever preferred_lft forever

inet6 fe80::90b2:24ff:fe76:9341/64 scope link proto kernel_ll

valid_lft forever preferred_lft forever

5: cont-br0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether c6:ac:f6:b3:a2:17 brd ff:ff:ff:ff:ff:ff

Connecting veth to bridge

Before we connect our veth device to the bridge, there is one thing we should know. Remember that the bridge works at Layer 2? That means it makes little sense to keep an IP address on a veth interface that is attached to it, because IP lives at Layer 3 and the attached veth now acts only as a port of the switch. The host's address moves to the bridge interface itself, so all IP routing decisions are made at the bridge level and not at the veth level.

Note: This is the reason why, when you check the veth interfaces created by Docker, they don't have an IP assigned to them, and only the bridge interface does.

So we must first undo a few things: take the veth down, then move the IP to the bridge, before we can bring our interfaces back up.

fedora@localhost:~$ sudo ip link set veth1 down

fedora@localhost:~$ sudo ip a delete 172.18.0.1/24 dev veth1

fedora@localhost:~$ sudo ip a add 172.18.0.1/24 dev cont-br0

fedora@localhost:~$ sudo ip link set veth1 master cont-br0

fedora@localhost:~$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host noprefixroute

valid_lft forever preferred_lft forever

2: enp1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:a5:e0:0e brd ff:ff:ff:ff:ff:ff

inet 192.168.122.240/24 brd 192.168.122.255 scope global dynamic noprefixroute enp1s0

valid_lft 3426sec preferred_lft 3426sec

inet6 fe80::5054:ff:fea5:e00e/64 scope link noprefixroute

valid_lft forever preferred_lft forever

4: veth1@if3: <BROADCAST,MULTICAST> mtu 1500 qdisc noqueue master cont-br0 state DOWN group default qlen 1000

link/ether 92:b2:24:76:93:41 brd ff:ff:ff:ff:ff:ff link-netns container-1

5: cont-br0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 92:b2:24:76:93:41 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.1/24 scope global cont-br0

valid_lft forever preferred_lft forever

Note the master cont-br0 on veth1: this is how we know our virtual Ethernet interface is attached to the bridge. You may also notice that cont-br0 now reports the same MAC address as veth1.
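
If you would rather not scan the full ip a output for the master keyword, iproute2 can filter on it directly. The sketch below adds a small helper, ports_of (a name I made up), for the case where you want just the port names in a script. It assumes the one-line-per-interface format of ip -o link show.

```shell
#!/bin/sh
# Print the names of interfaces whose master is the given bridge.
# Reads `ip -o link show` output on stdin.
ports_of() {
    awk -v br="$1" '{
        for (i = 1; i < NF; i++)
            if ($i == "master" && $(i + 1) == br) {
                name = $2
                sub(/@.*/, "", name)   # drop the "@if3" peer suffix
                sub(/:$/, "", name)    # drop the trailing colon
                print name
            }
    }'
}

# On the host from this post (commented out, since cont-br0 must exist):
#   ip -o link show | ports_of cont-br0
#   ip link show master cont-br0    # built-in filter, full details
#   bridge link show                # lists the ports of every bridge
```

For interactive use the two built-in commands in the comments are usually enough; the helper only exists to get bare names.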

Now to bring the interfaces up:

fedora@localhost:~$ sudo ip link set veth1 up

fedora@localhost:~$ sudo ip link set cont-br0 up

fedora@localhost:~$ sudo ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host noprefixroute

valid_lft forever preferred_lft forever

2: enp1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:a5:e0:0e brd ff:ff:ff:ff:ff:ff

inet 192.168.122.240/24 brd 192.168.122.255 scope global dynamic noprefixroute enp1s0

valid_lft 3342sec preferred_lft 3342sec

inet6 fe80::5054:ff:fea5:e00e/64 scope link noprefixroute

valid_lft forever preferred_lft forever

4: veth1@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master cont-br0 state UP group default qlen 1000

link/ether 92:b2:24:76:93:41 brd ff:ff:ff:ff:ff:ff link-netns container-1

inet6 fe80::90b2:24ff:fe76:9341/64 scope link proto kernel_ll

valid_lft forever preferred_lft forever

5: cont-br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 92:b2:24:76:93:41 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.1/24 scope global cont-br0

valid_lft forever preferred_lft forever

inet6 fe80::90b2:24ff:fe76:9341/64 scope link proto kernel_ll

valid_lft forever preferred_lft forever

Validating

Now we just run some tests to ensure that nothing is broken.

fedora@localhost:~$ sudo ip netns exec container-1 ping 172.18.0.1 -c3

PING 172.18.0.1 (172.18.0.1) 56(84) bytes of data.

64 bytes from 172.18.0.1: icmp_seq=1 ttl=64 time=0.150 ms

64 bytes from 172.18.0.1: icmp_seq=2 ttl=64 time=0.035 ms

64 bytes from 172.18.0.1: icmp_seq=3 ttl=64 time=0.043 ms

--- 172.18.0.1 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2060ms

rtt min/avg/max/mdev = 0.035/0.076/0.150/0.052 ms

fedora@localhost:~$ ping 172.18.0.2 -c3

PING 172.18.0.2 (172.18.0.2) 56(84) bytes of data.

64 bytes from 172.18.0.2: icmp_seq=1 ttl=64 time=0.061 ms

64 bytes from 172.18.0.2: icmp_seq=2 ttl=64 time=0.041 ms

64 bytes from 172.18.0.2: icmp_seq=3 ttl=64 time=0.035 ms

--- 172.18.0.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2077ms

rtt min/avg/max/mdev = 0.035/0.045/0.061/0.011 ms

fedora@localhost:~$ curl -s 172.18.0.2:8000/echo | jq

{

"header": {

"Accept": [

"*/*"

],

"User-Agent": [

"curl/8.6.0"

]

},

"body": "Echo response"

}

Fun fact: you can now make sense of all the interfaces on your Docker host. By default, Docker creates docker0 as the default bridge, to which all containers that haven't been explicitly attached to a network are added.

Adding another Container

Revisiting IP assignment

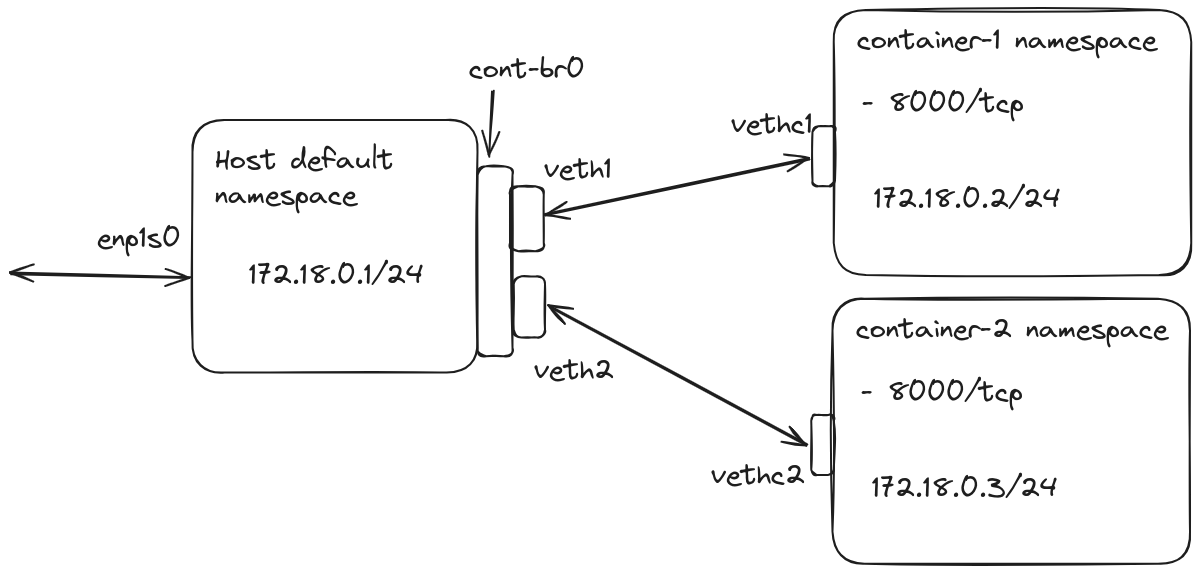

Since we now have one more container, our new IP table looks like this.

| Namespace | IP |
| --- | --- |
| Host | 172.18.0.1/24 |
| container-1 | 172.18.0.2/24 |
| container-2 | 172.18.0.3/24 |

Creating container-2

Now with all our previous knowledge, we can add a second container easily.

fedora@localhost:~$ sudo ip netns add container-2

fedora@localhost:~$ sudo ip netns exec container-2 ./echo-server

time=2024-07-24T16:59:25.228+02:00 level=INFO msg="web server is running" listenAddr=:8000

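As before, the echo server stays in the foreground, so the remaining commands are run from another terminal. The container-2 namespace already exists, so it is not created again.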

fedora@localhost:~$ sudo ip netns exec container-2 ip link set lo up

fedora@localhost:~$ sudo ip link add veth2 type veth peer name vethc2

fedora@localhost:~$ sudo ip link set vethc2 netns container-2

fedora@localhost:~$ sudo ip netns exec container-2 ip a add 172.18.0.3/24 dev vethc2

fedora@localhost:~$ sudo ip netns exec container-2 ip link set vethc2 up

fedora@localhost:~$ sudo ip link set veth2 master cont-br0

fedora@localhost:~$ sudo ip link set veth2 up

fedora@localhost:~$ sudo ip netns exec container-2 ip r add default via 172.18.0.1 dev vethc2 src 172.18.0.3

fedora@localhost:~$ sudo mkdir -p /etc/netns/container-2

fedora@localhost:~$ echo "nameserver 1.1.1.1" | sudo tee -a /etc/netns/container-2/resolv.conf

nameserver 1.1.1.1
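
Since the steps for container-2 mirror those for container-1, they can be folded into one parameterized helper. The following is only a sketch under a few assumptions: the names run, add_container and APPLY are mine, the bridge is always cont-br0 with gateway 172.18.0.1, and the container-3 address 172.18.0.4 is a hypothetical next entry in the table. It uses the long forms ip addr and ip route, which are equivalent to the ip a and ip r used above. By default it only prints the commands.

```shell
#!/bin/sh
# Print the commands by default; set APPLY=1 to actually run them with sudo.
run() {
    if [ "${APPLY:-0}" = "1" ]; then
        sudo "$@"
    else
        echo "sudo $*"
    fi
}

# add_container INDEX IP: attach container-INDEX to cont-br0 with address IP.
add_container() {
    idx=$1 addr=$2
    ns="container-$idx" host_if="veth$idx" cont_if="vethc$idx"

    run ip netns add "$ns"
    run ip netns exec "$ns" ip link set lo up
    run ip link add "$host_if" type veth peer name "$cont_if"
    run ip link set "$cont_if" netns "$ns"
    run ip netns exec "$ns" ip addr add "$addr/24" dev "$cont_if"
    run ip netns exec "$ns" ip link set "$cont_if" up
    run ip link set "$host_if" master cont-br0
    run ip link set "$host_if" up
    run ip netns exec "$ns" ip route add default via 172.18.0.1 dev "$cont_if" src "$addr"
}

# Hypothetical third container, printed as a dry run.
add_container 3 172.18.0.4
```

Printing first makes it easy to compare the generated commands with the ones typed by hand before letting the script touch the system.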

Connecting containers

The upside of using a bridge like this is that all the containers can communicate with each other through the cont-br0 bridge interface. To reach any IP in the same network, a container simply sends out an ARP request for it and then talks to the other container directly through the bridge.
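
You can watch the ARP step happen by looking at a container's neighbour table after it has talked to the other container. The sketch below assumes the usual ip neigh show line format (IP, dev, interface, lladdr, MAC, state); the helper name mac_of is my own.

```shell
#!/bin/sh
# Print the MAC address recorded for an IP in `ip neigh show` output (stdin).
# Prints nothing if the IP is absent or has no resolved address yet.
mac_of() {
    awk -v ip="$1" '$1 == ip {
        for (i = 2; i < NF; i++)
            if ($i == "lladdr") print $(i + 1)
    }'
}

# On the host from this post, after a ping from container-1 to 172.18.0.3:
#   sudo ip netns exec container-1 ip neigh show | mac_of 172.18.0.3
#   sudo ip netns exec container-2 ip -br link show vethc2   # should show the same MAC
#   bridge fdb show br cont-br0                              # MAC-to-port table of the bridge
```

The MAC that container-1 has learned for 172.18.0.3 should be the one on vethc2, which shows that the two containers address each other directly and the bridge only forwards frames between its ports.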

Result

And with this, we now have 2 containers running on our system. We can run all the standard tests, such as pinging the devices and curling the echo servers, and everything should work.
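
As one way to run those tests in a single go, the sketch below prints a small check list covering every address from the table plus the echo servers. The function name connectivity_checks is made up, and the list assumes both echo servers are still listening on port 8000.

```shell
#!/bin/sh
# Print one check per line; pipe the output to `sh -ex` to execute them.
connectivity_checks() {
    for ns in container-1 container-2; do
        for ip in 172.18.0.1 172.18.0.2 172.18.0.3; do
            echo "sudo ip netns exec $ns ping -c1 -W1 $ip"
        done
    done
    # Each echo server should answer the other container and the host.
    echo "sudo ip netns exec container-1 curl -s 172.18.0.3:8000/echo"
    echo "sudo ip netns exec container-2 curl -s 172.18.0.2:8000/echo"
    echo "curl -s 172.18.0.3:8000/echo"
}

connectivity_checks
```

Running the script on its own only lists the commands. Piping its output to sh -ex executes them one by one and stops at the first failure.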

Here is the current overview of how our devices are connected:
