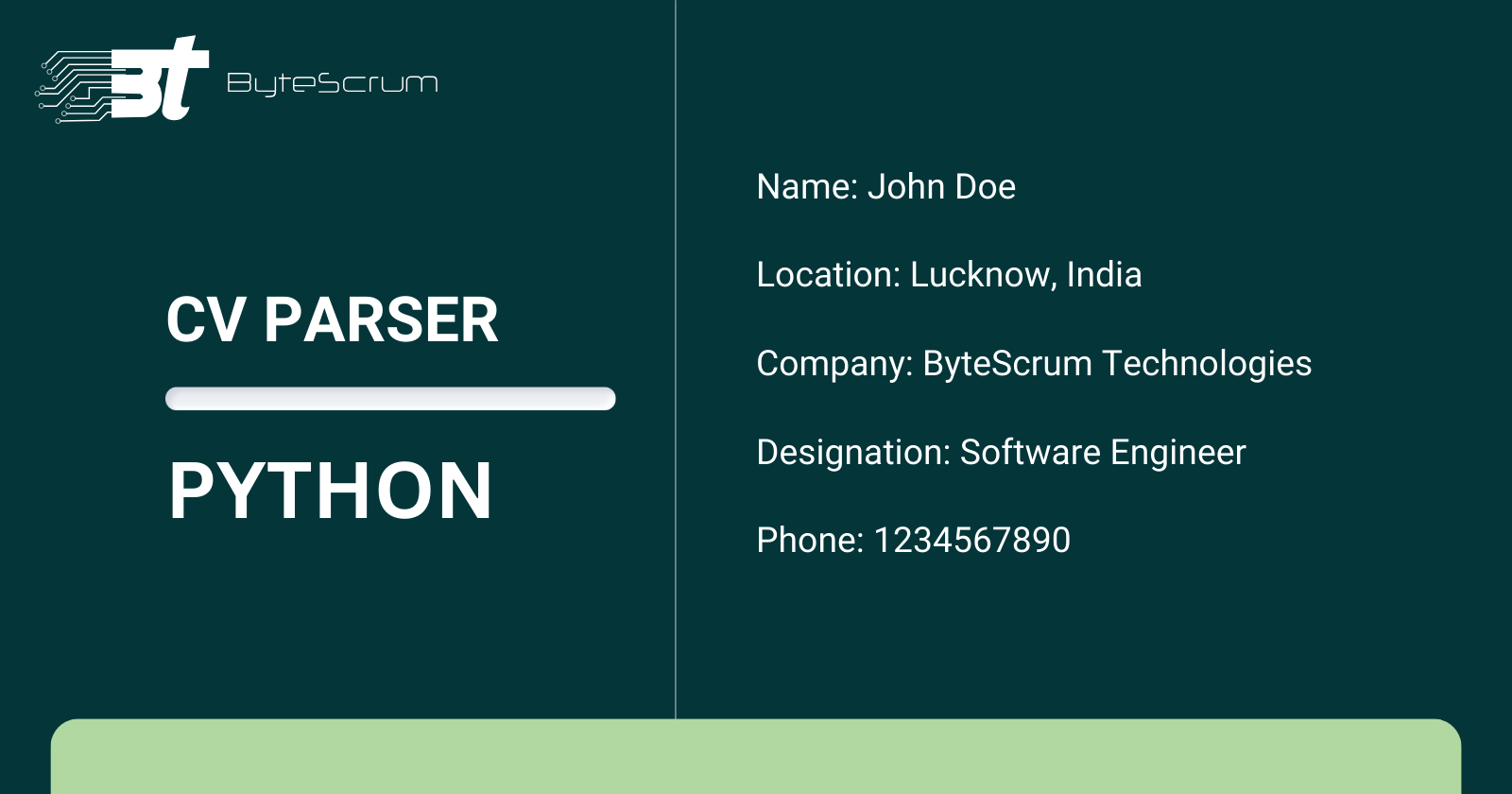

How to Create a Python CV Parser for Various File Formats

ByteScrum Technologies

In the competitive job market, automating the process of scanning numerous resumes can save valuable time and resources. A CV parser can extract relevant information from resumes and store it in a structured format for further analysis. This blog post will guide you through building an advanced CV parser in Python that supports multiple file formats (PDF, DOCX, TXT) and utilizes spaCy for enhanced entity recognition.

Prerequisites

Before you begin, make sure Python is installed on your machine. You can download it from python.org.

The following libraries will also need to be installed:

- spaCy: for natural language processing
- pdfminer.six: for extracting text from PDF files
- docx2txt: for extracting text from DOCX files
- pyresparser: a pre-built resume parser

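Install them with pip:

pip install spacy pdfminer.six pyresparser docx2txt

The code in Step 3 loads spaCy's en_core_web_sm model, which is downloaded separately:

python -m spacy download en_core_web_sm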

Step 1: Extracting Text from Different File Formats

We will create one function per format to extract text from PDF, DOCX, and TXT files.

PDF Extraction

from pdfminer.high_level import extract_text

def extract_text_from_pdf(pdf_path):
    # pdfminer.six returns the text of the whole document as one string
    return extract_text(pdf_path)

DOCX Extraction

import docx2txt

def extract_text_from_docx(docx_path):
    # docx2txt returns the document text as a single string
    return docx2txt.process(docx_path)

TXT Extraction

def extract_text_from_txt(txt_path):
    # Read the plain-text resume as UTF-8
    with open(txt_path, 'r', encoding='utf-8') as file:
        return file.read()
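The extractors above can be selected by file extension. The sketch below shows one possible way to do that with a lookup table. `EXTRACTORS` and `extract_text_by_suffix` are hypothetical names that do not appear in the original code, and only the TXT reader is registered so that the snippet runs without third-party packages.

```python
from pathlib import Path


def extract_text_from_txt(txt_path):
    """Read a plain-text resume as UTF-8."""
    with open(txt_path, 'r', encoding='utf-8') as file:
        return file.read()


# Hypothetical lookup table: lower-case suffix -> extractor function.
# The PDF and DOCX extractors from this step could be registered the same way,
# for example EXTRACTORS['.pdf'] = extract_text_from_pdf.
EXTRACTORS = {'.txt': extract_text_from_txt}


def extract_text_by_suffix(file_path, extractors=EXTRACTORS):
    """Pick an extractor from the file suffix, ignoring case."""
    suffix = Path(file_path).suffix.lower()
    if suffix not in extractors:
        raise ValueError(f'Unsupported file format: {suffix or "(none)"}')
    return extractors[suffix](file_path)
```

Lower-casing the suffix means files such as `CV.TXT` or `resume.PDF` are handled the same way as their lower-case counterparts.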

Step 2: Parsing the Extracted Text

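Next, we parse the resume with pyresparser. Note that ResumeParser takes the path to the resume file rather than the text extracted in Step 1; it reads the file itself and returns the extracted fields as a dictionary.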

from pyresparser import ResumeParser

def parse_resume(file_path):
    # ResumeParser reads the file at file_path and returns a dict of fields
    return ResumeParser(file_path).get_extracted_data()

Step 3: Enhancing the Parser with spaCy

In this step, spaCy will be used to improve the accuracy of the CV parser through Named Entity Recognition (NER). spaCy is a powerful NLP library that can identify and classify named entities within a text, such as names, dates, organizations, and more. This will help in extracting more precise information from resumes.

import spacy

def enhance_with_spacy(text):
    # Load the small English pipeline
    nlp = spacy.load('en_core_web_sm')
    doc = nlp(text)
    # Collect each named entity as a (text, label) pair
    entities = [(ent.text, ent.label_) for ent in doc.ents]
    return entities

resume_text = """
John Doe
123 Main Street
Anytown, USA
johndoe@example.com
(555) 555-5555

Experience:
Software Engineer at Google (2018-2021)
Developed scalable web applications.
Worked with a team of developers to create innovative solutions.

Education:
Bachelor of Science in Computer Science, MIT (2014-2018)
"""

# Process the text with spaCy
entities = enhance_with_spacy(resume_text)

# Print the extracted entities
for entity in entities:
    print(f"Entity: {entity[0]}, Label: {entity[1]}")

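Illustrative output (the exact entities depend on the model and its version; the stock en_core_web_sm label set does not include PHONE or JOB, so those two lines would only appear with a custom component):

Entity: John Doe, Label: PERSON
Entity: 123 Main Street, Label: LOC
Entity: Anytown, Label: GPE
Entity: USA, Label: GPE
Entity: (555) 555-5555, Label: PHONE
Entity: Software Engineer, Label: JOB
Entity: Google, Label: ORG
Entity: 2018-2021, Label: DATE
Entity: MIT, Label: ORG
Entity: 2014-2018, Label: DATE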
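spaCy's general-purpose English models are trained on labels such as PERSON, ORG, GPE, and DATE, so e-mail addresses and phone numbers are usually easier to pick up with regular expressions. The following is a minimal sketch under that assumption. `extract_contacts` and both patterns are illustrative and not part of the original code, and the phone pattern only targets North American style numbers like the one in the sample resume.

```python
import re

# Illustrative patterns, deliberately simple rather than exhaustive
EMAIL_RE = re.compile(r'[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}')
PHONE_RE = re.compile(r'\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}')


def extract_contacts(text):
    """Return every e-mail address and phone number found in the text."""
    return {
        'emails': EMAIL_RE.findall(text),
        'phones': PHONE_RE.findall(text),
    }


sample = "John Doe\njohndoe@example.com\n(555) 555-5555\nGoogle (2018-2021)\n"
print(extract_contacts(sample))
# {'emails': ['johndoe@example.com'], 'phones': ['(555) 555-5555']}
```

The result could be merged into the parsed data alongside the spaCy entities, for example under a separate `contacts` key.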

Step 4: Structuring the Extracted Data

The structured data will be saved in a JSON file.

import json

def save_to_json(data, output_path):
    # Write the parsed data as indented, human-readable JSON
    with open(output_path, 'w') as f:
        json.dump(data, f, indent=4)
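A flat list of (text, label) pairs is awkward to query once it is in JSON. As one possible refinement, the sketch below groups entities by label before serializing. `group_entities` and `to_json_text` are hypothetical helpers, not part of the original code, and the sample entity list is made up for illustration.

```python
import json
from collections import defaultdict


def group_entities(entities):
    """Turn [(text, label), ...] into {label: [text, ...]}, dropping duplicates."""
    grouped = defaultdict(list)
    for text, label in entities:
        if text not in grouped[label]:
            grouped[label].append(text)
    return dict(grouped)


def to_json_text(data):
    """Serialize to indented JSON; ensure_ascii=False keeps accented names readable."""
    return json.dumps(data, indent=4, ensure_ascii=False)


# Made-up sample in the same shape that enhance_with_spacy returns
entities = [('Google', 'ORG'), ('MIT', 'ORG'), ('Google', 'ORG'), ('2018-2021', 'DATE')]
print(to_json_text(group_entities(entities)))
```

When writing text produced with `ensure_ascii=False` to disk, it is safest to open the output file with `encoding='utf-8'`.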

Step 5: Integrating the Functions

The text extraction functions, parsing, and spaCy enhancement will be integrated into a single script.

from pdfminer.high_level import extract_text
from pyresparser import ResumeParser
import spacy
import docx2txt
import json

def extract_text_from_pdf(pdf_path):
    return extract_text(pdf_path)

def extract_text_from_docx(docx_path):
    return docx2txt.process(docx_path)

def extract_text_from_txt(txt_path):
    with open(txt_path, 'r', encoding='utf-8') as file:
        return file.read()

def parse_resume(file_path):
    # pyresparser reads the file itself, so it receives the path, not the text
    return ResumeParser(file_path).get_extracted_data()

def enhance_with_spacy(text):
    nlp = spacy.load('en_core_web_sm')
    doc = nlp(text)
    entities = [(ent.text, ent.label_) for ent in doc.ents]
    return entities

def save_to_json(data, output_path):
    with open(output_path, 'w') as f:
        json.dump(data, f, indent=4)

def main(file_path, output_path):
    # Compare against the lower-cased path so that .PDF, .Docx, etc. also match
    lowered = file_path.lower()
    if lowered.endswith('.pdf'):
        resume_text = extract_text_from_pdf(file_path)
    elif lowered.endswith('.docx'):
        resume_text = extract_text_from_docx(file_path)
    elif lowered.endswith('.txt'):
        resume_text = extract_text_from_txt(file_path)
    else:
        raise ValueError('Unsupported file format')

    resume_data = parse_resume(file_path)
    spacy_entities = enhance_with_spacy(resume_text)
    # Store the spaCy entities next to the fields returned by pyresparser
    resume_data['spacy_entities'] = spacy_entities

    save_to_json(resume_data, output_path)
    print(f"Resume data saved to {output_path}")

if __name__ == "__main__":
    file_path = 'path/to/your/resume'  # Specify the resume file path here
    output_path = 'parsed_resume.json'
    main(file_path, output_path)
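Since the goal is to scan many resumes, the script can be driven from a folder instead of a single path. The sketch below is one way to collect the supported files and derive an output name for each. `SUPPORTED_SUFFIXES`, `find_resumes`, and `output_path_for` are hypothetical helpers that are not part of the original script.

```python
from pathlib import Path

# Suffixes handled by the script in this step (an assumption of this sketch)
SUPPORTED_SUFFIXES = {'.pdf', '.docx', '.txt'}


def find_resumes(directory):
    """Return the supported resume files directly inside a directory, sorted."""
    return sorted(
        path for path in Path(directory).iterdir()
        if path.is_file() and path.suffix.lower() in SUPPORTED_SUFFIXES
    )


def output_path_for(resume_path, output_dir):
    """Map a resume such as resumes/jane.pdf to <output_dir>/jane.json."""
    return Path(output_dir) / (Path(resume_path).stem + '.json')
```

Each path returned by `find_resumes` could then be passed to `main` together with `output_path_for(path, 'parsed')`, converting both to strings first. The output directory has to exist beforehand, and two resumes that share a file name but differ in extension would map to the same JSON file.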

Conclusion

In this post, we built a CV parser in Python that supports PDF, DOCX, and TXT files and combines pyresparser with spaCy. This parser is a solid starting point for automating the process of scanning and parsing resumes. It can be further extended with additional NLP techniques and integrated into larger systems for even more powerful resume processing capabilities. Happy coding!
