Docker Networking Made Simple: Introductory Guide

Daawar Pandit

In Docker environments, networking is often overlooked, which leads to connectivity issues, performance problems, and security vulnerabilities. Understanding Docker networking is crucial for network admins who need to configure container architectures correctly. Docker networking enables communication between Docker containers and the outside world via the host machine, and Docker supports various network types, each suited to specific use cases.

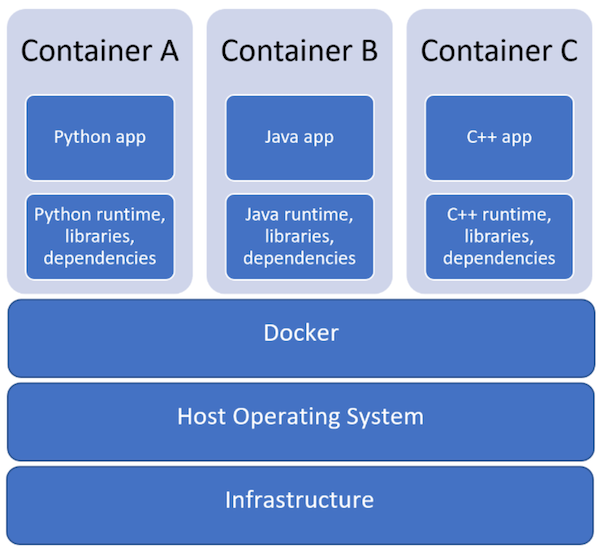

Before diving into Docker networking, let’s quickly define the term ‘Docker’ itself.

What is Docker?

Docker is a platform that uses OS-level virtualization to help users develop, deploy, manage, and run applications in containers, packaged together with all their library dependencies.

What Is a Docker Network?

Networking is about communication among processes, and Docker’s networking is no different. Docker networking is primarily used to establish communication between Docker containers and the outside world via the host machine where the Docker daemon is running.

Docker supports different types of networks, each suited to particular use cases. We’ll explore the network drivers Docker supports, along with some code examples.

Docker Network Types

Docker networks configure communications between neighboring containers and external services. Containers must be connected to a Docker network to receive any network connectivity. The communication routes available to the container depend on the network connections it has.

Out of the box, Docker creates three networks, named bridge, host, and none, each backed by a built-in driver. These may not fit every use case, so we’ll also explore the overlay and macvlan drivers, which you use by creating your own networks. Let’s take a closer look at each one.
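To see the networks Docker creates out of the box, you can list them together with their drivers. The sketch below is a minimal check, assuming a standard installation; the function name check_default_networks is hypothetical. Note that the none network is listed with the driver name null, and hosts in a swarm will also show extra networks such as ingress.

```shell
# Sketch: confirm that Docker's three out-of-the-box networks exist.
# check_default_networks is a hypothetical helper name, not a Docker command.

check_default_networks() {
  local networks missing expected
  # Print "name driver" for every network on this host.
  networks=$(docker network ls --format '{{.Name}} {{.Driver}}')
  missing=0
  # The "none" network is backed by the driver named "null".
  for expected in "bridge bridge" "host host" "none null"; do
    if printf '%s\n' "$networks" | grep -qx "$expected"; then
      echo "found:   $expected"
    else
      echo "missing: $expected"
      missing=1
    fi
  done
  return "$missing"
}
```

After sourcing the snippet, running check_default_networks on a fresh installation should report all three networks as found.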

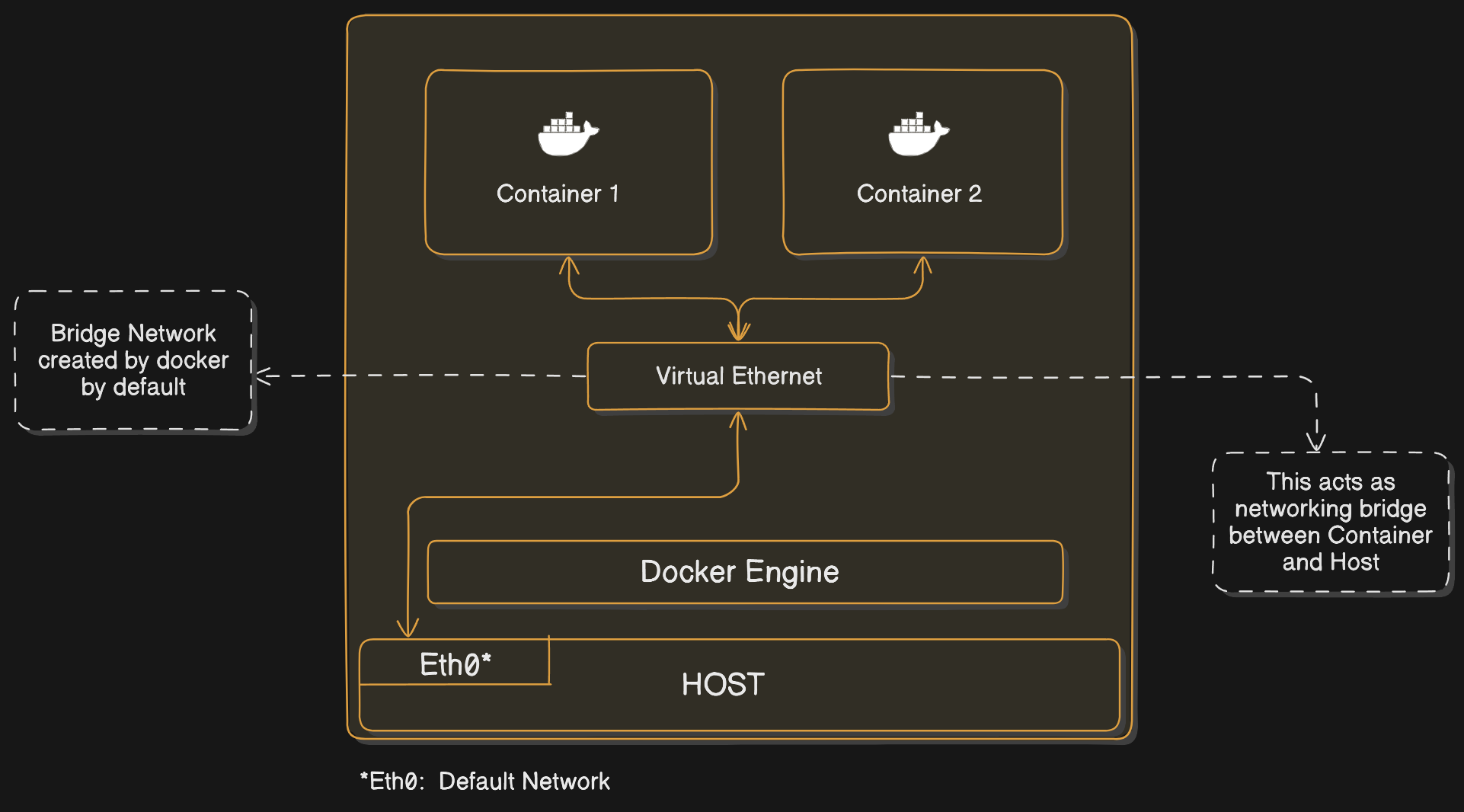

1. The Bridge Driver

This is the default driver. When Docker starts, it creates a network named bridge, and every new container connects to it automatically unless you specify another network. Containers on the same bridge network can communicate with each other, and Docker installs iptables rules on the host machine so that containers on different bridge networks cannot communicate directly.

In this mode each container gets its own network namespace, and the containers share the host’s external IP address through Network Address Translation (NAT), as shown in the figure below.

Default Docker Bridge Network Configuration:

This figure illustrates the default bridge network setup created by Docker. It shows how containers (Container 1 and Container 2) communicate through virtual Ethernet interfaces managed by the Docker Engine. The bridge network connects these containers to the host machine’s default network interface (eth0).
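The default bridge setup can be seen in action with two containers started without a --network flag, so both join the bridge network. On the default bridge, Docker does not resolve container names (that only happens on user-defined networks), so this sketch looks up the second container’s IP address first and pings it from the first container. The function name and the container names you pass in are hypothetical; the alpine image is assumed.

```shell
# Sketch: two containers on the default bridge network reaching each other by IP.
# demo_default_bridge and the container names passed to it are hypothetical.

demo_default_bridge() {
  local first="$1" second="$2" second_ip
  # No --network flag: both containers attach to the default "bridge" network.
  docker run -dit --name "$first" alpine sh
  docker run -dit --name "$second" alpine sh
  # Read the second container's address on the default bridge network.
  second_ip=$(docker inspect -f '{{.NetworkSettings.Networks.bridge.IPAddress}}' "$second")
  echo "$second is at $second_ip"
  # Ping by IP; pinging by container name would fail on the default bridge.
  docker exec "$first" ping -c 2 "$second_ip"
}
```

For example, demo_default_bridge web1 web2 runs the demo; remove the containers afterwards with docker rm -f web1 web2.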

Desired Networking Scenarios

Scenario 1: Connection between Containers:

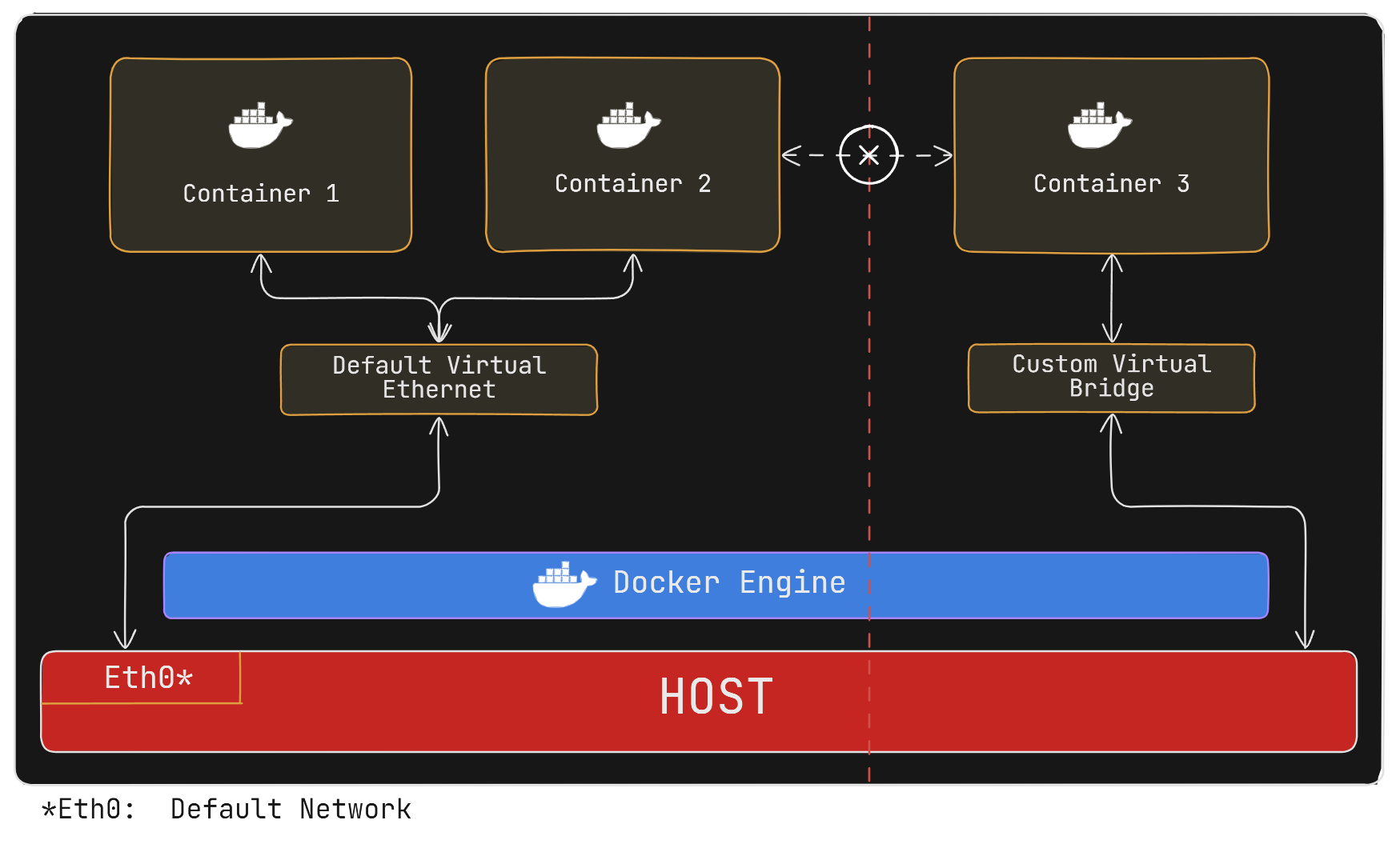

Containers need to communicate so they can work together, for example in multi-container applications. This scenario works out of the box, because containers share the same (default bridge) network by default.

Scenario 2: Isolation between Containers:

Keep containers separate to enhance security and prevent unauthorized access, especially in environments with sensitive data.

How do we Isolate a Container in the Docker Network?

To isolate containers using Docker, creating a custom bridge network is a straightforward and effective method. A custom bridge network lets you control which containers can talk to each other, while keeping them separate from other containers.

As shown in the figure above, Container 3 is connected to the host via a custom bridge network, which isolates it from Containers 1 and 2 on the default bridge network.

Here’s how to create a custom bridge network:

Ensure Docker is installed (docker --version should print the installed version).

Create a Custom Bridge Network: This network is separate from Docker's default network.

docker network create my_custom_network

Run Containers on the Custom Network: Specify the custom network when starting your containers.

docker run -d --name container1 --network my_custom_network image:tag
docker run -d --name container2 --network my_custom_network image:tag

Containers connected to my_custom_network can communicate with each other (and reach each other by container name) but are isolated from containers not on this network. This method helps you manage communication and isolation efficiently.
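One way to check this isolation is to compare the networks two containers are attached to: if they share none, they cannot talk to each other directly over a Docker network. The sketch below does that with docker inspect and a Go template; the helper names networks_of and shared_networks are hypothetical.

```shell
# Sketch: list the Docker networks two containers have in common.
# networks_of and shared_networks are hypothetical helper names.

# Print each network a container is attached to, one name per line.
networks_of() {
  docker inspect -f '{{range $name, $cfg := .NetworkSettings.Networks}}{{println $name}}{{end}}' "$1" |
    sed '/^$/d'   # drop the blank line left by the final newline
}

# Print the networks both containers are attached to (empty output = isolated).
shared_networks() {
  local net
  for net in $(networks_of "$1"); do
    if networks_of "$2" | grep -qx "$net"; then
      echo "$net"
    fi
  done
}
```

In the setup from the figure, shared_networks container1 container3 would be expected to print nothing, while shared_networks container1 container2 prints bridge.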

Understanding and configuring Docker networking is essential for managing container communication and isolation effectively. By leveraging custom bridge networks, you can precisely control how containers interact with each other while maintaining necessary isolation. This approach ensures both secure and efficient operation of your containerized applications.

2. The Host Driver

This mode allows the container to share the networking namespace of the host, making it directly exposed to the public network. Containers will use the host’s IP address and TCP port space to expose the service running inside the container.

The following command starts an Nginx container that listens on port 80 of the host machine:

docker run --rm -d --network host --name my_nginx nginx

You can access Nginx at http://localhost:80/.

The downside of the host network is that you can’t run multiple containers that listen on the same port on one host, because all containers using the host network share the host’s port space.
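With the bridge driver you can avoid this conflict by publishing each container’s port 80 to a different host port with -p. The sketch below contrasts the two modes; the function name run_nginx, the container names, and the port numbers are illustrative assumptions.

```shell
# Sketch: start Nginx either on the host network or on the default bridge
# with a published port. run_nginx is a hypothetical helper name.

# Usage: run_nginx <name> host           -> listens on host port 80 directly
#        run_nginx <name> bridge <port>  -> host <port> is forwarded to container port 80
run_nginx() {
  local name="$1" mode="$2" host_port="${3:-}"
  case "$mode" in
    host)
      # Shares the host's port space: only one such container can bind port 80.
      docker run --rm -d --network host --name "$name" nginx ;;
    bridge)
      # Each container has its own port 80; -p maps a distinct host port to it.
      docker run --rm -d -p "$host_port:80" --name "$name" nginx ;;
    *)
      echo "unknown mode: $mode" >&2
      return 1 ;;
  esac
}
```

For example, run_nginx web_a bridge 8080 and run_nginx web_b bridge 8081 can run side by side at http://localhost:8080/ and http://localhost:8081/, whereas a second container started in host mode would find port 80 already taken, so its Nginx process could not bind it.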

3. The None Driver

The none network driver does not attach containers to any network: the container gets only a loopback interface, so it cannot reach the external network or communicate with other containers. Use it when you want to disable networking for a container.

The following command starts a container with networking disabled:

docker run --rm -d --network none --name my_nginx nginx
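To confirm that a container on the none network really has no connectivity, you can try to reach an outside address from a throwaway container. The sketch below assumes the alpine image (whose BusyBox ping supports -c and -W) and uses 8.8.8.8 only as an example external address; the function name network_status is hypothetical.

```shell
# Sketch: check whether a throwaway container on a given network can reach
# an external address. network_status is a hypothetical helper name.

network_status() {
  local net="$1"
  # One ping with a 2-second timeout; output is discarded, only the exit code matters.
  if docker run --rm --network "$net" alpine ping -c 1 -W 2 8.8.8.8 >/dev/null 2>&1; then
    echo "reachable"
  else
    echo "unreachable"
  fi
}
```

network_status none should print unreachable, while network_status bridge should print reachable, provided the host itself has Internet access.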

4. The Overlay Driver

The Overlay driver is for multi-host network communication, as with Docker Swarm. It allows containers running on different hosts to communicate with each other without you having to set up routing between the hosts yourself. Think of an overlay network as a distributed virtualized network that’s built on top of an existing computer network.

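Overlay networks require swarm mode. To create an overlay network that both Swarm services and standalone containers can use, initialize a swarm (if the host is not already part of one) and add the --attachable flag:

#Initialize swarm mode (skip if the host is already a swarm node):

docker swarm init

#Create overlay network:

docker network create -d overlay --attachable my-overlay-network

#attach created network to Container:

docker run --rm -d --network my-overlay-network --name my_nginx nginx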
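Overlay networks are mainly meant for Swarm services spread across several nodes. The sketch below initializes swarm mode only if the node is not already in a swarm, creates an attachable overlay network if it does not exist yet, and deploys a replicated Nginx service on it. The function name, the service name, and the replica count are assumptions for illustration.

```shell
# Sketch: deploy a replicated service on an overlay network.
# deploy_on_overlay is a hypothetical helper name.

deploy_on_overlay() {
  local net="$1" service="$2"
  # Overlay networks need swarm mode; initialize it only if this node is not in a swarm.
  if [ "$(docker info --format '{{.Swarm.LocalNodeState}}')" != "active" ]; then
    docker swarm init
  fi
  # Create the network unless it already exists (--attachable also allows docker run --network).
  if ! docker network inspect "$net" >/dev/null 2>&1; then
    docker network create -d overlay --attachable "$net"
  fi
  # Two replicas, both attached to the overlay network; Swarm may place them on different nodes.
  docker service create --name "$service" --network "$net" --replicas 2 nginx
}
```

For example, deploy_on_overlay my-overlay-network web. On a host with several IP addresses, docker swarm init may ask you to choose one with --advertise-addr.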

5. The Macvlan Driver

This driver connects Docker containers directly to the physical host network. As per the Docker documentation:

“Macvlan networks allow you to assign a MAC address to a container, making it appear as a physical device on your network. The Docker daemon routes traffic to containers by their MAC addresses. Using the macvlan driver is sometimes the best choice when dealing with legacy applications that expect to be directly connected to the physical network, rather than routed through the Docker host’s network stack.”

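Macvlan networks are therefore a good fit when containerizing legacy applications that still need to be attached directly to the physical network, for example for performance reasons. Note that the host’s network interface must allow multiple MAC addresses (promiscuous mode), which many cloud environments do not permit.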
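A macvlan network is created against a parent interface on the host and a subnet from your physical LAN. In the sketch below, the interface name eth0, the subnet 192.168.1.0/24, the gateway 192.168.1.1, and the container address 192.168.1.50 are assumptions; replace them with values from your own network and pick an address your DHCP server will not hand out. The function name is hypothetical.

```shell
# Sketch: create a macvlan network and start a container with its own LAN address.
# create_macvlan_app and all addresses/interface names below are hypothetical examples.

create_macvlan_app() {
  local net="$1" container="$2"
  # parent=eth0: the host interface whose physical network the containers join.
  docker network create -d macvlan \
    --subnet=192.168.1.0/24 \
    --gateway=192.168.1.1 \
    -o parent=eth0 \
    "$net"
  # A fixed IP makes the container reachable like any other device on the LAN.
  docker run --rm -d --network "$net" --ip 192.168.1.50 --name "$container" nginx
}
```

Other machines on the LAN can then reach the container at its own address. By default, however, the Docker host itself cannot reach macvlan containers through the parent interface.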

Basic Docker Networking Commands:

List Networks

docker network ls

Inspect a Network

docker network inspect <network_name_or_id>

Create a Network

docker network create <network_name>

Remove a Network

docker network rm <network_name>

Connect a Container to a Network

docker network connect <network_name> <container_name_or_id>

Disconnect a Container from a Network

docker network disconnect <network_name> <container_name_or_id>
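The connect and disconnect commands can be combined to move a running container from one network to another without restarting it. A minimal sketch; move_container is a hypothetical helper name, and the container stays reachable on the old network until the disconnect step runs.

```shell
# Sketch: move a running container between networks.
# move_container is a hypothetical helper name.

move_container() {
  local container="$1" from_net="$2" to_net="$3"
  # Attach to the new network first so the container is never left without a network.
  docker network connect "$to_net" "$container" || return 1
  # Then detach it from the old one.
  docker network disconnect "$from_net" "$container"
  # Show the networks the container is now attached to.
  docker inspect -f '{{range $name, $cfg := .NetworkSettings.Networks}}{{$name}} {{end}}' "$container"
}
```

For example, move_container web bridge my_custom_network takes a container named web off the default bridge and onto the custom network.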

Example: Creating and Using a Custom Bridge Network

Step 1: Create a Custom Bridge Network

docker network create my_bridge_network

Step 2: Run Containers on the Custom Network

docker run -dit --name container1 --network my_bridge_network alpine sh

docker run -dit --name container2 --network my_bridge_network alpine sh

Step 3: Verify Connectivity

Open a shell inside container1:

docker exec -it container1 sh

Ping container2 from container1 (-c 3 stops after three packets):

ping -c 3 container2
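The three steps above can also be scripted end to end, followed by cleanup. This sketch reuses the names from the example (my_bridge_network, container1, container2) and runs ping with -c 3 so it stops on its own; the function name run_bridge_example is hypothetical.

```shell
# Sketch: Steps 1-3 as one script, followed by cleanup.
# run_bridge_example is a hypothetical helper name.

run_bridge_example() {
  local net="my_bridge_network" status
  # Step 1: create the user-defined bridge network.
  docker network create "$net"
  # Step 2: start two long-running Alpine containers on it.
  docker run -dit --name container1 --network "$net" alpine sh
  docker run -dit --name container2 --network "$net" alpine sh
  # Step 3: on a user-defined network, Docker's embedded DNS resolves container names.
  docker exec container1 ping -c 3 container2
  status=$?
  # Clean up the containers and the network, then return the ping result.
  docker rm -f container1 container2
  docker network rm "$net"
  return "$status"
}
```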

Summary:

In Docker environments, mastering networking is crucial for effective container communication, isolation, and security. This article covers Docker's network basics and delves into various built-in and user-defined network drivers—Bridge, Host, None, Overlay, and Macvlan. It provides practical examples for creating and managing Docker networks, illustrating how to connect and isolate containers efficiently. Essential Docker networking commands are also highlighted to help you manage containerized applications with precision.

Written by

Daawar Pandit

Hello, I'm Daawar Pandit, an aspiring DevOps engineer with a robust background in search quality rating and a passion for Linux systems. I am dedicated to mastering the DevOps toolkit, including Docker, Kubernetes, Jenkins, and Terraform, to streamline deployment processes and enhance software integration. Through this blog, I share insights, tips, and experiences in the DevOps field, aiming to contribute to the tech community and further my journey towards becoming a proficient DevOps professional. Join me as I delve into the dynamic world of DevOps engineering.