Kubernetes Architecture: A Clear and Simple Breakdown ☸️✔️

Malhar Kauthale


What is Kubernetes?

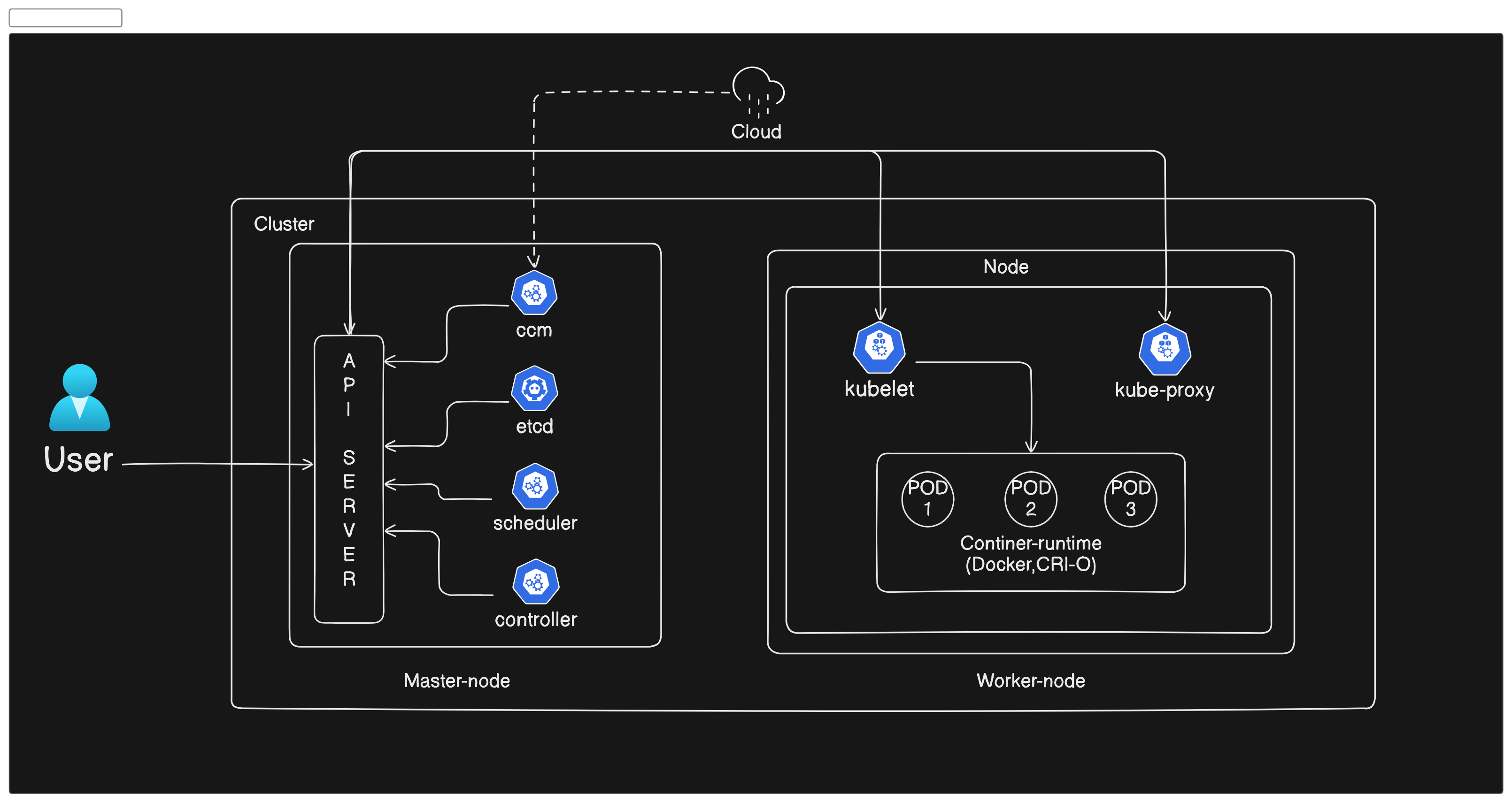

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It provides a framework for handling the complexity of deploying and running containers across a cluster of machines. Its architecture is made up of several key components that work together to manage those applications. Here's a detailed explanation of that architecture.

Components:

In Kubernetes, a cluster is a set of nodes that run containerized applications. A cluster consists of one or more master nodes (also called control plane nodes) and a number of worker nodes. The master nodes manage the cluster and orchestrate the operations of the worker nodes, which run the applications.

1. Master Node Components

The master node hosts the control plane of the Kubernetes cluster, which manages the cluster's lifecycle, including scheduling, scaling, and updates. Key components of the master node include:

etcd: A distributed key-value store that holds the configuration data, state, and metadata for the Kubernetes cluster, including information about Pods, Services, replication settings, and more. It serves as the cluster's central source of truth and stores every object under the /registry key prefix.

kube-apiserver: The API server is the front end of the Kubernetes control plane and the central hub of the cluster. It exposes the Kubernetes API and is the only component that talks to etcd directly. It is designed to scale horizontally, so it can handle a large number of concurrent requests. End users and other cluster components all talk to the cluster through the API server.
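To make the `/registry` keys concrete, here is a toy Python model of that key space. It assumes the common `/registry/<resource>/<namespace>/<name>` pattern used by namespaced resources such as Pods and ConfigMaps (some resources use slightly different paths), a plain dict stands in for etcd, and the object names are made up. Listing every Pod in a namespace becomes a prefix scan, which is roughly how collections are read from etcd.

```python
from __future__ import annotations

# Toy model of etcd's flat key space; NOT a real etcd client.
# Keys follow the assumed layout /registry/<resource>/<namespace>/<name>.


def registry_key(resource: str, namespace: str, name: str) -> str:
    """Build a key in the assumed /registry/<resource>/<namespace>/<name> layout."""
    return f"/registry/{resource}/{namespace}/{name}"


def list_prefix(store: dict[str, str], prefix: str) -> list[str]:
    """Return keys starting with `prefix`, sorted (like an etcd range read)."""
    return sorted(key for key in store if key.startswith(prefix))


# Hypothetical cluster state; real etcd values are serialized API objects.
store = {
    registry_key("pods", "default", "web-1"): "<pod web-1>",
    registry_key("pods", "default", "web-2"): "<pod web-2>",
    registry_key("pods", "kube-system", "coredns-1"): "<pod coredns-1>",
    registry_key("configmaps", "default", "app-config"): "<configmap app-config>",
}

# "List all Pods in the default namespace" is a prefix scan. The trailing
# slash keeps a namespace like "default-staging" from matching too.
default_pods = list_prefix(store, "/registry/pods/default/")
print(default_pods)  # ['/registry/pods/default/web-1', '/registry/pods/default/web-2']
```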

kube-scheduler: The scheduler is responsible for placing Pods onto suitable worker nodes. It takes into account factors such as resource availability (CPU, memory), constraints, affinity, taints and tolerations, priority, persistent volumes (PVs), and other optimization goals.

kube-controller-manager: What is a controller? Controllers are programs that run infinite control loops: they run continuously, watch the actual and desired state of objects, and, whenever the two differ, act to bring the Kubernetes resource back to its desired state. The kube-controller-manager runs the controller processes that handle routine tasks in the cluster, such as replication, endpoint creation, and node operations. It includes several controllers, such as:

Node Controller: Manages node health and status.

Replication Controller: Ensures that the desired number of pod replicas are running.

Endpoints Controller: Populates the Endpoints object (joins Services and Pods).

Service Account & Token Controllers: Create default accounts and API access tokens.
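Going back to the kube-scheduler for a moment: its placement decision works in two broad phases, first filtering out nodes that cannot run the Pod, then scoring the remaining candidates. The Python sketch below is a deliberately tiny stand-in for that process. It only checks free CPU/memory and a simplified taint rule, and the "most CPU left wins" scoring, node names, and numbers are illustrative assumptions, not the real scheduler's plugins or weights.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cpu_m: int                               # free CPU in millicores
    free_mem_mi: int                              # free memory in MiB
    taints: set[str] = field(default_factory=set)


@dataclass
class PodSpec:
    name: str
    cpu_m: int
    mem_mi: int
    tolerations: set[str] = field(default_factory=set)


def feasible(node: Node, pod: PodSpec) -> bool:
    """Filter phase: enough resources and every taint is tolerated (toy rule)."""
    fits = node.free_cpu_m >= pod.cpu_m and node.free_mem_mi >= pod.mem_mi
    return fits and node.taints <= pod.tolerations


def score(node: Node, pod: PodSpec) -> int:
    """Score phase (toy rule): prefer the node with the most CPU left over."""
    return node.free_cpu_m - pod.cpu_m


def schedule(pod: PodSpec, nodes: list[Node]) -> str | None:
    candidates = [n for n in nodes if feasible(n, pod)]
    if not candidates:
        return None  # the real scheduler would leave the Pod Pending
    return max(candidates, key=lambda n: score(n, pod)).name


nodes = [
    Node("node-a", free_cpu_m=500, free_mem_mi=1024),
    Node("node-b", free_cpu_m=2000, free_mem_mi=4096, taints={"gpu"}),
    Node("node-c", free_cpu_m=1500, free_mem_mi=2048),
]
print(schedule(PodSpec("web", cpu_m=250, mem_mi=256), nodes))  # node-c (node-b is tainted)
```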

cloud-controller-manager: Manages cloud-specific controller logic, allowing for separation of concerns between cloud-specific code and the core Kubernetes logic.

When Kubernetes is deployed in a cloud environment, the cloud controller manager acts as a bridge between the cloud platform's APIs and the Kubernetes cluster. This way the core Kubernetes components can work independently of any particular cloud, while cloud providers integrate with Kubernetes through plugins (for example, an interface between a Kubernetes cluster and the AWS APIs). Cloud controller integration allows a Kubernetes cluster to provision cloud resources such as instances (for nodes) and load balancers (for Services); storage volumes for persistent volumes are nowadays typically provisioned through CSI drivers instead.

The cloud controller manager contains a set of cloud platform-specific controllers that ensure the desired state of cloud-specific components (nodes, routes, load balancers, etc.). The following are the three main controllers that are part of the cloud controller manager.

Node controller: This controller updates node-related information by talking to the cloud provider API, for example node labels and annotations, hostnames, CPU and memory availability, and node health.

Route controller: It is responsible for configuring network routes on the cloud platform so that pods on different nodes can talk to each other.

Service controller: It takes care of deploying cloud load balancers for Kubernetes Services of type LoadBalancer and assigning their IP addresses.
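The plugin idea behind the cloud controller manager can be sketched as an interface that the core logic calls and each cloud provider implements. In the Python toy below, `CloudProvider`, `ensure_load_balancer`, `FakeCloud`, and `service_controller_sync` are hypothetical names invented for illustration (the real cloud-provider interfaces are Go code with different signatures). The point is only that the Service controller asks "some cloud" for a load balancer without knowing which cloud it is.

```python
from __future__ import annotations

from typing import Protocol


class CloudProvider(Protocol):
    """Hypothetical provider interface; each cloud would ship its own implementation."""

    def ensure_load_balancer(self, service_name: str, node_names: list[str]) -> str:
        """Create or update a load balancer for the Service; return its external IP."""
        ...


class FakeCloud:
    """Stand-in provider that 'provisions' load balancers in memory."""

    def __init__(self) -> None:
        self.backends: dict[str, list[str]] = {}  # service -> nodes behind its LB
        self.ips: dict[str, str] = {}             # service -> assigned external IP

    def ensure_load_balancer(self, service_name: str, node_names: list[str]) -> str:
        if service_name not in self.ips:
            # Hand out addresses from the 203.0.113.0/24 documentation range.
            self.ips[service_name] = f"203.0.113.{len(self.ips) + 10}"
        self.backends[service_name] = list(node_names)  # refresh backends every sync
        return self.ips[service_name]


def service_controller_sync(
    cloud: CloudProvider, services: dict[str, str], nodes: list[str]
) -> dict[str, str]:
    """Ensure each LoadBalancer-type Service has a cloud LB; return name -> external IP."""
    return {
        name: cloud.ensure_load_balancer(name, nodes)
        for name, svc_type in services.items()
        if svc_type == "LoadBalancer"
    }


cloud = FakeCloud()
services = {"web": "LoadBalancer", "db": "ClusterIP"}
print(service_controller_sync(cloud, services, ["node-a", "node-b"]))  # {'web': '203.0.113.10'}
```

Because `ensure_load_balancer` is idempotent, the controller can call it on every sync: the IP stays stable while the backend node list is refreshed.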

2. Worker Node Components

Worker nodes run the containerized applications and are managed by the master. Each worker node contains the following components:

- kubelet: An agent that runs on every node in the cluster. It watches the API server for the pods assigned to its node and makes sure the specified containers are running and healthy. Its responsibilities include:
  - Creating, modifying, and deleting containers for the pod (through the container runtime).
  - Handling liveness, readiness, and startup probes.
  - Mounting volumes by reading the pod configuration and creating the respective directories on the host for the volume mounts.
  - Collecting and reporting node and pod status to the API server, using sources such as cAdvisor and the CRI.
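The probe handling above follows a simple counting rule: the kubelet runs a probe every `periodSeconds`, and a liveness probe that fails `failureThreshold` times in a row (3 by default) causes the container to be restarted. The Python sketch below replays a list of probe results through that rule. It ignores timing, `initialDelaySeconds`, and startup probes, and the function name is hypothetical.

```python
from __future__ import annotations


def restarts_from_liveness(results: list[bool], failure_threshold: int = 3) -> int:
    """Count restarts triggered by a sequence of liveness probe results.

    True is a passing probe, False a failing one. After `failure_threshold`
    consecutive failures the container is restarted and the count starts over.
    """
    restarts = 0
    consecutive_failures = 0
    for passed in results:
        if passed:
            consecutive_failures = 0  # any success resets the streak
            continue
        consecutive_failures += 1
        if consecutive_failures >= failure_threshold:
            restarts += 1
            consecutive_failures = 0
    return restarts


# Two failures in a row are tolerated; three in a row trigger one restart.
print(restarts_from_liveness([True, False, False, True, False, False, False, True]))  # 1
```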

kube-proxy: A network proxy that maintains network rules on each node and enables communication between pods, Services, and the external network. Kube-proxy typically runs on every node as a DaemonSet and implements the virtual IP behind each Kubernetes Service, which gives a set of pods a single stable address with load balancing across them. It proxies UDP, TCP, and SCTP traffic and does not understand HTTP. When you expose pods using a Service (ClusterIP), kube-proxy creates network rules that send traffic to the backend pods (endpoints) grouped under the Service object. In other words, kube-proxy handles the load balancing behind a Service's virtual IP, while DNS-based service discovery is provided by the cluster DNS add-on (usually CoreDNS).
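Conceptually, kube-proxy turns a Service's stable virtual IP into a choice among the current backend endpoints. The Python sketch below models that with a round-robin picker. It is a simplification: the real kube-proxy programs kernel rules (for example iptables or IPVS) rather than forwarding connections itself, and in iptables mode the backend is picked at random rather than in strict rotation. The Service IP and endpoint addresses are made up.

```python
from __future__ import annotations

import itertools


class ServiceProxy:
    """Toy model of a Service virtual IP that round-robins across its endpoints."""

    def __init__(self, cluster_ip: str, port: int, endpoints: list[str]) -> None:
        self.cluster_ip = cluster_ip
        self.port = port
        self.update_endpoints(endpoints)

    def update_endpoints(self, endpoints: list[str]) -> None:
        """Called when the set of ready Pods behind the Service changes."""
        if not endpoints:
            raise ValueError("service has no ready endpoints")
        self._cycle = itertools.cycle(list(endpoints))

    def route(self, dst_ip: str, dst_port: int) -> str:
        """Map a connection to ClusterIP:port onto one backend Pod address."""
        if (dst_ip, dst_port) != (self.cluster_ip, self.port):
            raise LookupError("destination is not this Service")
        return next(self._cycle)


svc = ServiceProxy("10.96.0.20", 80, ["10.244.1.5:8080", "10.244.2.7:8080"])
print([svc.route("10.96.0.20", 80) for _ in range(3)])
# ['10.244.1.5:8080', '10.244.2.7:8080', '10.244.1.5:8080']
```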

Container Runtime: The container runtime is the software responsible for running containers, and it runs on every node in the Kubernetes cluster. It pulls images from container registries, runs containers, allocates and isolates resources for them, and manages the entire lifecycle of each container on the host. Kubernetes works with any runtime that implements the Container Runtime Interface, such as containerd and CRI-O; Docker Engine can still be used through the cri-dockerd adapter, because the built-in Docker support (dockershim) was removed in Kubernetes 1.24. To understand this better, let’s take a look at two key concepts:

Container Runtime Interface (CRI): A set of APIs that lets Kubernetes interact with different container runtimes, so that they can be used interchangeably. The CRI defines the API for creating, starting, stopping, and deleting containers, as well as for managing images and pod sandboxes.

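Open Container Initiative (OCI): A set of open standards for container image formats, runtimes, and image distribution. Low-level runtimes such as runc implement the OCI runtime specification.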
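The benefit of the CRI is that the kubelet codes against one interface and any compliant runtime can sit behind it. The Python sketch below mimics that shape with a `typing.Protocol`. The method names echo a few real CRI RPCs (`PullImage`, `RunPodSandbox`, `CreateContainer`, `StartContainer`), but the real CRI is a gRPC API with much richer messages; `InMemoryRuntime` and `start_pod` are hypothetical.

```python
from __future__ import annotations

import itertools
from typing import Protocol


class ContainerRuntime(Protocol):
    """Simplified, hypothetical mirror of a few CRI operations."""

    def pull_image(self, image: str) -> None: ...
    def run_pod_sandbox(self, pod_name: str) -> str: ...
    def create_container(self, sandbox_id: str, image: str) -> str: ...
    def start_container(self, container_id: str) -> None: ...


class InMemoryRuntime:
    """Fake runtime that records state instead of starting real containers."""

    def __init__(self) -> None:
        self.images: set[str] = set()
        self.created: dict[str, str] = {}  # container id -> image (not yet started)
        self.running: dict[str, str] = {}  # container id -> image
        self._ids = itertools.count(1)

    def pull_image(self, image: str) -> None:
        self.images.add(image)

    def run_pod_sandbox(self, pod_name: str) -> str:
        return f"sandbox-{pod_name}"

    def create_container(self, sandbox_id: str, image: str) -> str:
        if image not in self.images:
            raise RuntimeError(f"image {image!r} has not been pulled")
        container_id = f"{sandbox_id}/ctr-{next(self._ids)}"
        self.created[container_id] = image
        return container_id

    def start_container(self, container_id: str) -> None:
        self.running[container_id] = self.created.pop(container_id)


def start_pod(runtime: ContainerRuntime, pod_name: str, images: list[str]) -> list[str]:
    """Kubelet-style flow that works with ANY object satisfying ContainerRuntime."""
    sandbox_id = runtime.run_pod_sandbox(pod_name)
    container_ids = []
    for image in images:
        runtime.pull_image(image)
        container_id = runtime.create_container(sandbox_id, image)
        runtime.start_container(container_id)
        container_ids.append(container_id)
    return container_ids


rt = InMemoryRuntime()
print(start_pod(rt, "web", ["nginx:1.27", "busybox:1.36"]))
# ['sandbox-web/ctr-1', 'sandbox-web/ctr-2']
```

Swapping `InMemoryRuntime` for any other class with the same four methods requires no change to `start_pod`, which is the interchangeability the CRI is meant to provide.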

3. Pod

A pod is the smallest and simplest deployable unit in Kubernetes. It represents a single instance of a running application in the cluster and can contain one or more containers that work together. The containers inside a pod share the same network namespace (one IP address and port space), so they can communicate with each other over localhost, and they can also share storage volumes. Pods are the basic building blocks for deploying applications in Kubernetes.

4. Cluster Networking

Kubernetes uses a flat network model in which every pod gets its own IP address and can communicate with every other pod, across nodes, without Network Address Translation (NAT). Key components include:

- CNI (Container Network Interface): Provides network connectivity to containers and attaches them to the network.
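One common way to get that flat, NAT-free address space is to carve a cluster-wide Pod CIDR into one subnet per node, so every Pod receives a cluster-unique IP. The Python sketch below does this with the standard `ipaddress` module. The `10.244.0.0/16` range and the `/24` per node are assumptions borrowed from a typical default (flannel uses them, for example); other CNI plugins allocate addresses differently.

```python
from __future__ import annotations

import ipaddress


def allocate_pod_cidrs(
    cluster_cidr: str, nodes: list[str], node_prefix: int = 24
) -> dict[str, ipaddress.IPv4Network]:
    """Give each node its own non-overlapping slice of the cluster Pod CIDR."""
    subnets = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=node_prefix)
    allocation = dict(zip(nodes, subnets))
    if len(allocation) < len(nodes):
        raise ValueError("cluster CIDR is too small for this many nodes")
    return allocation


def pod_ip(node_cidr: ipaddress.IPv4Network, index: int) -> str:
    """Return the index-th usable host address in a node's Pod subnet."""
    return str(list(node_cidr.hosts())[index])


cidrs = allocate_pod_cidrs("10.244.0.0/16", ["node-a", "node-b", "node-c"])
for node, cidr in cidrs.items():
    print(node, cidr, "first pod IP:", pod_ip(cidr, 0))
# node-a 10.244.0.0/24 first pod IP: 10.244.0.1
# node-b 10.244.1.0/24 first pod IP: 10.244.1.1
# node-c 10.244.2.0/24 first pod IP: 10.244.2.1
```

Because the node subnets never overlap, a Pod IP alone tells the network which node to deliver to, so no address translation is needed between Pods.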

5. Controllers

Kubernetes controllers are control loops that watch the state of the cluster through the API server and make changes to achieve the desired state. Key controllers include:

ReplicaSet: Ensures a specified number of pod replicas are running.

Deployment: Provides declarative updates to applications and manages ReplicaSets.

StatefulSet: Manages stateful applications, giving each pod a stable identity and its own persistent storage, and preserving the order in which pods are created and scaled.

DaemonSet: Ensures that a copy of a pod runs on all (or some) nodes.

Job: Manages batch and parallel tasks.

CronJob: Manages time-based jobs.
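The watch-compare-act cycle described above can be sketched in a few lines. The Python toy below plays the role of a ReplicaSet-style controller: each call to `reconcile` compares the desired replica count with the Pods that actually exist and creates or deletes Pods to close the gap. Real controllers run this continuously in response to watch events and make every change through the API server; here a plain list stands in for cluster state, and the Pod naming scheme and "delete the newest" rule are made-up simplifications.

```python
from __future__ import annotations

import itertools

_suffix = itertools.count(1)  # hypothetical Pod-name generator


def reconcile(desired_replicas: int, pods: list[str], prefix: str = "web") -> list[str]:
    """One pass of a control loop: return the Pod list after fixing any drift."""
    pods = list(pods)  # do not mutate the caller's "actual state"
    while len(pods) < desired_replicas:  # too few: create Pods
        pods.append(f"{prefix}-{next(_suffix)}")
    while len(pods) > desired_replicas:  # too many: delete (toy rule: newest first)
        pods.pop()
    return pods


actual: list[str] = []
actual = reconcile(3, actual)  # scale up from nothing
print(actual)                  # ['web-1', 'web-2', 'web-3']
actual.remove("web-2")         # simulate a Pod crashing or its node failing
actual = reconcile(3, actual)  # the controller notices and replaces it
print(actual)                  # ['web-1', 'web-3', 'web-4']
actual = reconcile(1, actual)  # desired state changed: scale down
print(actual)                  # ['web-1']
```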

6. Services

Kubernetes Services are like traffic managers for the applications running in a Kubernetes cluster. They help users and other parts of your application find and talk to the right pods. A Service provides a stable IP address and DNS name for a set of pods, so clients are not affected when individual pods come and go and change IP addresses. Key types of Services include:

ClusterIP: Exposes the service on an internal IP within the cluster, making it accessible only within the cluster.

NodePort: Exposes the service on each node’s IP at a static port, allowing external traffic to access the service using the node's IP and the specified port.

LoadBalancer: Uses a cloud provider’s load balancer to expose the service, providing a single external IP that distributes traffic across the pods.
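For NodePort Services (and, by default, LoadBalancer Services, which build on NodePort), Kubernetes assigns a port from a cluster-wide range, 30000-32767 by default, unless the manifest requests a specific one. The toy Python allocator below illustrates that behavior. The class name and its "lowest free port first" policy are assumptions made for readability; the API server chooses free ports differently.

```python
from __future__ import annotations


class NodePortAllocator:
    """Hands out NodePorts from an inclusive range (default 30000-32767)."""

    def __init__(self, low: int = 30000, high: int = 32767) -> None:
        self.low, self.high = low, high
        self.in_use: set[int] = set()

    def allocate(self, requested: int | None = None) -> int:
        if requested is not None:  # the manifest asked for a specific port
            if not self.low <= requested <= self.high:
                raise ValueError(f"port {requested} is outside {self.low}-{self.high}")
            if requested in self.in_use:
                raise ValueError(f"port {requested} is already allocated")
            self.in_use.add(requested)
            return requested
        for port in range(self.low, self.high + 1):  # toy policy: lowest free port
            if port not in self.in_use:
                self.in_use.add(port)
                return port
        raise RuntimeError("NodePort range exhausted")

    def release(self, port: int) -> None:
        """Free a port when its Service is deleted."""
        self.in_use.discard(port)


ports = NodePortAllocator()
print(ports.allocate())       # 30000 (auto-assigned)
print(ports.allocate(30080))  # 30080 (explicitly requested)
print(ports.allocate())       # 30001
```

Once a port is allocated, the Service is reachable at `<node-ip>:<node-port>` on every node, which is what lets external traffic in without a cloud load balancer.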

7. Volumes

Kubernetes volumes are a way to provide storage to your containers. Think of them as shared storage spaces that your application can use to save and retrieve data. Here’s how they work:

Ephemeral Storage: Some volumes exist only as long as the pod exists. When the pod is deleted, the data is lost (although it does survive container restarts within the pod). This is useful for temporary storage needs.

Persistent Storage: These volumes exist beyond the life of the pod, meaning the data is saved even if the pod stops or restarts. This is useful for databases and other applications that need to keep data over time.

Kubernetes provides different types of volumes, such as:

emptyDir: An empty directory that's created when a pod is assigned to a node and deleted when the pod is removed from that node.

hostPath: Uses a directory or file from the host node's filesystem.

PersistentVolume (PV): A piece of storage in the cluster that has been provisioned by an administrator or dynamically by a StorageClass.

PersistentVolumeClaim (PVC): A request for storage by a user. Kubernetes binds it to a matching PersistentVolume, or provisions a new one dynamically through a StorageClass.
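The PVC-to-PV matching can be illustrated with a simplified binder. The Python sketch below picks, among unbound PersistentVolumes with the same StorageClass and a compatible access mode, the smallest one that is at least as large as the request. That mirrors the general idea, but the real binder checks more fields (label selectors, volume mode, node affinity) and can fall back to dynamic provisioning; all names and sizes here are made up.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PersistentVolume:
    name: str
    capacity_gi: int
    storage_class: str
    access_modes: frozenset[str]
    bound_to: str | None = None  # name of the claim using this PV, if any


@dataclass
class Claim:
    name: str
    request_gi: int
    storage_class: str
    access_mode: str


def bind(claim: Claim, volumes: list[PersistentVolume]) -> PersistentVolume | None:
    """Bind the claim to the smallest matching free PV, or return None if none fits."""
    matches = [
        pv for pv in volumes
        if pv.bound_to is None
        and pv.storage_class == claim.storage_class
        and claim.access_mode in pv.access_modes
        and pv.capacity_gi >= claim.request_gi
    ]
    if not matches:
        return None  # a real cluster might dynamically provision a PV instead
    best = min(matches, key=lambda pv: pv.capacity_gi)
    best.bound_to = claim.name
    return best


volumes = [
    PersistentVolume("pv-100g", 100, "standard", frozenset({"ReadWriteOnce"})),
    PersistentVolume("pv-20g", 20, "standard", frozenset({"ReadWriteOnce"})),
    PersistentVolume("pv-50g-fast", 50, "fast", frozenset({"ReadWriteOnce"})),
]
pv = bind(Claim("db-data", 10, "standard", "ReadWriteOnce"), volumes)
print(pv.name if pv else None)  # pv-20g
```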

8. Namespaces

Namespaces are a way to divide cluster resources between multiple users. They are intended for use in environments with many users spread across multiple teams or projects. Here's how namespaces work:

Isolation: Namespaces separate different parts of a cluster, like having different rooms for different purposes. This isolation helps to avoid conflicts and organize resources.

Resource Management: Just like each room can have its own rules and limits (e.g., how much electricity can be used), namespaces can have their own resource quotas and limits to manage how much CPU and memory can be used.

Access Control: You can control who can enter each room. Similarly, namespaces help manage access and permissions, ensuring that users and applications only interact with the parts of the cluster they are allowed to.
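The resource-quota idea can be sketched as an admission check: before a new pod is accepted into a namespace, its resource requests are added to what the namespace already uses and compared against the quota's hard limits. The Python toy below does this for CPU (in millicores) and memory (in MiB). The namespace names and numbers are hypothetical, and a real ResourceQuota can track many more resources and object counts.

```python
from __future__ import annotations

# Hypothetical per-namespace quotas: hard limits on total requested resources.
quotas = {
    "team-a": {"cpu_m": 2000, "mem_mi": 4096},
    "team-b": {"cpu_m": 500, "mem_mi": 1024},
}
# Resources already requested by running pods in each namespace.
usage = {
    "team-a": {"cpu_m": 1500, "mem_mi": 2048},
    "team-b": {"cpu_m": 0, "mem_mi": 0},
}


def admit(namespace: str, pod_requests: dict[str, int]) -> bool:
    """Admit the pod only if every resource stays within the namespace's quota."""
    hard, used = quotas[namespace], usage[namespace]
    if any(used[r] + pod_requests.get(r, 0) > hard[r] for r in hard):
        return False  # the API server would reject the pod
    for r in hard:
        used[r] += pod_requests.get(r, 0)  # charge the namespace for the new pod
    return True


print(admit("team-a", {"cpu_m": 400, "mem_mi": 512}))  # True  (1900m of 2000m)
print(admit("team-a", {"cpu_m": 200, "mem_mi": 256}))  # False (2100m > 2000m)
print(admit("team-b", {"cpu_m": 200, "mem_mi": 256}))  # True  (separate budget)
```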

9. ConfigMaps and Secrets

ConfigMaps: Store non-sensitive configuration data as key-value pairs that pods can consume. ConfigMaps are like shared folders for application settings: instead of hardcoding settings in your application code, you put them in a ConfigMap, which makes configuration easier to update and manage without changing the application itself. For example, you can store URLs, feature flags, or other settings in a ConfigMap, and your applications can read them as environment variables or mounted files when they start.

Secrets in Kubernetes are similar to ConfigMaps, but they are meant for sensitive data such as passwords, API keys, and certificates. Note that by default Secret values are only base64-encoded, not encrypted; they are protected through access control (RBAC) and, if the cluster administrator enables it, encryption at rest in etcd. Keeping sensitive values in Secrets separates them from your application code, and applications read them only when they need them.
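Values in a Secret's `data` field are base64-encoded, which is an encoding rather than encryption: anyone who can read the Secret object can decode it without any key. The short Python snippet below uses only the standard `base64` module to show the round trip; the password is a placeholder.

```python
import base64

# What you would put under `data:` in a Secret manifest for the value "s3cr3t-password".
encoded = base64.b64encode(b"s3cr3t-password").decode("ascii")
print(encoded)

# Anyone with read access to the Secret can reverse it; no key is involved.
decoded = base64.b64decode(encoded).decode("utf-8")
print(decoded)  # s3cr3t-password
```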

10. Ingress

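Ingress manages external access to Services in a cluster, typically over HTTP and HTTPS. It provides load balancing, SSL/TLS termination, and name-based virtual hosting, and it needs an Ingress controller running in the cluster to take effect. Because a single Ingress entry point can route traffic to many Services, it is often more cost-effective than exposing each Service through its own LoadBalancer.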
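Name-based virtual hosting and path routing boil down to a lookup from (host, path) to a backend Service. The Python sketch below mimics rules with `pathType: Prefix`: it matches path prefixes on whole path segments and prefers the longest matching prefix for the request's host. The hosts and Service names are hypothetical, and in practice the Ingress controller (NGINX, Traefik, a cloud load balancer, and so on) implements this matching, along with extra features such as `Exact` paths and default backends.

```python
from __future__ import annotations

# Hypothetical Ingress rules: host -> list of (path prefix, backend Service).
RULES = {
    "shop.example.com": [("/", "storefront"), ("/api", "orders-api")],
    "blog.example.com": [("/", "blog")],
}


def prefix_matches(prefix: str, path: str) -> bool:
    """Segment-wise prefix match: '/api' matches '/api' and '/api/x' but not '/apix'."""
    prefix_parts = [p for p in prefix.split("/") if p]
    path_parts = [p for p in path.split("/") if p]
    return path_parts[: len(prefix_parts)] == prefix_parts


def route(host: str, path: str) -> str | None:
    """Return the backend Service for a request, preferring the longest matching prefix."""
    candidates = [
        (prefix, svc) for prefix, svc in RULES.get(host, []) if prefix_matches(prefix, path)
    ]
    if not candidates:
        return None  # a controller would fall back to its default backend (often a 404)
    return max(candidates, key=lambda c: len(c[0]))[1]


print(route("shop.example.com", "/api/orders/42"))  # orders-api
print(route("shop.example.com", "/apix"))           # storefront
print(route("blog.example.com", "/posts/k8s"))      # blog
```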

This architecture allows Kubernetes to provide a robust, flexible, and scalable platform for managing containerized applications, ensuring high availability and efficient resource utilization.


Written by

Malhar Kauthale


Aspiring Cloud DevOps Engineer ♾️ | AWS Enthusiast ☁️ | Python & Shell Scripting 🐍 | Docker 🐳 | Terraform 🛠️ | Kubernetes ☸️ | Web Development 🌐 | Automation & Troubleshooting 🤖🔍 | Always exploring the latest in DevOps tools and practices.