Rethinking Row space and Column space in the Data Way

Rudra Sarkar

Rudra SarkarTable of contents

Hi everyone, welcome to my blog. In this blog, I have addressed a very basic concept of linear algebra: row space and column space. While there are a huge amount of resources that address these concepts, I have shared my perspective, and I have tried to tie the concepts to their manifestation in the practical world in the form of data. It’s a common practice in machine learning and deep learning to aggregate the data in matrices and pass it to the learning model. While it seems like a fairly straightforward process, deciphering some nuances associated with this aggregation can introduce new dimensions to how we perceive these operations.

Let’s say we have tabular data consisting of \(m\\\) records. Each record is attributed with \(n\\\) number of features and a corresponding target value. For instance, let’s say we have tabular data that holds the price of houses for varied feature settings like total area, number of bedrooms, etc. The column containing the price of homes can be considered a target if we intend to build a model that can generalise the price of houses given a set of values against the predefined features. If the data for the features are aggregated in a matrix \({X}\\\)∈ \(ℝ^{m× n}\\\) and the targets in a separate vector \(Y\\\)∈\(ℝ^{m}\\\), let’s explore the structure of this data matrix \({X}\\\).

Linear Independence

Row space of a matrix is defined as the subspace in \(ℝ^n\\\) spanned by the linearly independent row vectors of that matrix. Now, what does that exactly mean? Let’s first address the linearly independent nature of a set of vectors. We call a set of vectors linearly independent if none of the vectors in the set can be expressed as a weighted sum of the vectors in the set except itself. Another way to identify a set of linearly independent vectors is by checking the existence of the unique solution to the following equation.

$$\sum_{i=1}^m \omega_i x_{ri} = 0 : \forall i, \omega_i = 0$$

Let’s first convince ourselves that this is, in fact, true. Let there be a vector \({x}_r\\\\\) which is a linear combination of the row vectors of \({X}\\\), i.e

$$x_r = \sum_{i=1}^m\theta_i x_{ri} :\theta_i \in \mathbb{R}$$

Now, instead of \(ω_i\\\\\), let us re-initialize the \({m}+1\\\) weights as\(\{k_1, k_2, ..., k_{m+1}\}\\\)

$$\therefore \sum_{i=1}^mk_ix_{ri}+k_{m+1}x_r = 0\\\implies \sum_{i=1}^mk_ix_{ri} + k_{m+1}\sum_{i=1}^m\theta_ix_{ri} = 0$$

which implies \(\\ \sum_{i=1}^m(k_i + k_{m+1}.\theta_i)x_{ri} = 0\\\). Now if we are solving for the values of \({k}_i\\\), we can ignore the row vectors and aggregate the values in a matrix and present it in the following fashion

$$\begin{bmatrix} k_1 + k_{m+1}.\theta_1\\k_2+k_{m+1}.\theta_2\\\vdots \\ k_m +k_{m+1}.\theta_m \end{bmatrix} = \begin{bmatrix} 0\\0\\ \vdots \\0 \end{bmatrix}$$

Now we can see that there are \({m}\\\) equations and \({m}+1\\\) unknowns, considering the values of \(θ_{i}\\\) are given to us, implying an infinite number of solutions. Now we can consider a similar situation with the exception of \({x}_r\\_{m+1}\\\) being linearly independent. In such a case it would be trivial to note that there would be both \({m}+1\\\) equations and unknowns, yielding us a trivial solution. By asserting non-zero on the row vectors, it leaves us with the conclusion that the weights must be zero in order to satisfy the equation.

$$\sum_{i=1}^mk_ix_{ri}+k_{m+1}x_r = 0 \implies \sum_{i=1}^{m+1}k_ix_{ri} = 0$$

$$\text{or}, \begin{bmatrix} k_1 \\k_2\\\vdots \\ k_{m+1} \end{bmatrix} = \begin{bmatrix} 0\\0\\ \vdots \\0 \end{bmatrix}$$

Row space

The set consisting of all the vectors, which can be expressed as a weighted sum of all the row vectors provided the weights are real, is called the row space of that matrix. Now in the context of \({X}\\\) , if the set of row vectors\(\{x_{r0}, x_{r1}, \cdots, x_{rm}\} : x_{ri}\in \mathbb{R}^n\\\) are linearly independent, and the relationship between \({X}\\\) and \({Y}\\\) is linear (i.e. the target value can be expressed as a weighted sum/linear combination of the feature values), then we can predict the target value for any unknown record with 100% accuracy, provided the record can be expressed as a linear combination of the row vectors. The reason why we can accomplish this is due to our simplistic assumption of linearity between the features and the target. This results in the phenomena where both the unknown feature vector and the target vector share the same space, i.e. the row space of \({X}\\\) . Under this assumption of linearity \(\forall i\in [1, m], y_i = x_{ri} \beta\\\) , where \(\beta = [\beta_1, \beta_2, \cdots, \beta_n]^T\\\) . This linearity also allows us to determine the target value for any unknown records \({x}'\\\) , which is a linear combination of the row vectors of \({X}\\\) i.e \(x' = \sum_{i=1}^m k_i.x_{ri}\\\)

$$y' = x'\beta = \bigg(\sum_{i=1}^m k_i.x_{ri}\bigg)\beta = \bigg(\sum_{i=1}^m k_i.x_{ri}\beta\bigg) = \sum_{i=1}^m k_i.y_i$$

Column space

Similar to row space, the column space of a matrix is the subspace in \(\mathbb{R}^m\\\) spanned by the column vectors of \({X}\\\). A careful reader might point out the missing criteria of linear independence, as mentioned in the row space. To be precise, the subspace spanned by the linearly independent vectors is the same as the subspace spanned by the linearly independent vectors, plus the vectors that are linearly dependent as the linearly dependent vectors will be anyways considered in the subspace spanned by the linearly independent vectors. Let’s assume that the column vectors of \({X}\\\) i.e \(\{x_{c_1}, x_{c_2}, \cdots, x_{c_n}\} : x_{c_i} \in \mathbb{R}^m\\\) are linearly independent. And like before, we will assume a linear relationship between \({X}\\\) and \({Y}\\\) which suggests,

$$Y = \sum_{i=1}^n\alpha_i x_{c_i}, \forall \alpha_i\in \mathbb{R}$$

Essentially, this suggests that the target values can be expressed as a linear combination of the column vectors. For any column vector \({x}_{c_i}\\\) , the \(j^{th}\\\) element in the vector represents the value of the \(i^{th}\\\) attribute corresponding to the record \({j}\\\) . Therefore, the column vectors can be identified as feature vectors that follow the historical data from the records to compute the composition of individual features. Like before, let’s explore the case when a new feature, which is a linear combination of the feature vectors, is considered for each record. This new feature can be expressed as : \(x_{c_{n+1}} = \sum_{i=1}^n\eta_i x_{c_i}, \forall \eta_i \in \mathbb{R}\\\) . Let’s now explore the scenario when this new feature vector is included for the computation of \({Y}\\\) . During this procedure, we’ll assume that the \(α_i\\\) s have been re-initialized as random scalars.

$$\therefore \sum_{i=1}^n\alpha_ix_{x_i}+\alpha_{n+1}x_{c_{n+1}} = Y$$

$$\implies \sum_{i=1}^n\alpha_ix_{c_i} + \alpha_{n+1}\sum_{i=1}^n\eta_i x_{c_{i}} = Y \implies \sum_{i=1}^n(\alpha_i + \alpha_{n+1}.\eta_i)x_{c_i} = Y$$

$$\implies \sum_{i=1}^n\zeta_i x_{c_i} = Y,\ \ \forall \zeta_i = \alpha_i + \alpha_{n+1}.\eta_i \ \ \& \ \ \zeta_i\in \mathbb{R}$$

This suggests that the computation of \({Y}\\\) is feasible without the new feature vector. In case where we have previously calculated the values of \(α_i\) s such that \(Y = \sum_{i=1}^n\alpha_i x_{c_i}, \forall \alpha_i\in \mathbb{R}\\\) , it is fairly easy to see that \(α_{n+1} = 0\\\) implying the useless effect of the introduction of this new feature.

Visualisation

If you think the concepts still need to be more clear, here is a fun example that can help you unlock another perspective.

Let's take the case of RGB colours. A colour can be represented using three attributes, i.e. Red(R), Green(G) and Blue(B). Each of these attributes can be assigned a positive real value, which represents the intensity of that particular colour channel. For the primary colours, i.e. red or green or blue, other than that specific channel, the rest of the channels can be assigned a value of 0. Let's look at a python code to concretise our understanding

def convert_to_color(color_list):

r, g, b = color_list[0], color_list[1], color_list[2]

r = torch.ones((32, 32))*r

g = torch.ones((32,32))*g

b = torch.ones((32, 32))*b

y = torch.stack([r, g, b]).permute(1, 2, 0)

y = (y - y.min())/(y.max() - y.min())

return y

data_matrix = np.array([

[1, 0, 0], # Red channel

[0, 1, 0], # Green channel

[0, 0, 1] # Blue channel

])

fig, ax = plt.subplots(1, 3, figsize = (12, 4))

for i in range(3):

ax[i].imshow(convert_to_color(data_matrix[i]))

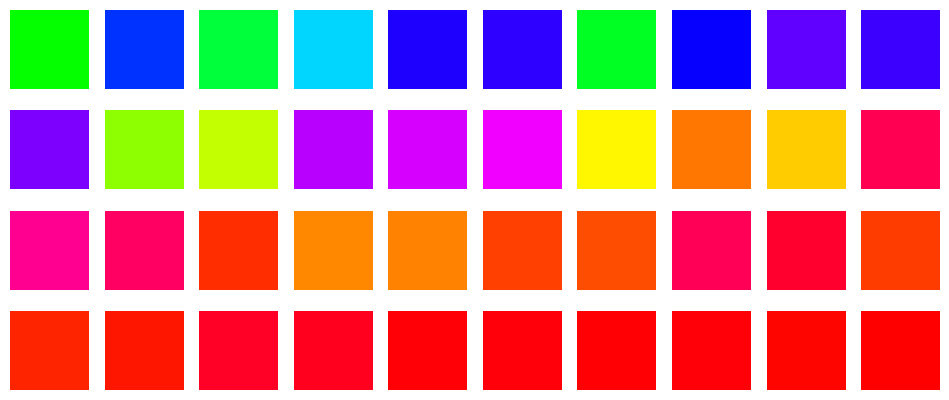

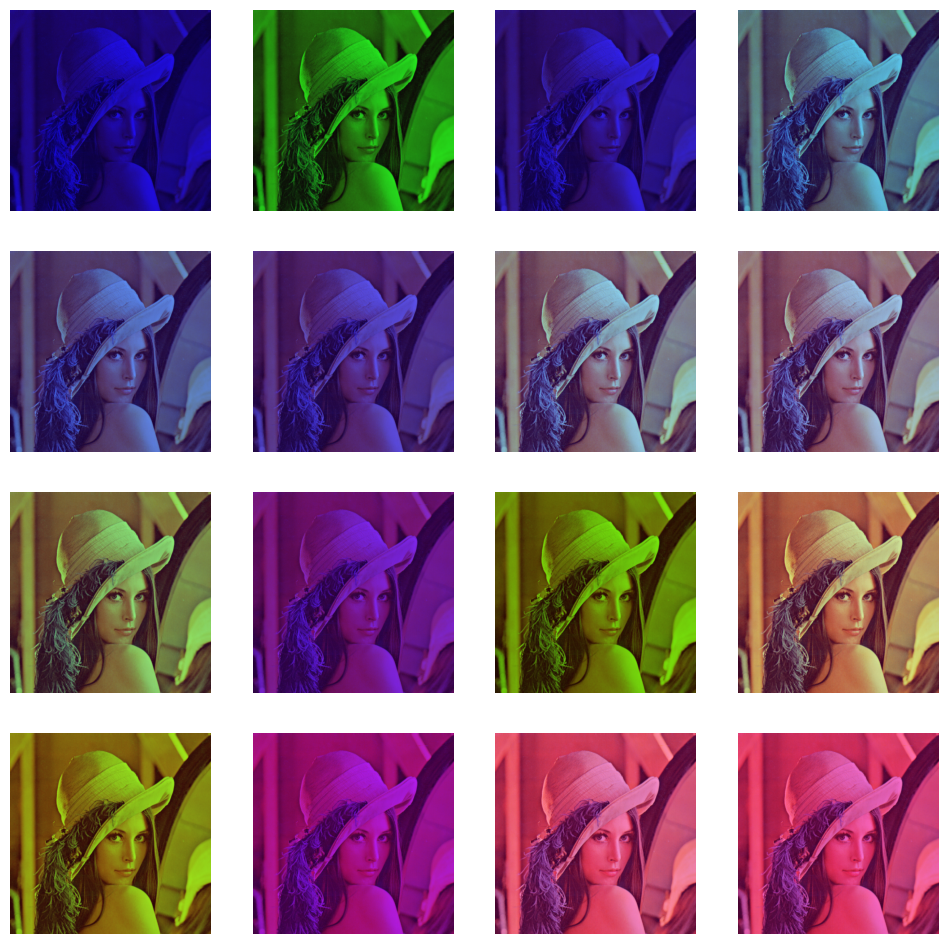

It can be noted that the records in the data matrix are linearly independent. So, ideally, if we were to generate some sample colours which would be a linear combination of these records, it would look something like this.

def generate_linearly_dependent_record(weights):

return data_matrix[0]*weights[0] + data_matrix[1]*weights[1] + data_matrix[2]*weights[2]

def generate_random_weights(bias=None, val1 = None):

if not val1:

val1 = np.random.random()

w = np.random.random()

val2 = (1 - val1)*w

val3 = (1 - val1)*(1-w)

if bias:

val1 *= bias[0]

val2 *= bias[1]

val3 *= bias[2]

return val1, val2, val3

r_values = np.linspace(0.1, 1, 40)

rowspace = [generate_linearly_dependent_record(generate_random_weights(val1 = r_values[i] )) for i in range(40)]

fig, ax = plt.subplots(4, 10, figsize = (12,5) )

for i in range(10):

ax[0, i].imshow(convert_to_color(rowspace[i]))

ax[0, i].set_axis_off()

ax[1, i].imshow(convert_to_color(rowspace[10+i]))

ax[1, i].set_axis_off()

ax[2, i].imshow(convert_to_color(rowspace[20+i]))

ax[2, i].set_axis_off()

ax[3, i].imshow(convert_to_color(rowspace[30+i]))

ax[3, i].set_axis_off()

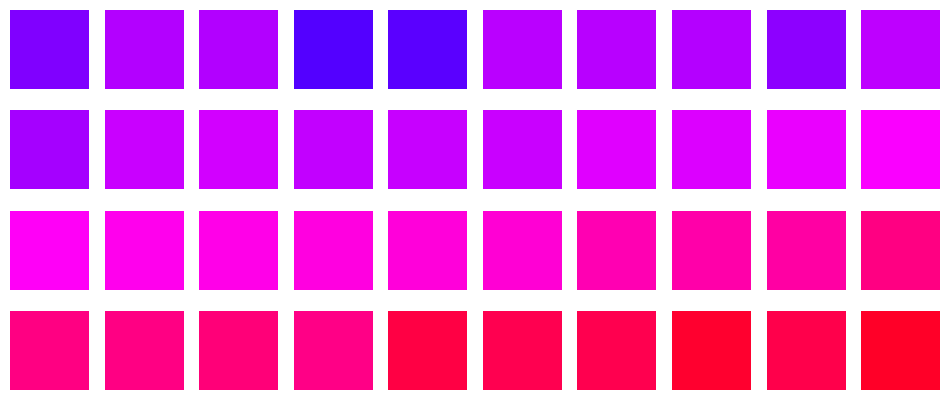

As you can see, we have generated composite colours using a linear combination of these primary colours. So, these generated colours can be considered as a subset of the row space of the primary colours. These sample colors have all the intensities of all the three red, green and blue channels. Now, let's explore what if we remove the green channel and add a linear combination of the red and blue channel.

data_matrix = np.array([

[1, 0, 0], # Red channel

[2, 0, 3], # Linear combination of the red and blue channel

[0, 0, 1] # Blue channel

])

r_values = np.linspace(0.1, 1, 40)

rowspace = [generate_linearly_dependent_record(generate_random_weights(val1 = r_values[i] )) for i in range(40)]

As expected, now all these colors are missing the intensities of the green channel. Let's explore what happens when we remove the red channel.

As you might have already guessed, the red channel intensities are missing now. So when the colours are linearly dependent on the intensities of the primary colour channels, dropping one or more channels will result in a subspace that is spanned only by the active channel. From the context of row space, it can be observed that when our records were linearly independent, the subspace consisted of samples that expressed themselves through all the colour channels. Whereas when a primary colour channel was removed, and a composite colour channel comprising of the linear combination of the active channels was introduced, the observed effect due to the introduction of the new channel was null in the sense that the effect could have been produced by those channels alone without the introduction of the new composite channel.

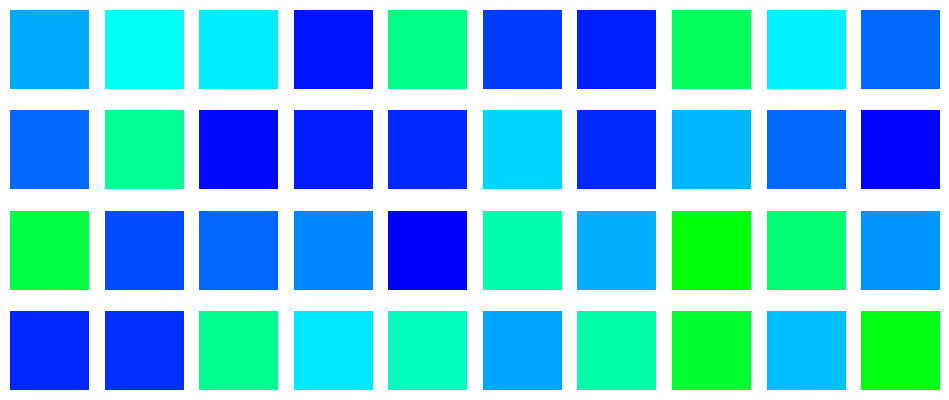

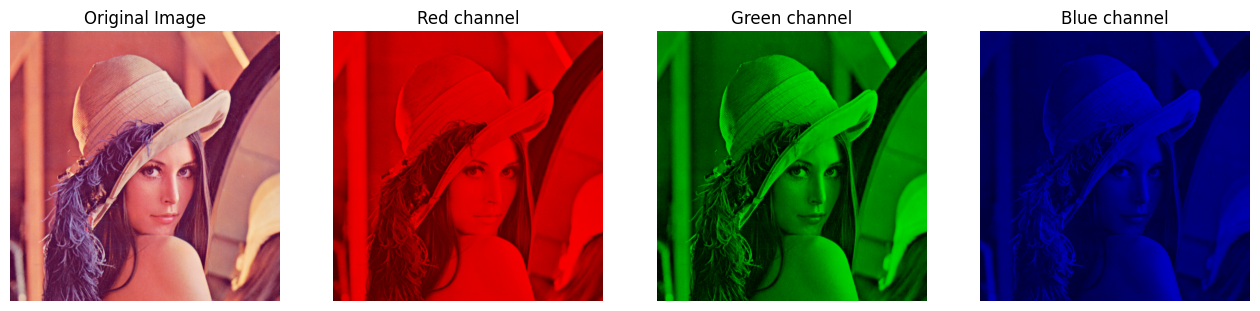

The same thing can be conveyed from the perspective of column space. Each column vector of the data matrix represents the primary colour channels. Let's look at an image and it's decomposition into the 3 primary colour channels

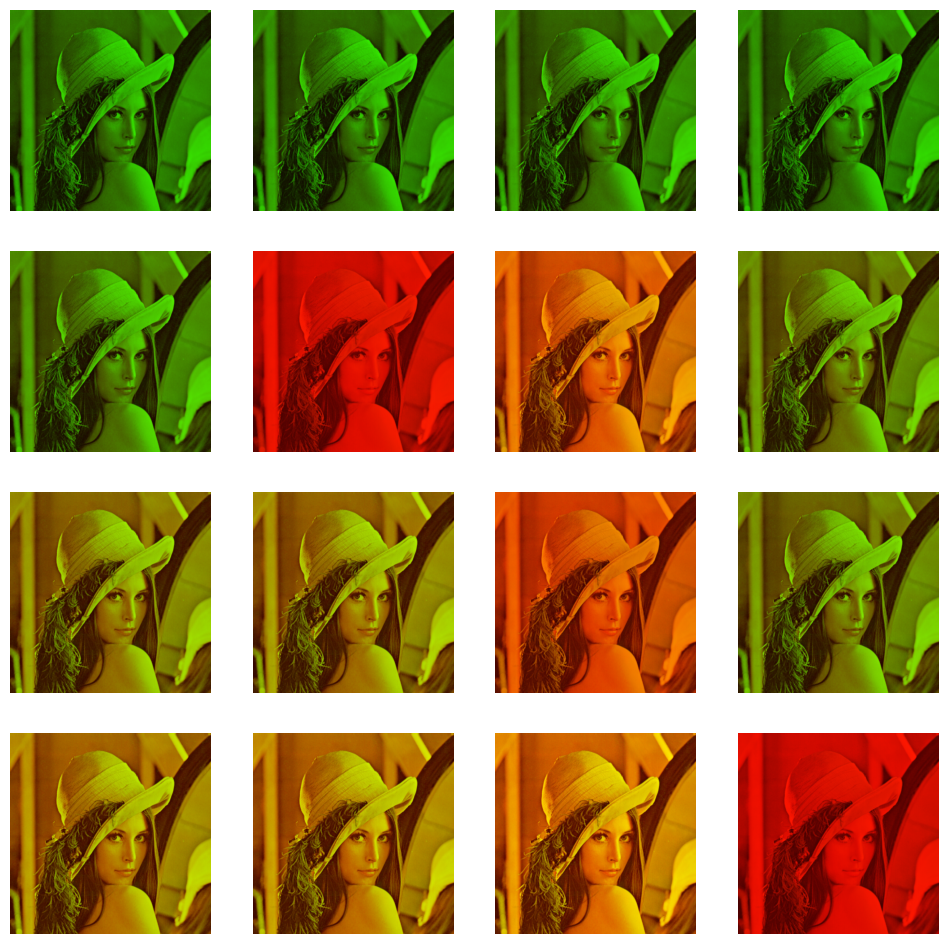

If we consider the red channel, each entry of the red channel matrix is the intensity of the red corresponding to the pixel coordinate of the original image. Hence, each channel matrix is an aggregation of the individual channel intensities corresponding to the pixel coordinates of the original image. Therefore, just like the row space, we can imagine the outputs when a linear combination of the channels is introduced.

As expected, the new features don't add any value, as the same can be achieved by tuning the intensities of each of the individual channels. While we look at some of the samples from the column space, we can see that since we considered all the primary colour channels while constructing the new features, they have been found to express themselves through all the various intensities of red, green and blue. And similarly, just like before, we can observe the effect when we remove the blue channel and construct new features based on only red and green.

No surprise, we can see that the effect of blue has been nullified. This marks the end, and I am glad to share some of my insights about linear algebra. If you think this helps, please like it and leave a comment. Let me know if you want me to cover any other topics. If you find any discrepancies, please don't hesitate to point them out, as I love learning. If you want to reproduce the results, follow this link to access my jupyter notebook

Subscribe to my newsletter

Read articles from Rudra Sarkar directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by