Container Networking Explained (Part III)

Ranjan Ojha

Until now, we have had 2 containers running in the system. However, the keen-eyed among you might have noticed that we have not made much use of iptables. This is why we still need to know both the IP and the port of the services running inside the containers to reach them from the host. And, sadly, we still can't reach the services from outside without significant changes to our network configuration itself. This post aims to change all of that.

The work that we are doing right now is generally done by docker-proxy and kube-proxy in Docker and Kubernetes respectively.

Wait, does this mean I can access services running inside Docker containers without port mapping? Yes, you can. Just as we can reach our services when we know the IP and port, you can reach services running inside Docker containers from the host system. The same holds on Kubernetes: from a Kubernetes host node, we can reach pod services without exposing them through a service. If you add a service, you can reach them from any node.

In Docker, port mapping is the process of exposing container services on a specific port of the host. The same is done in Kubernetes using nodePort. Although the basic idea is the same, the execution differs between the two.

Docker uses a proxy process that binds to the host port and proxies all incoming network traffic to the container port. On Kubernetes, however, you might notice that no equivalent proxy process is running on the nodes. You can reach the service on the exposed Node Port as expected, but you won't find any process listening on that port. This difference comes from how the two proxy packets. By default, kube-proxy operates in IPtables mode, while the Docker daemon is configured to run its proxy in userland mode. Yes, that does mean Docker is equally capable of using IPtables for in-kernel proxying rather than a userland proxy, but it prefers userland mode. In fact, kube-proxy also has a mode that uses IPVS instead of IPtables.

Note: if you are running an old version of Kubernetes, you might also encounter a kube-proxy process on your host system. This is a legacy system that works similarly to docker-proxy. The reason Docker still continues to use this system is simply because of the architectural differences between the 2 systems.

Note: In this blog, I will be making extensive use of IPtables. At least a basic understanding of IPtables is recommended to understand what the rules are doing. I recommend going through my post on basic IPtables for Container Networking if you aren't familiar with IPtables yet.

Getting up to speed

As before, we will quickly set up 2 containers using the IP addresses we chose previously. To understand in detail what each of these commands is doing, please refer to the earlier posts.

| Namespace | IP |
| --- | --- |
| Host | 172.18.0.1/24 |
| Container-1 | 172.18.0.2/24 |
| Container-2 | 172.18.0.3/24 |

fedora@localhost:~$ sudo ip netns add container-1

fedora@localhost:~$ sudo ip netns exec container-1 ./echo-server

fedora@localhost:~$ sudo ip netns add container-2

fedora@localhost:~$ sudo ip netns exec container-2 ./echo-server

Note: the echo-server is the same Go server I have been using since the very first post. You can also run a Python web server instead by replacing ./echo-server with python -m http.server 8000.

fedora@localhost:~$ sudo ip netns exec container-1 ip link set lo up

fedora@localhost:~$ sudo ip netns exec container-2 ip link set lo up


fedora@localhost:~$ sudo ip link add veth1 type veth peer name vethc1

fedora@localhost:~$ sudo ip link add veth2 type veth peer name vethc2

fedora@localhost:~$ sudo ip link set vethc1 netns container-1

fedora@localhost:~$ sudo ip link set vethc2 netns container-2

fedora@localhost:~$ sudo ip link add cont-br0 type bridge

fedora@localhost:~$ sudo ip link set veth1 master cont-br0

fedora@localhost:~$ sudo ip link set veth2 master cont-br0

fedora@localhost:~$ sudo ip a add 172.18.0.1/24 dev cont-br0

fedora@localhost:~$ sudo ip netns exec container-1 ip a add 172.18.0.2/24 dev vethc1

fedora@localhost:~$ sudo ip netns exec container-2 ip a add 172.18.0.3/24 dev vethc2

fedora@localhost:~$ sudo ip link set veth1 up

fedora@localhost:~$ sudo ip link set veth2 up

fedora@localhost:~$ sudo ip link set cont-br0 up

fedora@localhost:~$ sudo ip netns exec container-1 ip link set vethc1 up

fedora@localhost:~$ sudo ip netns exec container-2 ip link set vethc2 up

fedora@localhost:~$ sudo ip netns exec container-1 ip r add default via 172.18.0.1 dev vethc1 src 172.18.0.2

fedora@localhost:~$ sudo ip netns exec container-2 ip r add default via 172.18.0.1 dev vethc2 src 172.18.0.3

fedora@localhost:~$ sudo iptables -t nat -A POSTROUTING -s 172.18.0.0/24 ! -o cont-br0 -j MASQUERADE
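Most of the commands above repeat once per container with only the index and the IP changing. As a rough sketch of that pattern, the hypothetical helper `container_cmds` below only prints the per-container commands so they can be reviewed first. It assumes container N gets the address 172.18.0.(N+1), as in the table above, and that the cont-br0 bridge has already been created. Starting the echo-server is left out.

```shell
#!/bin/sh
# container_cmds N: print (do not run) the setup commands for container-N.
# Hypothetical helper; the naming scheme follows the commands in this post.
container_cmds() {
    n=$1
    ns="container-$n"
    ip="172.18.0.$((n + 1))"   # assumption: container N uses host part N+1
    cat <<EOF
ip netns add $ns
ip netns exec $ns ip link set lo up
ip link add veth$n type veth peer name vethc$n
ip link set vethc$n netns $ns
ip link set veth$n master cont-br0
ip netns exec $ns ip a add $ip/24 dev vethc$n
ip link set veth$n up
ip netns exec $ns ip link set vethc$n up
ip netns exec $ns ip r add default via 172.18.0.1 dev vethc$n src $ip
EOF
}

# Print the commands for both containers used in this post.
for i in 1 2; do
    container_cmds "$i"
done
```

The printed lines need root to actually run, so treat the output as a checklist rather than something to pipe blindly into a shell.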

Configuration

Before we start, let's make sure that we understand the new configuration we are trying to achieve.

| Container | Socket | Host Port |
| --- | --- | --- |
| container-1 | 172.18.0.2:8000 | 8081 |
| container-2 | 172.18.0.3:8000 | 8082 |

We currently have a web server running on port 8000 in each of our containers. Now we want to expose these containers on the host on ports 8081 and 8082 respectively.

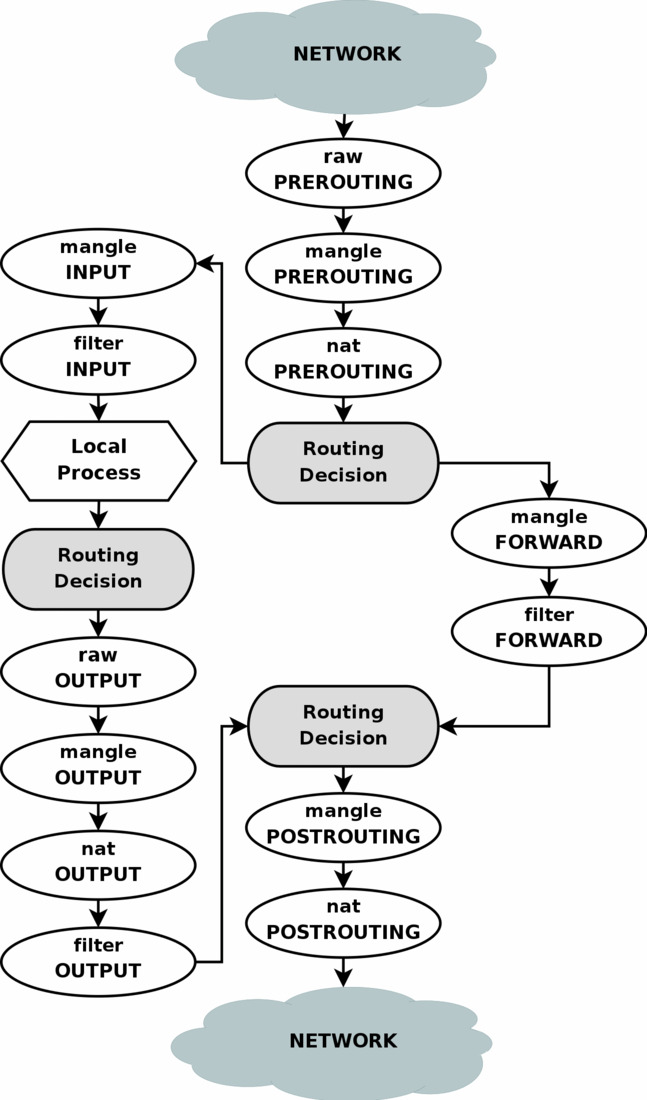

I will also put up this chart courtesy of frozentux.net which will be very handy to understand the rules we are about to make.

Adding PREROUTING nat rule

Consulting the above chart, if you focus on the very first section, you can see that as soon as a packet arrives on our device, we have the option to perform some extra processing on it before the device makes any routing decision. This is where we will intercept packets, because once the routing decision has been made, the packet is already destined either to be consumed by a local process (the left branch) or to be forwarded (the right branch). What we really want is for packets addressed to our device (which would have taken the left branch) to be forwarded to our container instead (the right branch). Hence there is no better place to hook than PREROUTING, and since we are doing NAT we select the nat table. Since we are changing the destination address, this is called DNAT.

fedora@localhost:~$ sudo iptables -t nat -A PREROUTING -p tcp --dport 8081 -j DNAT --to-destination 172.18.0.2:8000

fedora@localhost:~$ sudo iptables -t nat -A PREROUTING -p tcp --dport 8082 -j DNAT --to-destination 172.18.0.3:8000

With these rules, any incoming packet on port 8081 is redirected to 172.18.0.2:8000, and any incoming packet on port 8082 to 172.18.0.3:8000.
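Both rules have the same shape and differ only in the host port and the container socket. A small sketch of that idea: the hypothetical helper `dnat_rule` prints the rule for one mapping, and the loop feeds it the mapping table from the Configuration section. Nothing is executed against iptables here; the output is just text.

```shell
#!/bin/sh
# dnat_rule CHAIN HOST_PORT CONTAINER_SOCKET
# Print (do not run) the DNAT rule for one port mapping.
# Hypothetical helper, not part of iptables itself.
dnat_rule() {
    printf 'iptables -t nat -A %s -p tcp --dport %s -j DNAT --to-destination %s\n' \
        "$1" "$2" "$3"
}

# One "host_port container_socket" pair per line, as in the table above.
while read -r host_port target; do
    dnat_rule PREROUTING "$host_port" "$target"
done <<'EOF'
8081 172.18.0.2:8000
8082 172.18.0.3:8000
EOF
```

The chain is a parameter so the same helper can print rules for any nat chain that accepts DNAT.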

However, if we try to make a curl request from the host,

fedora@localhost:~$ curl localhost:8081

curl: (7) Failed to connect to localhost port 8081 after 8 ms: Couldn't connect to server

Our request fails. If you understand the above diagram of iptables, this is expected behavior. For everyone else, I would like to draw your attention to the left branch. There is a box for Local Process. Packets that originate from an external source either flow down the right path and get forwarded, or take the left path and end up at the Local Process. But packets generated by a Local Process, our curl request here, for instance, continue down the left branch from Local Process and never pass through PREROUTING. So our DNAT rule never got a chance to act on the packet from curl. However, this does mean that if anyone were to send a request to port 8081 from outside, they would be able to reach the container.
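The reasoning above can be written down as a tiny lookup table. This is only a model of the chart, not something that inspects the kernel: the hypothetical function `nat_hooks` lists which nat chains a new connection passes through depending on where it originates (the nat INPUT chain is left out for brevity), and `rule_is_seen` checks whether a rule in a given chain would ever be consulted.

```shell
#!/bin/sh
# nat_hooks ORIGIN: nat-table chains traversed by the first packet of a connection.
# A simplified model of the packet-flow chart; origin names are made up for this sketch.
nat_hooks() {
    case $1 in
        external-to-local)  echo "PREROUTING" ;;
        external-forwarded) echo "PREROUTING POSTROUTING" ;;
        local-process)      echo "OUTPUT POSTROUTING" ;;
        *) echo "unknown origin: $1" >&2; return 1 ;;
    esac
}

# rule_is_seen CHAIN ORIGIN: succeed if packets from ORIGIN pass through CHAIN.
rule_is_seen() {
    case " $(nat_hooks "$2") " in
        *" $1 "*) return 0 ;;
        *) return 1 ;;
    esac
}

for origin in external-forwarded local-process; do
    if rule_is_seen PREROUTING "$origin"; then
        echo "$origin: PREROUTING DNAT rule applies"
    else
        echo "$origin: PREROUTING DNAT rule is never consulted"
    fi
done
```

In this model, a request from another machine hits PREROUTING, while curl running on the host itself starts at OUTPUT, which matches the failure seen above.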

In my current setup, my host system is a VM hosted on my actual Linux machine. So to simulate an external request to the host machine, I can send a curl request from my actual device.

➜ docker-network curl -s 192.168.122.240:8081/echo | jq

{

"header": {

"Accept": [

"*/*"

],

"User-Agent": [

"curl/8.9.0"

]

},

"body": "Echo response"

}

And as expected, I got the response.

Connecting the Local Process

For connecting the host device, the process is not that different from what we have already done; we just need to hook into a different chain. From the figure above, it is clear that the nearest chain where we can do this is the OUTPUT chain of the nat table.

fedora@localhost:~$ sudo iptables -t nat -A OUTPUT -p tcp --dport 8081 -j DNAT --to-destination 172.18.0.2:8000

fedora@localhost:~$ sudo iptables -t nat -A OUTPUT -p tcp --dport 8082 -j DNAT --to-destination 172.18.0.3:8000

and...

Well, depending upon your configuration, you might still be met with an issue like I was. By default, the Linux kernel will not route packets with a source of 127.0.0.1, i.e., localhost, so the packet just gets dropped. We thus need to allow routing of packets with a localhost source, which is done by enabling net.ipv4.conf.all.route_localnet, much as we did for net.ipv4.ip_forward. The same techniques as for ip_forward apply: for a temporary change use sysctl -w, and for a permanent change add it to /etc/sysctl.d/. I will be going for the permanent option:

fedora@localhost:~$ echo "net.ipv4.conf.all.route_localnet=1" | sudo tee -a /etc/sysctl.d/10-ip-forward.conf

net.ipv4.conf.all.route_localnet=1
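Files under /etc/sysctl.d/ are read at boot, so the new value is not active until the configuration is reloaded, for example with `sudo sysctl --system`. To check the running value without extra tools, the sketch below maps a sysctl key to its file under /proc/sys and reads it. The helper name `sysctl_path` is made up for this example, and the simple dot-to-slash mapping assumes the key contains no interface name with dots in it.

```shell
#!/bin/sh
# sysctl_path KEY: map a sysctl key to its file under /proc/sys.
# Hypothetical helper; assumes every dot in KEY is a path separator.
sysctl_path() {
    printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr . /)"
}

key=net.ipv4.conf.all.route_localnet
path=$(sysctl_path "$key")

# Reading the value does not need root; changing it does.
if [ -r "$path" ]; then
    echo "$key = $(cat "$path")"
else
    echo "$key is not readable on this system"
fi
```

A value of 1 means packets with a 127.0.0.0/8 source are allowed to be routed.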

After applying this configuration, we are almost done. Remember that this packet originated from localhost, so it has the source IP of localhost. If the packet reaches our container with a localhost source, the application will try to send its reply to localhost, which fails because, from the container's point of view, localhost is the container itself and not our host. So we also need to MASQUERADE the packets exiting our device.

fedora@localhost:~$ sudo iptables -t nat -A POSTROUTING ! -s 172.18.0.0/24 -o cont-br0 -j MASQUERADE

This rule MASQUERADES all the packets that are going into the cluster but not originating from the cluster itself. Now finally our curl to localhost also works.

fedora@localhost:~$ curl -s localhost:8081/echo | jq

{

"header": {

"Accept": [

"*/*"

],

"User-Agent": [

"curl/8.6.0"

]

},

"body": "Echo response"

}
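To make the match condition of that POSTROUTING rule concrete, here is a small model of it in plain shell arithmetic. It does not touch iptables. The functions `ip_to_int`, `in_cidr` and `needs_masquerade` are hypothetical helpers, and 192.168.122.1 is an assumed address for an external client.

```shell
#!/bin/sh
# ip_to_int A.B.C.D: convert a dotted quad to an integer.
# The body runs in a subshell so the IFS change stays local.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# in_cidr IP NET/PREFIX: succeed if IP lies inside the network.
in_cidr() {
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    prefix=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - $prefix)) & 0xFFFFFFFF ))
    [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# needs_masquerade SRC OUT_IF: mirrors "! -s 172.18.0.0/24 -o cont-br0".
needs_masquerade() {
    [ "$2" = cont-br0 ] && ! in_cidr "$1" 172.18.0.0/24
}

# 192.168.122.1 is an assumed external client address.
for src in 127.0.0.1 192.168.122.1 172.18.0.3; do
    if needs_masquerade "$src" cont-br0; then
        echo "$src -> source rewritten to the cont-br0 address"
    else
        echo "$src -> source left unchanged"
    fi
done
```

Under this model, traffic from localhost and from outside gets the bridge address as its source, while container-to-container traffic keeps its original source.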

If we consider Docker in single-host mode, we are done. Just one point to note: we don't have any processes bound to ports 8081 or 8082, so in theory any process is free to bind to them. However, any traffic coming to these ports is directed into the containers, so such a process would never receive the packets. You can try this by running a web server on your host on one of the colliding ports. You will receive replies from your container only.

Adding Kubernetes Service

While in Docker you communicate with the processes directly, in Kubernetes you don't. You expose the pods using a service, and the service load balances traffic across your pods. This load balancing isn't typical round-robin load balancing; it is closer to random selection. Also, services are always allocated IPs from a CIDR pool different from the pod IP CIDR pool. We will see all of that now by implementing our own service that load balances traffic to our pods.

Allocating Virtual IP

Until this point, we have been doing manual IP allocation to the containers. We do the same for our service. This time following Kubernetes we select a different CIDR range, 172.18.1.0/24. We also only expose port 8083 and will be load balancing the requests to our 2 pods.

| Service | IP | Host Port | Containers |
| --- | --- | --- | --- |
| svc | 172.18.1.1 | 8083 | container-1, container-2 |

Adding IPtables rules

Until now, we have had a single rule do all the filtering and routing needed. This created some duplication, like when we had the same routing rules in both the OUTPUT and PREROUTING chains. For the service, we will change things a little: we will create our own chains and reduce the duplication.

Starting off, we will create a SVC chain. This chain will contain all the necessary filtering and routing for services.

fedora@localhost:~$ sudo iptables -t nat -N SVC

We now create another chain for our container service,

fedora@localhost:~$ sudo iptables -t nat -N SVC-CNT

Next, we add jumps from the PREROUTING and OUTPUT chains to our service chain.

fedora@localhost:~$ sudo iptables -t nat -A PREROUTING -j SVC

fedora@localhost:~$ sudo iptables -t nat -A OUTPUT -j SVC

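Next is the rule that sends traffic destined for our virtual service IP 172.18.1.1/32 on port 8083 to the SVC-CNT chain. Note that we match on the destination address (-d), since the service IP is where the packet is headed.

fedora@localhost:~$ sudo iptables -t nat -A SVC -d 172.18.1.1/32 -p tcp --dport 8083 -j SVC-CNT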

At this point, we just need to route the packets randomly to the containers. However, before that, I will also add a mark rule. This rule will allow me to later on filter out packets that need to have MASQUERADE rule applied, without having to duplicate all the filters again.

fedora@localhost:~$ sudo iptables -t nat -A SVC-CNT -j MARK --set-xmark 0x200

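Then come the rules that route to the containers. The first rule matches half of the new connections at random; whatever it does not match falls through to the second rule, so each container receives roughly half of them.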

fedora@localhost:~$ sudo iptables -t nat -A SVC-CNT -p tcp -m statistic --mode random --probability 0.5 -j DNAT --to-destination 172.18.0.2:8000

fedora@localhost:~$ sudo iptables -t nat -A SVC-CNT -p tcp -j DNAT --to-destination 172.18.0.3:8000
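With more than two backends, the probabilities have to change from rule to rule, because each rule only sees the traffic that all earlier rules let through. For N backends, giving rule i the probability 1/(N - i + 1) and leaving the last rule unconditional gives every backend an equal share. The sketch below prints such a rule set. `svc_backend_rules` is a hypothetical helper and 172.18.0.4 is an assumed third container that does not exist in this post's setup.

```shell
#!/bin/sh
# svc_backend_rules CHAIN BACKEND...
# Print (do not run) DNAT rules that spread new connections evenly.
# Hypothetical helper; only the rule shape is taken from this post.
svc_backend_rules() {
    chain=$1
    shift
    remaining=$#
    for backend in "$@"; do
        if [ "$remaining" -gt 1 ]; then
            # This rule only sees what earlier rules skipped, so it must
            # claim 1/remaining of that traffic (10 decimal places).
            prob=$(printf '0.%010d' "$((10000000000 / remaining))")
            printf 'iptables -t nat -A %s -p tcp -m statistic --mode random --probability %s -j DNAT --to-destination %s\n' \
                "$chain" "$prob" "$backend"
        else
            # Last backend: take everything that is left.
            printf 'iptables -t nat -A %s -p tcp -j DNAT --to-destination %s\n' \
                "$chain" "$backend"
        fi
        remaining=$((remaining - 1))
    done
}

# 172.18.0.4:8000 is an assumed third backend, for illustration only.
svc_backend_rules SVC-CNT 172.18.0.2:8000 172.18.0.3:8000 172.18.0.4:8000
```

For three backends the first rule takes one third of the connections, the second takes half of the remaining two thirds, and the last takes the rest, so each ends up with about a third.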

And finally the MASQUERADE rule,

fedora@localhost:~$ sudo iptables -t nat -A POSTROUTING -m mark --mark 0x200 -j MASQUERADE --random-fully
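The mark set in SVC-CNT and the mark matched in POSTROUTING have to agree bit for bit. As I understand the iptables-extensions documentation, `--set-xmark value[/mask]` clears the bits in mask and XORs value in, and `--mark value[/mask]` ANDs the packet mark with mask before comparing, with the mask defaulting to 0xFFFFFFFF in both cases. The sketch below models that arithmetic in plain shell; `set_xmark` and `mark_matches` are hypothetical helper names.

```shell
#!/bin/sh
# set_xmark OLD VALUE [MASK]: packet mark after "-j MARK --set-xmark VALUE[/MASK]".
set_xmark() {
    mask=${3:-0xFFFFFFFF}
    printf '0x%x\n' "$(( ($1 & ~$mask) ^ $2 ))"
}

# mark_matches MARK VALUE [MASK]: succeed if "-m mark --mark VALUE[/MASK]" matches.
mark_matches() {
    mask=${3:-0xFFFFFFFF}
    [ $(( $1 & $mask )) -eq $(( $2 )) ]
}

set_xmark 0x1 0x200          # prints 0x200: without a mask the whole mark is replaced
set_xmark 0x1 0x200 0x200    # prints 0x201: only bit 0x200 is set, other bits survive

if mark_matches 0x201 0x200; then
    echo "exact match"
else
    echo "no exact match: other bits are set, a /0x200 mask would be needed"
fi
```

With the unmasked forms used in this post, the two rules line up as long as nothing else on the host sets marks on the same packets.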

Now, if you curl on your host node,

fedora@localhost:~$ curl -s 172.18.1.1:8083/echo | jq

{

"header": {

"Accept": [

"*/*"

],

"User-Agent": [

"curl/8.6.0"

]

},

"body": "Echo response"

}

What makes the IP virtual

Note how our device is responding to an IP address that isn't currently assigned to any network interface on the device. The IP is just an address used to dynamically map requests to our containers. Note that with just this virtual IP, we can't easily get north-south traffic, as we would have to make the same changes on every device in the network. If you are configuring your own cluster, this is possible, but not so much when you want to expose your service to devices outside your network.
