Mockree - AI Powered Job Interview Simulator

9th Tech

First off: if you get even a little nervous on camera, forget your words, or struggle to keep your composure because you're cursed with awareness of other people's gaze, then I strongly recommend this article.

Problem

We apply for jobs a lot. I personally live on LinkedIn and treat my Premium subscription like a rent bill. I've applied for thousands of software development jobs and been invited to more than a few interviews. With experience you get used to them and stop getting nervous, but your first few interviews will more often than not feel intimidating, especially when you're just starting out. I've been there, and I remember how many interviews I bottled for this exact reason.

Solution

One way to solve this very personal issue is effective practice, but practicing a realistic interview will most likely require more than just yourself. Needing an extra party is already reason enough for practice to feel like overkill, because that person also has to be knowledgeable enough to ask questions worthy of a quality interview. Overall, doing this properly takes a certain amount of dedication, and it can feel like too much effort considering how likely it is that you won't even get the job.

Well, Mockree is here to fix that: a web tool where you can practice mock interviews run by generative AI, which is not only well informed across basically every field but can also simulate real-life voices, so it doesn't feel like you're talking to a robot. I've taken a lot of pre-interviews where you have to record a video of yourself answering some questions and then send the file via a Dropbox link or something similar. With Mockree, companies using this method would not have to grade interviewees manually.

Mockree Source Code here ->

Recently, I decided to start posting more about tech as a developer. I created my LinkedIn and grew it to 5K, which really isn't a lot but is insane for a place like LinkedIn. Then I decided: heck, I could post videos too and help others the way tech YouTubers like Fireship, JSM, Web Prodigies and co. helped me become a better developer. So I created a YouTube channel as well, thought about a project to share, and decided on this one.

Why all the yap? Well, Mockree is going to be the first project I record a tutorial for on my channel. So if you want to learn how I built this application from scratch with Next 15, TypeScript, Tailwind CSS, Shadcn UI, Clerk, Drizzle and a few other tools, literally from an empty folder to deployment, then subscribe and click the bell icon on my very empty YouTube channel, because it's only a matter of time before I drop a full tutorial from start to finish. I learnt a lot working on this project, things only full-stack devs can relate to.

And since I will be posting a full tutorial on the development process, I deemed it unnecessary to bore my readers with technical code explanations; I'll save that for the GitHub README. Instead, let me give you the gist of the development process, what I learnt, and my general experience so far.

Development Process

Working on this project has been quite the adventure. Grab a coffee, get comfy, and let me take you through this wild ride of coding, problem-solving, and me occasionally facepalming myself.

Tech Stack

I had already decided I was going to post web development tutorials with Next.js, so, rather unsurprisingly, I went with these weapons:

Next.js 15 for the frontend (and a bit of backend magic)

Clerk for authentication

TypeScript (well, because I wanted the Shadcn dark mode feature in the app)

Tailwind CSS and ShadcnUI for styling (life's too short for vanilla CSS)

Drizzle ORM with PostgreSQL on NeonDB for data management

Google Gemini for the AI brains of the operation

Boilerplate

I ran create-next-app in my terminal, and we were clear for takeoff with the Next.js starter. I installed some dependencies and got a basic layout going. The real fun began when I started integrating Clerk for auth. That middleware dude can be a sly beast sometimes, especially since Clerk deprecated some familiar functions in their latest update, but fixing it was a no-brainer, and once I got it working it was smooth sailing.

Working with the experimental Clerk Elements feature was hella fun too. I always disliked Clerk for putting a watermark on the auth screen, but with this new feature they definitely cooked, even in experimental mode. Can't wait for the stable release.

AI Integration

Google Gemini wasn't and wouldn't be my first choice for building an app like this, but it was by far the most affordable (basically free), so it was perfect. Setting it up by following the docs was, within the scope of my use case, a walk in the park. I spent hours tweaking prompts to generate realistic interview questions based on job descriptions and experience levels (lol, who am I kidding, I generated them with ChatGPT).

The real struggle came when I needed to format the JSON output. The moment I saw the first properly formatted JSON response from the AI, I did a little victory dance in my chair.

npm install @google/generative-ai

    import { NextResponse } from 'next/server';
    import { GoogleGenerativeAI } from '@google/generative-ai';

    export async function POST(request: Request) {
      const apiKey = process.env.GEMINI_API_KEY;
      if (!apiKey) {
        return NextResponse.json({ error: 'API key not configured' }, { status: 500 });
      }

      const genAI = new GoogleGenerativeAI(apiKey);
      const model = genAI.getGenerativeModel({ model: "gemini-1.5-flash" });

      try {
        const { jobPosition, jobDesc, jobExperience } = await request.json();

        const InputPrompt = `Generate ${process.env.NEXT_PUBLIC_INTERVIEW_QUESTION_COUNT} interview questions for a
        ${jobPosition} position with ${jobExperience} years of experience. Job description: ${jobDesc}.
        Return only a JSON array of objects, each with 'question', 'answer', and 'difficulty' fields.`;

        const result = await model.generateContent(InputPrompt);
        const response = await result.response;
        let text = response.text();

        // Remove any markdown formatting if present
        text = text.replace(/```json\n?|\n?```/g, '').trim();

        // Validate JSON
        JSON.parse(text);

        return NextResponse.json({ result: text });
      } catch (error) {
        console.error('Error generating interview questions:', error);
        return NextResponse.json({ error: 'Failed to generate interview questions' }, { status: 500 });
      }
    }
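The route above only checks that the cleaned text parses as JSON. As a minimal sketch of going one step further, the helper below also checks that the result is an array whose items carry the three fields the prompt asks for. The name `parseInterviewQuestions` and the `InterviewQuestion` type are hypothetical and not taken from the Mockree source, and `difficulty` is assumed to be a string.

```typescript
// Shape the prompt asks the model to return (field names taken from the prompt text).
interface InterviewQuestion {
  question: string;
  answer: string;
  difficulty: string; // assumption: the model returns a label such as "easy"
}

// Hypothetical helper: strips optional markdown fences, parses, and checks the shape.
function parseInterviewQuestions(raw: string): InterviewQuestion[] {
  // Build the same fence-stripping pattern the route uses (three backticks).
  const fence = "`".repeat(3);
  const fencePattern = new RegExp(`${fence}json\\n?|\\n?${fence}`, "g");
  const cleaned = raw.replace(fencePattern, "").trim();

  const parsed: unknown = JSON.parse(cleaned); // throws SyntaxError on invalid JSON
  if (!Array.isArray(parsed)) {
    throw new Error("Expected a JSON array of questions");
  }

  return parsed.map((item: unknown, index: number) => {
    const q = item as Record<string, unknown> | null;
    if (
      typeof q !== "object" ||
      q === null ||
      typeof q.question !== "string" ||
      typeof q.answer !== "string" ||
      typeof q.difficulty !== "string"
    ) {
      throw new Error(`Question at index ${index} is missing a required field`);
    }
    return { question: q.question, answer: q.answer, difficulty: q.difficulty };
  });
}
```

Called inside the `try` block, a helper like this would send a malformed model response down the same `catch` path as a JSON syntax error.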

Database

Working with Drizzle ORM was new to me, since I was already familiar with Prisma, but this was my opportunity to learn something new, so choosing Drizzle was a no-brainer. There were moments when I subtly questioned my life choices over it. But honestly, now that I've gotten the hang of Drizzle, I can't say which is better, and I am not biased about tech tools (or maybe I am).

npm i -D drizzle-kit

    import { defineConfig } from 'drizzle-kit'

    const dbUrl = process.env.DRIZZLE_DB_URL; //removed NEXT_PUBLIC_

    if (!dbUrl) {
      throw new Error('Database URL is not defined');
    }

    export default defineConfig({
      schema: "./utils/schema.ts",
      dialect: 'postgresql',
      dbCredentials: {
        url: dbUrl,
      },
      verbose: true,
      strict: true,
    })

Setting up Neon was a breeze, as I expected: just create an account and they slap you with a free-tier 500 MB database for hobby projects, plug the connection string into your environment variables, and you're done.

UI/UX

I didn't have enough time to cook up a design in Figma first, so I kept the UI as simple as possible, using the most interesting components from Shadcn. Aceternity UI was particularly satisfying to work with; it can sometimes be a pain, but more like a pinch.

The Webcam

Adding webcam functionality for mock interviews was... an experience. Let's just say I now have a deep appreciation for browser permissions and the quirks of media devices, and for how scary that kind of power can feel. Seeing that first video feed come through, looking at my sleep-deprived face? Priceless.

npm install react-webcam

    const [webCamEnabled, setWebCamEnabled] = useState(false);

    {webCamEnabled ? (
      <CardContainer>
        <CardBody>
          <CardItem>
            Permissions Granted
            You can now proceed to the Interview
          </CardItem>
          <CardItem>
            {/* webcam component */}
            <Webcam
              onUserMedia={() => setWebCamEnabled(true)}
              onUserMediaError={() => setWebCamEnabled(false)}
              mirrored={true}
            />
          </CardItem>
          <div>
            <CardItem>
              Grant now →
              Start Interview
            </CardItem>
          </div>
        </CardBody>
      </CardContainer>
    ) : (
      <>
        <CardContainer>
          <CardBody>
            <CardItem>
              Let's Get Started
              Grant Webcam and Microphone Permissions
            </CardItem>
            <div>
              <CardItem>
                Allow
              </CardItem>
            </div>
          </CardBody>
        </CardContainer>
      </>
    )}

Challenges and Learnings

JSON Parsing

The AI responses needed some serious cleaning before they could be parsed. I spent an embarrassing amount of time debugging this. I was even looking for npm packages that could clean JSON, and I tried cleaning the JSON with Python (I wonder what I was thinking right there) because I was so fed up, only to realize that all I needed was a simple regex to remove some pesky markdown backticks. JavaScript is the best language in the world 🙂

State Management

Keeping track of interview progress, user answers, and AI feedback taught me a lot about state management in React. useEffect and useCallback became my best friends and occasional opps.

API Routes and Security

Moving from client-side database calls to API routes was a crucial step in securing the app. It was a good reminder that convenience shouldn't come at the cost of security, and inconvenienced I surely was.

Responsive Design is Key when it's Needed

Though the app is built primarily for use on a PC, the landing page wasn't. Building the landing page made me realize the importance of mobile-first design.

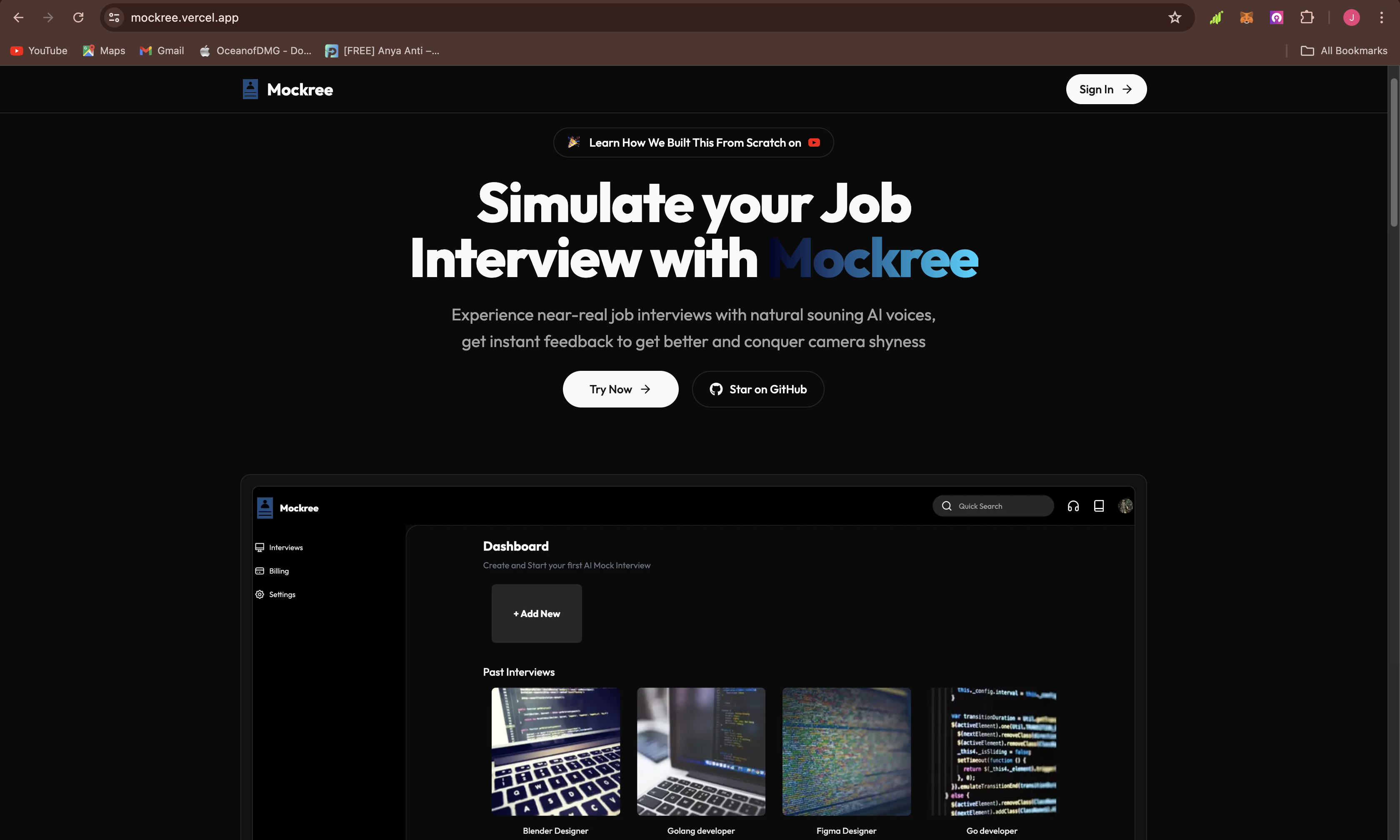

How To Use Mockree

I know some of you who are still reading are already asking how to use Mockree. Upon landing on the landing page (terrible pun intended), just click Sign In on the navbar to go to the auth page. At this stage I strongly advise using either the Google or GitHub sign-in.

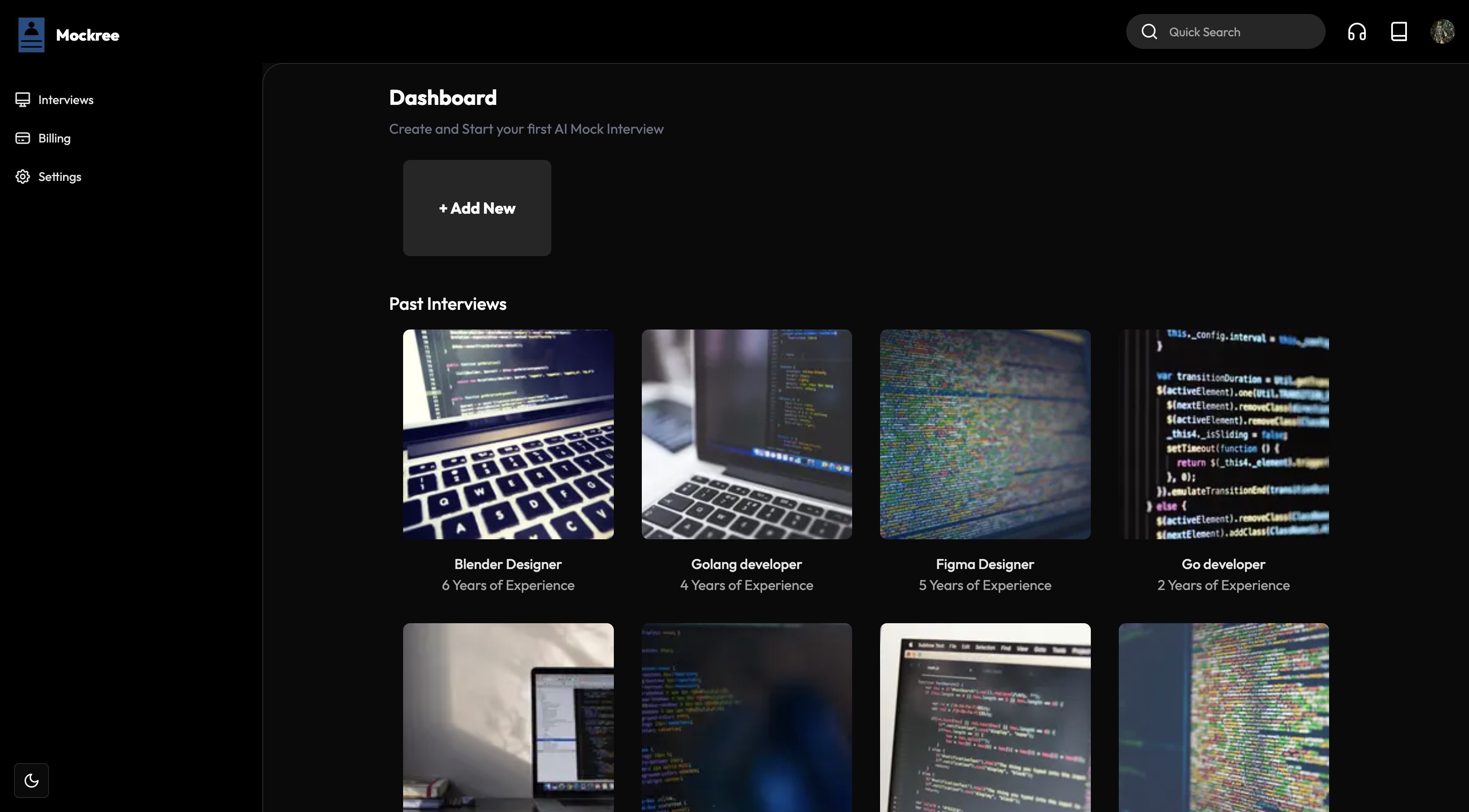

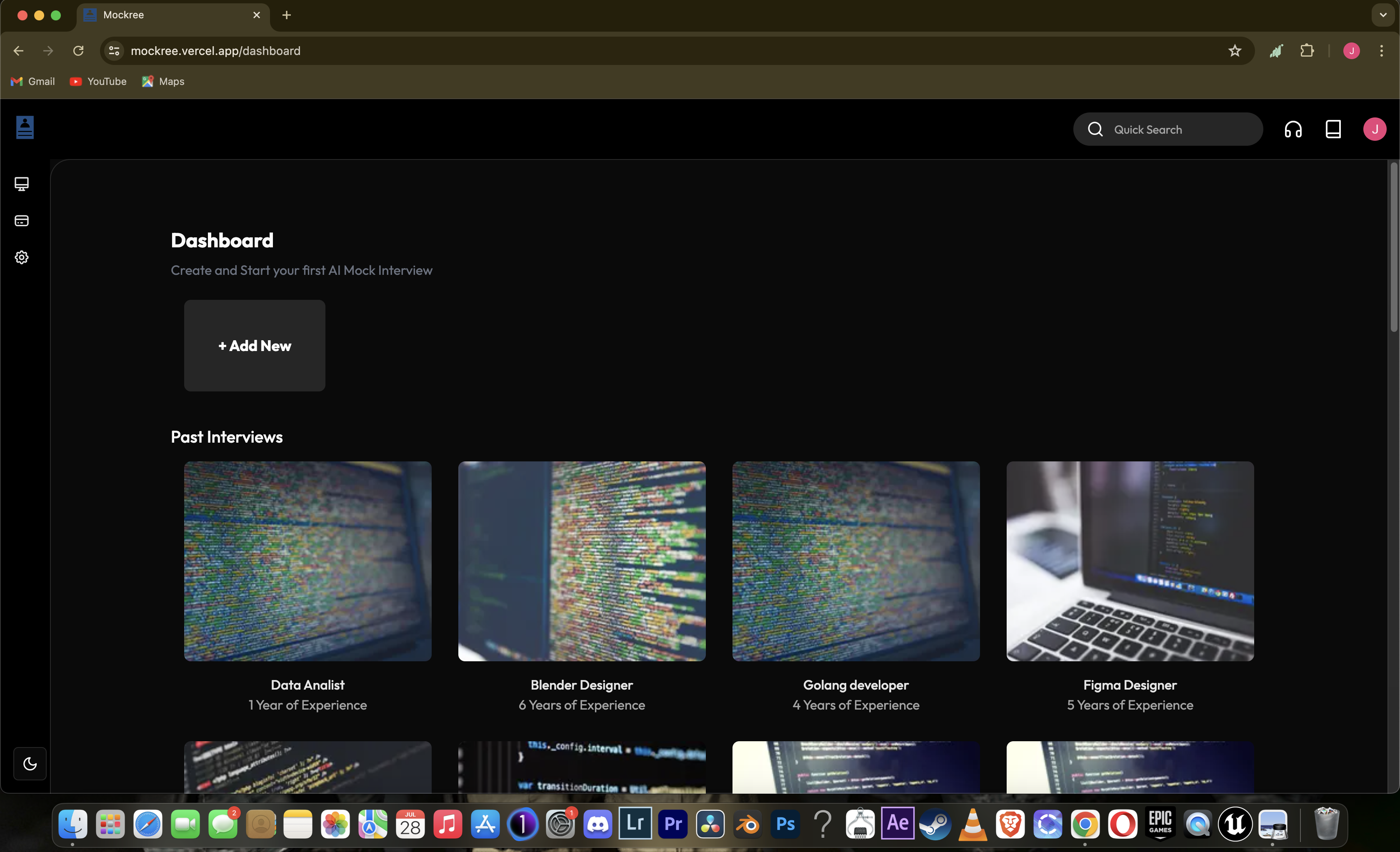

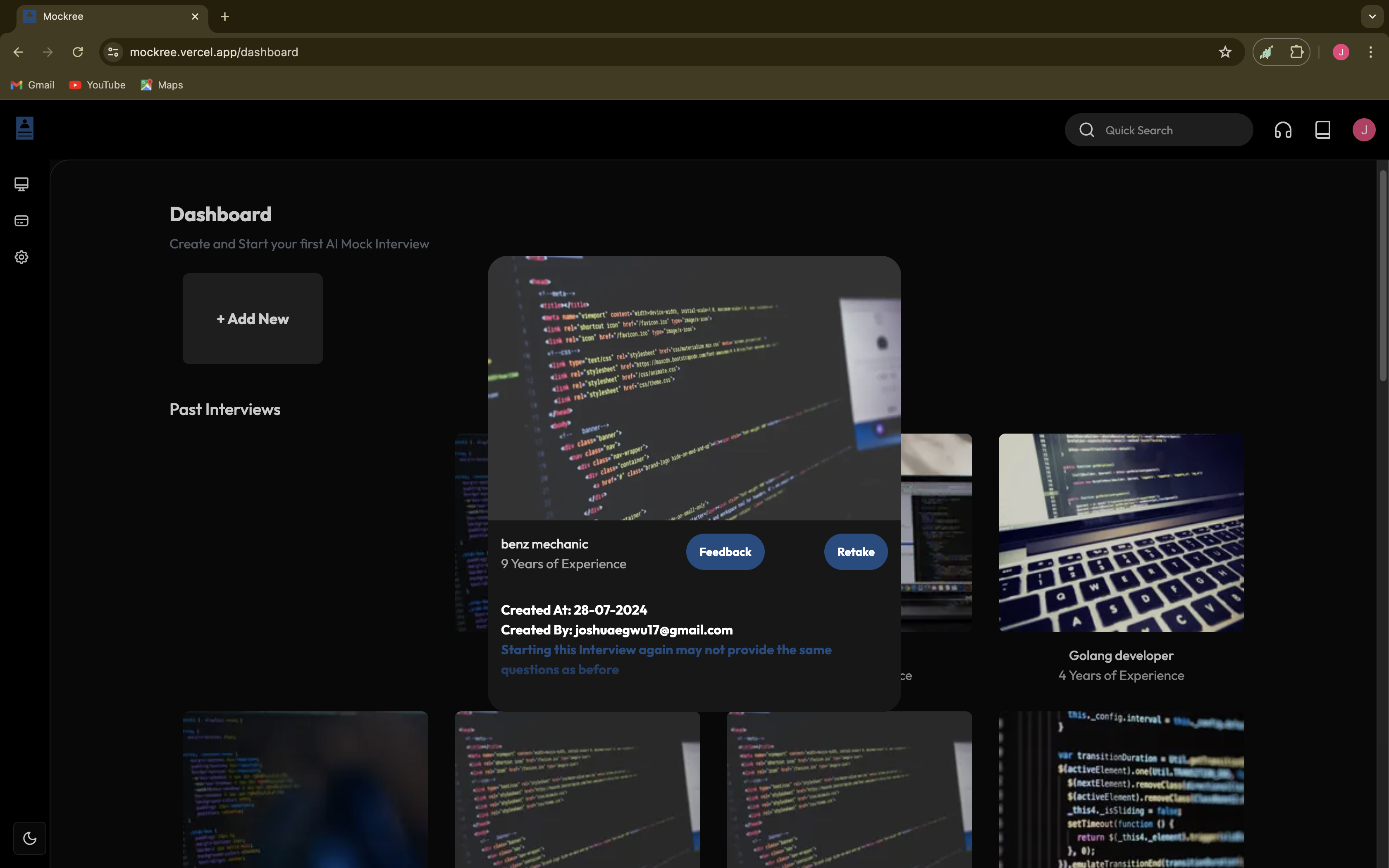

The Dashboard

As a new user, your dashboard may or may not be empty, depending on whether I remembered to fetch past interviews for the specific user rather than for all users 🤔 (it's literally a one-liner). Once you take an interview, it gets logged in the Past Interviews tab, where you can retake it or revisit your past feedback in case you want to take notes.

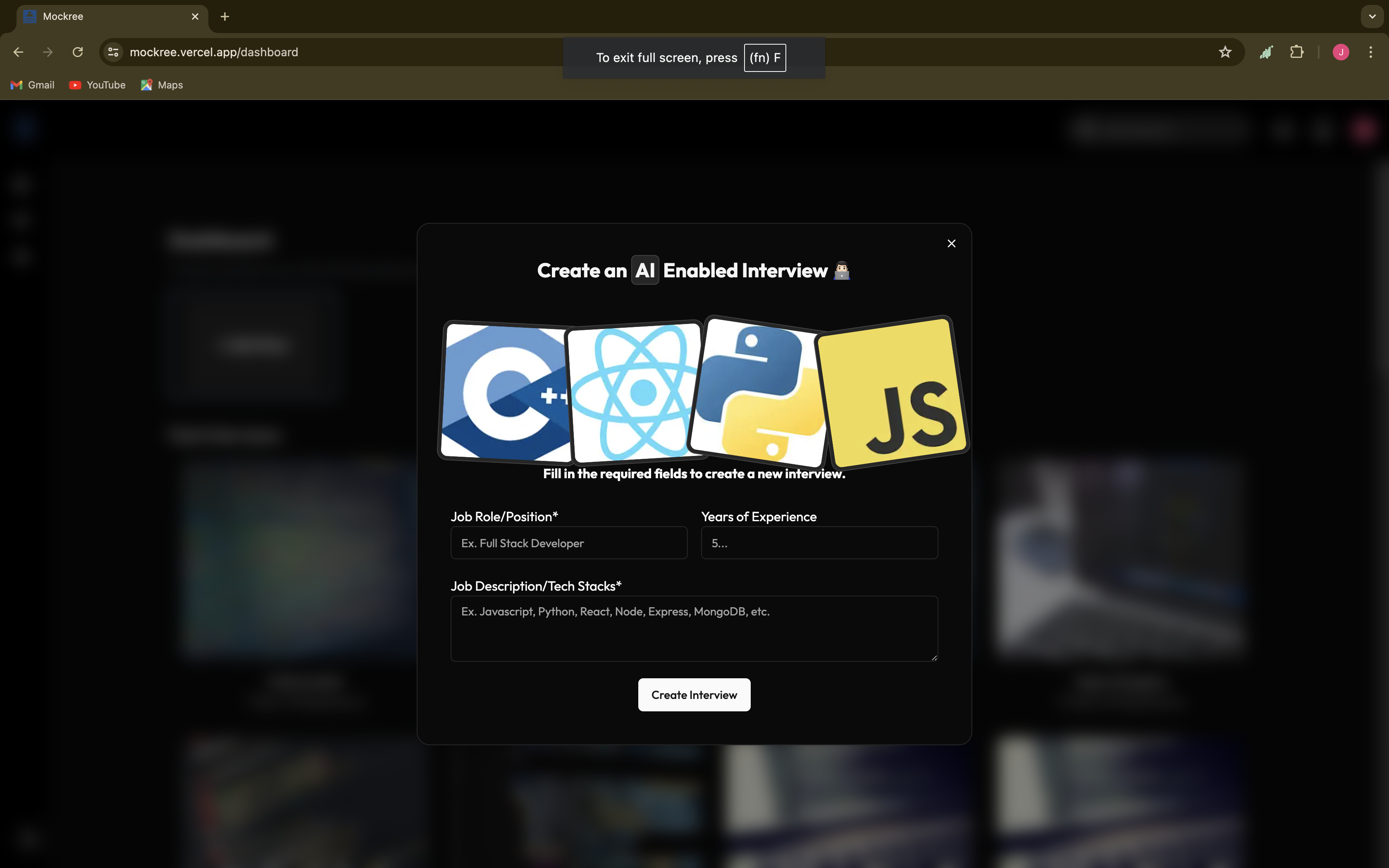

Start a new interview

On the dashboard page, there's a huge 'Add New' button you can click to start a new interview. Enter your desired details in the pop-up modal and create the interview.

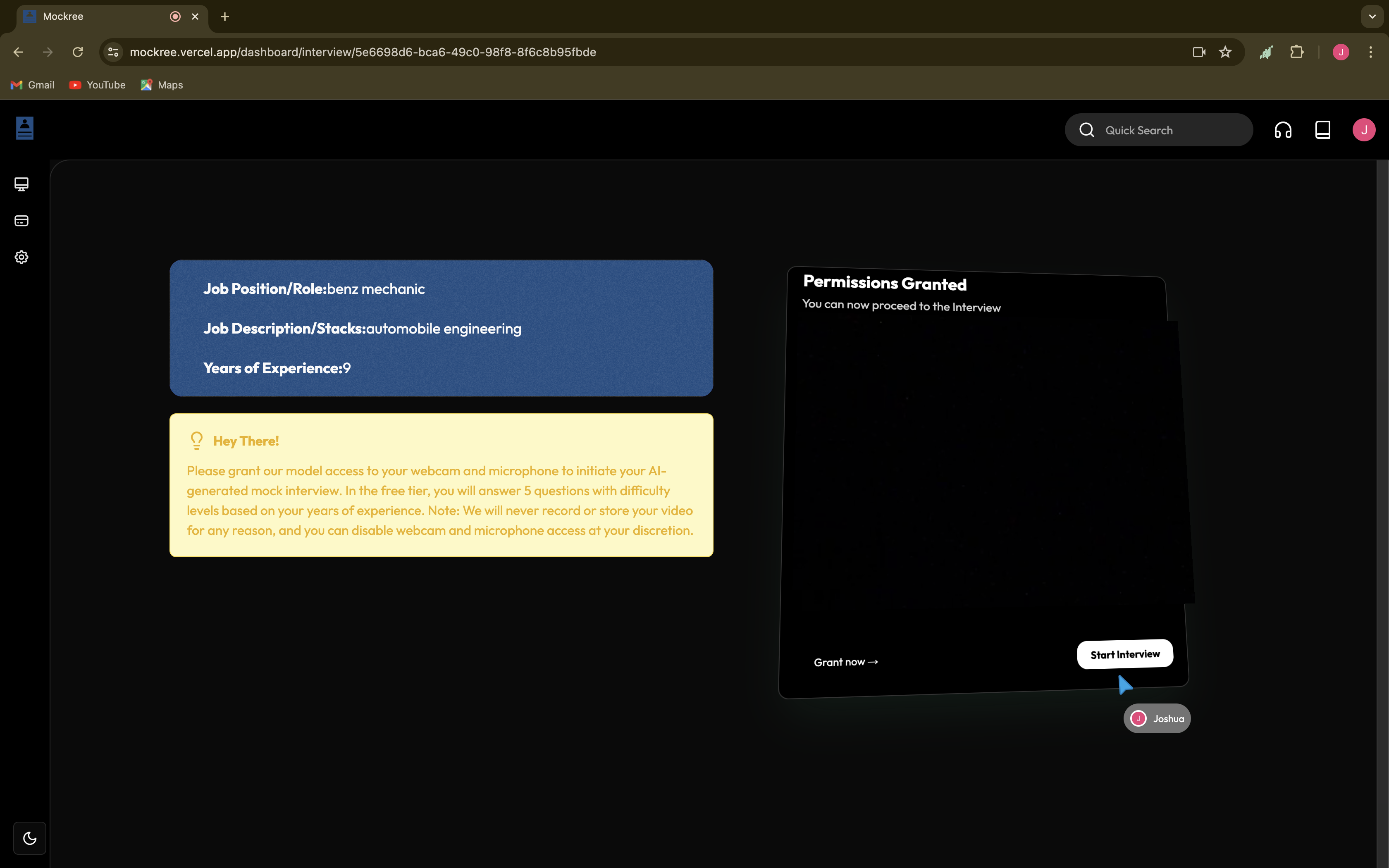

Permissions Screen

Here you get some cards showing the info you entered in the modal and another card for video capture permissions. Fun fact: it's just for the illusion of being recorded because, as stated on one of those cards, no video is actually recorded. It could be, but I didn't implement it because I don't think the 500 MB NeonDB instance would be enough to hold them all on my budget (which is $0, btw). Plus, it would be quite sus to think some random dude somewhere has recordings of you embarrassing yourself trying to remember the answer to "How to center a div".

Questions

After granting permissions and previewing your webcam, you enter the interview and see your questions. You always get 5 questions at a time; the number of questions can be increased in the billing section. You can click the little voice icon to play a text-to-speech version of the question. Eleven Labs, OpenAI, or basically any third-party service could run the TTS function; in this case I'm using the Google TTS API from Google Cloud, which is free but comes with the small inconvenience of setting up a credit/debit card.

npm install @google-cloud/text-to-speech

    const [googleTTS, setGoogleTTS] = useState<any>(null);

    useEffect(() => {
      // Initialize Google Cloud TTS
      const initGoogleTTS = async () => {
        try {
          const { TextToSpeechClient } = await import('@google-cloud/text-to-speech');
          const client = new TextToSpeechClient();
          setGoogleTTS(client);
        } catch (error) {
          console.error('Failed to initialize Google Cloud TTS:', error);
        }
      };

      initGoogleTTS();
    }, []);

    const textToSpeech = async (text: string) => {
      if (googleTTS) {
        try {
          const request = {
            input: { text },
            voice: { languageCode: 'en-US', ssmlGender: 'NEUTRAL' },
            audioConfig: { audioEncoding: 'MP3' },
          };

          const [response] = await googleTTS.synthesizeSpeech(request);
          const audioContent = response.audioContent;
          const audio = new Audio(`data:audio/mp3;base64,${audioContent}`);
          audio.play();
        } catch (error) {
          console.error('Google TTS failed:', error);
          fallbackToBuiltInTTS(text);
        }
      } else {
        fallbackToBuiltInTTS(text);
      }
    };

    const fallbackToBuiltInTTS = (text: string) => {
      if ('speechSynthesis' in window) {
        const speech = new SpeechSynthesisUtterance(text);
        window.speechSynthesis.speak(speech);
      } else {
        alert('Your browser does not support text to speech');
      }
    };
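One detail worth hedging in the snippet above: the data URL only plays if `audioContent` is already a base64 string. Depending on how the TTS client is called, the audio may arrive as raw bytes instead, so here is a minimal sketch of a helper that handles both cases. The name `toAudioDataUrl` is hypothetical and not part of the Mockree source; the `audio/mp3` default simply mirrors the MIME type used above.

```typescript
// Hypothetical helper: turns synthesized audio into a data URL an Audio element can play.
// Accepts either a base64 string or raw bytes.
function toAudioDataUrl(content: string | Uint8Array, mimeType = "audio/mp3"): string {
  let base64: string;
  if (typeof content === "string") {
    // Assumption: a string payload is already base64-encoded.
    base64 = content;
  } else {
    // Convert raw bytes to a binary string, then base64-encode it.
    let binary = "";
    content.forEach((byte) => {
      binary += String.fromCharCode(byte);
    });
    base64 = btoa(binary);
  }
  return `data:${mimeType};base64,${base64}`;
}
```

With a helper like this, the playback line would become `new Audio(toAudioDataUrl(audioContent))`, and the built-in speech synthesis fallback stays unchanged.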

There's also a record button under the window where you're being "recorded". Press it to start recording your answer and click the same button to stop. The recording is transcribed and saved to the database while also being sent to Google Gemini for feedback. If the transcript is shorter than 10 characters, it won't accept your answer, so you'll have to answer more expressively (or vaguely, if you wish). Note that the app doesn't store audio either (even though it could): transcription is done on the client and only the transcribed text is sent out. This is because I'm trying to save precious space in the DB so that the app can accommodate a lot more users before I start scaling.

    const RecordAnswer: React.FC<RecordAnswerProps> = ({
      mockInterviewQuestion,
      activeQuestionIndex,
      interviewData,
      isNewInterview,
      answeredQuestions,
      setAnsweredQuestions,
      onAnswerSaved,
    }) => {
      const [userAnswer, setUserAnswer] = useState('');
      const { user } = useUser();
      const [loading, setLoading] = useState<boolean>(false);

      useEffect(() => {
        if (typeof window !== 'undefined') {
          const savedAnswers = localStorage.getItem('answeredQuestions');
          if (isNewInterview) {
            localStorage.removeItem('answeredQuestions');
            setAnsweredQuestions(new Set());
          } else if (savedAnswers) {
            setAnsweredQuestions(new Set(JSON.parse(savedAnswers)));
          }
        }
      }, [isNewInterview, setAnsweredQuestions]);

      useEffect(() => {
        if (answeredQuestions.size > 0) {
          localStorage.setItem('answeredQuestions', JSON.stringify(Array.from(answeredQuestions)));
        }
      }, [answeredQuestions]);

      const {
        error,
        interimResult,
        isRecording,
        results,
        startSpeechToText,
        stopSpeechToText,
      } = useSpeechToText({
        continuous: true,
        useLegacyResults: false
      });

      useEffect(() => {
        if (results.length > 0) {
          const latestResult = results[results.length - 1];
          if (typeof latestResult === 'object' && latestResult.transcript) {
            setUserAnswer(prevAns => prevAns + ' ' + latestResult.transcript);
          }
        }
      }, [results])

      const SaveUserAnswer = async () => {
        if (isRecording) {
          stopSpeechToText();
          // UpdateUserAnswer will be called by the useEffect hook
        } else if (!answeredQuestions.has(activeQuestionIndex)) {
          setUserAnswer(''); // Reset the answer when starting a new recording
          startSpeechToText();
        } else {
          toast('You have already answered this question');
        }
      }

      const UpdateUserAnswer = useCallback(async () => {
        if (userAnswer.length < 10) {
          setLoading(false);
          toast('Answer Length too short, please record again');
          setUserAnswer('');
          return;
        }

        setLoading(true);

        try {
          const response = await fetch('/api/record-answer', {
            method: 'POST',
            headers: {
              'Content-Type': 'application/json',
            },
            body: JSON.stringify({
              mockInterviewQuestion,
              activeQuestionIndex,
              userAnswer,
              interviewData,
              user,
            }),
          });

          if (!response.ok) {
            throw new Error('Failed to save answer');
          }

          const data = await response.json();

          if (data.result) {
            toast('Answer Saved Successfully');
            onAnswerSaved(activeQuestionIndex);
            setAnsweredQuestions(prev => new Set(prev).add(activeQuestionIndex));
          }
        } catch (error) {
          console.error('Error saving answer:', error);
          toast('Failed to save answer. Please try again.');
        } finally {
          setUserAnswer('');
          setLoading(false);
        }
      }, [userAnswer, activeQuestionIndex, mockInterviewQuestion, interviewData, user, onAnswerSaved, setAnsweredQuestions]);

      useEffect(() => {
        if (!isRecording && userAnswer.length > 0) {
          UpdateUserAnswer();
        }
      }, [isRecording, userAnswer, UpdateUserAnswer]);
    }
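The component persists the answered-question indexes by converting the Set to an array before stringifying it. As a small sketch of why that conversion matters, and of a slightly more defensive read path, here are two hypothetical helpers (`serializeAnswered` and `deserializeAnswered` are illustrative names, not from the Mockree source):

```typescript
// Hypothetical helpers mirroring the localStorage round trip in RecordAnswer.
function serializeAnswered(answered: Set<number>): string {
  // JSON.stringify on a Set yields "{}", so convert to an array first.
  return JSON.stringify(Array.from(answered));
}

function deserializeAnswered(saved: string | null): Set<number> {
  if (!saved) return new Set<number>();
  try {
    const parsed: unknown = JSON.parse(saved);
    if (!Array.isArray(parsed)) return new Set<number>();
    // Keep only numeric indexes in case the stored value was tampered with.
    return new Set<number>(parsed.filter((n: unknown): n is number => typeof n === "number"));
  } catch {
    // Corrupted storage: start with an empty set instead of crashing the page.
    return new Set<number>();
  }
}
```

The `try`/`catch` is the main difference from the component code: a corrupted `answeredQuestions` entry in localStorage falls back to an empty set rather than throwing inside the effect.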

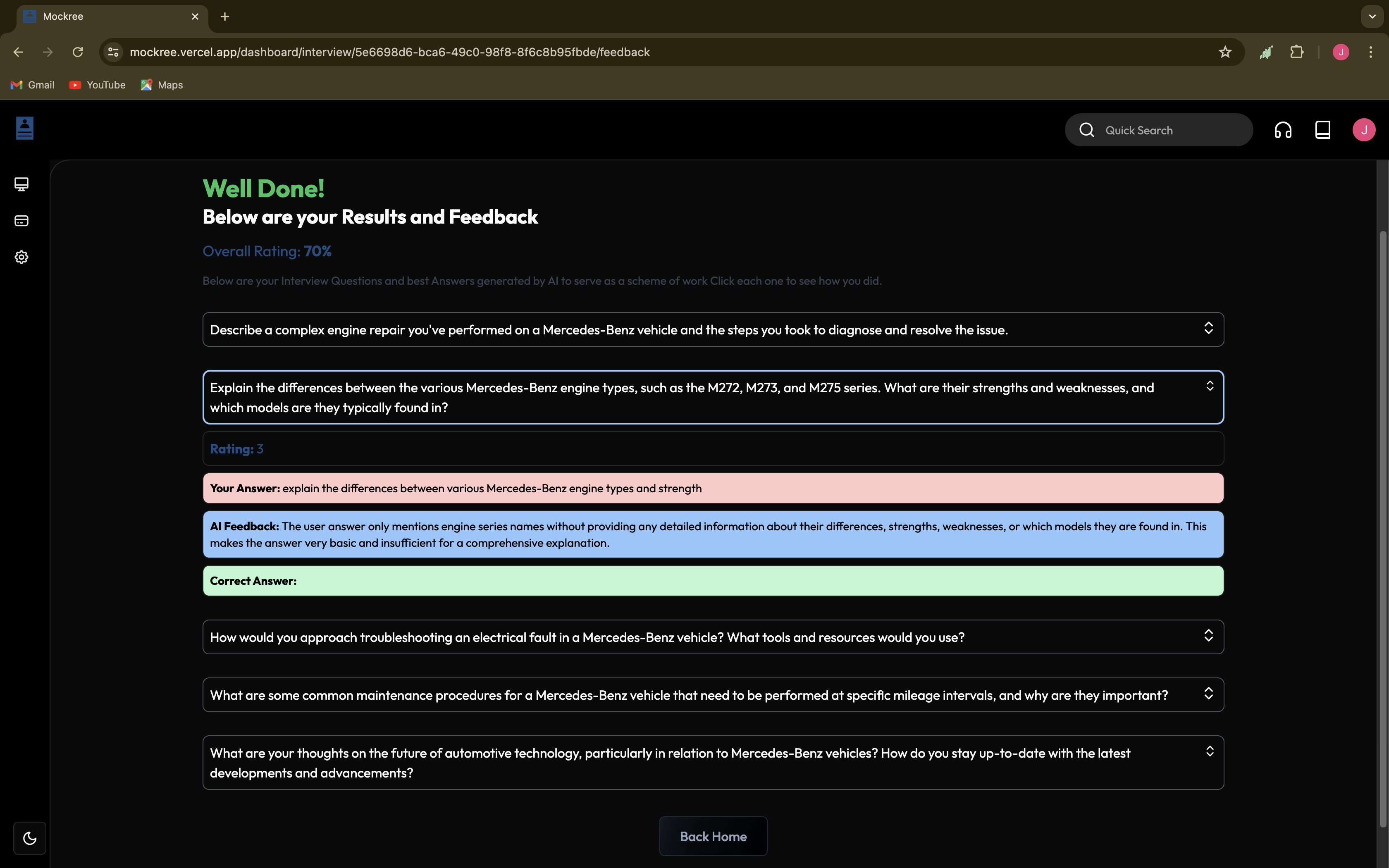

Feedback

The interviewee can only move to the next question after the transcribed answer has been saved; only then is the 'Next Question' button enabled. This logic applies to all five questions. When you're done answering all 5 questions, end the interview and view your feedback, which includes the questions asked, your transcribed answer to each question, a rating on a scale of 1-10 with an opinion on how well your answer addresses the question, and what the AI thinks is the best answer.

After that, you can go back home and see your interview in the 'Past Interviews' tab, where you can access your last feedback and even retake the interview.

The Road Ahead

Mockree is still far from finished. There's still so much I want to add:

Improved feedback mechanisms (probably with better models)

Integration with more AI models (so users can select and compare different models)

Maybe, just maybe, a mobile app down the line (idk about this tho)

And a lot more realistic features

Wrapping Up

Building Mockree has been a nice learning experience, especially in the space of two weeks (yes, I stayed up all night half the time). It's pushed me to explore new technologies, solve complex problems, endure TypeScript, and create something I'm truly proud of. To web devs out there working on passion projects: keep pushing, keep learning, and don't forget to celebrate the small victories along the way.

If you're curious about Mockree or want to try it out live, head over here. And if you're a fellow developer with ideas or feedback, you're welcome to contribute to the GitHub repo; I'm curious to see your ideas. I can also be reached here on LinkedIn. And don't forget to keep an eye out for the full development tutorial video of this project on YouTube.

Happy coding, and may your interviews be ever in your favor! 🚀👨‍💻👩‍💻
