Secure EKS Cluster

KORLA GOUTHAM

Hello everyone. In this blog, I would like to explain my simple EKS project.

I created an EKS cluster with private access using a config file and deployed simple NGINX pods in the private subnets. Additionally, I deployed the NGINX Ingress Controller, which creates an NLB in a public subnet. I secured the ingress with a TLS certificate from Let's Encrypt and obtained a free domain from InfinityFree. Instead of using the AWS VPC CNI, I opted for Calico networking. I used VPC endpoints to connect the pods to AWS ECR, where their image is stored, so that the traffic stays within AWS. There are advantages to using Calico over the AWS VPC CNI, which I will discuss later.

Creating EKS Cluster using Config file

    apiVersion: eksctl.io/v1alpha5
    kind: ClusterConfig
    metadata:
      name: secure-eks-cluster-1
      region: ap-south-1
    privateCluster:
      enabled: true
    vpc:
      id: vpc-0696a952afbedc41e
      subnets:
        public:
          ap-south-1a:
            id: subnet-077f42f899cebc65a7
          ap-south-1b:
            id: subnet-054707279b5eb56a8
        private:
          ap-south-1a:
            id: subnet-048bc3f89b5eb56a8
          ap-south-1b:
            id: subnet-054707279cebc65a7
          ap-south-1c:
            id: subnet-048bnjc5b81003279

The EKS cluster will only be created if the VPC meets certain requirements, such as subnets in at least two Availability Zones and DNS hostnames and DNS resolution enabled.

I deployed a Cloud9 instance in the same VPC so that I could communicate with the EKS cluster, which only has private access.

Create the VPC using the available CloudFormation template. The EKS cluster with private access can then be created.

Calico CNI

To set up Calico networking, first delete the existing AWS VPC CNI daemon set:

    kubectl delete daemonset -n kube-system aws-node

Next, install the Calico CNI using Helm:

    helm repo add projectcalico https://docs.tigera.io/calico/charts
    helm repo update
    helm install calico projectcalico/tigera-operator --version v3.28.0

    apiVersion: eksctl.io/v1alpha5
    kind: ClusterConfig
    metadata:
      name: secure-eks-cluster-1
      region: ap-south-1
    nodeGroups:
      - name: ng-private
        instanceType: t3.medium
        desiredCapacity: 2
        privateNetworking: true
        maxPodsPerNode: 100
        ssh:
          allow: true
          publicKeyName: my-keypair # Replace with your SSH key pair name

The node group is launched in the private subnets because of the privateNetworking: true setting (the config-file equivalent of the --node-private-networking option).

Setting maxPodsPerNode: 100 (--max-pods-per-node=100 on the command line) is necessary because, without it, EKS limits the number of pods on a node based on the instance type, just as it would with the VPC CNI. The VPC CNI gives every pod a secondary IP from the node's network interfaces, and the number of those IPs depends on the instance type, so pod scheduling stops at that limit even if the node's resources are not exhausted. With Calico, pod IPs come from Calico's own pool, so pods can be scheduled on the node up to the configured maximum or until its resources are exhausted.
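To make the VPC CNI limit concrete, here is a small sketch of the commonly cited formula for the default pod limit. The function name is hypothetical, and the t3.medium figures (3 network interfaces, 6 IPv4 addresses each) are assumptions taken from AWS's published instance limits, not from this project's cluster.

```python
def vpc_cni_max_pods(enis: int, ipv4_per_eni: int) -> int:
    """Default pod limit when pods take secondary IPs from the node's ENIs."""
    # The primary IP of each ENI is not handed to pods, and the +2 accounts
    # for host-networked pods (aws-node, kube-proxy) that need no pod IP.
    return enis * (ipv4_per_eni - 1) + 2


# t3.medium (assumed limits): 3 ENIs with 6 IPv4 addresses each
print(vpc_cni_max_pods(3, 6))  # 17
```

Under these assumptions a t3.medium node would stop at 17 pods with the VPC CNI, far below the 100 configured above for Calico.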

To apply our own networking rules, we need to download calicoctl

https://docs.tigera.io/calico/latest/operations/calicoctl/install

    # pool.yaml
    apiVersion: projectcalico.org/v3
    kind: IPPool
    metadata:
      name: pool2
    spec:
      cidr: 10.0.0.0/16
      ipipMode: Always
      natOutgoing: true

After running calicoctl apply -f pool.yaml, Calico assigns pod IPs from the 10.0.0.0/16 CIDR. Service IPs are not affected by the pool; they still come from the cluster's service CIDR.
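Before applying a pool like this, it can help to check how many addresses it offers and that it does not overlap the network the nodes live in. The sketch below uses Python's standard ipaddress module; the VPC CIDR shown is a hypothetical value for illustration, since the actual VPC range is not stated in this post.

```python
import ipaddress

pod_pool = ipaddress.ip_network("10.0.0.0/16")     # cidr from pool.yaml
vpc_cidr = ipaddress.ip_network("192.168.0.0/16")  # hypothetical VPC CIDR


def pool_is_separate(pool, node_network) -> bool:
    """True when the pod pool shares no addresses with the node network."""
    return not pool.overlaps(node_network)


print(pod_pool.num_addresses)                # 65536
print(pool_is_separate(pod_pool, vpc_cidr))  # True
```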

Deploying Nginx Pods

Next, I created two simple NGINX deployments and attached a service to each of them.

POD-1.yaml

The NGINX image is stored in ECR.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-dev
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: dev
      template:
        metadata:
          labels:
            app: dev
        spec:
          containers:
            - name: nginx-pod
              image: AWS-ACCOUNT-ID.dkr.ecr.ap-south-1.amazonaws.com/nginx
              # Write a custom index page, then run NGINX in the foreground
              command: ["/bin/sh"]
              args: ["-c", "echo 'Hello, this is dev page' > /usr/share/nginx/html/index.html && exec nginx -g 'daemon off;'"]
              ports:
                - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: devsvc
    spec:
      selector:
        app: dev
      ports:
        - port: 80
          targetPort: 80

POD-2.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-test
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: test
      template:
        metadata:
          labels:
            app: test
        spec:
          containers:
            - name: nginx-pod
              image: AWS-ACCOUNT-ID.dkr.ecr.ap-south-1.amazonaws.com/nginx
              # Write a custom index page, then run NGINX in the foreground
              command: ["/bin/sh"]
              args: ["-c", "echo 'Hello, this is test page' > /usr/share/nginx/html/index.html && exec nginx -g 'daemon off;'"]
              ports:
                - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: testsvc
    spec:
      selector:
        app: test
      ports:
        - port: 80
          targetPort: 80

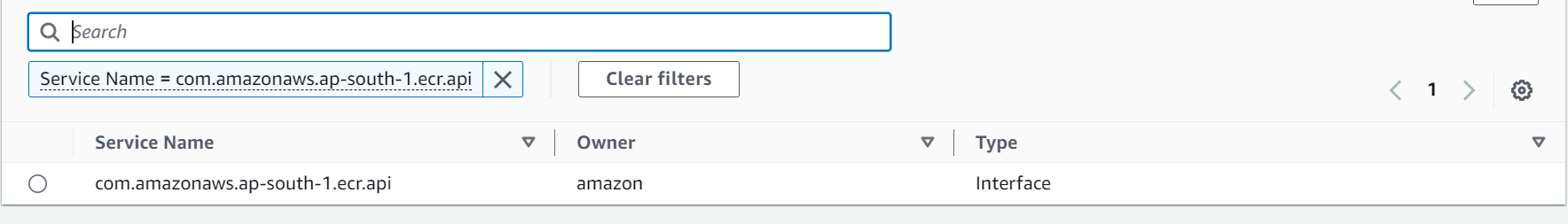

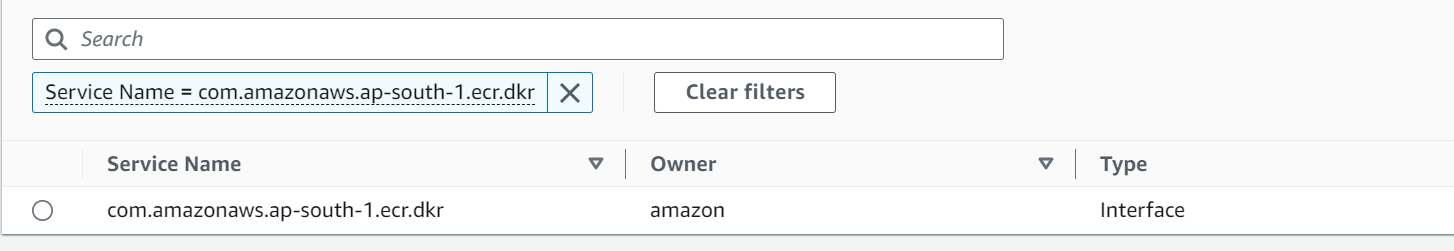

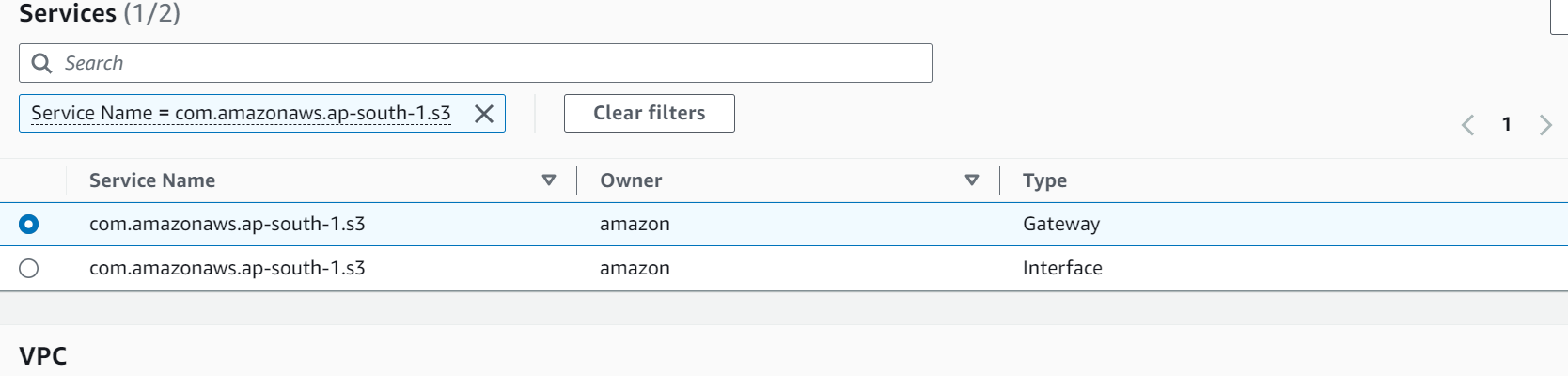

Let us use VPC endpoints to connect to ECR so that the traffic stays within the VPC.

Creating Endpoints

I have already written a detailed blog on endpoints. Basically, VPC endpoints let resources in a VPC (especially those in private subnets) reach AWS services privately, without a NAT gateway.

First, create an ECR API endpoint and place it in the private subnets.

Then create the ECR Docker endpoint, because image pulls from ECR use Docker registry commands.

Finally, create an S3 gateway endpoint, because the container image layers are stored in S3.
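The three endpoints above correspond to three AWS endpoint service names, which follow a fixed com.amazonaws.<region>.<service> pattern. As a small sketch, the hypothetical helper below builds those names for a region and records which endpoint type each one uses; it only produces strings and does not call AWS.

```python
def ecr_endpoint_services(region: str) -> dict:
    """Map each VPC endpoint service name needed for ECR pulls to its type."""
    prefix = f"com.amazonaws.{region}"
    return {
        f"{prefix}.ecr.api": "Interface",  # ECR API calls (auth, image metadata)
        f"{prefix}.ecr.dkr": "Interface",  # Docker registry traffic (image pulls)
        f"{prefix}.s3": "Gateway",         # image layers are stored in S3
    }


for name, kind in ecr_endpoint_services("ap-south-1").items():
    print(f"{kind:9} {name}")
```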

Now the pods and services are up and running. Next comes the ingress.

Nginx Ingress Controller

Deploy an ingress controller pod, which watches the ingress rules.

I chose the NGINX Ingress Controller and deployed it using Helm.

    helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx
    helm repo update
    helm install my-ingress ingress-nginx/ingress-nginx \
      --namespace ingress-nginx \
      --create-namespace \
      --set controller.service.type=LoadBalancer \
      --set controller.service.annotations."service\.beta\.kubernetes\.io/aws-load-balancer-type"="nlb"

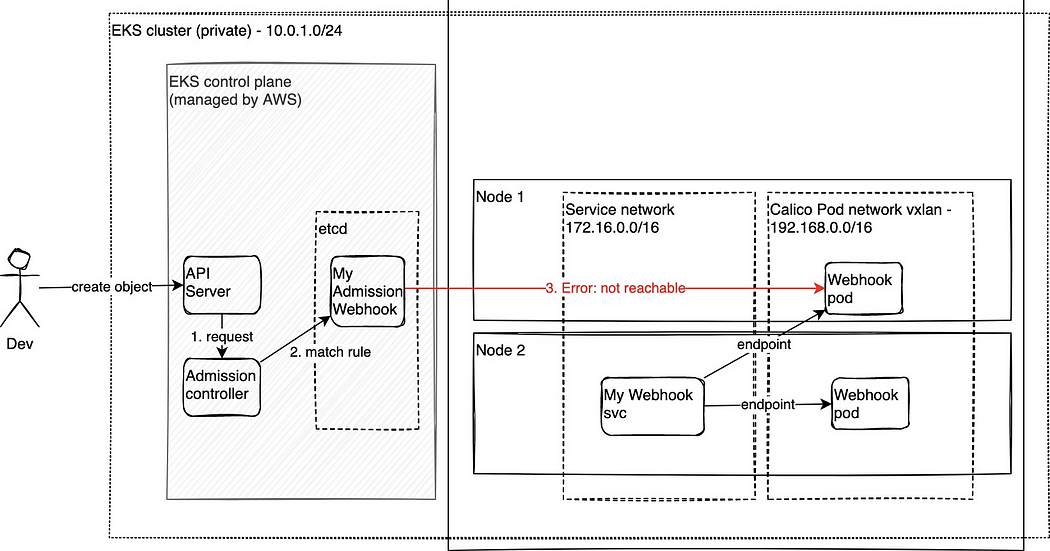

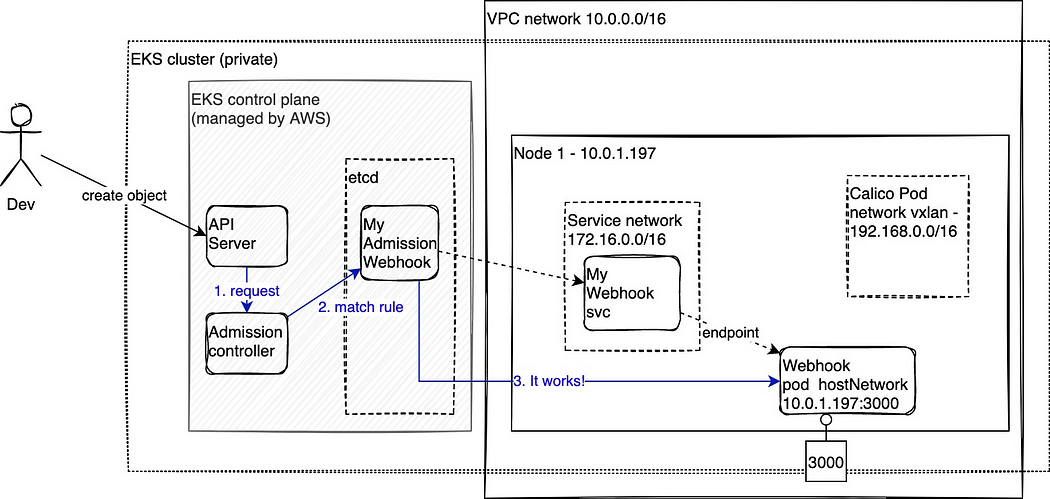

When we try to create an ingress resource, we get this error:

    Error from server (InternalError): Internal error occurred: failed calling webhook "myservice.mynamespace.svc": Post "https://myservice.mynamespace.svc:443/mutate?timeout=30s": Address is not allowed

When EKS runs with only the Calico CNI, the Kubernetes API server on the control plane (managed by AWS) cannot reach webhooks whose service points to pods on the Calico pod network.

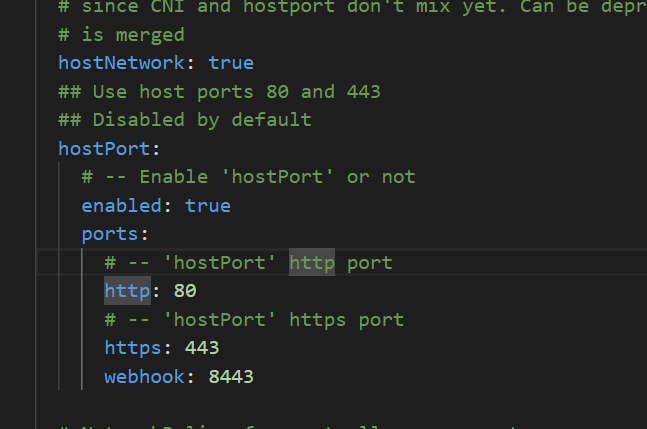

I set hostNetwork: true on the NGINX controller pod so that it exposes a port on every node. This way, the Kubernetes service pointing to the pod has <nodeIP:hostPort> in its endpoints.

Because the node IP is part of the VPC, the EKS control plane can reach it successfully.

Note that the pod has both containerPort and hostPort under pod/spec/container/ports, and containerPort must match hostPort.

Images are taken from @denisstortisilva's Medium blog.

So, in the values.yaml file, I set hostNetwork to true and enabled hostPort for the NGINX Ingress Controller pod.

This fixed the error, and the ingress resource was then created successfully.

SSL/TLS certificate

Next, I secured the ingress with an SSL/TLS certificate for my domain.

I got a free domain from dash.infinityfree.com and created a CNAME record there.

A CNAME record maps a domain name to another hostname. When someone visits the domain, DNS needs a record that says where the traffic should go. Here, that target is the DNS name of the Network Load Balancer.

Install cert-manager, which issues and manages the certificates, using Helm:

    helm repo add jetstack https://charts.jetstack.io --force-update
    helm install \
      cert-manager jetstack/cert-manager \
      --namespace cert-manager \
      --create-namespace \
      --version v1.15.1 \
      --set crds.enabled=true

This installs a set of CRDs, cluster roles, and cluster role bindings.

Self Signed Certificates

Now let's generate a self-signed certificate and use it with the ingress.

To do this, first create an Issuer and a Certificate request. The issuer fulfills the request, and cert-manager stores the resulting self-signed certificate in a secret. That secret is then referenced in the ingress rules along with our domain.

    # Issuer.yaml
    apiVersion: cert-manager.io/v1
    kind: Issuer
    metadata:
      name: test-selfsigned
      namespace: cert-manager-test
    spec:
      selfSigned: {}

    # Certificate_request.yaml
    apiVersion: cert-manager.io/v1
    kind: Certificate
    metadata:
      name: selfsigned-cert
      namespace: cert-manager-test
    spec:
      dnsNames:
        - devops.goutham.wuaze.com
      secretName: selfsigned-cert-tls
      issuerRef:
        name: test-selfsigned

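Here we ask the issuer for a certificate for the devops.goutham.wuaze.com domain, to be stored in a secret named selfsigned-cert-tls in the cert-manager-test namespace.

The issuer signs the certificate, and cert-manager stores it as a secret in the cert-manager-test namespace.

Let's use the secret in the ingress rules. Note that an Ingress can only reference a TLS secret in its own namespace, so the secret has to exist in the namespace of the Ingress.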

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: my-ingress
      namespace: default
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /$2
    spec:
      ingressClassName: nginx
      tls:
        - hosts:
            - devops.goutham.wuaze.com
          secretName: selfsigned-cert-tls
      defaultBackend:
        service:
          name: defaultservice
          port:
            number: 80
      rules:
        - host: devops.goutham.wuaze.com
          http:
            paths:
              - path: /dev
                pathType: Prefix
                backend:
                  service:
                    name: devsvc
                    port:
                      number: 80
              - path: /test
                pathType: Prefix
                backend:
                  service:
                    name: testsvc
                    port:
                      number: 80

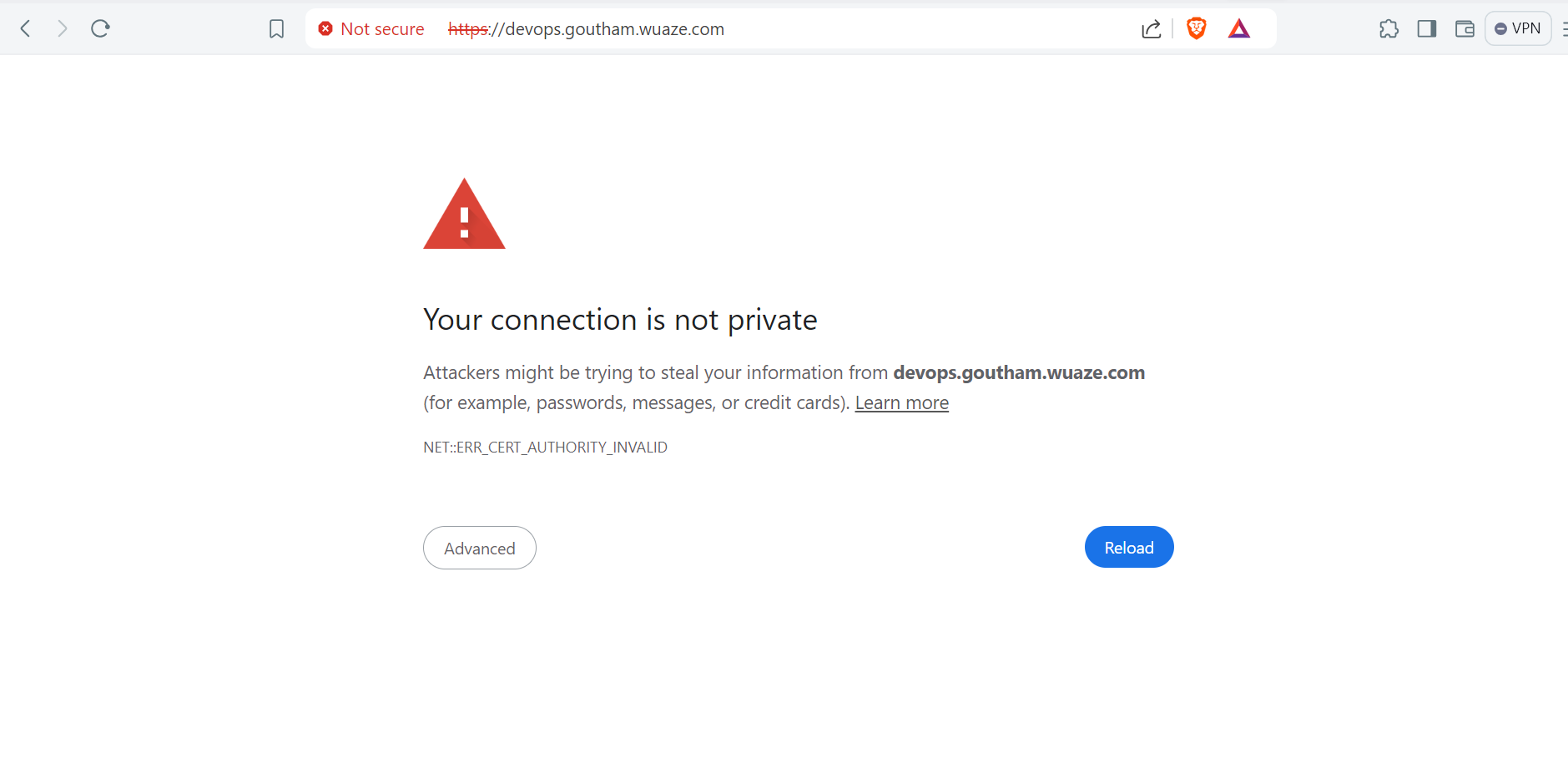

Because our self-signed certificate is not trusted by browsers, we get this warning page.
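A side note on the rewrite-target: /$2 annotation in the Ingress: $2 refers to the second capture group of the path regex. The Ingress in this post uses plain /dev and /test paths with no capture groups, while the ingress-nginx documentation pairs /$2 with a path such as /dev(/|$)(.*). The sketch below illustrates that documented pairing only. It uses Python's re module as a stand-in for NGINX's regex engine, which is an assumption of this illustration, and the constant and function names are hypothetical.

```python
import re

# Path regex and rewrite target in the form the ingress-nginx docs pair together.
PATH_REGEX = r"^/dev(/|$)(.*)"
REWRITE_TARGET = r"/\2"  # Python spelling of NGINX's /$2


def rewrite(request_path: str) -> str:
    """Return the path the backend would see under this rule."""
    return re.sub(PATH_REGEX, REWRITE_TARGET, request_path)


print(rewrite("/dev"))             # /
print(rewrite("/dev/index.html"))  # /index.html
```

With such a pattern, everything after /dev is passed on to the backend, so the NGINX pods receive requests relative to their own root.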

Let's Encrypt

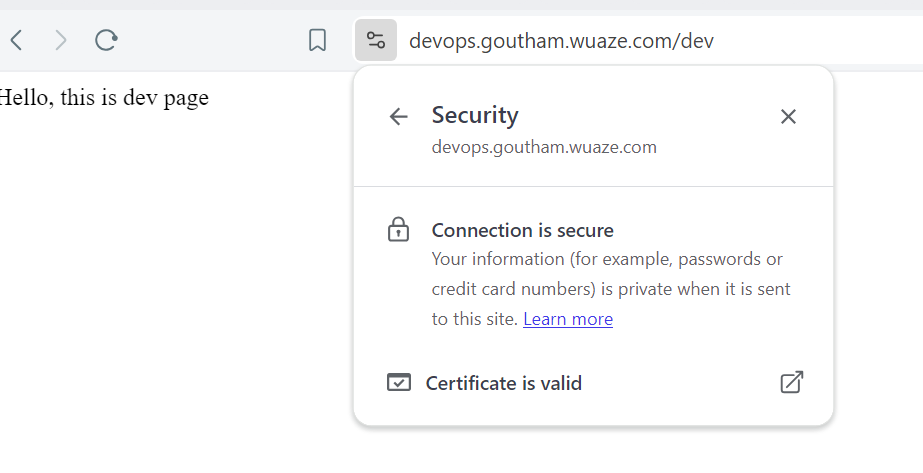

Let's Encrypt is a trusted certificate authority (CA). When cert-manager requests a certificate, Let's Encrypt issues a challenge, cert-manager fulfills it, and Let's Encrypt then provides the certificate. The certificate is signed by Let's Encrypt, which means the CA used its private key to sign the certificate containing our public key, so any client that trusts the CA can verify it.

Now let's create a similar issuer that uses Let's Encrypt, along with a Certificate request.

    # Issuer.yaml
    apiVersion: cert-manager.io/v1
    kind: ClusterIssuer
    metadata:
      name: letsencrypt-cluster-issuer
    spec:
      acme:
        server: https://acme-v02.api.letsencrypt.org/directory
        email: gouthamkorla1023@gmail.com
        privateKeySecretRef:
          name: letsencrypt-cluster-issuer-key
        solvers:
          - http01:
              ingress:
                class: nginx

    # Certificate_request.yaml
    apiVersion: cert-manager.io/v1
    kind: Certificate
    metadata:
      name: nginx-app
      namespace: default
    spec:
      dnsNames:
        - devops.goutham.wuaze.com
      secretName: nginx-app-tls
      issuerRef:
        name: letsencrypt-cluster-issuer
        kind: ClusterIssuer

Now, replacing the secret name in the ingress resource with nginx-app-tls serves the domain with a certificate issued by Let's Encrypt.

That's it. This is my simple secure EKS project. I hope you liked it. Thanks for reading my article, and have a great day.
