AI Adventures at a HealthTech Hackathon: A Developer's Perspective

Marika Bergman

I recently participated in the MediHacks 2024 hackathon, where our team took on the challenge of creating an AI-powered conversational co-pilot for emergency dispatchers. Because emergency dispatchers' training materials and guidebooks are often outdated, there is a need for a training tool that leverages modern technology. This tool would be a conversational AI that simulates various life-threatening scenarios, helping dispatchers rehearse and improve their response skills.

As I was working in a team with two amazing AI developers, this was a very exciting opportunity to get my first experience building a solution that uses AI. I won't go into a detailed explanation of the AI solution in this article, as it is not my area of speciality. Instead, I am focusing on my experience as a full-stack developer who took part in designing, developing and testing the app, as well as integrating some of the AI endpoints into our frontend application. We also had a frontend developer on the team, so we had a balanced team with different skills and interests, which was a great starting point.

Designing the app

We started our project by brainstorming what functionality the app should offer the user and which building blocks it would require. Do we need:

a database to save data --> yes, we want to save user data and data from completed simulations

a backend --> yes, to interact with the database

authentication --> yes, an easy implementation with Firebase

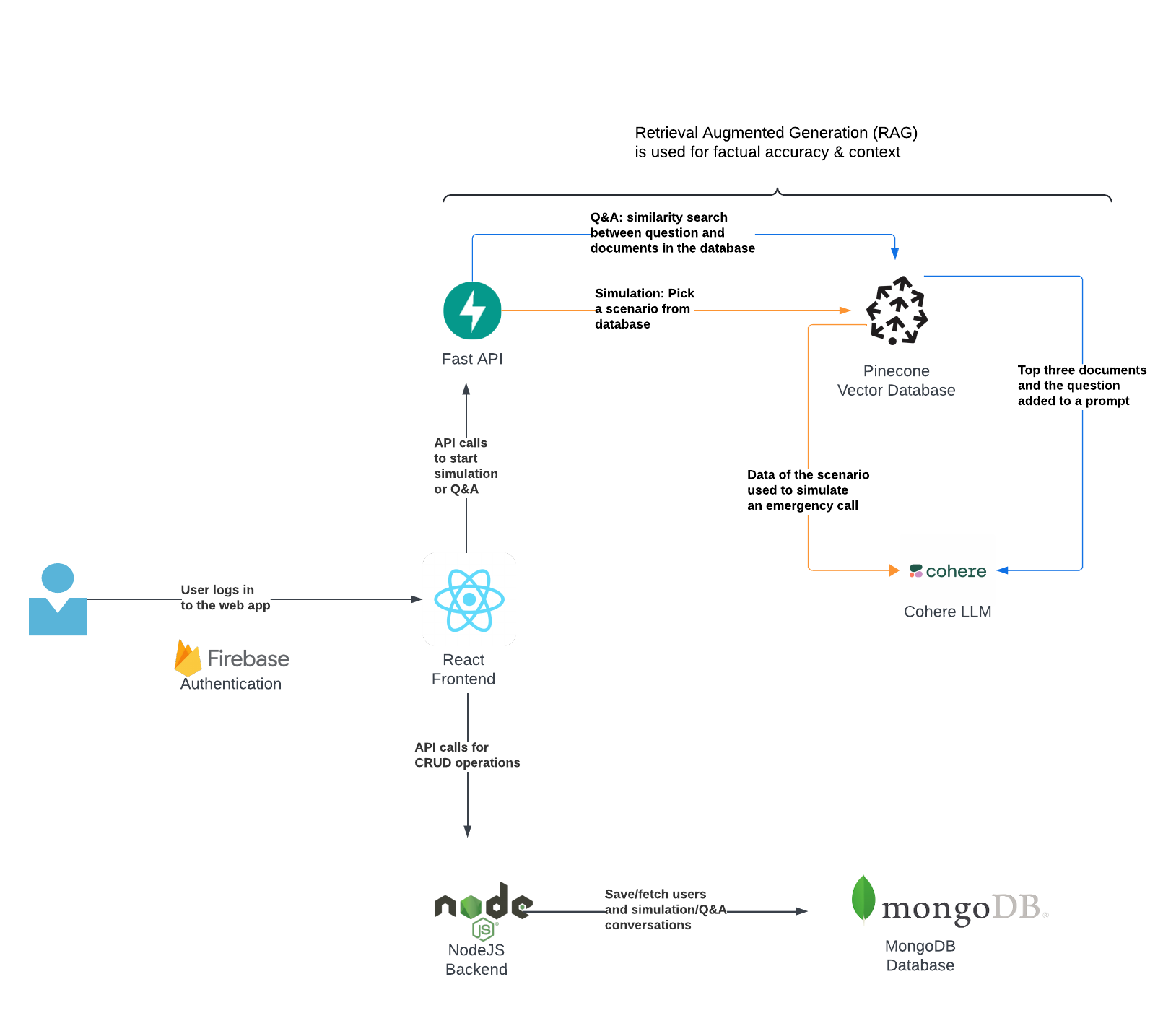

We would need a React app, a NodeJS backend, a MongoDB database and the AI element, which our application would access through an API built with FastAPI. We decided to connect the React frontend directly to the FastAPI service because, for the purposes of this hackathon, it was easier for us to integrate the AI endpoints directly into the React app. The architecture of our application is shown in the diagram above.

Implementation

Since our team members had different specialities, we worked on all the components simultaneously: creating the frontend components based on the Figma design, building the NodeJS backend that interacts with MongoDB and verifies authentication, and building the AI backend.

Each system was tested separately. For example, by testing the NodeJS backend with Postman, we made sure that the database interactions worked as expected and that Firebase authentication tokens were validated. The AI API was tested with similar tools to make sure that it accepted the API requests and returned the kind of information we expected.
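The Firebase token check in the NodeJS backend can be sketched roughly as follows. This is a minimal sketch under assumptions, not the project's code: it assumes the frontend sends the Firebase ID token as `Authorization: Bearer <token>`, and the names `authenticate`, `extractBearerToken` and `VerifyIdToken` are hypothetical. In a real backend the injected verifier would typically wrap firebase-admin's `getAuth().verifyIdToken(token)`; it is passed in as a plain function here so the sketch runs without Firebase.

```typescript
// Minimal shape we need from a decoded Firebase ID token
// (firebase-admin's DecodedIdToken also has a `uid` field).
interface DecodedToken {
  uid: string;
}

// Hypothetical injected verifier. In a real backend this could wrap
// firebase-admin's getAuth().verifyIdToken(token).
type VerifyIdToken = (idToken: string) => Promise<DecodedToken>;

type AuthResult =
  | { ok: true; uid: string }
  | { ok: false; status: 401; message: string };

// Assumes the token arrives as "Authorization: Bearer <token>".
function extractBearerToken(header: string | undefined): string | null {
  if (!header) return null;
  const match = /^Bearer\s+(\S+)$/.exec(header.trim());
  return match?.[1] ?? null;
}

// Returns the user's uid, or a 401 result that a route handler
// could turn into an HTTP response.
async function authenticate(
  authorizationHeader: string | undefined,
  verifyIdToken: VerifyIdToken,
): Promise<AuthResult> {
  const token = extractBearerToken(authorizationHeader);
  if (token === null) {
    return { ok: false, status: 401, message: "Missing bearer token" };
  }
  try {
    const decoded = await verifyIdToken(token);
    return { ok: true, uid: decoded.uid };
  } catch {
    // Any verification failure (expired, malformed, revoked) is treated as 401.
    return { ok: false, status: 401, message: "Invalid or expired token" };
  }
}
```

In an Express route, `authenticate(req.headers.authorization, verifier)` could decide whether to continue or respond with `res.status(result.status)`. Injecting the verifier also makes the check easy to exercise in isolation, much like testing the endpoints separately with Postman.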

Once everything was working, it was just a matter of integrating the parts. They should fit together like pieces of a puzzle since we had already planned everything, right? It's never that straightforward, but it is always a lot of fun and a great learning experience.

Integrating frontend, backend and AI backend

Integrating the frontend with the NodeJS backend was straightforward, as our application deals with simple CRUD actions. Integrating the frontend with the AI API was something I had no previous experience with. In all fairness, it was not that different from making 'traditional' API calls, especially as our AI API had a simple architecture for the purposes of this hackathon.
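To illustrate what "simple CRUD actions" means here, the sketch below uses an in-memory store standing in for the MongoDB collection of completed simulations. The record fields (`userId`, `scenario`, `completedAt`) and the `SimulationStore` class are hypothetical; the article does not describe the actual schema.

```typescript
// Hypothetical shape of a completed simulation record; the real
// MongoDB fields are not described in the article.
interface CompletedSimulation {
  id: string;
  userId: string; // e.g. the Firebase uid of the dispatcher
  scenario: string;
  completedAt: Date;
}

// In-memory stand-in for the MongoDB collection, exposing the
// create/read/update/delete operations the backend would offer.
class SimulationStore {
  private readonly records = new Map<string, CompletedSimulation>();
  private nextId = 1;

  // Create: assign an id and store the record.
  create(data: Omit<CompletedSimulation, "id">): CompletedSimulation {
    const record: CompletedSimulation = { ...data, id: String(this.nextId++) };
    this.records.set(record.id, record);
    return record;
  }

  // Read: all simulations completed by one user.
  listByUser(userId: string): CompletedSimulation[] {
    return Array.from(this.records.values()).filter((r) => r.userId === userId);
  }

  // Update: merge changes into an existing record, if it exists.
  update(
    id: string,
    changes: Partial<Omit<CompletedSimulation, "id">>,
  ): CompletedSimulation | undefined {
    const existing = this.records.get(id);
    if (!existing) return undefined;
    const updated: CompletedSimulation = { ...existing, ...changes };
    this.records.set(id, updated);
    return updated;
  }

  // Delete: returns true if a record was removed.
  delete(id: string): boolean {
    return this.records.delete(id);
  }
}
```

Each method maps naturally onto one REST endpoint (POST, GET, PATCH, DELETE), which is why this side of the integration needed little thought.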

We didn't have a way of managing conversation context in the LLM application; instead, we sent a lot of data (the entire previous conversation history) with every single API call. This required careful management on the frontend of the data that always had to be passed to the backend. This would, of course, be an inefficient way of running a production application, but it worked well for our prototype.
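The stateless pattern described above can be sketched as a small class that keeps the conversation on the frontend and attaches the full history to every request. The class name, the `dispatcher`/`caller` roles and the `ChatRequest` shape are hypothetical illustrations, not the project's actual API schema.

```typescript
// One turn in a simulated emergency call. Roles and field names
// are assumptions for this sketch.
interface ChatMessage {
  role: "dispatcher" | "caller";
  content: string;
}

// Hypothetical request body: the whole history travels with every call
// because the AI backend keeps no conversation state of its own.
interface ChatRequest {
  scenarioId: string;
  history: ChatMessage[];
}

// Holds the conversation on the frontend and builds each stateless request.
class ConversationState {
  private readonly messages: ChatMessage[] = [];
  private readonly scenarioId: string;

  constructor(scenarioId: string) {
    this.scenarioId = scenarioId;
  }

  // Record a message, e.g. the AI caller's reply once it arrives.
  add(message: ChatMessage): void {
    this.messages.push(message);
  }

  // Append the dispatcher's new message and build the next payload.
  // A copy of the history is sent so later additions cannot change
  // a request that is already in flight.
  buildRequest(nextDispatcherMessage: string): ChatRequest {
    this.add({ role: "dispatcher", content: nextDispatcherMessage });
    return { scenarioId: this.scenarioId, history: [...this.messages] };
  }

  get length(): number {
    return this.messages.length;
  }
}
```

Because `history` grows with every turn, the request size grows linearly with the length of the conversation, which is exactly the inefficiency that makes this approach a prototype-only choice.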

Lessons learnt

For me, the main benefit of this project was getting a better understanding of how AI APIs are built. I got a lot of insight into the challenges of collecting appropriate data and had a chance to see how adjusting the prompt can fully change the responses we get from the AI.

I also learnt how beneficial it is to integrate early during development, even if it is just part of the application or a single API endpoint. For example, we hadn't considered that there would be a delay in the responses from the AI, and by the time we noticed it, we didn't have time to change the UI to make it more obvious to the user that they had to wait. Had we tested earlier, we could still have applied UI changes that made the waiting period more transparent and less frustrating, improving the user experience.
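A UI change of this kind could start with something as small as toggling a "waiting" flag around every AI call. In this minimal sketch, the helper name `withPendingIndicator` is hypothetical. In a React component, `onPendingChange` would typically be the setter returned by `useState`, and the flag would drive a spinner or a "the caller is responding..." message.

```typescript
// Callback that receives the "waiting for the AI" state, e.g. a React
// state setter that shows or hides a loading indicator.
type PendingListener = (isPending: boolean) => void;

// Wraps an AI request so the UI is told when waiting starts and ends.
// The `finally` block clears the flag even if the request fails, so the
// indicator never gets stuck on screen.
async function withPendingIndicator<T>(
  request: () => Promise<T>,
  onPendingChange: PendingListener,
): Promise<T> {
  onPendingChange(true);
  try {
    return await request();
  } finally {
    onPendingChange(false);
  }
}
```

Because the helper only wraps a promise, it could have been added to each AI call without changing how the endpoints themselves were integrated.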

Overall, it was a great hackathon where I learned a lot and it was very rewarding to see how we managed to build an application where the user can interact with AI within such a short timeline.

Further technical details (including the AI implementation) can be found in the GitHub repository.
