Streaming Protocols and Chunking Explained

ritiksharmaaa

Understanding Video Streaming Protocols and Chunking Mechanisms

1. What is a Protocol?

Real-Life Scenario: Imagine you’re attending an international conference where everyone speaks different languages. To communicate, you use translators who follow specific rules to ensure accurate communication. Similarly, a protocol in computing is a set of rules that allows different devices to communicate effectively over a network.

Introduction to HTTP: One of the most fundamental and widely used protocols on the internet is HTTP (Hypertext Transfer Protocol). It governs how data is transmitted over the web, ensuring that web pages load correctly in browsers.

2. How Streaming Protocols Differ from HTTP

Beyond Basic Web Browsing: Plain HTTP is sufficient for loading web pages, but streaming protocols are optimized for delivering continuous media like video and audio. Some of them, such as HLS and DASH, run on top of HTTP, while others, such as RTMP and SRT, manage their own connections. Either way, they are built for timely, uninterrupted delivery, which is crucial for live streaming and on-demand video services.

Examples of Streaming Protocols:

HLS (HTTP Live Streaming): Developed by Apple, HLS breaks video content into smaller segments (MPEG-TS files) and uses an .m3u8 playlist file to organize these segments.

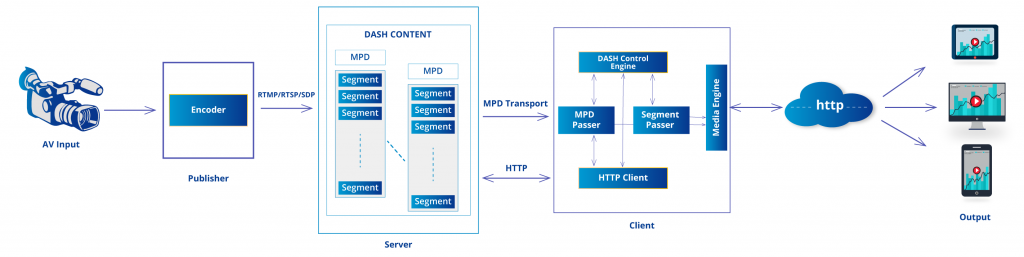

DASH (Dynamic Adaptive Streaming over HTTP): An international standard that segments video into MP4 or WebM formats, using a .mpd (Media Presentation Description) file.

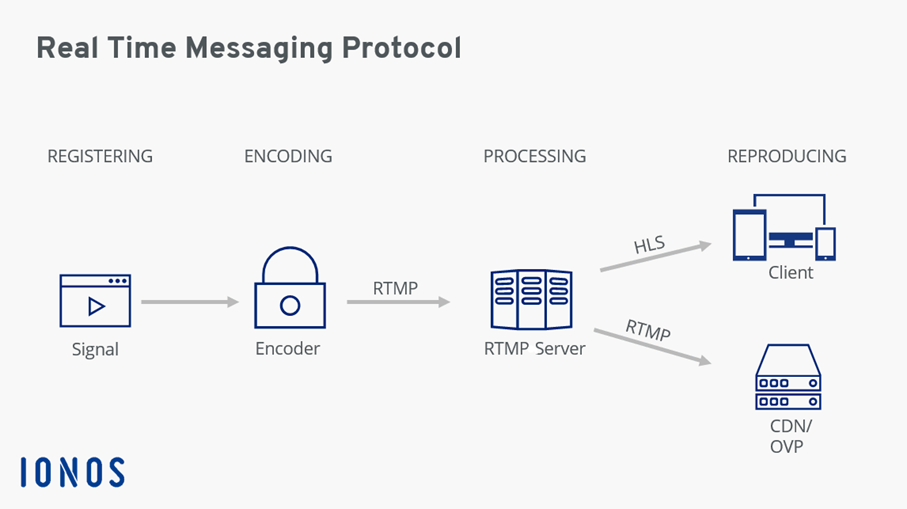

RTMP (Real-Time Messaging Protocol): Initially used by Adobe for Flash Player, RTMP is primarily employed for live streaming, requiring a media server for data transmission.

MSS (Microsoft Smooth Streaming): Developed by Microsoft, MSS uses fragmented MP4 files and a .manifest file to deliver adaptive bitrate streaming.

CMAF (Common Media Application Format): A relatively new standard that aims to simplify and unify the streaming process across various devices and platforms. It works with protocols like HLS and DASH.

MPEG-DASH: The formal name of DASH as standardized by MPEG (ISO/IEC 23009-1), not a separate protocol. It is codec-agnostic and designed for adaptive streaming over the internet.

HDS (HTTP Dynamic Streaming): Developed by Adobe, HDS uses fragmented MP4 files and a .f4m file to deliver adaptive streaming.

HLS with CMAF: A combination that leverages the CMAF standard within the HLS protocol, providing lower latency and better compatibility.

RTSP (Real-Time Streaming Protocol): A network control protocol designed for use in entertainment and communications systems to control streaming media servers.

SRT (Secure Reliable Transport): A protocol designed to optimize streaming performance across unpredictable networks like the internet, with added encryption for security.

3. Introduction to Adaptive Bitrate Streaming (ABR)

Definition and Basic Concept: ABR dynamically adjusts the quality of a video stream based on the viewer's network conditions and device capabilities. This keeps playback smooth by reducing buffering and interruptions, at the cost of occasional changes in picture quality.

How ABR Works with Transcoding: During the transcoding process, the original video is converted into multiple versions at different bitrates and resolutions. ABR algorithms switch between these versions in real-time, depending on the viewer’s bandwidth and device.
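The switching logic described above can be sketched in a few lines. This is a minimal illustration, not how any particular player works: the `LADDER` values, the `Rendition` type, and the 0.8 safety factor are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    height: int          # vertical resolution in pixels
    bitrate_kbps: int    # average encoded bitrate


# Hypothetical bitrate ladder; real services tune these values themselves.
LADDER = [
    Rendition("240p", 240, 400),
    Rendition("480p", 480, 1200),
    Rendition("720p", 720, 2800),
    Rendition("1080p", 1080, 5000),
]


def pick_rendition(throughput_kbps, max_height, ladder=LADDER, safety=0.8):
    """Pick the highest-bitrate rendition that fits the network and the screen."""
    # Only spend a fraction of the measured throughput to leave headroom.
    budget = throughput_kbps * safety
    candidates = [
        r for r in ladder
        if r.height <= max_height and r.bitrate_kbps <= budget
    ]
    if not candidates:
        # Nothing fits: fall back to the cheapest rendition instead of stalling.
        return min(ladder, key=lambda r: r.bitrate_kbps)
    return max(candidates, key=lambda r: r.bitrate_kbps)
```

A player would call something like `pick_rendition` again after each downloaded chunk, using its latest throughput estimate, which is what makes the quality change mid-playback.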

Benefits of ABR in Video Streaming:

Maintaining Video Quality: ABR ensures that video quality is maintained as closely as possible to the viewer’s network capacity, avoiding quality drops and buffering.

Device Compatibility: ABR makes it possible to serve content to a wide range of devices, from smartphones to 4K TVs, by adjusting the stream quality.

Examples in Popular Services: Platforms like YouTube and Netflix use ABR to provide a seamless viewing experience across different devices and network conditions.

4. The Chunking Mechanism in Streaming

What is Chunking?

Simple Explanation: Chunking divides a video file into smaller, manageable segments called chunks. Think of it as cutting a large cake into slices, making it easier to serve.

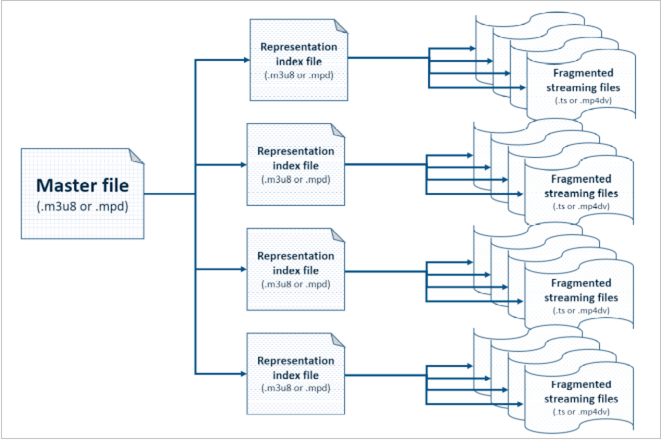

How It Works: The video is divided into chunks, and an index file (such as an .m3u8 or .mpd file) is created. This index file lists all the chunks and provides the video player with the information needed to fetch them.
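As a rough sketch of the bookkeeping involved, the function below splits a duration into named chunks. It only plans the index entries; real packagers cut the actual media at keyframes. The function name, the `chunk` prefix, and the 10-second default are assumptions for illustration.

```python
def plan_chunks(total_seconds, chunk_seconds=10.0, prefix="chunk", ext="ts"):
    """Return (file_name, duration) pairs covering the whole video.

    The last chunk is shorter when the total is not an exact multiple
    of the chunk length.
    """
    if total_seconds <= 0 or chunk_seconds <= 0:
        raise ValueError("durations must be positive")
    chunks = []
    start = 0.0
    index = 0
    while start < total_seconds:
        duration = min(chunk_seconds, total_seconds - start)
        chunks.append((f"{prefix}{index}.{ext}", duration))
        start += duration
        index += 1
    return chunks
```

For a 25-second clip this yields three entries: two 10-second chunks and a final 5-second one.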

Different Chunking Mechanisms by Protocol

You can find more mechanisms and protocols covered in our article series; reading those will give you a better understanding of this topic.

HLS (HTTP Live Streaming):

How It Works: HLS divides the video into chunks using the MPEG-TS format. The .m3u8 playlist file acts as an index, listing all the available chunks and their URLs.

Real-Life Scenario: Imagine a recipe book with individual recipes for different dishes. Each recipe represents a chunk, and the table of contents (the .m3u8 file) helps you navigate to the desired recipe.

DASH (Dynamic Adaptive Streaming over HTTP):

How It Works: DASH segments the video into chunks using formats like MP4 or WebM. The .mpd file serves as an index, detailing all available segments.

Real-Life Scenario: Think of DASH as a series of DVDs in a box set, where each DVD represents a chunk. The .mpd file is like the box's index, guiding you to the specific DVD and scene.
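To make the role of the .mpd file more concrete, here is a small sketch that reads the available quality levels from a hand-written manifest using Python's standard XML parser. The `SAMPLE_MPD` content is a simplified, made-up example (real manifests also describe segment URLs and timing), and `list_representations` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Namespace used by MPEG-DASH manifests.
MPD_NS = {"mpd": "urn:mpeg:dash:schema:mpd:2011"}

# Simplified, illustrative manifest; not taken from any real service.
SAMPLE_MPD = """<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="static" mediaPresentationDuration="PT30S">
  <Period>
    <AdaptationSet mimeType="video/mp4">
      <Representation id="high" bandwidth="2800000" width="1280" height="720"/>
      <Representation id="low" bandwidth="400000" width="426" height="240"/>
    </AdaptationSet>
  </Period>
</MPD>
"""


def list_representations(mpd_text):
    """Return the manifest's representations, sorted from lowest to highest bandwidth."""
    root = ET.fromstring(mpd_text)
    reps = []
    for rep in root.findall(".//mpd:Representation", MPD_NS):
        reps.append({
            "id": rep.get("id"),
            "bandwidth": int(rep.get("bandwidth")),  # bits per second
            "height": int(rep.get("height")),
        })
    return sorted(reps, key=lambda r: r["bandwidth"])
```

Each `Representation` corresponds to one encoded version of the video; a player chooses among them based on the bandwidth it measures.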

RTMP (Real-Time Messaging Protocol):

How It Works: Unlike segment-based protocols, RTMP sends audio and video as a continuous stream over a persistent TCP connection rather than as separately downloadable files. The connection must stay open for the stream to continue.

Real-Life Scenario: RTMP is akin to a live radio broadcast where the content is delivered continuously, and listeners tune in in real-time.

MSS (Microsoft Smooth Streaming):

How It Works: MSS uses fragmented MP4 files, with a .manifest file acting as an index. It supports adaptive bitrate streaming similar to HLS and DASH.

Real-Life Scenario: MSS can be compared to a photo album where each photo represents a chunk. The .manifest file is the album's index, organizing the photos by events.

CMAF (Common Media Application Format):

How It Works: CMAF standardizes the segment format for streaming, working across both HLS and DASH. Segments are fragmented MP4 files (commonly named with extensions such as .m4s, .cmfv, or .cmfa), and .m3u8 or .mpd files are used for indexing.

Real-Life Scenario: CMAF is like a universal adapter for different electronic devices, ensuring compatibility and seamless operation across various platforms.

MPEG-DASH:

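How It Works: MPEG-DASH is the standardized form of the DASH protocol described earlier in this list. It is codec-agnostic, so segments can carry different formats and codecs, and they are indexed by a .mpd file. It is versatile and widely supported.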

Real-Life Scenario: MPEG-DASH is like a multi-tool that can handle different jobs. The .mpd file serves as a manual, guiding you on how to use each tool.

HDS (HTTP Dynamic Streaming):

How It Works: HDS uses fragmented MP4 files, with a .f4m file serving as the index. It was developed by Adobe for Flash-based content.

Real-Life Scenario: HDS can be likened to a serialized book release, where each installment represents a chunk, and the .f4m file is a schedule or guide.

HLS with CMAF:

How It Works: This combines the HLS protocol with the CMAF format, providing lower latency and better compatibility. It uses fragmented MP4 segments (commonly .m4s or .cmfv files) and an .m3u8 file for indexing.

Real-Life Scenario: This setup is like a hybrid car that combines the best features of gasoline and electric engines. The .m3u8 file is the dashboard, showing the status of each power source.

RTSP (Real-Time Streaming Protocol):

How It Works: RTSP is designed for controlling media streaming and does not handle chunking in the same way as HTTP-based protocols. It establishes and controls the media sessions between client devices and servers.

Real-Life Scenario: RTSP is like a TV remote that controls what channel you're watching. It doesn't store content but directs the flow of information.

SRT (Secure Reliable Transport):

How It Works: SRT focuses on providing secure and reliable streaming over unreliable networks. It includes encryption and packet recovery features.

Real-Life Scenario: SRT can be compared to a secure delivery service that ensures your package (data) arrives intact, even in bad weather (network conditions).

File Creation During Chunking

HLS Example:

.m3u8 File: This file contains metadata and URLs for each chunk. It looks something like this:

    #EXTM3U
    #EXT-X-VERSION:3
    #EXT-X-TARGETDURATION:10
    #EXT-X-MEDIA-SEQUENCE:0
    #EXTINF:10,
    chunk0.ts
    #EXTINF:10,
    chunk1.ts
    #EXTINF:10,
    chunk2.ts

Explanation: The #EXTM3U tag indicates the start of the file. #EXT-X-VERSION specifies the HLS version. #EXT-X-TARGETDURATION indicates the maximum duration of each segment. #EXT-X-MEDIA-SEQUENCE provides a sequence number for the first chunk. Each #EXTINF tag specifies the duration of a chunk, followed by the chunk's file name.
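The tags described above can be read back with a few lines of code. The parser below is a minimal sketch that handles only the tags from this example playlist; `parse_media_playlist` is a hypothetical helper, and a production player would use a full HLS library that covers the rest of the specification.

```python
PLAYLIST = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:10
#EXT-X-MEDIA-SEQUENCE:0
#EXTINF:10,
chunk0.ts
#EXTINF:10,
chunk1.ts
#EXTINF:10,
chunk2.ts
"""


def parse_media_playlist(text):
    """Extract the header values and the (file_name, duration) list from a playlist."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines or lines[0] != "#EXTM3U":
        raise ValueError("not an M3U8 playlist")
    info = {"version": None, "target_duration": None,
            "media_sequence": 0, "segments": []}
    pending_duration = None  # duration from the most recent #EXTINF tag
    for line in lines[1:]:
        if line.startswith("#EXT-X-VERSION:"):
            info["version"] = int(line.split(":", 1)[1])
        elif line.startswith("#EXT-X-TARGETDURATION:"):
            info["target_duration"] = int(line.split(":", 1)[1])
        elif line.startswith("#EXT-X-MEDIA-SEQUENCE:"):
            info["media_sequence"] = int(line.split(":", 1)[1])
        elif line.startswith("#EXTINF:"):
            # Format is "#EXTINF:<duration>,<optional title>".
            pending_duration = float(line.split(":", 1)[1].split(",", 1)[0])
        elif not line.startswith("#") and pending_duration is not None:
            # A non-tag line after #EXTINF is the chunk's file name or URL.
            info["segments"].append((line, pending_duration))
            pending_duration = None
    return info
```

Calling `parse_media_playlist(PLAYLIST)` gives the player everything it needs to start fetching: three segment names, each paired with its duration in seconds.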

Why Multiple Chunking Mechanisms?

Device Compatibility: Different devices and platforms support different protocols and formats. For instance, HLS is preferred for iOS devices, while DASH offers broader compatibility across various platforms.

Network Conditions: Chunking mechanisms like HLS and DASH are designed to handle varying network speeds, ensuring smooth playback even under poor conditions.

Content Type: Live streaming might prefer RTMP for its low latency, while VOD services may use HLS or DASH for better control over quality and buffering.

Example: Watching a Video Online

Starting the Video: Upon clicking a video, the video player reads the index file (.m3u8 or .mpd). This file lists all available chunks and their respective URLs.

Red and White Progress Lines: The red line shows the part of the video you've watched, while the white line indicates buffered content. As the video plays, the player requests new chunks based on your viewing position.

Requesting Chunks: The player continuously checks the index file for the next chunk to request. For instance, if you skip to 10 minutes in a 30-minute video, the player retrieves the corresponding chunks from that point.
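The seek behaviour described above comes down to mapping a timestamp to a chunk number. A minimal sketch, assuming the player already knows each chunk's duration from the index file (`chunk_index_for` is a hypothetical helper name):

```python
from bisect import bisect_right
from itertools import accumulate


def chunk_index_for(seek_seconds, durations):
    """Return the index of the chunk that contains the given playback time."""
    if not durations:
        raise ValueError("no chunks listed")
    # ends[i] is the playback time at which chunk i finishes.
    ends = list(accumulate(durations))
    if seek_seconds < 0 or seek_seconds >= ends[-1]:
        raise ValueError("seek position is outside the video")
    # The first chunk whose end time is strictly after the seek position.
    return bisect_right(ends, seek_seconds)
```

For a 30-minute video cut into 10-second chunks, skipping to the 10-minute mark (600 seconds) lands on chunk 60, so the player can request that chunk directly without downloading the first 60.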

5. What Happens If We Don't Use Chunking?

Consequences of Not Chunking: Without chunking, viewers would need to download the entire video before playback, leading to long load times and a poor experience. It's like waiting for an entire movie to download before watching any part of it.

Real-Life Scenario: Consider attending a live sports event. Without chunking, you’d only be able to watch after the game ends and the entire footage is available, missing out on the live excitement.
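A quick back-of-the-envelope calculation shows the difference in waiting time. The numbers used here (a 30-minute video encoded at 5 Mbps, watched over a 20 Mbps connection) are assumptions picked for illustration, and the model ignores request overhead and throughput variation.

```python
def download_seconds(media_seconds, bitrate_mbps, link_mbps):
    """Time needed to download a piece of media of the given length and bitrate."""
    # Size in megabits divided by link speed in megabits per second.
    return media_seconds * bitrate_mbps / link_mbps


# Whole 30-minute file versus only the first 10-second chunk.
whole_file_wait = download_seconds(30 * 60, bitrate_mbps=5, link_mbps=20)
first_chunk_wait = download_seconds(10, bitrate_mbps=5, link_mbps=20)
```

Under these assumptions the whole file takes 450 seconds to arrive, while the first chunk is ready in 2.5 seconds, which is why chunked playback can start almost immediately.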

6. Challenges and Considerations in ABR

Bandwidth and Storage: ABR requires storing multiple versions of each video, increasing storage and bandwidth needs.

Codec and Format Handling: Different codecs and formats must be managed to ensure compatibility across devices.

Latency and Buffering: Minimizing latency and buffering is crucial for live streaming, requiring efficient chunking and delivery.
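The storage cost mentioned above can be estimated from the bitrates alone. The ladder values below are hypothetical, and the estimate ignores audio tracks, container overhead, and index files.

```python
def storage_gb(duration_seconds, bitrates_kbps):
    """Approximate storage, in decimal gigabytes, for all renditions of one video."""
    total_kilobits = duration_seconds * sum(bitrates_kbps)
    # kilobits -> kilobytes (divide by 8) -> gigabytes (divide by 1,000,000)
    return total_kilobits / 8 / 1_000_000


two_hours = 2 * 60 * 60
single_version = storage_gb(two_hours, [5000])
full_ladder = storage_gb(two_hours, [400, 1200, 2800, 5000])
```

For a two-hour video, the single 5000 kbps version needs about 4.5 GB, while the four-rung ladder needs about 8.46 GB, so in this example ABR nearly doubles the storage per title.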

7. When to Choose Different Protocols and Chunking Mechanisms

HLS: Best for Apple devices and widely supported across other platforms. Suitable for both live and on-demand content.

DASH: Offers flexibility and support for a wide range of devices and browsers. Ideal for adaptive streaming in diverse environments.

RTMP: Still used for live streaming due to its low latency. Best for live events where real-time transmission is critical.

MSS: Often used in Windows environments, compatible with Silverlight. Good for on-demand and live content within Microsoft ecosystems.

CMAF: Provides a unified format for HLS and DASH, reducing complexity. Ideal for content delivery across multiple platforms.

MPEG-DASH: A standardized approach offering extensive codec support. Suitable for adaptive streaming in various scenarios.

HDS: Used for Flash content, now less common. Suitable for legacy systems that still rely on Flash.

HLS with CMAF: Offers low latency and broad compatibility. Best for scenarios requiring quick playback start times and smooth streaming.

RTSP: Good for controlling media sessions and used in video conferencing systems. Best for scenarios requiring direct control over streaming sessions.

SRT: Ideal for streaming over unreliable networks with added security. Best for live events where network conditions are unpredictable.

8. Choosing the Right Protocol and Chunking Mechanism Based on Use Cases

Selecting the appropriate streaming protocol and chunking mechanism depends on the specific requirements and goals of your streaming service. Here’s a guide to help you choose based on different use cases:

1. Building a Service Like Netflix

Protocols: DASH and HLS with CMAF

Why: Netflix delivers content to a wide range of devices and platforms. DASH and HLS with CMAF provide adaptive bitrate streaming, ensuring that users get the best possible quality based on their device capabilities and network conditions.

Details:

DASH is versatile and widely supported, making it ideal for adaptive streaming across various platforms and devices.

HLS with CMAF combines the benefits of HLS with the unified format of CMAF, offering low latency and better compatibility for a high-quality streaming experience.

Chunking Mechanism:

DASH uses MP4 segments, and CMAF standardizes these segments for better performance and compatibility.

Example: Netflix uses ABR to adjust video quality in real-time, providing a seamless viewing experience even on varying network conditions.

2. Creating a Platform Like YouTube

Protocols: HLS and DASH

Why: YouTube needs to handle both live streaming and on-demand content, making HLS and DASH suitable for diverse scenarios.

Details:

HLS is widely used for live streaming and on-demand content, especially on Apple devices.

DASH is effective for adaptive streaming and supports a variety of codecs, ensuring broad compatibility.

Chunking Mechanism:

HLS breaks video into MPEG-TS chunks with an .m3u8 playlist, while DASH segments video into MP4/WebM chunks with an .mpd file.

Example: YouTube uses HLS for live streams and DASH for on-demand content to optimize performance and user experience across different devices.

3. Live Streaming with Low Latency

Protocols: RTMP and SRT

Why: For applications requiring real-time interactions, such as live sports events or online gaming, low latency is crucial.

Details:

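RTMP is known for its low latency. It is becoming less common for playback in modern setups, since Flash Player is no longer supported, but it is still widely used to send a live feed from an encoder to a streaming server.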

SRT provides secure and reliable streaming over unpredictable networks, making it suitable for live events where network conditions are unstable.

Chunking Mechanism:

RTMP doesn’t use chunking in the traditional sense but streams content continuously.

SRT manages packet loss and network issues, ensuring smooth live streaming.

Example: Platforms like Twitch may use RTMP for live broadcasting and SRT for improved reliability in challenging network environments.

4. Delivering Content on Legacy Systems

Protocols: HDS and RTSP

Why: For applications that need to support older systems or specific media control functionalities.

Details:

HDS is suitable for legacy systems using Flash, though it's less common now.

RTSP is used for controlling media sessions, ideal for applications like video conferencing or surveillance.

Chunking Mechanism:

HDS uses fragmented MP4 files with a .f4m index file.

RTSP focuses on session control rather than chunking.

Example: Older video conferencing systems may use RTSP for managing media streams, while content delivery systems on legacy platforms might still rely on HDS.

5. Unified Format for Multiple Platforms

Protocols: CMAF

Why: To simplify and unify the streaming process across various devices and platforms.

Details: CMAF works with both HLS and DASH, providing a standardized approach to segmenting and delivering media content.

Chunking Mechanism:

CMAF uses a unified format for segments, ensuring compatibility with both HLS and DASH.

Example: Services that need to deliver consistent content across different platforms can benefit from CMAF’s versatility and compatibility.
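The five use cases in this section can be condensed into a small lookup table. This is only a restatement of the guidance above in code form; the key names and the `suggest_protocols` helper are made up for the example.

```python
# Use case -> protocols suggested in this section (key names are illustrative).
USE_CASES = {
    "vod_multi_device": ["DASH", "HLS with CMAF"],   # a service like Netflix
    "live_and_vod": ["HLS", "DASH"],                 # a platform like YouTube
    "low_latency_live": ["RTMP", "SRT"],
    "legacy": ["HDS", "RTSP"],
    "unified_packaging": ["CMAF"],
}


def suggest_protocols(use_case):
    """Return the protocols this article suggests for a given use case."""
    try:
        return USE_CASES[use_case]
    except KeyError:
        raise ValueError(f"unknown use case: {use_case!r}") from None
```

In practice the choice also depends on the players, CDNs, and encoders you already have, so treat the table as a starting point rather than a rule.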

9. Conclusion

Summary: Video streaming protocols and chunking mechanisms are essential for delivering a seamless viewing experience. The choice of protocol depends on factors like device compatibility, network conditions, and content type. Understanding these technologies is crucial for anyone involved in content creation, delivery, or consumption.

This article provides a detailed overview of various streaming protocols and chunking mechanisms, offering real-life analogies and scenarios for better understanding. The explanation includes the creation of index files like .m3u8 in HLS, highlighting the specific data they contain and how they guide the video playback process. The content is structured to provide a comprehensive understanding of the technical aspects, making it accessible and informative for a broad audience.

Written by

ritiksharmaaa

Hi, this is Ritik Sharma. I am a software developer.