Sustained Attention Fatigue in Vulnerability Analysis

Arshan Dabirsiaghi

The predominant hobby of my teenage years and the main focus of the first 10 years of my career was a mix of very similar activities: code review, bug hunting, exploit development, and building scanners/reviewing scanner output. One particularly intense contract involved working for a well-known bank for a 6-month stretch where I did nothing but look at SAST scans all day, every day. You get to know where the tools excel and fail, how developers fix things and think about security, and most importantly -- how to reach maximum review efficiency. It was intense and I learned a lot, even though I already felt I was particularly good at all this.

Human Vulnerability Review Is Intense, Sort of Harmful, and Error Prone

But, I also got tired -- mentally, and quickly. Over time, that "tired" became utter exhaustion. I got lazy, more distracted, and irritable. You get tempted to mass-close similar-looking issues. In truth, that was the only way to get through a scan with thousands of findings. You make mistakes. Hell, even the best people in this business make mistakes on their best days because the subject matter is full of complexity. And, most companies couldn't even find people with combined security+developer skill sets to put through this wringer -- how screwed were those companies?

But anyway, during those 6 months, I was doing my favorite thing, and I couldn't possibly sustain it.

There's a term for this phenomenon: directed attention fatigue (DAF). The bottom line is that doing intense tasks of a certain type for a sustained time will degrade your performance and make you no fun to be around.

DAF can also be brought about by performing concentration-intensive tasks such as puzzle-solving or learning unfamiliar ideas.

Triaging vulnerability scans feels like an activity designed by a malicious alien to explore how DAF affects humans. Puzzle solving is exactly what triaging a vulnerability report is. You're trying to understand if the report points to an exploitable code path. The pieces to that puzzle include the code, the framework, the expected security controls or risk factors, the architecture of the application, the data involved, the business domain, and more.

Orienting your way around a new codebase is a daunting challenge in and of itself, let alone in the context of determining subtle clues about its workings in order to determine the realness and seriousness of a vulnerability. The prospect of having to learn a new codebase every single day made me feel a sense of dread that was hard to live with.

And, by the way -- if you're wrong and miss something, maybe the company you're working for goes boom 🤷‍♂️. To state the obvious: humans shouldn't do this task all day, every day. They need a mix of lighter work, more breaks, and more time outside.

Agentic AI-Based Vulnerability Triage

But, technology can also help. I realized this when I was trying to fix SAST results automatically for developers at my job at Pixee. In order to decide which fixes to send to developers, I was using AI to perform parts of my old job, because I didn't want to send pull requests for issues that were obviously false positives. But, I wasn't actually delivering that triage as value to developers or security practitioners. We productized this recently and you can see our announcement here.

I want to spend more time in another piece soon about the how of Pixee's Agentic AI solution, because I think the architecture is interesting and it deserves its own post. But, first I want to show you a sample result so we can get back to solving for the DAF. I know a lot of us in the hacker community like to think of ourselves as artists, and there is art in many exploits. But, there's also a lot of predictable, boring analysis that can be taught, to both people and LLMs, but not to the scanner tools themselves, mostly around these two things:

What are the security controls you expect to see?

Are there any contextual risk factors for this vulnerability class?

An example: SSRF

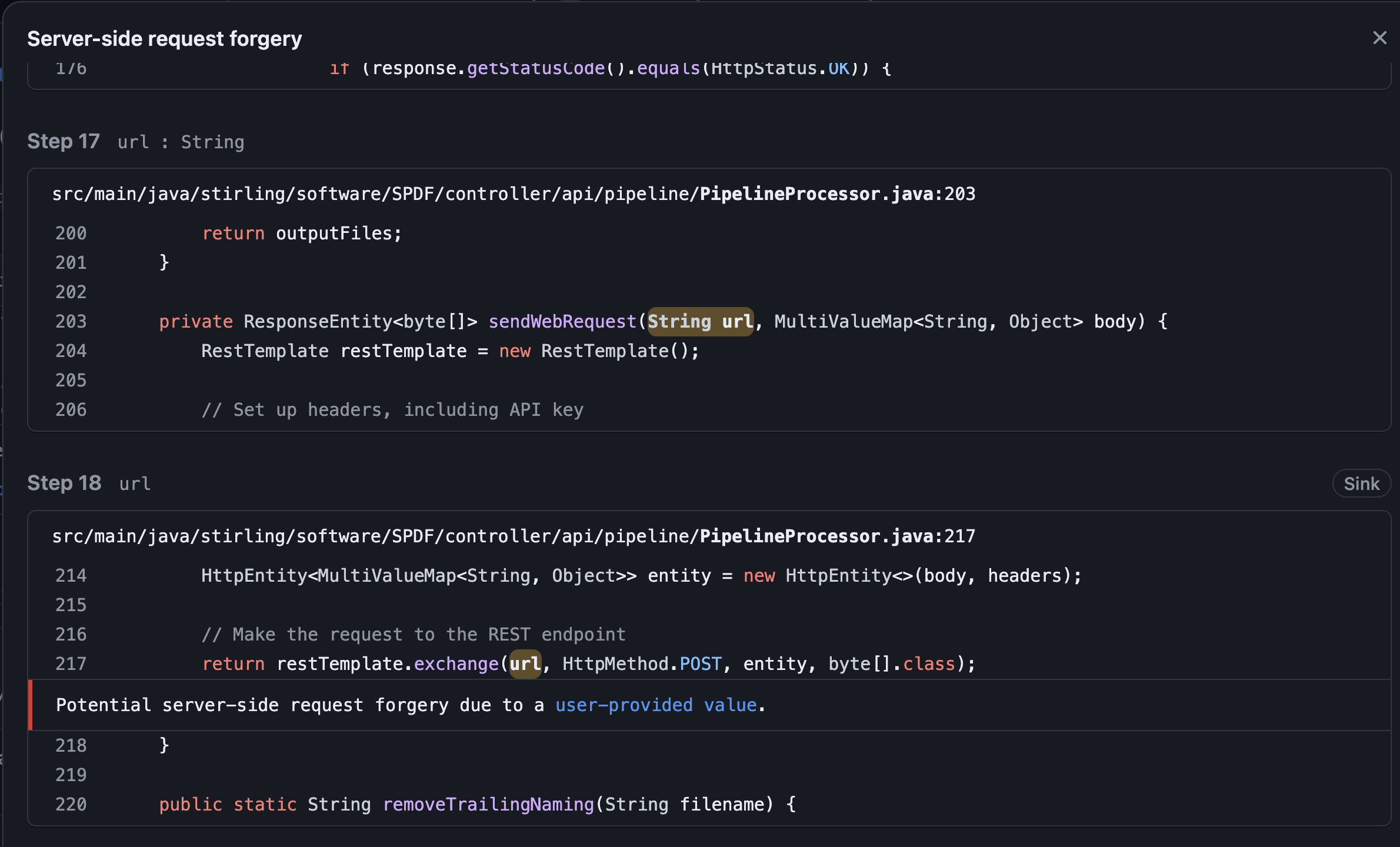

In 2021 I wrote about how SAST can't practically find SSRF with any precision. While this is still true, it is interesting that automatic triage tools can complement these tools to figure this out in many cases. Consider this "Critical" CodeQL-reported SSRF vulnerability in some open-source code:

Nowhere in the 18-step dataflow shown in the GitHub UI is there anything that looks like the expected security controls, so a cursory glance suggests this is a true positive.

However, our custom-built AI agent, which we've taught to triage SSRF like I would, has this to say:

In the provided code, the URL is composed as follows:

url = getBaseUrl() + operation.

The getBaseUrl() method constructs a base URL using the application's context path and port, which are fixed and not influenced by user input. The operation string, however, is derived from the PipelineOperation object, which could potentially be influenced by user input. Given this composition, the user can influence the path, querystring, and fragment by providing a string like operation?more_stuff. This means that while the scheme, host, and port are controlled by the application, the path, querystring, and fragment can be manipulated by the user.

Change Severity from CRITICAL -> MEDIUM

Hmm, let's look at the code and see if it's right:

    String url = getBaseUrl() + operation; // operation is tainted
    ...
    private String getBaseUrl() {
        String contextPath = servletContext.getContextPath();
        String port = SPdfApplication.getStaticPort();
        return "http://localhost:" + port + contextPath + "/";
    }

Yes, the AI is right. The CodeQL SARIF is missing events, and adjacent to the reported data flow we can confirm what the AI saw. The tainted value is appended after the initial part of the URL has already been constructed. Controlling the protocol, host, or port is very different from controlling only the path, querystring, etc., so the severity of this issue is lower.
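To make that point concrete, here is a minimal sketch of the same URL composition, using java.net.URI to show which parts a tainted operation string can reach. The class name, the port value 8080, and the context path /app are all assumptions for illustration; they are not taken from the reviewed project, and this is not how the AI agent itself works.

```java
import java.net.URI;

// Hypothetical stand-in for the reviewed code. PORT and CONTEXT_PATH are
// assumed values; the real application reads them from its own configuration.
class SsrfPrefixDemo {
    static final String PORT = "8080";          // assumption
    static final String CONTEXT_PATH = "/app";  // assumption

    // Mirrors the shape of getBaseUrl() from the article: fixed scheme, host, and port.
    static String getBaseUrl() {
        return "http://localhost:" + PORT + CONTEXT_PATH + "/";
    }

    // The tainted value is appended after the authority and the first path segment.
    static URI compose(String operation) {
        return URI.create(getBaseUrl() + operation);
    }

    public static void main(String[] args) {
        String[] payloads = {
            "convert?more_stuff",  // adds a querystring
            "@evil.example/x",     // tries (and fails) to swap the host
            "../admin#frag"        // walks out of the context path, adds a fragment
        };
        for (String payload : payloads) {
            // normalize() collapses "../" segments so the effective path is visible
            URI u = compose(payload).normalize();
            System.out.println(payload
                + " -> host=" + u.getHost()
                + " port=" + u.getPort()
                + " path=" + u.getPath()
                + " query=" + u.getQuery()
                + " fragment=" + u.getFragment());
        }
    }
}
```

In each case the host stays localhost and the port stays the configured one, while the path, querystring, and fragment follow the attacker's input. Note that the "../" payload still escapes the context path, which is part of why the finding is downgraded rather than dismissed.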

Also, the AI never gets tired, so it will:

... not become cranky or make mistakes due to attention fatigue.

... give every analytic its own proper, unbiased analysis.

It's not just about right-sizing the severity. It's also about talking with developers, suggesting attacks, quickly surfacing the important factors for a more senior person to review, and more. I know our first instinct with these tools is to de-prioritize issues because there's so much noise, but it can also prioritize issues where none of the expected controls are present.

AI Isn't Perfect, But It's Very Helpful

And, of course, the AI is still vulnerable to nondeterminism, occasional misinterpretation, or arguing the facts to a slightly different conclusion -- just like a human. But, it scales, it's generally right, and it generally makes reviewing a finding much, much faster.

I look forward to saving humans from DAF, helping teams find the right analytics to act on, and scaling appsec in the era of robots writing code!

Written by

Arshan Dabirsiaghi

CTO @ Pixee.ai (@pixeebot on GitHub), ex Chief Scientist @ Contrast Security. Security researcher pretending to be a software executive.