How to Easily Parse JSON into Oracle Database Tables

Tomas Kucharzyk

Tomas Kucharzyk

In my previous article, I described my first encounter with the new Oracle Database 23ai functionality called JSON-Relational Duality Views.

It is a very useful feature, especially if you have to parse or create JSON objects in your Oracle database.

Today I will try to write a custom procedure, that will make JSON parsing even easier.

Solving the problem

In my opinion, it is not worth it to write a custom procedure if you use small JSON objects with not very complicated hierarchy. But if you use JSON a lot and you have to maintain multiple user-defined types and duality views, it may be worth it to create a "Swiss knife" procedure, that will do the work for you.

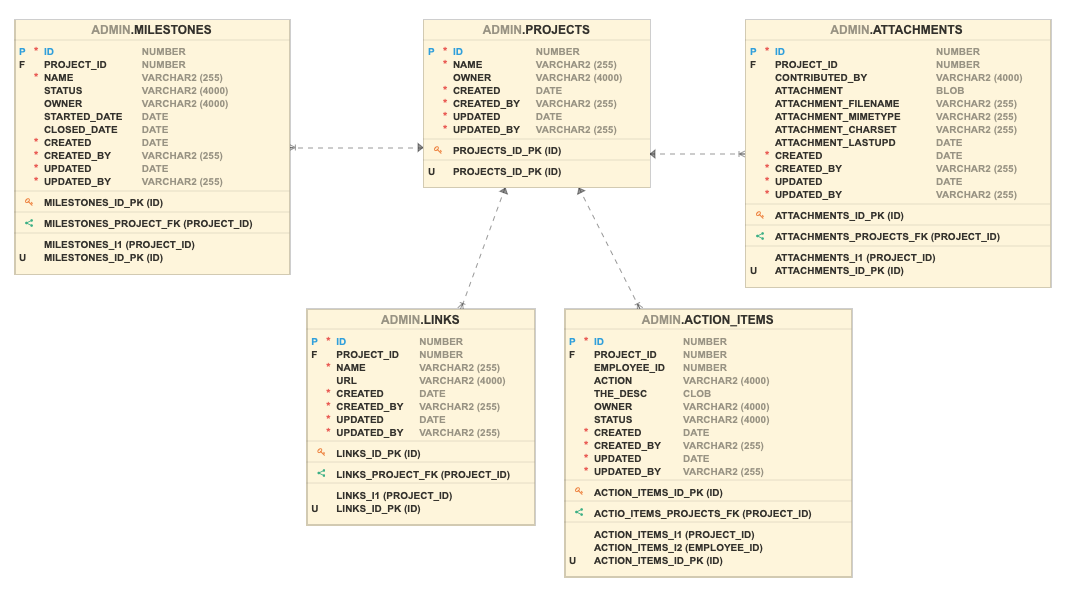

The tables I will work on are Project Milestones table examples from Quick SQL in APEX.

The JSON representation of a typical project will have a main JSON object with few attributes and then arrays for milestones, attachments, links, and action items.

{

"projects": [

{

"id": "1",

"name": "Pharmacy project",

"owner": "Tomas",

"created_by": "TK",

"links": [

{

"name": "Rachel Morris",

"url": "http://datefjo.do/memwez"

}

],

"action_items": [

{

"project_id": "1",

"action": "Action 2",

"the_desc": "N/A",

"owner": "N/A",

"status": "completed"

}

],

"milestones": [

{

"project_id": "1",

"name": "Milestone 4",

"status": "completed",

"owner": "N/A",

"started_date": "01.02.2024",

"closed_date": "01.04.2024"

}

],

"attachments": [

{

"project_id": "1",

"contributed_by": "TK"

}

]

}

]

}

Creating the procedure

Before I start to code the procedure, I want to create criteria that I want to accomplish.

✅ Procedure input will be just JSON

✅ Parse hierarchical JSON representing multiple tables

✅ Get and validate table and column names automatically

✅ Insert the data into tables in hierarchical order

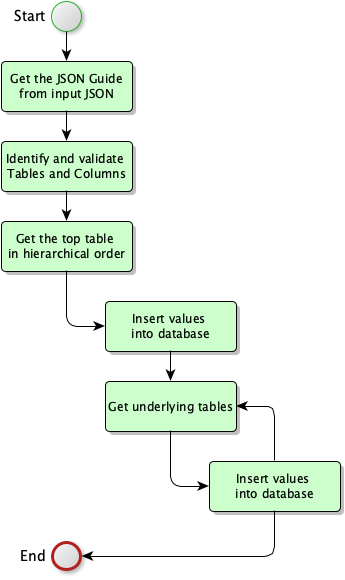

I created a diagram of the steps that are necessary to parse the JSON into tables. It looks as below.

JSON structure and validation

First I use a JSON_DATAGUIDE function to get the structure of input JSON, then I run a few queries to check if the tables (arrays) exist in the database and insert all the information into a custom json_structure_to_process table.

The table will hold the structure for me, so I don't have to query it multiple times from input JSON if I need it.

Processing the structure and data

In the next step, I take the object that's the highest in the hierarchy. In my case, it is a "projects" array.

I run a simple loop where I create a dynamic SQL query, that will generate an insert with select from a JSON_TABLE. The query for the projects table looks just like below.

insert into projects (id,name,owner,created_by)

select *

from json_table('input_json','$.projects[*]'

columns (

id VARCHAR2(11) PATH '$.id',

name VARCHAR2(26) PATH '$.name',

owner VARCHAR2(18) PATH '$.owner',

created_by VARCHAR2(12) PATH '$.created_by'

)

);

After this query is executed the loop will pick up on underlying arrays (tables) and create similar inserts for them.

In my case, the other arrays are Links, Action items, Milestones, and Attachments. The queries created by the procedure are below.

--Links

insert into links (url,name)

select *

from json_table('input_json','$.projects.links[*]'

columns (

url VARCHAR2(42) PATH '$.url',

name VARCHAR2(26) PATH '$.name'

)

);

--Action items

insert into action_items (owner,action,status,the_desc,project_id)

select *

from json_table('input_json','$.projects.action_items[*]'

columns (

owner VARCHAR2(14) PATH '$.owner',

action VARCHAR2(18) PATH '$.action',

status VARCHAR2(26) PATH '$.status',

the_desc VARCHAR2(14) PATH '$.the_desc',

project_id VARCHAR2(11) PATH '$.project_id'

)

);

--Milestones

insert into milestones (name,owner,status,project_id,closed_date,started_date)

select *

from json_table('input_json','$.projects.milestones[*]'

columns (

name VARCHAR2(26) PATH '$.name',

owner VARCHAR2(14) PATH '$.owner',

status VARCHAR2(26) PATH '$.status',

project_id VARCHAR2(11) PATH '$.project_id',

closed_date DATE PATH '$.closed_date',

started_date DATE PATH '$.started_date'

)

);

--Attachments

insert into attachments (project_id,contributed_by)

select *

from json_table('input_json','$.projects.attachments[*]'

columns (

project_id VARCHAR2(11) PATH '$.project_id',

contributed_by VARCHAR2(12) PATH '$.contributed_by'

)

);

Most of my columns are VARCHAR2, but you can probably use any type of column because the type is automatically detected by JSON_DATAGUIDE. But you can always tune your procedure to be more precise if you need some specific type of column.

After I run through all the objects in JSON and all the data is inserted, the loop is done.

Cons and Complications

As you already noticed, the biggest con of this solution will be probably the naming of the arrays and columns. It has to match the table and column names. But you can always do some kind of matching function that will not demand a 100% accurate table or column name.

The second compromise will be the performance. It will take a lot of time if you have a big input JSON with a complicated hierarchy.

Pros and advantages

The biggest advantage of using the custom procedure will be definitely the saved time in creating and maintaining the User-defined type or JSON duality views. This solution will allow you just drop a JSON into a procedure, without any previous preparation or mapping.

So it depends on what time you value more, the time of the procedure that will parse the JSON? Or the development and maintenance time?

Talk is cheap, show me the code!

All the code I used for this article is available here.

The procedure is very simple and I did not spend much time on it, so if you will want to use it for your case, it will need some tuning here and there to fit your needs.

Please let me know what you think about this solution! And thank you for reading my article.

Subscribe to my newsletter

Read articles from Tomas Kucharzyk directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by