How to do Comparative Analysis ?

Jyoti Maurya

Jyoti Maurya

Comparative analysis in research within Machine Learning (ML) and Artificial Intelligence (AI) involves evaluating different algorithms, models, or approaches to determine their effectiveness, efficiency, and suitability for specific tasks or problems and to identify patterns, differences, or similarities.

Unlike general analysis, which might focus on understanding a single entity or phenomenon in depth, comparative analysis explicitly seeks to draw comparisons across multiple entities to uncover insights that might not be apparent when examining them individually.

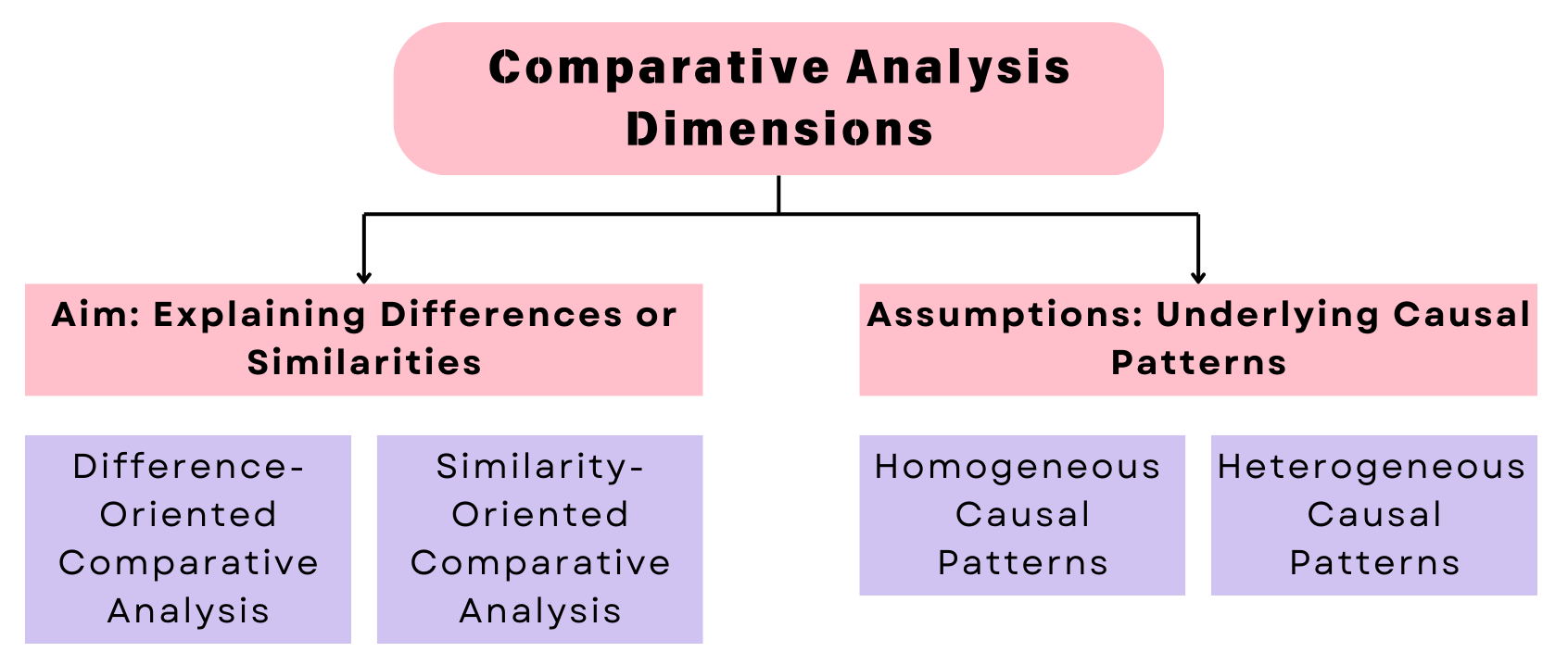

Dimensions of Comparative Analysis

Comparative analysis can be categorized based on two dimensions:

Aim: Explaining Differences or Similarities

Assumptions: Underlying Causal Patterns

Aim: Explaining Differences or Similarities

Difference-Oriented Comparative Analysis

Objective: To explain why entities differ from each other.

Approach: Focuses on identifying the factors that lead to variations among the entities.

Example:

Comparing different urban housing policies to understand why some cities have higher rates of homelessness than others.

Comparing the performance of decision trees, random forests, and gradient boosting on a credit scoring dataset to understand why certain algorithms perform better in terms of accuracy and robustness against overfitting.

Similarity-Oriented Comparative Analysis

Objective: To explain why entities are similar to each other.

Approach: Focuses on identifying common factors or conditions that lead to similar outcomes among the entities.

Example:

Comparing different educational systems to understand why students in several countries achieve similar levels of academic performance.

Comparing different convolutional neural network (CNN) architectures (e.g., VGG, ResNet, Inception) on the MNIST dataset to understand why they all achieve high accuracy in digit recognition, focusing on the common features and techniques that contribute to their success.

Assumptions: Underlying Causal Patterns

Homogeneous Causal Patterns

Objective: Assumes that the same causal patterns apply across all entities being compared.

Approach: Looks for consistent relationships and generalizable findings.

Example:

Assuming that the same factors (like income level, education, and employment) affect health outcomes similarly in different regions.

Analyzing the impact of hyperparameter tuning (e.g., learning rate, batch size, dropout rate) on the performance of various deep learning models (e.g., CNNs, RNNs, GANs) to determine if there are consistent effects of these parameters across different types of neural networks.

Heterogeneous Causal Patterns

Objective: Assumes that different entities might have different causal patterns.

Approach: Focuses on identifying context-specific factors and unique relationships.

Example:

Investigating how different cultural contexts influence the effectiveness of marketing strategies in various countries.

Investigating how different types of feature engineering (e.g., manual feature selection, automated feature extraction using PCA, or feature generation using deep learning embeddings) impact the performance of ML models (e.g., SVM, random forests, neural networks) across different domains (e.g., image recognition, text classification, financial forecasting)

Following image shows the types of comparative analysis according to whether the starting point is similarities or differences

Research Designs for Comparative Analysis

To effectively conduct comparative analysis, specific research designs are necessary. These designs must be tailored to the type of comparative analysis being conducted.

Case Study Design

Use: Suitable for in-depth analysis of a few entities (models or algorithms).

Approach: Detailed examination of each case to understand unique and shared factors.

Example: Conducting a detailed examination of how transfer learning is implemented in two specific deep learning models (e.g., BERT for NLP tasks and ResNet for image classification) to understand the unique and shared factors that contribute to their success.

Cross-Sectional Design

Use: Suitable for analyzing many entities (models or algorithms) at a single point in time.

Approach: Collects data from a large number of cases to identify patterns and correlations.

Example: Comparing the performance of multiple ML algorithms (e.g., logistic regression, SVM, k-NN, and neural networks) on a benchmark dataset like UCI Machine Learning Repository to identify patterns and correlations in their performance metrics

Longitudinal Design

Use: Suitable for analyzing entities (models or algorithms) over a period of time.

Approach: Tracks changes and developments in entities to understand causal relationships over time.

Example: Tracking the performance of a reinforcement learning agent in a dynamic environment (e.g., OpenAI Gym) over several iterations to understand how the agent’s policy evolves and improves with experience

Experimental Design

Use: Suitable for controlled comparisons with manipulated variables.

Approach: Conducts experiments to test specific hypotheses and causal relationships.

Example: Conducting experiments to test the effectiveness of different regularization techniques (e.g., L1, L2, dropout) on neural networks' performance and comparing the results to determine which technique provides the best balance between preventing overfitting and maintaining model accuracy.

Problems in Comparative Analysis

Comparative analysis, while powerful, faces several challenges:

Selection Bias: The choice of entities to compare might not be representative, leading to skewed results.

- Example: Only comparing high-performance neural networks without considering simpler algorithms might overlook valuable insights from less complex models

Measurement Issues: Different entities might use different metrics, making direct comparisons difficult.

- Example: Comparing models using accuracy versus F1-score can be challenging if the dataset is imbalanced.

Contextual Differences: Context-specific factors might affect the entities being compared, complicating the identification of generalizable patterns.

- Example: A model performing well on image classification might not necessarily excel in a different context, such as text classification.

Complex Causality: Causal relationships might be complex and multidimensional, making it hard to isolate specific factors.

- Example: Understanding the interplay between different hyperparameters and their combined effect on a model's performance can be intricate.

Data Availability: Lack of consistent and comparable data across entities can hinder comprehensive analysis.

- Example: Comparing models trained on different datasets with varying qualities and quantities of data can lead to misleading conclusions.

Comparative analysis offers valuable insights by systematically comparing entities to explain differences or similarities, considering homogeneous or heterogeneous causal patterns. The chosen research design must align with the type of comparative analysis, and researchers must navigate various challenges to ensure robust and meaningful conclusions

Subscribe to my newsletter

Read articles from Jyoti Maurya directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Jyoti Maurya

Jyoti Maurya

I create cross platform mobile apps with AI functionalities. Currently a PhD Scholar at Indira Gandhi Delhi Technical University for Women, Delhi. M.Tech in Artificial Intelligence (AI).