Streaming Responses via AWS Lambda

Jones Zachariah Noel N

Originally posted on The Serverless Terminal blog - Streaming Responses via AWS Lambda.

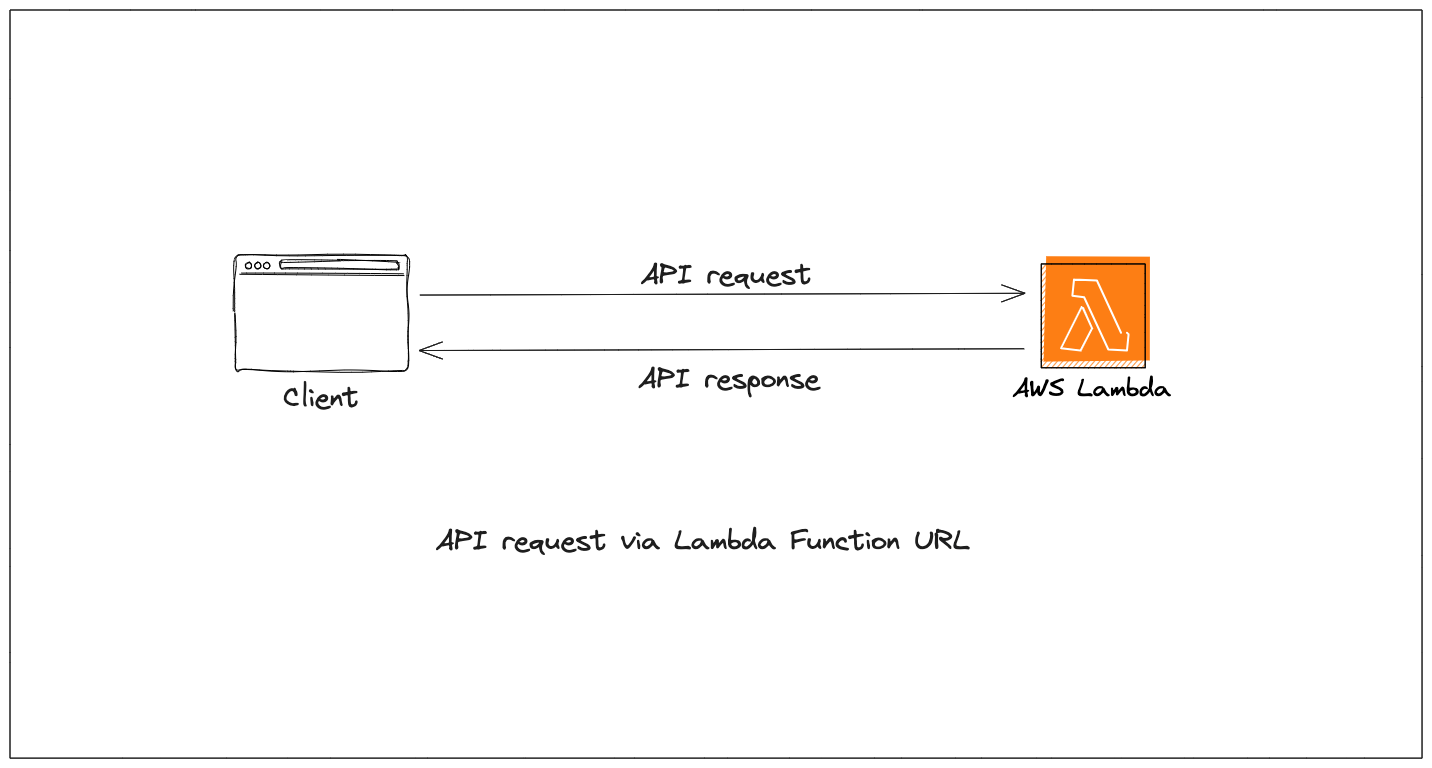

Building APIs on a serverless stack often involves AWS Lambda Function URLs. Traditionally, the client invokes the Lambda function and receives the response only after the function has completed execution.

For large payloads, this pattern introduces latency that can negatively affect the application's performance.

In this blog, we will look at how Lambda's Response Streaming helps and identify when it is ideal to use Response Streaming for your workloads.

Lambda Response Streaming

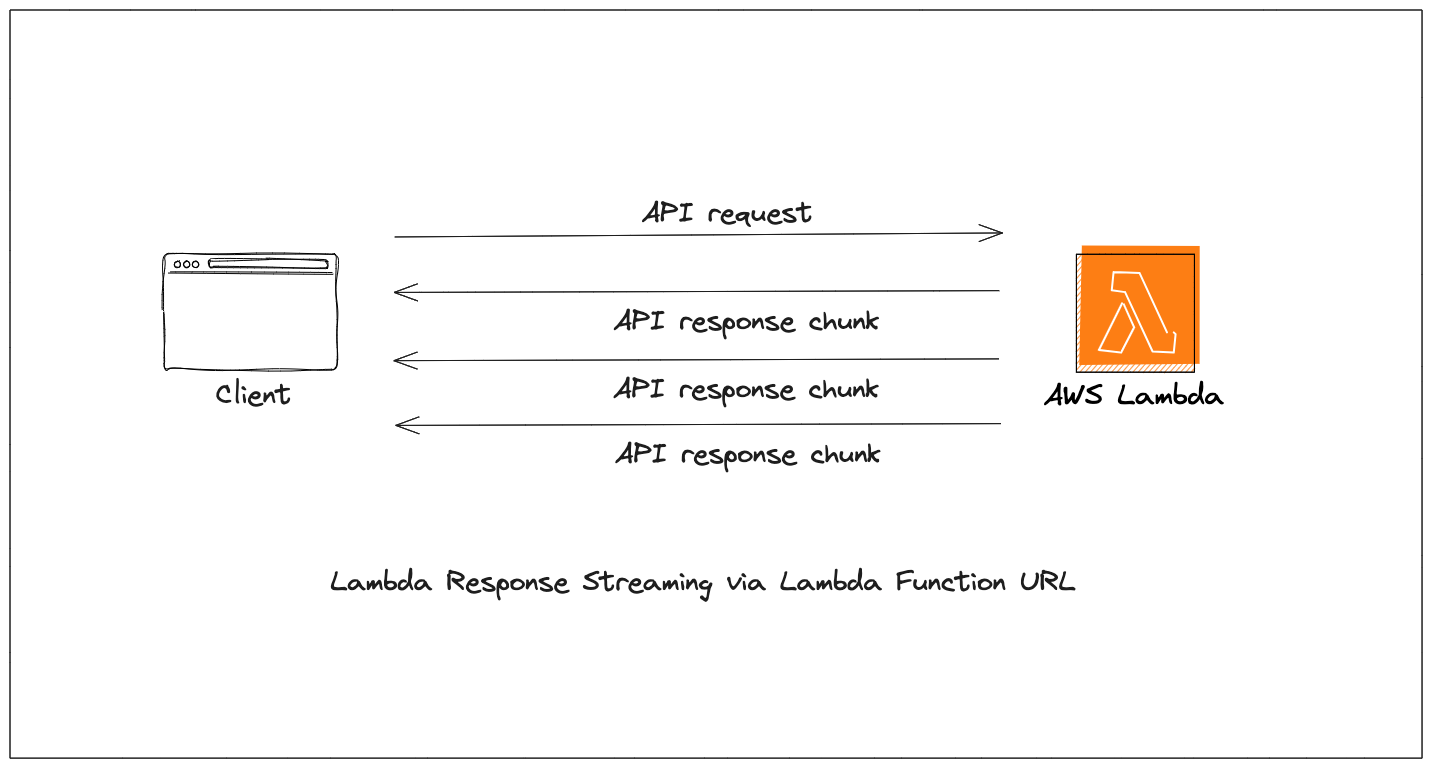

AWS Lambda launched support for response streaming, a new invocation pattern that allows the Lambda function to progressively send the response back to the client in chunks of data.

What does this pattern bring in?

Improved time to first byte (TTFB): the client receives chunks of data as and when they are available, which reduces the perceived latency of API requests.

Larger payloads: a streamed response can be up to 20MB (a soft limit) without the need to send it as one whole response.

Asynchronous data streaming: the function sends parts of the API response as the data becomes available.

Gotchas of this pattern

Runtime support: Among the managed runtimes, AWS Lambda supports Response Streaming only on NodeJS. With custom runtimes, you can leverage the Runtime API to implement it.

Payload size: The maximum response payload size is 20MB, a soft limit that you can request to raise via AWS Support. The first 6MB of the response streams without bandwidth constraints; once the data exceeds 6MB, throughput is capped at 2MB/s.

Network cost: Similar to the payload limits, the first 6MB of each response is free; data streamed from the Lambda function beyond that incurs data transfer charges.

Function timeout: It goes without saying that you need to configure the right timeout for the Lambda function. The default of 3s may be too short, while anything beyond 60s can result in the API client terminating with a timeout error.

API endpoints: Response Streaming is available only with Lambda Function URLs and expects the Function URL to use RESPONSE_STREAM as the invoke mode. APIs are best managed with AWS API Gateway or Application Load Balancer (ALB), neither of which supports response streaming yet.
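To get a feel for the bandwidth cap described under payload size, the arithmetic can be sketched as below. This is only a rough estimate: it assumes the first 6MB is delivered without throttling and everything after it flows at a steady 2MB/s. The function name `estimateThrottledSeconds` is hypothetical, and real transfer times also depend on network conditions.

```javascript
// Rough estimate of the time spent in the bandwidth-capped part of a streamed response.
// Assumptions: the first 6 MB is uncapped; the remainder flows at a steady 2 MB/s.
const UNCAPPED_MB = 6;
const CAPPED_RATE_MB_PER_S = 2;

function estimateThrottledSeconds(totalMB) {
  // Only the portion beyond the first 6 MB is subject to the cap.
  const cappedMB = Math.max(0, totalMB - UNCAPPED_MB);
  return cappedMB / CAPPED_RATE_MB_PER_S;
}

console.log(estimateThrottledSeconds(4));  // 0
console.log(estimateThrottledSeconds(20)); // 7
```

Under these assumptions, a full 20MB response spends about 7 seconds in the capped phase alone, which is worth keeping in mind when choosing the function timeout.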

Building AWS Lambda with Response Streaming

AWS Lambda exposes the response stream through Node's Writable Stream API, so you can use write() to write into the stream, or use pipeline() from Node's stream module (promisified with util) to pipe a readable stream into it.

Node's write()

Response Streaming with write() writes directly into the stream, and whatever is written to the stream is sent to the client as part of the response. With this method, you have to end the stream manually.

```javascript
exports.handler = awslambda.streamifyResponse(
  async (event, responseStream, context) => {
    // HTTP status code and headers for the streamed response.
    const httpResponseMetadata = {
      statusCode: 200,
      headers: {
        "Content-Type": "text/html",
      }
    };

    // Wrap the stream so the metadata above is attached to the HTTP response.
    responseStream = awslambda.HttpResponseStream.from(responseStream, httpResponseMetadata);

    responseStream.write("<html>");
    responseStream.write("<p>Hello!</p>");
    responseStream.write("<h1>Let's start streaming response</h1>");
    // Pause for a second between writes so the chunks arrive progressively.
    await new Promise(r => setTimeout(r, 1000));
    responseStream.write("<h2>Serverless</h2>");
    await new Promise(r => setTimeout(r, 1000));
    responseStream.write("<h3>Is</h3>");
    await new Promise(r => setTimeout(r, 1000));
    responseStream.write("<h3>Way</h3>");
    await new Promise(r => setTimeout(r, 1000));
    responseStream.write("<h3>More</h3>");
    await new Promise(r => setTimeout(r, 1000));
    responseStream.write("<h3>Mature</h3>");
    await new Promise(r => setTimeout(r, 1000));
    responseStream.write("<p>DONE!</p>");

    // write() does not close the stream, so end it explicitly.
    responseStream.end();
  }
);
```
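Because the response stream follows Node's Writable stream API, `write()` returns `false` when the internal buffer is full, and the `drain` event signals when it is safe to write again. For chunks larger than the ones above, a small helper that waits for `drain` may help keep memory in check. This is a minimal sketch rather than a required pattern: `writeChunk` is a hypothetical helper name, and the `PassThrough` stream is only a local stand-in for Lambda's `responseStream` so that the snippet runs outside Lambda.

```javascript
const { PassThrough } = require('stream');

// Hypothetical helper: write one chunk and wait for 'drain' if the buffer is full.
function writeChunk(stream, chunk) {
  return new Promise((resolve) => {
    if (stream.write(chunk)) {
      resolve();
    } else {
      stream.once('drain', resolve);
    }
  });
}

// Local demo; inside a Lambda handler you would pass the real responseStream.
async function demo() {
  // A tiny highWaterMark forces write() to report a full buffer.
  const fakeResponseStream = new PassThrough({ highWaterMark: 8 });
  const received = [];
  fakeResponseStream.on('data', (chunk) => received.push(chunk.toString()));
  const ended = new Promise((resolve) => fakeResponseStream.on('end', resolve));

  await writeChunk(fakeResponseStream, '<p>Hello!</p>');
  await writeChunk(fakeResponseStream, '<p>DONE!</p>');
  fakeResponseStream.end(); // with write(), ending the stream is still manual

  await ended;
  return received.join('');
}
```

Calling `demo()` resolves with the concatenated chunks once the stand-in stream has ended.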

When you publish the above Lambda function and invoke the Function URL via a web browser, you can notice how the streaming responses are received.
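Outside the browser, the same progressive behavior can be observed from a small Node.js client. The sketch below assumes Node.js 18 or later (for the global `fetch` and `TextDecoder`) and a placeholder Function URL; `readChunks` and `FUNCTION_URL` are hypothetical names used only for illustration.

```javascript
// Collects decoded text chunks from any async-iterable byte stream,
// calling onChunk as each chunk arrives.
async function readChunks(body, onChunk = () => {}) {
  const decoder = new TextDecoder();
  const chunks = [];
  for await (const bytes of body) {
    // stream: true keeps multi-byte characters intact across chunk boundaries.
    const text = decoder.decode(bytes, { stream: true });
    chunks.push(text);
    onChunk(text);
  }
  // Flush any bytes the decoder is still holding.
  const tail = decoder.decode();
  if (tail) {
    chunks.push(tail);
    onChunk(tail);
  }
  return chunks;
}

// Usage inside an async function (placeholder URL, replace with your own):
// const FUNCTION_URL = 'https://<url-id>.lambda-url.<region>.on.aws/';
// const response = await fetch(FUNCTION_URL);
// await readChunks(response.body, (text) => console.log(new Date().toISOString(), text));
```

Logging a timestamp per chunk makes the one-second gaps between the handler's writes visible on the client side.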

Node's pipeline()

pipeline() comes from Node's stream module and handles the end of the stream automatically. Promisify it with util.promisify and call pipeline(requestStream, responseStream) to write data into the stream.

```javascript
import util from 'util';
import stream from 'stream';

const { Readable } = stream;
// Promise-based wrapper around Node's callback-style stream.pipeline().
const pipeline = util.promisify(stream.pipeline);

export const handler = awslambda.streamifyResponse(async (event, responseStream, _context) => {
  // HTTP status code and headers for the streamed response.
  const httpResponseMetadata = {
    statusCode: 200,
    headers: {
      "Content-Type": "text/html",
    }
  };

  responseStream = awslambda.HttpResponseStream.from(responseStream, httpResponseMetadata);

  // Note: each assignment below replaces the previous readable,
  // so only the last one is piped to the client.
  let requestStream = Readable.from(Buffer.from(new Array(1024 * 1024).join('🚀')));
  await new Promise(r => setTimeout(r, 1000));
  requestStream = Readable.from(Buffer.from(new Array(1024 * 1024).join('⚡️')));
  await new Promise(r => setTimeout(r, 1000));
  requestStream = Readable.from(Buffer.from(new Array(1024 * 1024).join('🚀 Serverless is not dead!')));

  // pipeline() pipes the readable into the response and ends the stream when done.
  await pipeline(requestStream, responseStream);
});
```
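To see how `pipeline()` closes the destination on its own, the following local sketch pipes a `Readable` built from an async generator into a stand-in writable. Nothing here is Lambda-specific: `generateParts` and `collectingStream` are hypothetical names, and inside a handler the destination would be `responseStream`. It uses `pipeline` from `stream/promises`, which on recent Node.js versions is equivalent to promisifying `stream.pipeline` as done above.

```javascript
const { Readable, Writable } = require('stream');
const { pipeline } = require('stream/promises');

// Hypothetical source: yields parts over time, like data arriving from upstream.
async function* generateParts() {
  yield 'Serverless ';
  await new Promise((r) => setTimeout(r, 100));
  yield 'is not dead!';
}

// Stand-in for responseStream that records what it receives.
function collectingStream(sink) {
  return new Writable({
    write(chunk, _encoding, callback) {
      sink.push(chunk.toString());
      callback();
    },
  });
}

async function run() {
  const sink = [];
  const destination = collectingStream(sink);
  // pipeline() resolves once the source is drained and the destination has finished;
  // no explicit destination.end() call is needed.
  await pipeline(Readable.from(generateParts()), destination);
  return { text: sink.join(''), finished: destination.writableFinished };
}
```

Feeding the stream from a single generator also means every part reaches the destination, since there is only one readable to pipe.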

Let's publish the Lambda function and invoke it via the Lambda Function URL.

IaC to publish Lambda Function URL

While deploying and publishing the Lambda function, keep in mind to use the right Timeout and also FunctionUrlConfig with InvokeMode set as RESPONSE_STREAM.

```yaml
Resources:
  responseStreamingLambda:
    Type: AWS::Serverless::Function
    Properties:
      Timeout: 20
      Handler: index.handler
      Runtime: nodejs20.x
      Architectures:
        - x86_64
      FunctionUrlConfig:
        AuthType: NONE
        InvokeMode: RESPONSE_STREAM
```

When is Response Streaming the best fit?

It's important to understand when it is ideal to use Response Streaming. Some of the use cases include:

| Use Case | Why it's the best fit? |
| --- | --- |
| Real-time chat applications | Improved user experience with low latency. |
| Streaming large files from S3 | Enables downloading large (>6MB) S3 objects and receiving the data as and when it is available. |
| Server-side rendering | When using SSR with incremental updates, it improves the time to first byte (TTFB) while parts of the page render based on the data. |
| Streaming data from IoT devices | Enables near real-time monitoring of data without delays and latency. |

Wrap up!

Lambda's Response Streaming is ideal for web applications and monitoring systems where near real-time data is crucial. However, keep the limitations in mind: you need to use a Lambda Function URL with the NodeJS runtime (or a custom runtime), and be aware of the constraints on cost and network bandwidth.

Written by

Jones Zachariah Noel N

A Developer Advocate experiencing the DevRel ecospace at Freshworks. Previously part of the start-up Mobil80 Solutions based in Bengaluru, India, where I enjoyed and learnt a lot from the multiple caps that I got to wear, transitioning from a full-stack developer to Cloud Architect for Serverless! An AWS Serverless Hero who loves to interact with the community, which has helped me learn and share my knowledge. I write about AWS Serverless and also talk about new features and announcements from AWS. Speaker at various conferences globally, including AWS Community, AWS Summit, and AWS DevDay, sharing about Cloud, AWS, Serverless and the Freshworks Developer Platform.