Understanding Transfer Learning: Benefits and Practical Applications in Pneumonia Detection

Sisir Dhakal


Transfer learning has revolutionized modern machine learning and computer vision by letting models reuse knowledge gained on prior tasks to solve new problems. This speeds up training and improves model performance, especially when datasets are small. In this article, we explore how transfer learning has changed research, walk through a practical application to pneumonia classification, and discuss ways to maximize its effectiveness.

What is Transfer Learning?

Transfer learning is a deep learning approach in which researchers or practitioners take a model pre-trained on a large dataset and reuse it to solve a new but related problem. Because the model starts from features (weights) it has already learned, it needs less task-specific training data and significantly less training time. The approach works less well when the original and new tasks differ greatly, since the learned features may not carry over. By fine-tuning pre-trained models to the specific needs of the new task, transfer learning improves performance while cutting computational cost.
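As a minimal sketch of what reusing existing features looks like in Keras, the snippet below loads the VGG16 convolutional base (the same model used in the example later in this article) and freezes it, so its weights are kept as-is and receive no gradient updates. The helper name `load_frozen_backbone` is hypothetical. `weights="imagenet"` downloads the pre-trained weights on first use, while `weights=None` builds the same architecture with random weights, which is handy for a quick offline check.

```python
from keras import applications


def load_frozen_backbone(weights="imagenet"):
    """Hypothetical helper: load the VGG16 convolutional base and freeze it.

    weights="imagenet" loads the pre-trained ImageNet weights (downloaded on
    first use); weights=None builds the same architecture with random weights.
    """
    base = applications.VGG16(
        include_top=False,          # drop the ImageNet classification layers
        weights=weights,
        input_shape=(224, 224, 3),
    )
    base.trainable = False          # keep the learned features fixed
    return base
```

After `base = load_frozen_backbone()`, `len(base.trainable_weights)` should be zero, while `base.count_params()` still counts every (now frozen) parameter.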

Why Does Transfer Learning Benefit Researchers and Practitioners?

Reduced Training Time

Training deep neural networks from scratch is computationally demanding and time-consuming. Transfer learning addresses this by starting the new task from a pre-trained model. This can reduce training time drastically, making it possible to develop and deploy machine learning solutions faster.
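To make the savings concrete, the back-of-the-envelope calculation below counts parameters for the model built in the chest X-ray example later in this article: the frozen VGG16 convolutional base versus the small trainable head. It is a sketch using the standard size formulas for convolution and dense layers; the base total should match what Keras reports for VGG16 without its top layers. Frozen layers still run a forward pass on every step, so the saving comes from skipping their gradient computation and weight updates, not from all of the compute.

```python
def conv_params(c_in, c_out, k=3):
    """Weights of a k x k convolution: k*k*c_in per filter, plus one bias per filter."""
    return k * k * c_in * c_out + c_out


def dense_params(n_in, n_out):
    """Weights of a fully connected layer: one per input/output pair, plus biases."""
    return n_in * n_out + n_out


# (input channels, output channels) of the 13 conv layers in VGG16's base
VGG16_CONVS = [
    (3, 64), (64, 64),                       # block 1
    (64, 128), (128, 128),                   # block 2
    (128, 256), (256, 256), (256, 256),      # block 3
    (256, 512), (512, 512), (512, 512),      # block 4
    (512, 512), (512, 512), (512, 512),      # block 5
]
base_params = sum(conv_params(c_in, c_out) for c_in, c_out in VGG16_CONVS)

# Trainable head: BatchNorm(512) -> Dense(256) -> BatchNorm(256) -> Dense(1).
# BatchNorm trains 2 values per channel (gamma, beta); its moving statistics are not trained.
# GlobalAveragePooling2D and Dropout have no parameters.
head_trainable = 2 * 512 + dense_params(512, 256) + 2 * 256 + dense_params(256, 1)

share = head_trainable / (base_params + head_trainable)
print(f"frozen base:    {base_params:,}")     # 14,714,688
print(f"trainable head: {head_trainable:,}")  # 133,121
print(f"share trained:  {share:.2%}")         # 0.90%
```

In other words, under one percent of the weights are updated during the first training stage.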

Improved Performance under Limited Data

Transfer learning is especially valuable when working with small datasets. Because pre-trained models have already learned from large and diverse datasets, they can apply that knowledge to adapt to new tasks from a small number of examples. This often results in better performance than training a model from scratch, especially when data is scarce.

Accessibility

Transfer learning makes advanced models accessible to a wider audience. Researchers and practitioners can leverage state-of-the-art architectures without heavy computational investment by using pre-trained models available through libraries such as TensorFlow and PyTorch.

Enhanced Accuracy

Fine-tuning a model that was first pre-trained on a general task lets researchers build on rich feature representations learned from large datasets. This usually produces higher accuracy and robustness on the target task, making the models more effective and practical in operation.

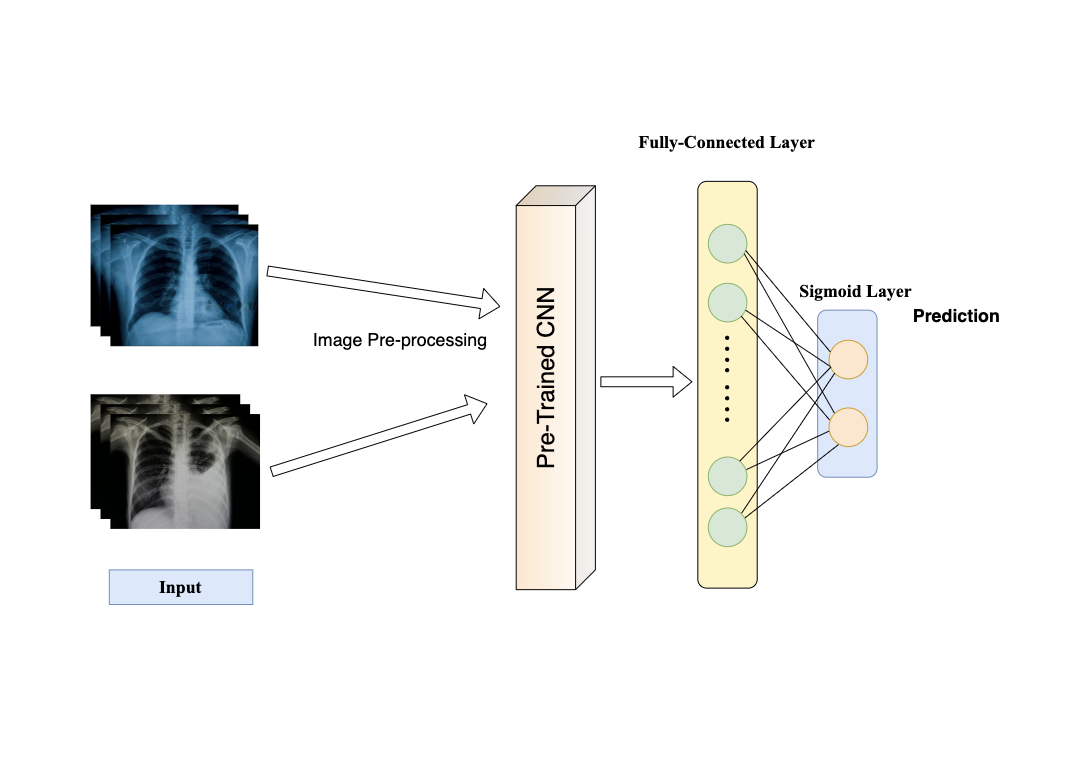

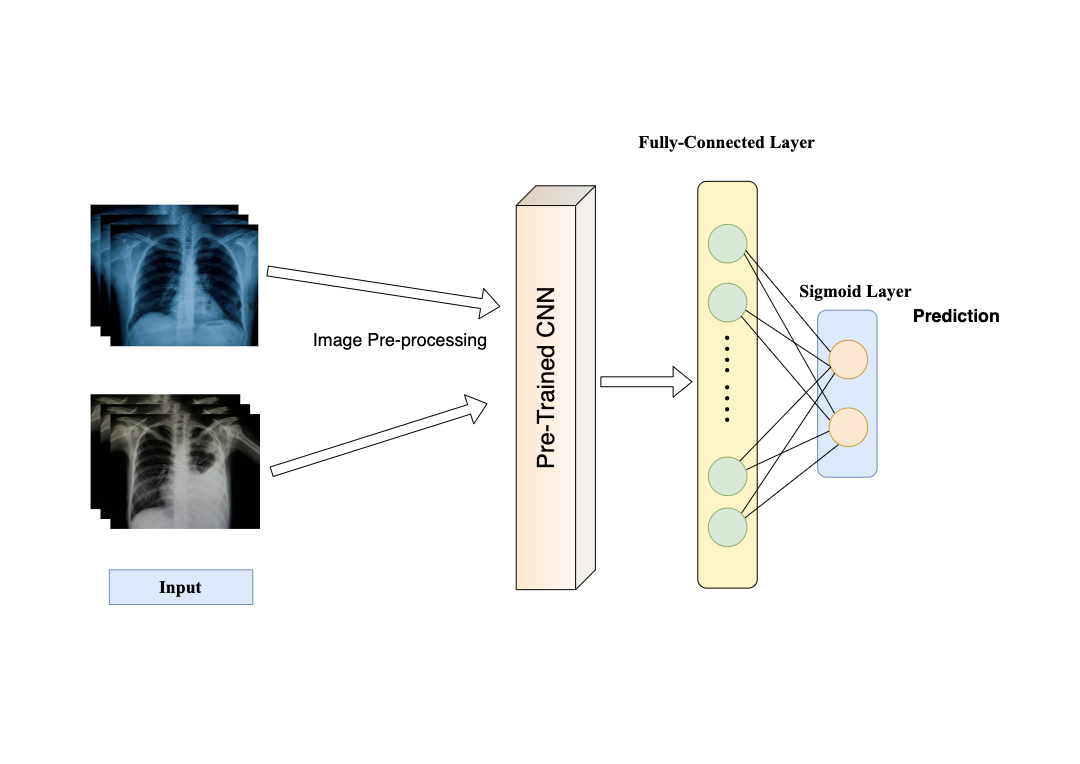

Practical Example: Chest X-ray Classification

To illustrate the power of transfer learning, let's consider a practical example: chest X-ray image classification. We use VGG16, a well-known convolutional neural network (CNN) pre-trained on ImageNet, as the base model and adapt it to classify X-ray images into two categories: Normal and Pneumonia. While VGG16 is effective, you can also experiment with more recent architectures such as EfficientNet or DenseNet, which may improve performance.

Step-by-Step Implementation

Data Preparation: We start by loading and augmenting the X-ray dataset with `ImageDataGenerator`. Augmentation is applied only to the training images; validation images are only rescaled, so validation scores reflect unmodified data.

```python
from keras.preprocessing.image import ImageDataGenerator

# train_path, val_path and batch_size are assumed to be defined beforehand

# Training images: rescale to [0, 1] and apply random augmentation
train_gen = ImageDataGenerator(
    rescale=1./255,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
    rotation_range=20,
    width_shift_range=0.1,
    height_shift_range=0.1
)
# Validation images: rescale only, no augmentation
val_gen = ImageDataGenerator(rescale=1./255)

train_dataset = train_gen.flow_from_directory(
    train_path,
    batch_size=batch_size,
    target_size=(224, 224),
    class_mode='binary'
)
val_dataset = val_gen.flow_from_directory(
    val_path,
    batch_size=batch_size,
    target_size=(224, 224),
    class_mode='binary'
)
```

Model Configuration: We load the VGG16 model without the top classification layers and freeze its base layers. We then add custom layers for our specific classification task.

```python
from keras.applications import VGG16
from keras import layers, models

# Load VGG16 pre-trained on ImageNet, without its top classification layers
base_model = VGG16(include_top=False, weights='imagenet', input_shape=(224, 224, 3))
# Freeze the convolutional base so only the new head is trained
base_model.trainable = False

# New classification head for Normal vs. Pneumonia
model = models.Sequential([
    base_model,
    layers.GlobalAveragePooling2D(),       # 7x7x512 feature maps -> 512-dim vector
    layers.BatchNormalization(),
    layers.Dense(256, activation='relu'),
    layers.Dropout(0.2),
    layers.BatchNormalization(),
    layers.Dense(1, activation='sigmoid')  # probability of the class with label 1
])

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])
```

Training and Evaluation: We then train the model on our dataset, using callbacks for early stopping and learning rate adjustment.

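```python
from keras.callbacks import EarlyStopping, ReduceLROnPlateau

callbacks = [
    # Stop if val_accuracy has not improved for 8 epochs, then restore the best weights
    EarlyStopping(monitor='val_accuracy', patience=8, restore_best_weights=True),
    # Multiply the learning rate by 0.2 after 3 epochs without improvement
    ReduceLROnPlateau(monitor='val_accuracy', factor=0.2, patience=3)
]

history = model.fit(train_dataset, epochs=50, validation_data=val_dataset, callbacks=callbacks)
```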
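The training code monitors only validation accuracy. For a medical task like pneumonia detection it can also help to see how many Pneumonia cases are missed. The sketch below turns sigmoid outputs into predictions at a 0.5 threshold and derives a confusion matrix, sensitivity, and specificity. It assumes label 1 means Pneumonia, which is what `flow_from_directory` assigns if the class folders are named `NORMAL` and `PNEUMONIA` (classes are indexed in alphanumeric order); the helper names are hypothetical.

```python
import numpy as np


def confusion_counts(probs, labels, threshold=0.5):
    """Count TP/TN/FP/FN for a binary classifier (label 1 = positive class)."""
    preds = (np.asarray(probs).ravel() >= threshold).astype(int)
    labels = np.asarray(labels).ravel().astype(int)
    return {
        "tp": int(np.sum((preds == 1) & (labels == 1))),
        "tn": int(np.sum((preds == 0) & (labels == 0))),
        "fp": int(np.sum((preds == 1) & (labels == 0))),
        "fn": int(np.sum((preds == 0) & (labels == 1))),
    }


def summarize(counts):
    """Derive accuracy, sensitivity (recall) and specificity from the counts."""
    tp, tn, fp, fn = counts["tp"], counts["tn"], counts["fp"], counts["fn"]
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total if total else float("nan"),
        # Share of actual positives (e.g. Pneumonia) that were detected
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        # Share of actual negatives (e.g. Normal) that were not flagged
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
    }
```

With a Keras generator, create the evaluation iterator with `shuffle=False` so its `.classes` array lines up with the prediction order, then call `summarize(confusion_counts(model.predict(test_dataset), test_dataset.classes))`, where `test_dataset` is a hypothetical held-out split loaded the same way as `val_dataset`.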

Optimizing Transfer Learning

Fine-Tuning: After training the new classification head, fine-tune the model for the new task by unfreezing some of the pre-trained layers (typically the top ones) and continuing training, usually with a low learning rate.

Regularization: Use techniques such as dropout and batch normalization to reduce overfitting, which is a particular risk with the small datasets typically used for fine-tuning.
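To see how one of these regularizers behaves, the snippet below applies a Keras `Dropout(0.2)` layer, the same rate used in the example model, to a tensor of ones. During training it zeroes a random subset of activations and scales the survivors by 1 / (1 - 0.2) = 1.25 so the expected sum is unchanged; at inference it passes inputs through untouched. This is a small illustrative check, not part of the original pipeline.

```python
import numpy as np
from keras import layers

dropout = layers.Dropout(0.2)
x = np.ones((1, 1000), dtype="float32")

# Training mode: random units are dropped, the rest are scaled by 1 / (1 - rate)
train_out = np.asarray(dropout(x, training=True))
# Inference mode: the layer acts as an identity
infer_out = np.asarray(dropout(x, training=False))

print("distinct training values:", np.unique(train_out))
print("fraction dropped:", float(np.mean(train_out == 0)))  # close to 0.2, varies per run
print("inference unchanged:", bool(np.all(infer_out == x)))
```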

Data Augmentation: Expand the effective training set with random transformations (rotations, shifts, zooms, flips) to build a more robust model that generalizes better.
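The example above augments images with `ImageDataGenerator`. As an alternative sketch, recent Keras versions also provide augmentation as layers that are active only during training. The pipeline below roughly mirrors the generator settings used earlier: horizontal flips, rotations of up to about 20 degrees (`RandomRotation` takes a fraction of a full turn, hence 20/360), 10% shifts, and 20% zoom. It omits shear, and the helper name `build_augmentation` is hypothetical.

```python
import numpy as np
from keras import layers, models


def build_augmentation():
    """Hypothetical augmentation block built from Keras preprocessing layers."""
    return models.Sequential([
        layers.RandomFlip("horizontal"),
        layers.RandomRotation(20 / 360),     # fraction of a full turn: about +/-20 degrees
        layers.RandomTranslation(0.1, 0.1),  # up to 10% vertical and horizontal shift
        layers.RandomZoom(0.2),              # zoom in or out by up to 20%
    ], name="augmentation")


augment = build_augmentation()
batch = np.random.rand(2, 224, 224, 3).astype("float32")
augmented = augment(batch, training=True)   # random transforms applied
unchanged = augment(batch, training=False)  # identity at inference time
print(tuple(augmented.shape))
```

Placing such a block at the start of the classifier keeps augmentation on the same device as the model and switches it off automatically during evaluation.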

Learning Rate Scheduling: Adjust the learning rate during training to balance fast convergence against training stability.
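The `ReduceLROnPlateau` callback used in the training code is one such scheduler. As a simplified, pure-Python sketch of its core idea (ignoring its `min_delta` and `cooldown` options), the function below multiplies the learning rate by `factor` whenever the monitored validation score has not improved for `patience` epochs. The function name is hypothetical; the defaults mirror the article's callback settings (factor 0.2, patience 3) and an initial rate of 1e-3, the default for Keras's Adam optimizer.

```python
def plateau_schedule(val_scores, initial_lr=1e-3, factor=0.2, patience=3, min_lr=0.0):
    """Return the learning rate in effect after each epoch.

    Simplified reduce-on-plateau sketch for a metric where higher is better
    (e.g. val_accuracy).
    """
    lr = initial_lr
    best = float("-inf")
    wait = 0                      # epochs since the last improvement
    history = []
    for score in val_scores:
        if score > best:
            best, wait = score, 0
        else:
            wait += 1
            if wait >= patience:  # plateau detected: shrink the learning rate
                lr = max(lr * factor, min_lr)
                wait = 0
        history.append(lr)
    return history


scores = [0.80, 0.85, 0.85, 0.84, 0.83, 0.86]
print(plateau_schedule(scores))
```

Here the rate stays at 1e-3 until three epochs pass without beating 0.85, then drops to 2e-4 for the remaining epochs.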

Model Selection: Choose the pre-trained model best suited to your task, and experiment with various architectures (e.g., ResNet, MobileNet) to find the optimal one.
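One way to compare architectures is to keep the classification head fixed and swap only the backbone. The sketch below uses backbone classes from `keras.applications` and reuses the head from the chest X-ray example; the `BACKBONES` registry and `build_classifier` names are hypothetical. Each backbone documents its own expected input preprocessing (for example, `keras.applications.resnet50.preprocess_input`), so the `rescale=1./255` setting used earlier may need to change when switching models.

```python
from keras import applications, layers, models

# Hypothetical registry of ImageNet backbones available in keras.applications
BACKBONES = {
    "VGG16": applications.VGG16,
    "ResNet50": applications.ResNet50,
    "DenseNet121": applications.DenseNet121,
    "MobileNetV2": applications.MobileNetV2,
    "EfficientNetB0": applications.EfficientNetB0,
}


def build_classifier(name, weights="imagenet", input_shape=(224, 224, 3)):
    """Frozen backbone plus the same binary head as the VGG16 example."""
    base = BACKBONES[name](include_top=False, weights=weights, input_shape=input_shape)
    base.trainable = False  # train only the head at first
    model = models.Sequential([
        base,
        layers.GlobalAveragePooling2D(),
        layers.BatchNormalization(),
        layers.Dense(256, activation="relu"),
        layers.Dropout(0.2),
        layers.BatchNormalization(),
        layers.Dense(1, activation="sigmoid"),
    ])
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model
```

Each candidate can then be trained with the same generators and callbacks, keeping the backbone with the best validation score; a separate held-out test set gives a fairer final comparison than the validation set used for selection.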

Conclusion

Transfer learning has emerged as one of the most vital tools in modern machine learning and computer vision. By leveraging pre-existing knowledge, it allows researchers and practitioners to achieve superior results with less data and computational power. Mastering and applying transfer learning techniques unlocks new opportunities across various fields, driving innovation and expanding the potential applications of machine learning.


Written by

Sisir Dhakal


I am deeply passionate about Deep Learning, Computer Vision, and Artificial Intelligence. With hands-on experience in machine learning and computer vision projects, I am an enthusiastic computer engineer eager to explore and innovate. My proficiency in Python, Pandas, TensorFlow, and other related technologies underpins my technical capabilities. I am particularly interested in the interdisciplinary applications of computer vision and AI, and I am actively seeking opportunities to collaborate with fellow enthusiasts to drive impactful contributions in these fields.