Exploratory Programming: Refining a domain model by building a custom workbench to visualise it

Mike Hogan

I love domain modeling. Trying to capture the essential rules of a domain in a coherent model is a fun puzzle to solve. The only way I can do it is through iteration, and I have found that the best way for me to assess each iteration is through a visual medium.

Recently I found myself making a custom "workbench" in SvelteKit to help me think through the domain model for scheduling rules associated with all kinds of bookable services. You can find the workbench running here.

It was so effective and enjoyable that I then started to look around to see if other folks did this.

I found my way to Exploratory Programming. So I'm going to share how I used it recently, and see if it resonates with other people.

What is Exploratory Programming?

Exploratory programming is an approach where you write code not to build a final product, but to learn about and understand the problem space itself. It's a form of thinking through coding, where the act of implementation becomes a tool for discovery and refinement of ideas.

In the context of domain modeling, exploratory programming allows me to:

1. Quickly prototype different versions of my model

2. Interact with these prototypes in real-time

3. Discover edge cases and limitations I might not have initially considered

4. Iteratively refine my model based on hands-on experimentation

The Domain: Scheduling Rules

To illustrate, let's look at a complex domain I've been working on: a flexible scheduling system for various types of services. Here are two examples from my current domain model:

```typescript
// A simple service with fixed time slots
const mobileCarWash: Service = {
    id: "mobile-car-wash",
    name: "Mobile Car Wash",
    description: "We come to you",
    scheduleConfig: singleDayScheduling(
        timeslotSelection([
            timeslot(time24("09:00"), time24("11:00"), "Morning"),
            timeslot(time24("11:00"), time24("13:00"), "Midday"),
            timeslot(time24("13:00"), time24("15:00"), "Afternoon"),
            timeslot(time24("15:00"), time24("17:00"), "Late afternoon")
        ])
    )
}

// A more complex service with multi-day booking and variable length
const hotelRoom: Service = {
    id: "hotel-room",
    name: "Hotel Room",
    description: "Stay overnight",
    scheduleConfig: multiDayScheduling(
        variableLength(days(1), days(365)),
        startTimes(
            fixedTime(time24("15:00"), "Check-in",
                time24("11:00"), "Check-out")
        )
    )
}
```
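The builder functions used in these examples (`time24`, `timeslot`, `timeslotSelection`, `singleDayScheduling`) are not shown in this post. As a rough idea of what could sit behind them, here is a minimal sketch of the single-day case only. Apart from the `"single-day-scheduling"` tag, which appears in the workbench code later on, every field name and validation rule below is an assumption made for illustration, not the real types from the repository.

```typescript
// Hypothetical shapes: only the "single-day-scheduling" tag appears in the article.
interface Time24 { _type: "time24"; value: string }
interface Timeslot { _type: "timeslot"; from: Time24; to: Time24; description: string }
interface TimeslotSelection { _type: "timeslot-selection"; timeslots: Timeslot[] }
interface SingleDayScheduling { _type: "single-day-scheduling"; times: TimeslotSelection }
interface Service {
    id: string;
    name: string;
    description: string;
    scheduleConfig: SingleDayScheduling;
}

// Accepts zero-padded 24-hour times such as "09:00" and rejects anything else.
function time24(value: string): Time24 {
    if (!/^([01]\d|2[0-3]):[0-5]\d$/.test(value)) {
        throw new Error(`Invalid 24-hour time: ${value}`);
    }
    return { _type: "time24", value };
}

// Zero-padded "HH:MM" strings sort chronologically, so string comparison is enough.
function timeslot(from: Time24, to: Time24, description: string): Timeslot {
    if (from.value >= to.value) {
        throw new Error(`Timeslot "${description}" must end after it starts`);
    }
    return { _type: "timeslot", from, to, description };
}

function timeslotSelection(timeslots: Timeslot[]): TimeslotSelection {
    return { _type: "timeslot-selection", timeslots };
}

function singleDayScheduling(times: TimeslotSelection): SingleDayScheduling {
    return { _type: "single-day-scheduling", times };
}

// Usage: a cut-down version of the car wash example.
const demoService: Service = {
    id: "demo-car-wash",
    name: "Demo Car Wash",
    description: "Two slots only",
    scheduleConfig: singleDayScheduling(
        timeslotSelection([
            timeslot(time24("09:00"), time24("11:00"), "Morning"),
            timeslot(time24("11:00"), time24("13:00"), "Midday")
        ])
    )
};
```

Putting the validation inside the builders means a malformed configuration fails as soon as it is declared, which suits a workbench where configurations are edited constantly.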

These examples demonstrate the flexibility required in the model. But how do I ensure the model can handle all the complexities of real-world scheduling scenarios? This is where exploratory programming comes in.

Building an Exploratory Programming Workbench with Svelte

To facilitate exploration, I built a custom workbench using Svelte. This workbench allows me to rapidly prototype different service configurations and interact with them in real-time. Here's a glimpse of the main component:

```svelte
<script lang="ts">
    import { allConfigs } from "./types3";
    import SingleDaySchedule from "./SingleDaySchedule.svelte";
    import MultiDaySchedule from "./MultiDaySchedule.svelte";
    import CodePresenter from "$lib/ui/code-presenter/CodePresenter.svelte";

    let service = allConfigs[0].service;

    function onConfigChange(event: Event) {
        const target = event.target as HTMLSelectElement;
        const found = allConfigs.find(c => c.service.name === target.value);
        if (found) {
            service = found.service;
        }
    }

    // ... other utility functions
</script>

<div class="card bg-base-100 shadow-xl max-w-sm mx-auto">
    <div class="card-body p-4">
        <div class="form-control mb-4">
            <label class="label">
                <span class="label-text font-semibold">Select Service Type</span>
            </label>
            <select class="input-bordered input" on:change={onConfigChange}>
                {#each allConfigs as c}
                    <option value={c.service.name} selected={c.service === service}>{c.service.name}</option>
                {/each}
            </select>
        </div>
        {#key service.id}
            <CodePresenter
                    githubUrl="https://raw.githubusercontent.com/cozemble/breezbook/main/apps/playground/src/routes/uxs/various-services/demo/types3.ts"
                    codeBlockId={service.id}/>
        {/key}
    </div>
</div>

{#key service.id}
    {#if service.scheduleConfig._type === "single-day-scheduling"}
        <SingleDaySchedule dayConstraints={service.scheduleConfig.startDay ?? []}
                           duration={maybeDuration(service.scheduleConfig.times)}
                           times={flattenTimes(service.scheduleConfig.times)}/>
    {:else}
        <MultiDaySchedule startDayConstraints={service.scheduleConfig.startDay ?? []}
                          endDayConstraints={service.scheduleConfig.endDay ?? []}
                          length={service.scheduleConfig.length}
                          startTimes={service.scheduleConfig.startTimes}
                          endTimes={service.scheduleConfig.endTimes ?? null}/>
    {/if}
{/key}
```
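The component calls `maybeDuration` and `flattenTimes`, but their implementations are elided above. What follows is a guess at the kind of adapter they might be, not the real code: it assumes a hypothetical `Times` union with two variants, and shows how a tagged union plus an exhaustive `switch` lets the compiler flag any variant the UI has not caught up with yet.

```typescript
// Hypothetical union: the article does not show the real shape of `times`.
type Times =
    | { _type: "timeslot-selection"; timeslots: { from: string; to: string; label: string }[] }
    | { _type: "fixed-duration-start-times"; durationMinutes: number; startTimes: string[] };

// What a simple time picker needs, whatever the underlying variant.
interface DisplayTime { start: string; end: string | null; label: string }

// Returns the duration in minutes when the variant carries one, otherwise null.
function maybeDuration(times: Times): number | null {
    return times._type === "fixed-duration-start-times" ? times.durationMinutes : null;
}

// Flattens either variant into one list the UI can render.
function flattenTimes(times: Times): DisplayTime[] {
    switch (times._type) {
        case "timeslot-selection":
            return times.timeslots.map(t => ({ start: t.from, end: t.to, label: t.label }));
        case "fixed-duration-start-times":
            return times.startTimes.map(s => ({ start: s, end: null, label: s }));
        default: {
            // Adding a new variant to Times makes this assignment a compile error.
            const unhandled: never = times;
            throw new Error(`Unhandled times variant: ${JSON.stringify(unhandled)}`);
        }
    }
}

// Usage with one value of each variant.
const slotTimes: Times = {
    _type: "timeslot-selection",
    timeslots: [{ from: "09:00", to: "11:00", label: "Morning" }]
};
const startTimesOnly: Times = {
    _type: "fixed-duration-start-times",
    durationMinutes: 60,
    startTimes: ["09:00", "10:00"]
};
const flattenedSlots = flattenTimes(slotTimes);
const flattenedStarts = flattenTimes(startTimesOnly);
```

The `never` assignment is where a model change surfaces in the UI code: each new variant forces a decision about how to display it.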

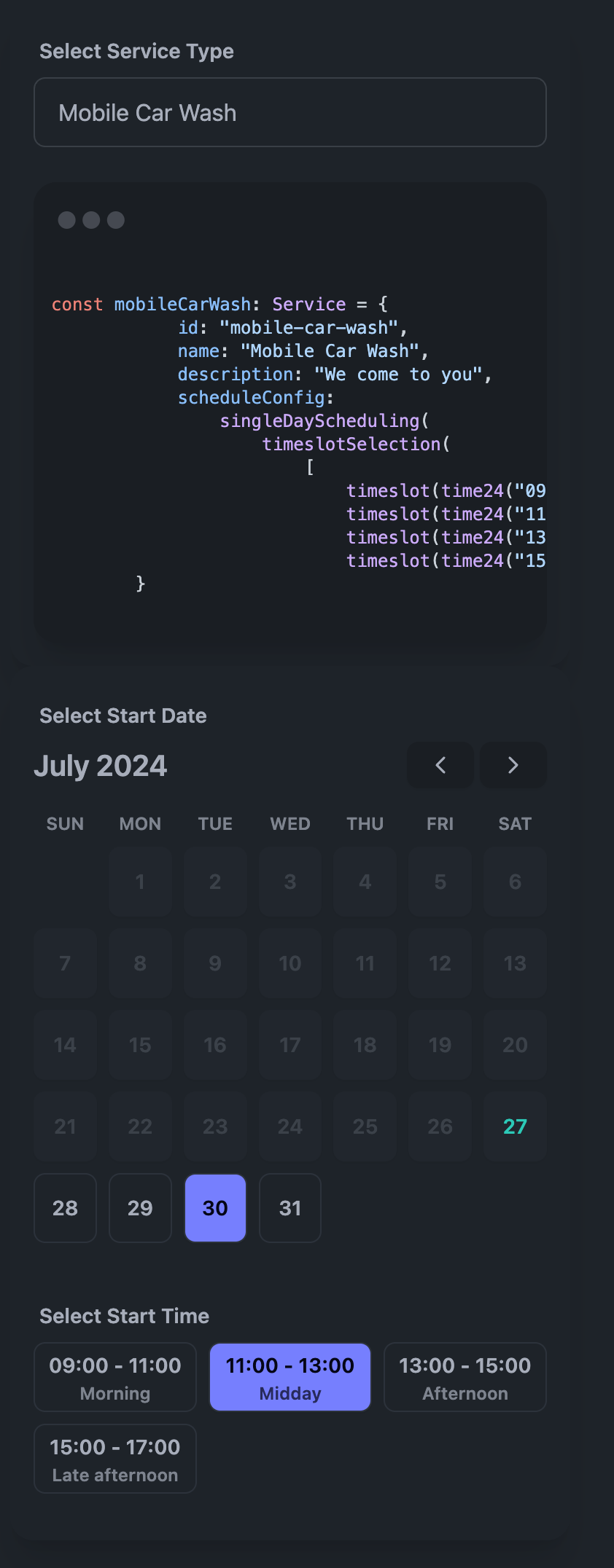

And this is what it looks like in operation:

This workbench allows me to play with various configurations of my domain model.

I register all my examples with the select box at the top of the screen. When I select an option, it shows me the code to remind me what I'm dealing with.

It then displays a simple date and time selector that exercises the model. In the above example, the code configures a time-slot selector, and so I get to play with a time-slot selector. Obviously I had to code the Svelte app to handle this configuration. That activity refined the model, because I was applying a "can this model support a user interface" force to it.

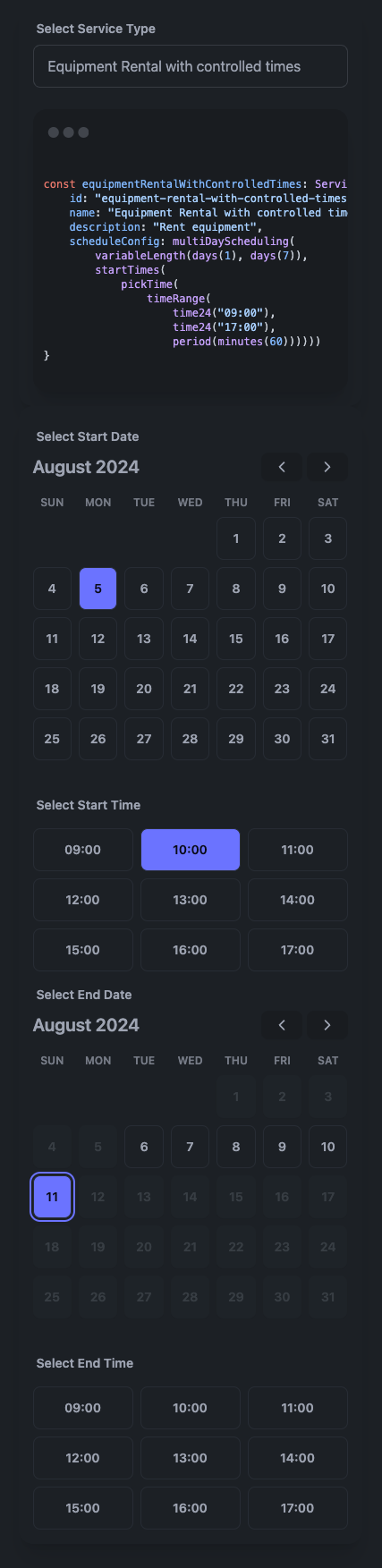

Here is another example:

This configuration is for a booking that varies between 1 and 7 days in length, and has hourly collect and return slots between 9am and 5pm.
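The configuration itself appears only in the screenshot, so it is not reproduced here. To make the two rules concrete, the sketch below generates the hourly pick times and checks a booking length. The function names are hypothetical, and it assumes that 17:00 is itself a valid slot and that length is counted as whole days between the start and end dates.

```typescript
// Hypothetical helper: hourly "HH:00" times from startHour to endHour inclusive.
function hourlyTimes(startHour: number, endHour: number): string[] {
    const times: string[] = [];
    for (let hour = startHour; hour <= endHour; hour++) {
        times.push((hour < 10 ? "0" : "") + hour + ":00");
    }
    return times;
}

// Hypothetical helper: is the span between two ISO dates within [minDays, maxDays]?
// Date-only ISO strings are parsed as UTC, so daylight saving does not skew the count.
function isAllowedLength(startDate: string, endDate: string, minDays: number, maxDays: number): boolean {
    const msPerDay = 24 * 60 * 60 * 1000;
    const days = Math.round((Date.parse(endDate) - Date.parse(startDate)) / msPerDay);
    return days >= minDays && days <= maxDays;
}

// Usage: collect and return slots between 9am and 5pm, bookings of 1 to 7 days.
const collectTimes = hourlyTimes(9, 17);
const weekIsAllowed = isAllowedLength("2024-06-01", "2024-06-08", 1, 7);
const eightDaysIsAllowed = isAllowedLength("2024-06-01", "2024-06-09", 1, 7);
```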

The tension between expressing the model cleanly in the configuration section and building a Svelte app to display that configuration adds two complementary forces to the design work: can I express the rules clearly, and can those rules support a user interface?

With this approach, I was able to:

1. Experiment Rapidly: Quickly create and modify service configurations.

2. Visualize the Model: See the actual configuration code alongside an interactive scheduling interface.

3. Test Edge Cases: Easily try out different scheduling scenarios to uncover limitations in the model.

4. Iterate Quickly: Make changes to the model and immediately see their effects.

The Exploratory Programming Process

With this workbench, my exploratory programming process looks like this:

1. Prototype: Implement an initial version of the scheduling rules model.

2. Explore: Create various service configurations and interact with them in the workbench.

3. Discover: Identify limitations, edge cases, or awkward implementations through hands-on testing.

4. Refine: Adjust the core model based on discoveries made during exploration.

5. Repeat: Continuously cycle through this process, each time gaining deeper insights into the domain.

Through this process, I went through four major iterations of the domain model. Between these major shifts, there were numerous minor tweaks, all driven by insights gained through exercising the model.

Balancing Code Quality: Domain Model vs. Workbench

An important aspect of this approach is understanding where to focus my efforts on code quality:

1. Domain Model: The quality of the domain model code must be high. This is the end goal of my exploration, and it needs to be robust, maintainable, and reflective of my deepened understanding of the problem space.

2. Workbench Code: The code for the exploratory workbench doesn't need to be of the same high quality. It's a means to an end, a tool for exploration rather than a production system. To speed up development of the workbench, I leveraged AI assistance, using Claude to generate much of the boilerplate and UI code.

This allowed me to focus my energy on what truly matters, crafting a high-quality domain model, while still benefiting from a functional and flexible exploration tool.

Lessons Learned from Exploratory Programming

1. Wait until exploratory programming becomes necessary: I did not pile into exploratory programming on day one. I worked on my domain model until it started to annoy me. Then I deliberately started again in exploratory fashion, to discover something better, given the constraints I was aware of.

2. Leverage AI for Ideation: Use LLMs to expand your exploration space and uncover edge cases. Create loads of sample cases, and use case-based modeling.

3. Interaction Reveals Truth: Hands-on testing often uncovers issues that aren't apparent from just reading code.

4. Visualize to Understand: Seeing the model in action alongside its code representation deepens understanding. It also keeps your domain model "honest", in that it has to be able to underpin a user interface.

5. Focus on Quality Where it Matters: Prioritize the quality of your domain model code over your exploration tools.

6. Embrace Change: Yes, it's a cliche, but be ready to significantly alter your model based on exploratory findings. I did that four times, and it can be deflating to have to "restart".

7. Choose Flexible Tools: Tools like Svelte that allow for rapid prototyping are invaluable for exploratory programming.
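One way to keep a growing pile of sample cases useful is to run a cheap sanity check over all of them, so that odd cases (whether hand-written or LLM-suggested) stand out before they reach the UI. The sketch below is an illustration only: `SampleCase`, `Slot` and `findProblems` are hypothetical names, and the overlap rule is an assumed invariant, not one stated in this post.

```typescript
// Hypothetical shapes for a registry of sample cases.
interface Slot { from: string; to: string; label: string }
interface SampleCase { name: string; slots: Slot[] }

// Reports slots that end before they start, or that overlap the next slot.
// Relies on zero-padded "HH:MM" strings sorting chronologically.
function findProblems(sample: SampleCase): string[] {
    const problems: string[] = [];
    const sorted = sample.slots.slice().sort((a, b) => a.from.localeCompare(b.from));
    sorted.forEach((slot, i) => {
        if (slot.from >= slot.to) {
            problems.push(`${slot.label}: must end after it starts`);
        }
        const next = sorted[i + 1];
        if (next && next.from < slot.to) {
            problems.push(`${slot.label} overlaps ${next.label}`);
        }
    });
    return problems;
}

// Usage: one clean case and one with an overlap.
const sampleCases: SampleCase[] = [
    {
        name: "car wash",
        slots: [
            { from: "09:00", to: "11:00", label: "Morning" },
            { from: "11:00", to: "13:00", label: "Midday" }
        ]
    },
    {
        name: "overlapping",
        slots: [
            { from: "09:00", to: "11:00", label: "Morning" },
            { from: "10:30", to: "13:00", label: "Midday" }
        ]
    }
];
const report = sampleCases.map(c => ({ name: c.name, problems: findProblems(c) }));
```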

Is it worth it?

Building this SvelteKit mini-app took time. Was it worth it? For me, the workbench kept my enthusiasm high throughout the exploration, especially when faced with four major "start again" iterations. It's very rewarding to "see" the model in action.

Each time I had to substantially revise the model, the workbench provided a tangible way to interact with and validate my new ideas quickly. The workbench code survived these major model revisions reasonably well, because it's only calendars and time slots. So, the immediate feedback loop kept me engaged and motivated, gamifying the experience of building the domain model.

I have no metrics on the ROI of this activity, but I am happy with the outcome, and am convinced I wouldn't have got here without the tactile feedback of the workbench.

Next steps

I know that pricing rules are next. I have a pricing domain model already, and things about it are starting to annoy me. So I'm going to start again and, using what I already know about the pricing domain, do a cleanroom implementation in exploratory fashion.

There may be other areas that lend themselves to this approach. And this ScheduleConfig that I am now happy with will probably become deficient in time, as I discover more areas of the domain I need to model.

But, in any case, this idea of "custom canvases" to explore models is definitely committed to my toolkit.

Other approaches

I'm also tracking what the folks over at Moldable Development are doing. Some key statements from their site:

"You build a tool that shows your current problem in a way that makes it easy to grasp. It's like data science, but for software."

"Software is shapeless. We, humans, need a shape to reason about anything. Tools provide the shape of software."

I think they are on to something.

Notebook-based programming, as implemented by Jupyter and others, is also in the same tool space.

I also feel this is related to "Strategic Programming", which I first came across in A Philosophy of Software Design by John Ousterhout and summarised here.

Written by

Mike Hogan

Building an open source booking and appointments software stack - https://github.com/cozemble/breezbook