A Revolutionary Approach: Leveraging Generative AI In Exploratory Testing

Sam Atinkson


Introduction

Generative AI, epitomized by OpenAI's ChatGPT, is ushering in a new era of convenience and quickly becoming part of our daily lives. This advanced language model is a versatile assistant, adept at solving complex problems and generating content for a variety of testing-related tasks. As generative AI becomes increasingly ubiquitous, it is crucial to understand how to use it well and to acknowledge its inherent limitations.

In this blog, we look at how to use ChatGPT for exploratory testing and how to improve the quality of what it produces.

Understanding Exploratory Testing

Exploratory testing embodies a dynamic and adaptive approach to uncovering the true nature of a software product through hands-on exploration. Like an experienced explorer venturing into uncharted territory, an exploratory tester navigates the software landscape, gathering insights from various sources such as UI design, language usage, logs, and underlying infrastructure.

In contrast to traditional testing methods that rely on pre-scripted scenarios and predetermined expectations, exploratory testing prioritizes contextual understanding and real-time assessment. It acknowledges that software quality is multidimensional and can vary depending on individual perspectives and situational context.

Enhancing Software Testing with Generative AI

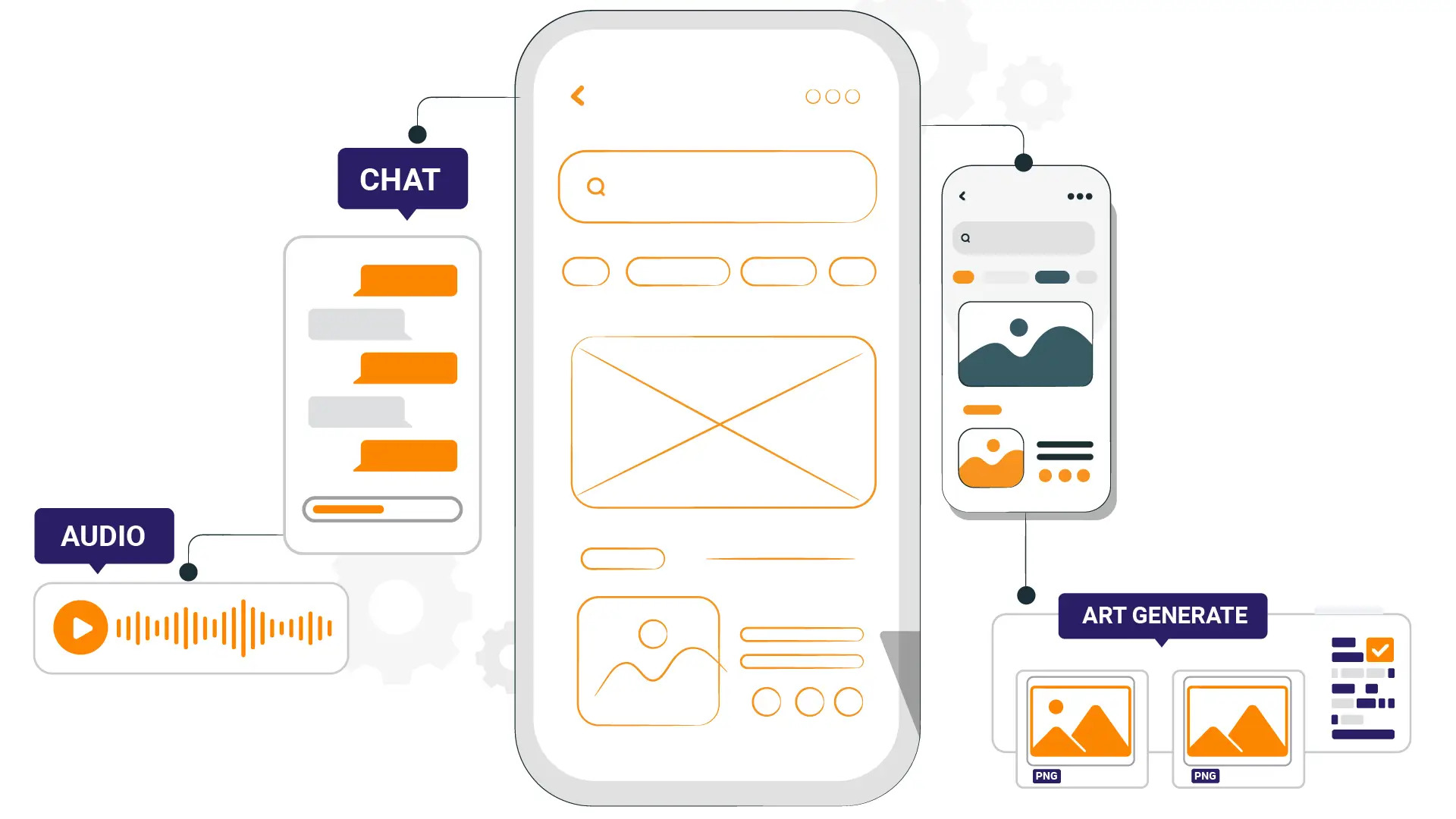

Generative AI offers new ways to enhance software testing, and different kinds of models produce different kinds of output. Text-to-text models take natural language input and produce text, while text-to-task models carry out specific tasks based on textual instructions.

Here's a framework to effectively integrate generative AI into software testing practices:

● Automatic Test Case Generation: Generative AI can use machine learning techniques to analyze software code and behavior and automatically generate test cases. By covering both positive and negative scenarios, this approach can broaden test coverage and improve efficiency while reducing manual effort, which is particularly beneficial for complex applications.

Example: Generative AI can analyze an e-commerce website's code to generate test cases for user interactions like product searches, cart operations, and payment processing, covering both successful and failed scenarios.

● Defect Detection Using Predictive Analytics: By drawing on historical data and code analysis, AI models can help predict potential defects early in the development cycle. Identifying high-risk areas lets testers focus on critical issues preemptively, improving software quality and reliability.

Example: By analyzing software code history, generative AI identifies modules prone to defects, allowing testers to prioritize thorough testing and early issue resolution.

● Test Data Generation: Generative AI can produce diverse test data for positive and negative scenarios, supporting meaningful and reliable tests. Testing under varied data conditions is crucial for validating data-centric applications, and synthetic data helps protect privacy because real user records are not needed.

Example: For healthcare applications, generative AI creates synthetic patient data with diverse medical conditions and treatment histories, facilitating rigorous testing of data handling capabilities.

● Test Automation: Using generative AI to automate testing tasks frees testers to focus on complex activities such as test design and analysis. Because it can draft test scripts with little manual coding, it makes test automation accessible across different levels of technical skill.

Example: Testers can describe test scenarios in natural language, prompting generative AI to generate corresponding test scripts automatically, streamlining the automation process.

Rigorous manual verification of generative AI outputs is essential to ensure accuracy and quality, mitigating potential errors caused by misinterpreted inputs. Clear prompts and validation at each stage are critical to achieving precise testing outcomes.
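One way to put that verification into practice is to ask the model for structured output and check it mechanically before anyone reviews the content. The sketch below assumes the model was prompted to return a JSON array of test cases with `title`, `type` (`positive` or `negative`), `steps`, and `expected` fields; that schema and the `validate_test_cases` function are hypothetical, not part of any tool. It only catches structural problems such as missing fields, empty steps, or duplicate titles, so a tester still has to judge whether each case makes sense.

```python
import json

# Hypothetical schema requested in the prompt; not a standard format.
REQUIRED_FIELDS = {"title": str, "type": str, "steps": list, "expected": str}
ALLOWED_TYPES = {"positive", "negative"}


def validate_test_cases(raw_response: str) -> tuple[list[dict], list[str]]:
    """Split a model's JSON response into structurally valid cases and error messages."""
    try:
        cases = json.loads(raw_response)
    except json.JSONDecodeError as exc:
        return [], [f"response is not valid JSON: {exc.msg}"]
    if not isinstance(cases, list):
        return [], ["expected a JSON array of test cases"]

    valid, errors = [], []
    seen_titles = set()
    for index, case in enumerate(cases):
        if not isinstance(case, dict):
            errors.append(f"case {index}: not a JSON object")
            continue
        # Check that every required field is present with the expected type.
        problems = [
            f"missing or wrong type for '{field}'"
            for field, expected_type in REQUIRED_FIELDS.items()
            if not isinstance(case.get(field), expected_type)
        ]
        if isinstance(case.get("type"), str) and case["type"] not in ALLOWED_TYPES:
            problems.append("type must be 'positive' or 'negative'")
        if isinstance(case.get("steps"), list) and not case["steps"]:
            problems.append("steps must not be empty")
        # Flag repeated titles, ignoring case and surrounding whitespace.
        title = str(case.get("title", "")).strip().lower()
        if title in seen_titles:
            problems.append("duplicate title")
        seen_titles.add(title)

        if problems:
            errors.append(f"case {index}: " + "; ".join(problems))
        else:
            valid.append(case)
    return valid, errors
```

Cases that pass go on to human review; the error list can be fed back to the model as a follow-up prompt asking it to correct only those entries.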

Effective Use of Generative AI for Exploratory Testing

Generative AI serves as a valuable complement to human expertise in exploratory testing, offering insights and inspiration rather than replacing the role of human testers. It's crucial to recognize that exploratory testing hinges on human evaluation, learning, and iterative exploration to derive meaningful insights.

Unlike traditional automation tools, generative AI represents a significant evolution. It draws on knowledge learned from vast amounts of text, much of it from the internet, and can generate new content and insights in textual formats such as test cases, strategies, and bug reports.

Critical considerations for integrating generative AI into exploratory testing include:

- Understanding AI Capabilities: Generative AI possesses extensive knowledge and can generate diverse textual outputs, offering a fresh perspective and aiding in exploratory testing tasks.

- AI as a Collaborative Tool: Rather than viewing AI as a replacement, exploratory testers can embrace it as a collaborative partner. Testers can assess AI's contributions objectively by testing its responses against their expert insights.

- Practical Evaluation: Testers should engage with AI on specific testing aspects, such as evaluating a system like Google's homepage. This involves comparing AI-generated responses with human-generated insights to validate and enhance testing approaches.
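A lightweight way to run that practical evaluation is to list the AI's test ideas next to the testers' own and see where they overlap. The sketch below is illustrative only: `normalize`, `compare_ideas`, and the sample ideas for a search engine homepage are hypothetical, and matching is exact after normalizing case, punctuation, and whitespace.

```python
import re


def normalize(idea: str) -> str:
    """Lowercase, replace punctuation with spaces, and collapse whitespace."""
    without_punctuation = re.sub(r"[^\w\s]", " ", idea.lower())
    return " ".join(without_punctuation.split())


def compare_ideas(ai_ideas: list[str], human_ideas: list[str]) -> dict[str, list[str]]:
    """Group normalized test ideas into shared, AI-only, and human-only lists."""
    ai = {normalize(idea) for idea in ai_ideas}
    human = {normalize(idea) for idea in human_ideas}
    return {
        "shared": sorted(ai & human),
        "ai_only": sorted(ai - human),
        "human_only": sorted(human - ai),
    }


# Hypothetical ideas for exploring a search engine homepage.
ai_ideas = ["Search with an empty query", "Check keyboard navigation"]
human_ideas = ["search with an empty query.", "Try very long queries"]
result = compare_ideas(ai_ideas, human_ideas)
# result["shared"] == ["search with an empty query"]
```

Because matching is literal, two ideas with the same intent but different wording land in the "only" buckets, so the output is a starting point for discussion rather than a verdict.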

By leveraging generative AI as a supportive tool in exploratory testing, testers can enhance efficiency, gain new perspectives, and improve overall testing outcomes while maintaining the critical role of human judgment and expertise.

Step-by-Step Guide to Harnessing Generative AI for Effective Exploratory Testing

Generative AI offers significant advantages when integrated into exploratory testing processes, enhancing creativity and efficiency in several key areas:

1. Generating Exploratory Test Ideas: Generative AI tools such as ChatGPT can stimulate creativity by suggesting fresh test ideas. Testers can brainstorm new test scenarios by providing prompts related to specific user stories or functionalities.

Example Prompt: Given a user story about importing attachments via a CSV file, generate exploratory test ideas focusing on functionality and usability.

2. Creating Exploratory Test Charters: Test charters guide exploratory testing sessions, outlining objectives and areas of focus. Generative AI can assist in crafting these charters by suggesting test scenarios tailored to specific quality attributes like security or usability.

Example Prompt: Create test charters related to security considerations for a feature that involves importing attachments using Xray Test Case Importer.

3. Summarizing Testing Sessions: After conducting exploratory testing, summarizing findings is crucial. Generative AI can help extract and consolidate key observations, identified defects, and overall quality assessments from testing notes.

Example Prompt: Based on testing notes for a banking app exploration session, summarize the session's results and assess the confidence in its quality aspects.

4. Enumerating Risks: Identifying and prioritizing the risks in user stories or requirements is essential. Generative AI can help brainstorm and analyze potential risks based on the details you provide.

Example Prompt: Identify risks associated with a requirement involving importing attachments via CSV files using Xray Test Case Importer.

5. Exploring Scenario Variation: Generative AI can generate variations of test scenarios to explore different paths and behaviors within an application. This capability assists testers in comprehensively assessing application functionality under various conditions.

Example Prompt: Explore performance testing scenarios for a mobile banking app to evaluate responsiveness under heavy user load.

6. Generating Sample Data: Automating sample data generation for testing purposes is another area where generative AI proves beneficial. It can create realistic datasets or populate user profiles with simulated transactions, saving time and effort.

Example Prompt: Generate sample financial transactions (deposits, withdrawals, transfers) to test a banking application's functionality.
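To make prompts like these repeatable across sessions, a team might keep them as templates and fill in the specifics each time. The sketch below uses Python's standard `string.Template`; the template wording paraphrases the example prompts above, and the activity keys, placeholder names (`story`, `focus`, `requirement`, and so on), and `build_prompt` are hypothetical choices. `substitute` raises `KeyError` when a placeholder is left unfilled, which catches incomplete prompts before they are sent.

```python
from string import Template

# Hypothetical templates paraphrasing the example prompts; adjust wording to your context.
PROMPT_TEMPLATES = {
    "test_ideas": Template(
        "Given this user story:\n$story\n"
        "Generate exploratory test ideas focusing on $focus."
    ),
    "charters": Template(
        "Create exploratory test charters related to $quality_attribute "
        "for this feature:\n$feature"
    ),
    "session_summary": Template(
        "Based on these testing notes from an exploration session of $product:\n$notes\n"
        "Summarize the session's results and assess confidence in its quality aspects."
    ),
    "risks": Template("Identify risks associated with this requirement:\n$requirement"),
    "scenario_variation": Template(
        "Suggest $test_type testing scenarios for $product to evaluate $goal."
    ),
    "sample_data": Template(
        "Generate $count sample records of $record_type as a JSON array "
        "to test $product."
    ),
}


def build_prompt(activity: str, **context: object) -> str:
    """Fill the template for one activity; raises KeyError if a placeholder is missing."""
    return PROMPT_TEMPLATES[activity].substitute(**context)
```

For example, `build_prompt("risks", requirement="Import attachments via CSV files using Xray Test Case Importer.")` produces the risk prompt from step 4 with the requirement on its own line.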

Integrating generative AI into exploratory testing enhances productivity and encourages innovative testing approaches. It complements human expertise by providing new perspectives and insights, thereby improving the overall effectiveness of software testing efforts.
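For step 6, generated sample data deserves the same scrutiny as any other AI output before it reaches the application under test. The sketch below assumes transactions arrive as dictionaries with a `type` (`deposit`, `withdrawal`, or `transfer`) and a string `amount`, and that the data was meant to be valid; the record shape and `check_transactions` are hypothetical. It replays the records against an opening balance using `Decimal` to avoid binary floating-point rounding, and reports unknown types, malformed amounts, and overdrafts.

```python
from decimal import Decimal, InvalidOperation

# Hypothetical record shape: {"type": "deposit" | "withdrawal" | "transfer", "amount": "12.50"}
KNOWN_TYPES = {"deposit", "withdrawal", "transfer"}


def check_transactions(transactions: list[dict], opening_balance: str) -> tuple[Decimal, list[str]]:
    """Replay transactions in order; return the final balance and any problems found."""
    balance = Decimal(opening_balance)
    problems = []
    for index, tx in enumerate(transactions):
        kind = tx.get("type")
        if not isinstance(kind, str) or kind not in KNOWN_TYPES:
            problems.append(f"transaction {index}: unknown type {kind!r}")
            continue
        try:
            amount = Decimal(str(tx.get("amount")))
        except InvalidOperation:
            problems.append(f"transaction {index}: amount {tx.get('amount')!r} is not a number")
            continue
        # Check finiteness first: ordering comparisons on a Decimal NaN raise InvalidOperation.
        if not amount.is_finite() or amount <= 0:
            problems.append(f"transaction {index}: amount must be a positive, finite number")
            continue
        if amount.as_tuple().exponent < -2:
            problems.append(f"transaction {index}: amount has more than two decimal places")
            continue
        # Deposits add to the balance; withdrawals and transfers must not overdraw it.
        if kind == "deposit":
            balance += amount
        elif amount > balance:
            problems.append(f"transaction {index}: {kind} of {amount} overdraws balance {balance}")
        else:
            balance -= amount
    return balance, problems
```

Deliberately invalid records for negative testing are better generated and labeled separately, so a check like this does not report them as mistakes.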

Advantages of Generative AI for Exploratory Testing

Generative AI offers significant benefits to exploratory testing, enhancing test coverage, bug detection, and software development efficiency:

- Enhanced Test Coverage: Generative AI can quickly draft test cases across many scenarios and inputs, broadening coverage with less manual setup.

- Improved Bug Detection: By analyzing large datasets and code patterns, generative AI can help surface complex software issues early, including bugs, vulnerabilities, and performance bottlenecks.

- Accelerated Development: Automating tasks like test case generation and design prototyping frees developers to focus on innovation, speeding up the development lifecycle.

Tech giants like Facebook and Google increasingly utilize generative AI capabilities to optimize testing processes and improve software quality.

Challenges of Generative AI in Exploratory Testing

- Contextual Understanding: Generative AI tools like ChatGPT may struggle to grasp the full context, purpose, and intended audience of a software product.

- Time-Consuming Interactions: Although ChatGPT is meant to be easy to use, refining prompts and reviewing its responses during exploratory testing can be time-consuming.

- Training Dataset Dependency: A generative AI model's knowledge depends heavily on its training dataset and parameters.

- Limitations in Contextual Depth: Input size restrictions limit how much context the AI can receive, and therefore how contextually rich its outputs can be.

- Concerns: Significant concerns include hallucinated facts, reliance on non-verifiable sources, and potential bias in generated content.
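A common way to work within input size limits is to split long testing notes into pieces, summarize each piece, and then ask for a summary of the summaries. The sketch below shows only the splitting step. It assumes word count as a rough stand-in for the model's limit, which is really measured in tokens by a model-specific tokenizer, so leave headroom; `chunk_notes` is a hypothetical helper.

```python
def chunk_notes(notes: str, max_words: int) -> list[str]:
    """Greedily pack blank-line-separated paragraphs into chunks of at most max_words words."""
    if max_words < 1:
        raise ValueError("max_words must be at least 1")
    chunks: list[str] = []
    current: list[str] = []
    current_len = 0
    for paragraph in (p.strip() for p in notes.split("\n\n")):
        if not paragraph:
            continue
        words = paragraph.split()
        # A paragraph longer than the budget is cut into fixed-size word windows.
        # Whitespace inside each paragraph is collapsed to single spaces.
        pieces = [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]
        for piece in pieces:
            piece_len = len(piece.split())
            # Start a new chunk when this piece would push the current one over budget.
            if current and current_len + piece_len > max_words:
                chunks.append("\n\n".join(current))
                current, current_len = [], 0
            current.append(piece)
            current_len += piece_len
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

Keeping paragraph boundaries where possible means a single observation or bug note is less likely to be split across two requests.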

Future Directions and Opportunities for Generative AI

Generative AI is poised to transform automated software testing, offering substantial efficiency and test quality benefits. Key future trends include:

- Automated Test Case Generation: Generative AI will automate the creation of tailored test cases, ensuring comprehensive coverage and identification of potential failures.

- Enhanced Exploratory Testing: AI-driven automation will facilitate exploratory testing, uncovering unexpected bugs through intuitive software exploration.

- Visual Testing Automation: Generative AI will streamline visual testing, ensuring the software meets design requirements and appears as intended.

In addition, generative AI will play a crucial role in:

- Test Case Prioritization: Identifying critical test cases likely to uncover bugs and optimizing testing efforts.

- Automated Test Maintenance: Ensuring test cases remain current and effective as software evolves, enhancing overall testing efficiency.
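Until such AI-driven prioritization is in place, a simple heuristic built on the same historical data can serve as a baseline to compare against. The sketch below is not a generative AI technique: it ranks tests by past failure rate plus a fixed bonus for covering recently changed code. The `CaseHistory` fields, the `change_weight` of 0.5, and treating never-run tests as highest priority are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class CaseHistory:
    name: str
    runs: int
    failures: int
    touches_changed_module: bool  # hypothetical flag, e.g. derived from the current change set


def priority(record: CaseHistory, change_weight: float = 0.5) -> float:
    """Historical failure rate plus a bonus when the test covers recently changed code."""
    # Tests with no run history get the maximum failure rate so they run early.
    failure_rate = record.failures / record.runs if record.runs else 1.0
    return failure_rate + (change_weight if record.touches_changed_module else 0.0)


def prioritize(records: list[CaseHistory]) -> list[CaseHistory]:
    """Highest priority first; ties are broken alphabetically by name for a stable order."""
    return sorted(records, key=lambda r: (-priority(r), r.name))
```

A heuristic like this is easy to audit, which makes it a useful yardstick when judging whether an AI-suggested ordering actually finds failures sooner.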

These advancements promise to revolutionize automated software testing, enhancing productivity and software quality.

HeadSpin's AI-driven Approach to Exploratory Testing

HeadSpin excels in enhancing enterprise testing through its innovative exploratory testing capabilities. Here's how HeadSpin adds value to businesses:

- Real-world Evaluation: HeadSpin employs real devices, networks, and users to conduct comprehensive testing, uncovering critical issues often missed in simulated environments.

- Comprehensive Testing: By assessing app performance across diverse devices, networks, and locations, HeadSpin identifies performance bottlenecks and network issues, ensuring robust app performance.

- Accelerated Time-to-market: HeadSpin's exploratory testing strategy enables swift identification and resolution of significant issues in real-time, facilitating quicker app launches and enhancing user engagement.

- Enhanced User Experience: Through real-time functionality and user interface testing, HeadSpin improves app usability, ensuring superior user experiences that foster retention.

- Cost-effective Solutions: Leveraging real-world testing resources, HeadSpin provides cost-efficient testing solutions, optimizing investment returns while delivering thorough testing coverage.

Key Considerations

Exploratory software testers should explore how generative AI can raise their productivity, value, and job security; otherwise, others will. Managers overseeing exploratory testing teams can use tools like GPT to evaluate team performance more quickly.

HeadSpin's advanced data science-driven platform equips testers with profound insights into app performance, real-time issue detection, and optimization strategies, ensuring superior user experiences. Integrating exploratory testing with HeadSpin enhances app quality, delivers seamless user interactions, and instills confidence in app deployments.

Embrace HeadSpin's omnichannel digital experience testing platform for guaranteed app development and deployment success.

Originally published: https://www.headspin.io/blog/how-to-use-generative-ai-in-exploratory-testing


Written by

Sam Atinkson


Sam Atkinson is a results-driven SEO Executive with 3 years of experience in optimizing digital visibility and driving organic growth. Skilled in developing and executing strategic SEO initiatives, Sam excels in keyword research, technical audits, and competitor analysis to elevate online presence and improve search engine rankings. With a keen eye for detail and a passion for delivering measurable results, Sam collaborates cross-functionally to align SEO efforts with business objectives and enhance website performance. Sam is committed to staying ahead of industry trends.