Day 26/40 Days of K8s: Network Policies in Kubernetes !! ☸️

Gopi Vivek Manne


✴ What is a Network Policy?

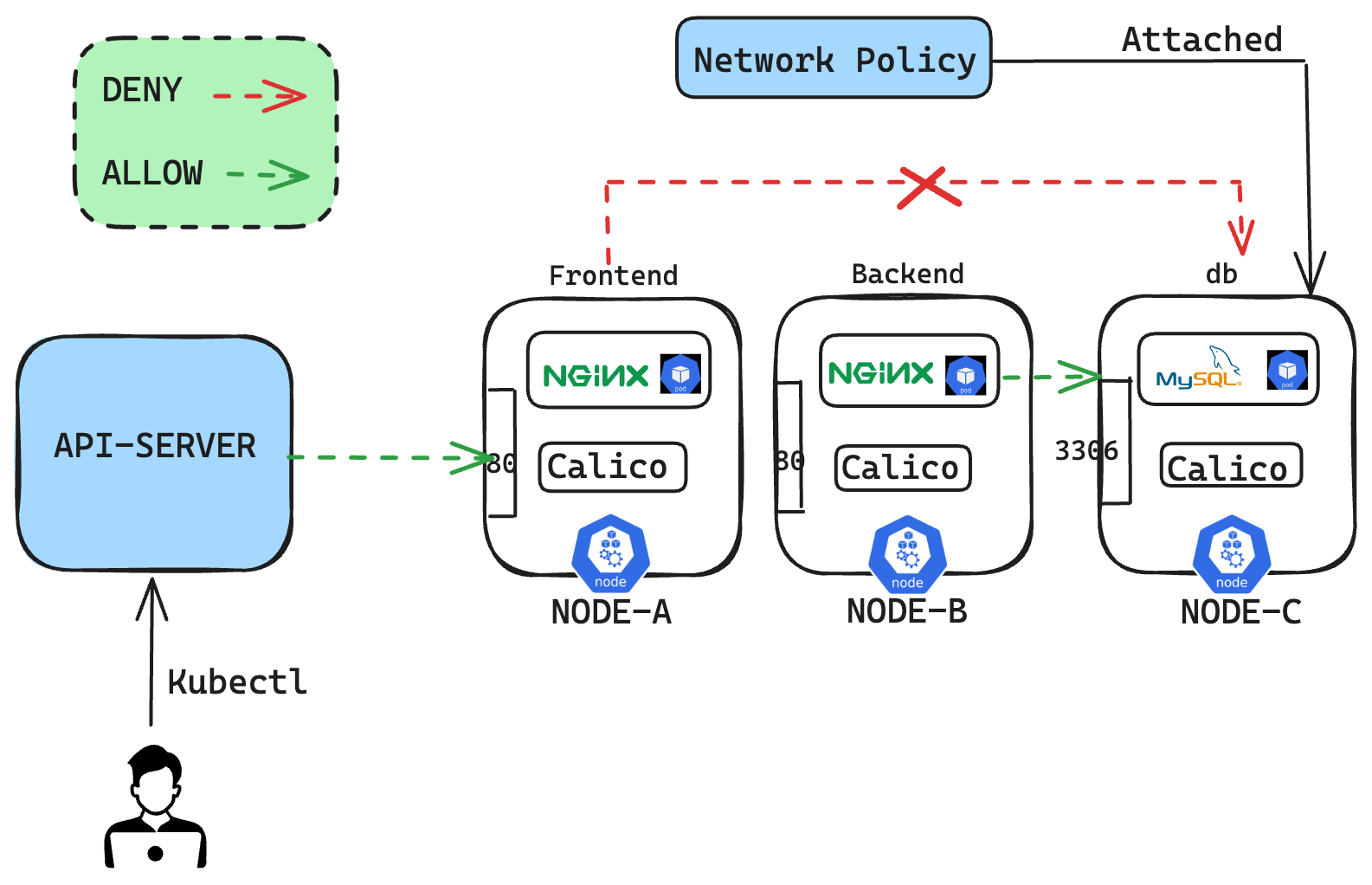

A NetworkPolicy is a Kubernetes object that defines rules for how traffic flows into and out of Pods within a cluster. Network Policies control communication between Pods, Services, and external endpoints at the IP address and port level (OSI layers 3 and 4). The Kubernetes API server only stores these objects; they are enforced by the cluster's Container Network Interface (CNI) plugin, which typically runs as a DaemonSet on every node.
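To make the description above concrete, here is a minimal sketch of the main fields in a NetworkPolicy manifest. The policy name, the `app: example` / `app: client` labels, the CIDR, and the port numbers are all hypothetical placeholders chosen for illustration, not part of the demo later in this post.

```yaml
# Hypothetical example: names, labels, CIDR and ports are placeholders.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: example-policy          # hypothetical name
  namespace: default
spec:
  # Which Pods in this namespace the policy applies to (selected by label).
  podSelector:
    matchLabels:
      app: example              # hypothetical label
  # Which traffic directions this policy restricts for the selected Pods.
  policyTypes:
    - Ingress
    - Egress
  # Inbound traffic allowed to the selected Pods.
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: client       # hypothetical label
      ports:
        - protocol: TCP
          port: 8080
  # Outbound traffic allowed from the selected Pods.
  # Because Egress is listed, any other outbound traffic (including DNS) is denied.
  egress:
    - to:
        - ipBlock:
            cidr: 10.0.0.0/24   # hypothetical CIDR
      ports:
        - protocol: TCP
          port: 5432
```

Anything not explicitly allowed in a direction listed under `policyTypes` is denied for the selected Pods; Pods not selected by any policy keep the default allow-all behavior.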

✴ Why do we use Network Policies in Kubernetes?

There are several reasons to use Network Policies:

Traffic Control: Network Policies define how Pods communicate with each other and with Services, letting users allow or restrict traffic based on criteria such as Pod labels, namespaces, and ports.

Security: By default, Kubernetes allows all traffic between Pods, and between Pods and Services, within the cluster network. Network Policies let users deny all traffic and then explicitly allow only the communication that is needed, which improves the security of the applications running in the cluster.

Isolation: Network Policies help isolate different applications or services within the cluster.

Example: A frontend application can be restricted to only communicate with its backend service, while the backend service may only communicate with a database service.

Compliance: Network Policies help organizations meet compliance requirements by defining and enforcing stricter network access rules.
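The Security and Isolation points above can be sketched as two policies. The first is a common default-deny pattern that selects every Pod in the namespace and allows nothing; the second lets the frontend send traffic only to the backend on port 80, matching the Isolation example. This sketch assumes the `role: frontend` / `role: backend` labels used in the demo later in this post, and assumes cluster DNS runs in `kube-system` (matched via the `kubernetes.io/metadata.name` label that recent Kubernetes versions add to every namespace). The policy names are hypothetical.

```yaml
# 1) Default deny: applies to all Pods in the namespace and lists no rules,
#    so all ingress and egress traffic for those Pods is denied.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-all        # hypothetical name
spec:
  podSelector: {}               # empty selector = every Pod in this namespace
  policyTypes:
    - Ingress
    - Egress
---
# 2) Frontend may send traffic only to backend Pods on TCP 80, plus DNS lookups.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: frontend-egress         # hypothetical name
spec:
  podSelector:
    matchLabels:
      role: frontend
  policyTypes:
    - Egress
  egress:
    - to:
        - podSelector:
            matchLabels:
              role: backend
      ports:
        - protocol: TCP
          port: 80
    # Allow DNS to any Pod in kube-system (assumes cluster DNS runs there).
    - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kube-system
      ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
```

Policies are additive, so the second policy opens a hole in the first only for frontend egress. Because `default-deny-all` also blocks ingress, the backend would additionally need an ingress rule allowing `role: frontend` on port 80 for this path to work end to end.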

✴ Create a KIND cluster that supports network policy

When creating a Kind cluster, the default CNI plugin used is kindnet.

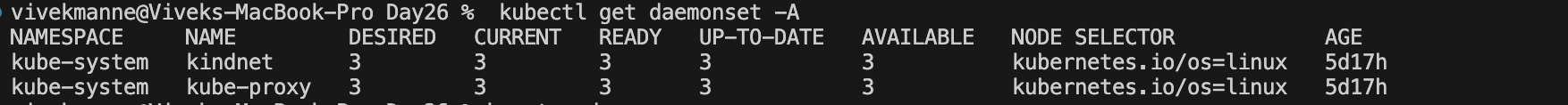

Verify the CNI plugin on our existing KIND cluster

CNI plugins are cluster add-ons, typically deployed as a DaemonSet on every node, that provide Pod networking and, by default, allow all Pods to communicate with each other.

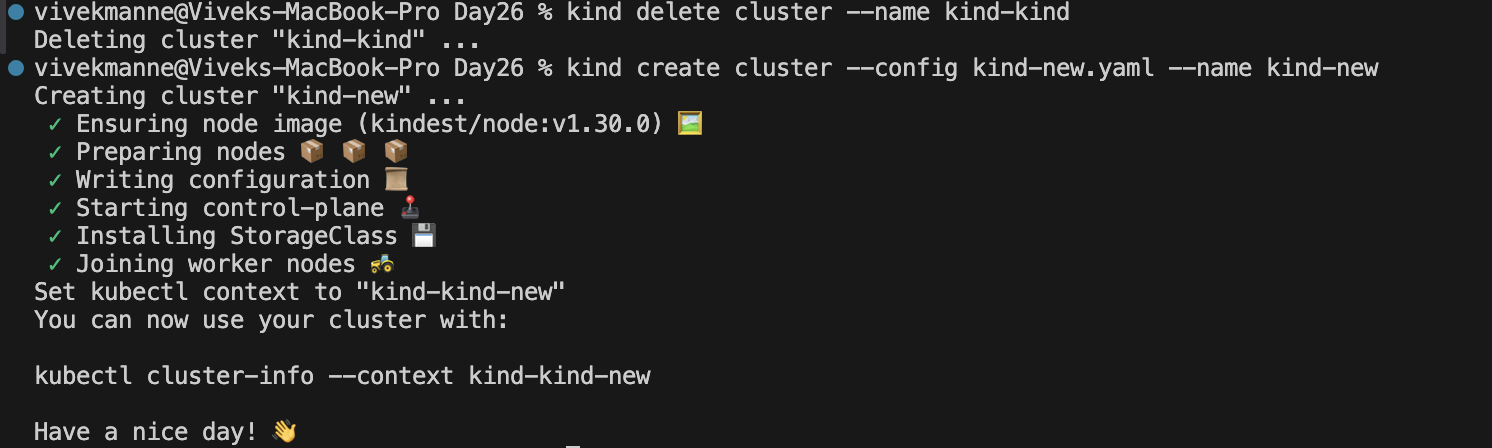

Let's recreate the KIND cluster with the default CNI plugin disabled. This option is set in the cluster configuration at creation time.

Kind cluster config (YAML):

```yaml
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
  - role: control-plane
    extraPortMappings:
      - containerPort: 30001
        hostPort: 30001
  - role: worker
  - role: worker
networking:
  disableDefaultCNI: true     # disable kindnet, the default CNI plugin that ships with kind
  podSubnet: 192.168.0.0/16   # Pod CIDR for the cluster
```

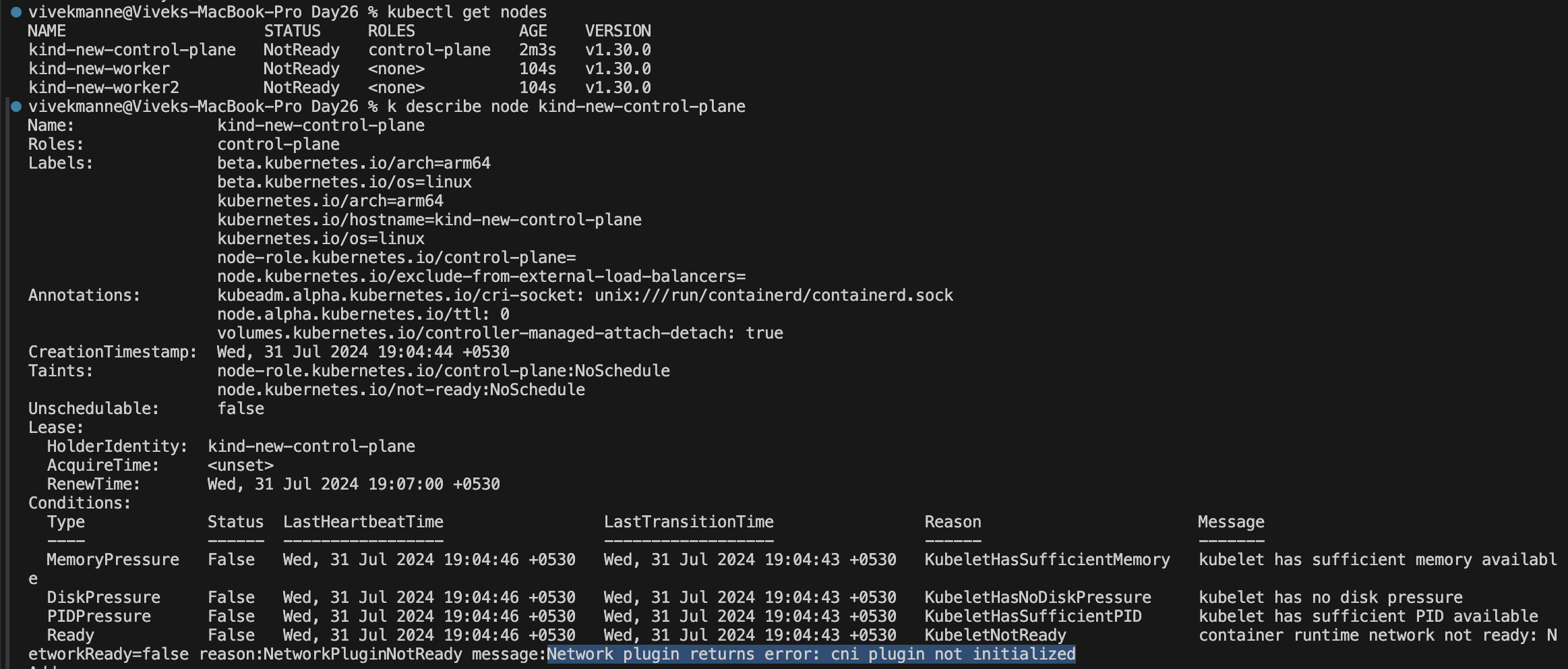

Once the cluster is created, verify whether the nodes are up and running.

As you can see, the nodes are NotReady because no CNI plugin has been installed yet.

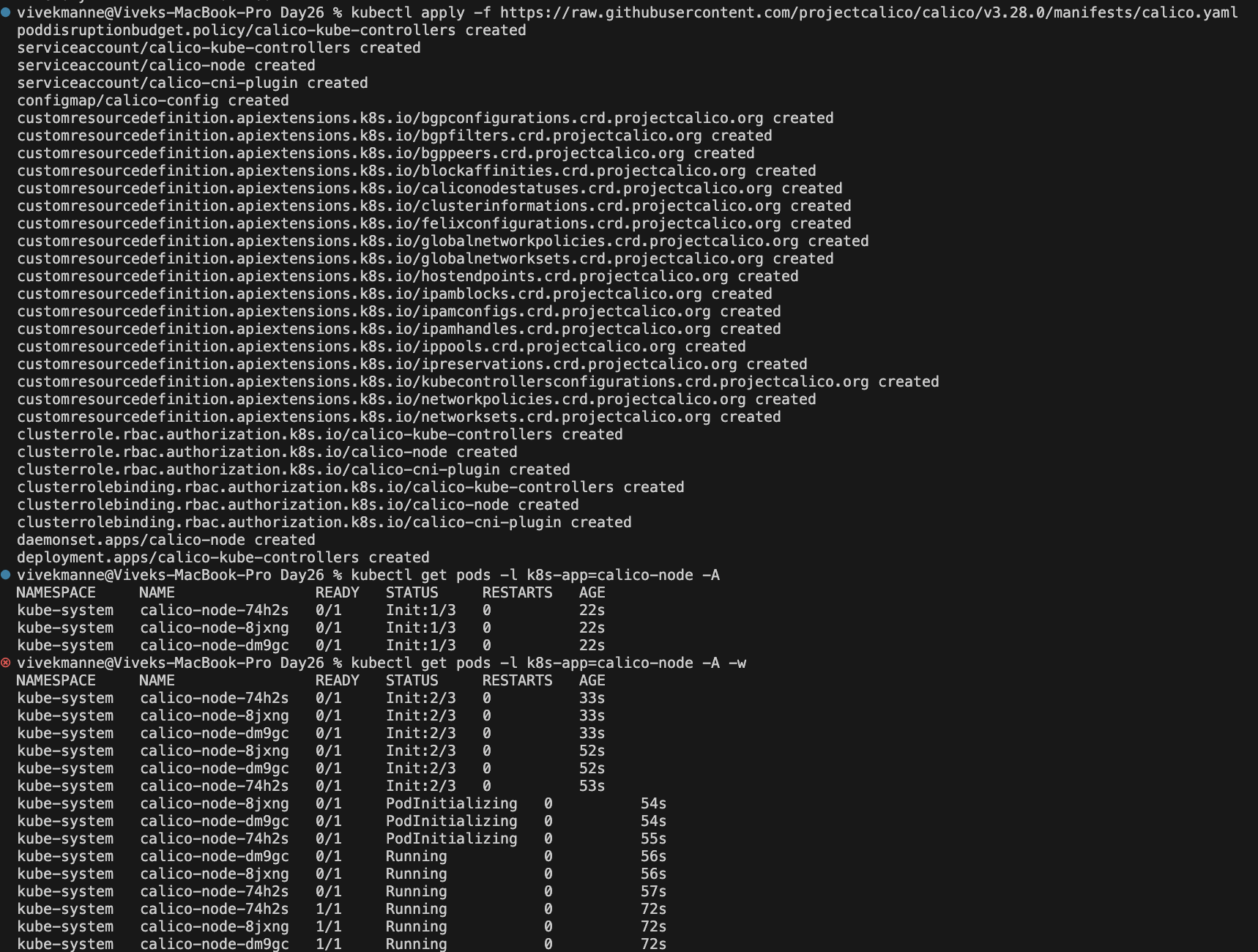

Install the Calico network add-on on our Kind cluster.

Documentation for installing Calico on a kind cluster: https://docs.tigera.io/calico/latest/getting-started/kubernetes/kind

Once it is installed, verify the Calico installation with the following command (`-w` keeps watching for changes):

```bash
kubectl get pods -l k8s-app=calico-node -A -w
```

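NOTE: Not all CNI plugins support Network Policies (for example, Flannel does not). With such a plugin, the API server still accepts NetworkPolicy objects, but they have no effect.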

Create three workloads, each a single Pod (one replica) exposed through a Service, with the following configuration:

name: frontend, image: nginx, replicas: 1, containerPort: 80

name: backend, image: nginx, replicas: 1, containerPort: 80

name: db (Pod `mysql`, Service `db`), image: mysql, replicas: 1, containerPort: 3306
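The list above speaks of replicas, while the manifest that follows uses bare Pods for simplicity. If you prefer real Deployments, the frontend could be sketched as below; backend and db would follow the same pattern. This is an illustrative alternative, not what the demo applies, and it assumes the same `role: frontend` label so the existing Service and Network Policies still match.

```yaml
# Sketch: frontend as a Deployment instead of a bare Pod.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
  labels:
    role: frontend
spec:
  replicas: 1
  selector:
    matchLabels:
      role: frontend            # must match the Pod template labels below
  template:
    metadata:
      labels:
        role: frontend          # label selected by the Service and Network Policies
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
```

Use either this Deployment or the bare `frontend` Pod, not both, or two Pods will carry the same `role: frontend` label. Deployment Pods also get generated names, so `kubectl exec` commands must use the actual Pod name.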

Application manifest:

```yaml
# Frontend Pod
apiVersion: v1
kind: Pod
metadata:
  name: frontend
  labels:
    role: frontend
spec:
  containers:
    - name: nginx
      image: nginx
      ports:
        - name: http
          containerPort: 80
          protocol: TCP
---
# Frontend Service (selects Pods labeled role: frontend)
apiVersion: v1
kind: Service
metadata:
  name: frontend
  labels:
    role: frontend
spec:
  selector:
    role: frontend
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
# Backend Pod
apiVersion: v1
kind: Pod
metadata:
  name: backend
  labels:
    role: backend
spec:
  containers:
    - name: nginx
      image: nginx
      ports:
        - name: http
          containerPort: 80
          protocol: TCP
---
# Backend Service (selects Pods labeled role: backend)
apiVersion: v1
kind: Service
metadata:
  name: backend
  labels:
    role: backend
spec:
  selector:
    role: backend
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
# db Service (selects Pods labeled name: mysql)
apiVersion: v1
kind: Service
metadata:
  name: db
  labels:
    name: mysql
spec:
  selector:
    name: mysql
  ports:
    - protocol: TCP
      port: 3306
      targetPort: 3306
---
# MySQL Pod (demo credentials only; use a Secret in real clusters)
apiVersion: v1
kind: Pod
metadata:
  name: mysql
  labels:
    name: mysql
spec:
  containers:
    - name: mysql
      image: mysql:latest
      env:
        - name: "MYSQL_USER"
          value: "mysql"
        - name: "MYSQL_PASSWORD"
          value: "mysql"
        - name: "MYSQL_DATABASE"
          value: "testdb"
        - name: "MYSQL_ROOT_PASSWORD"
          value: "verysecure"
      ports:
        - name: mysql
          containerPort: 3306
          protocol: TCP
```

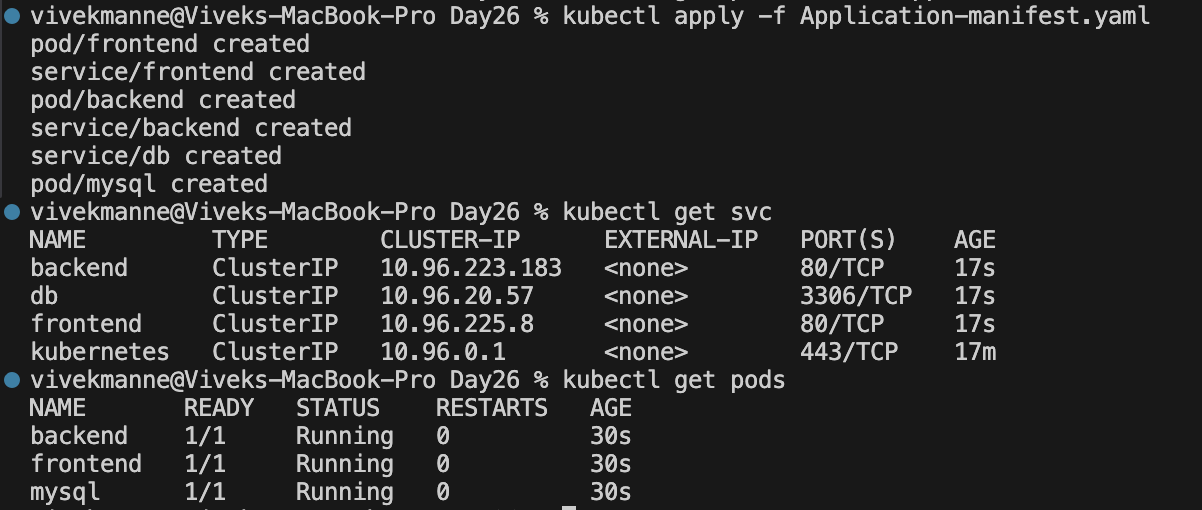

This shows that the frontend, backend, and db Services and Pods have been created.

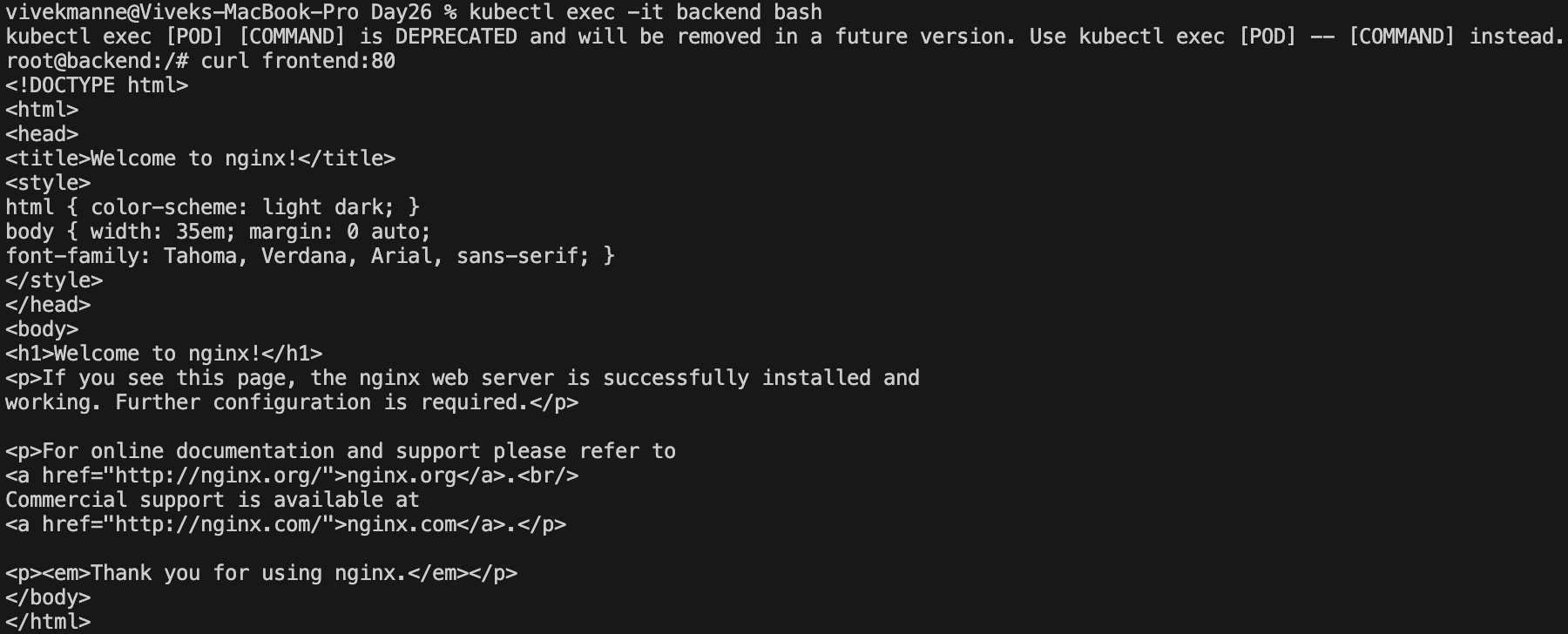

Let's run a connectivity test to confirm that all the Pods can communicate with each other.

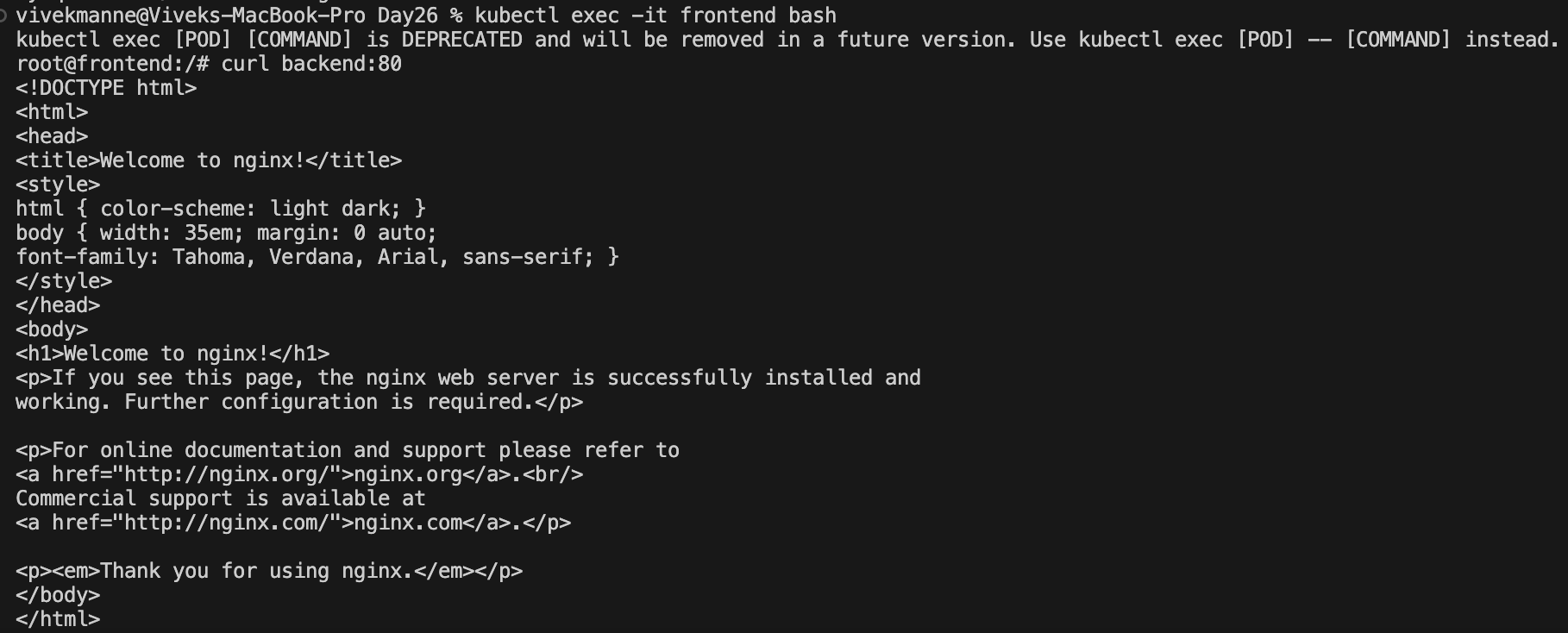

✅ frontend pod ---> backend pod

✅ frontend pod ---> db pod

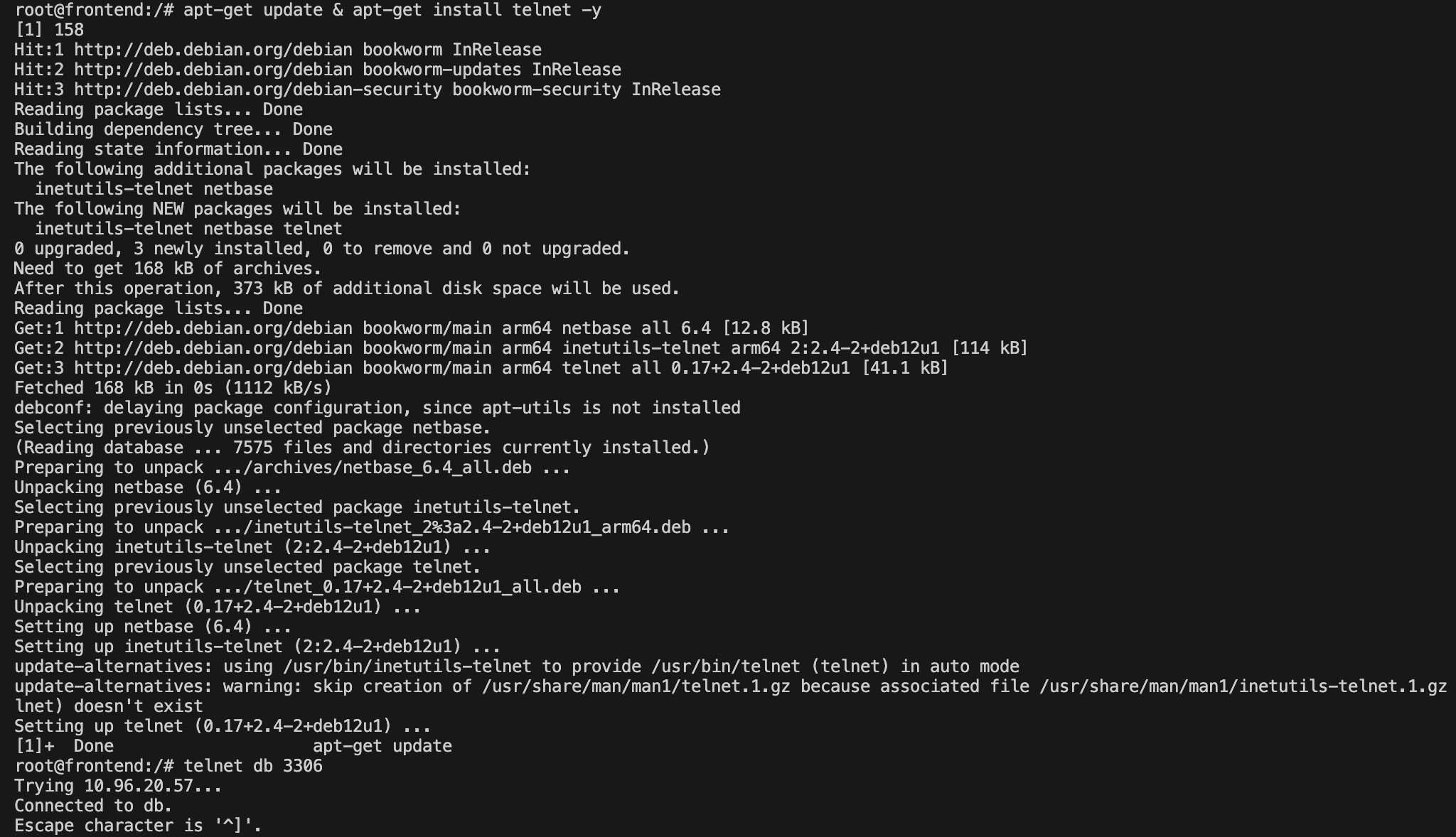

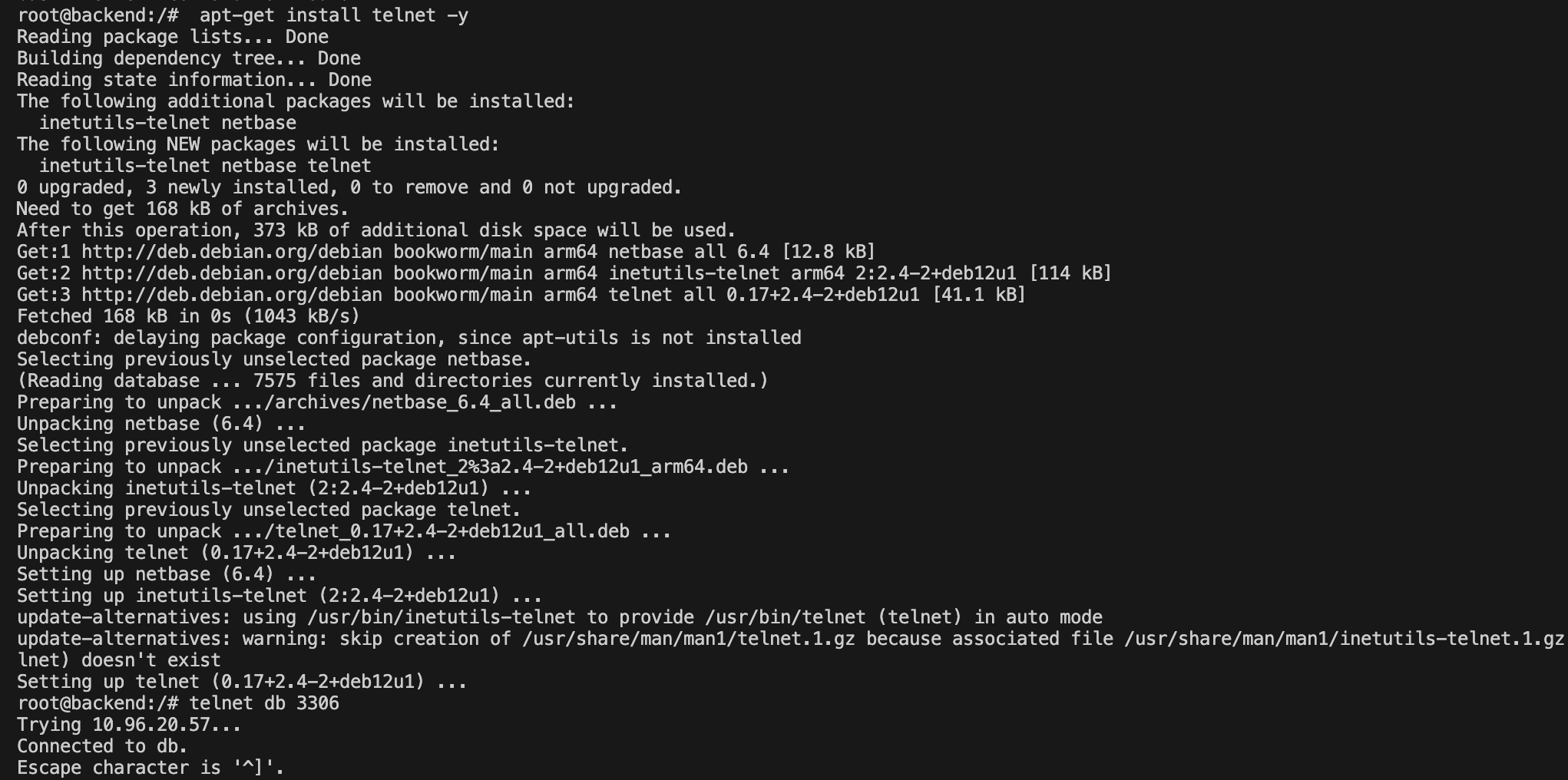

To test the db connection, first install telnet in the Pod, then use it to check connectivity to the db Service on port 3306.

✅ backend pod ----> frontend pod

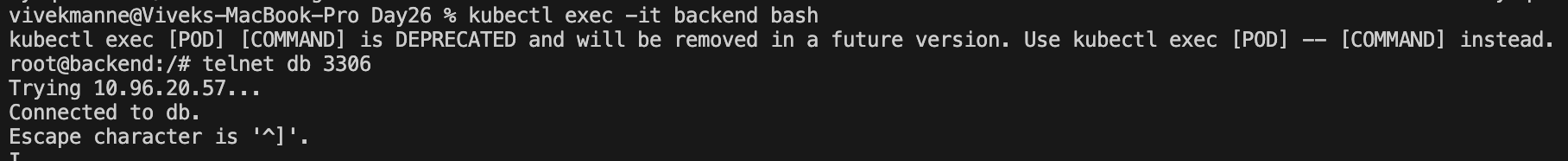

✅ backend pod ----> db pod

This shows that, as long as no Network Policies are in place, every Pod can communicate with every other Pod in the cluster network.

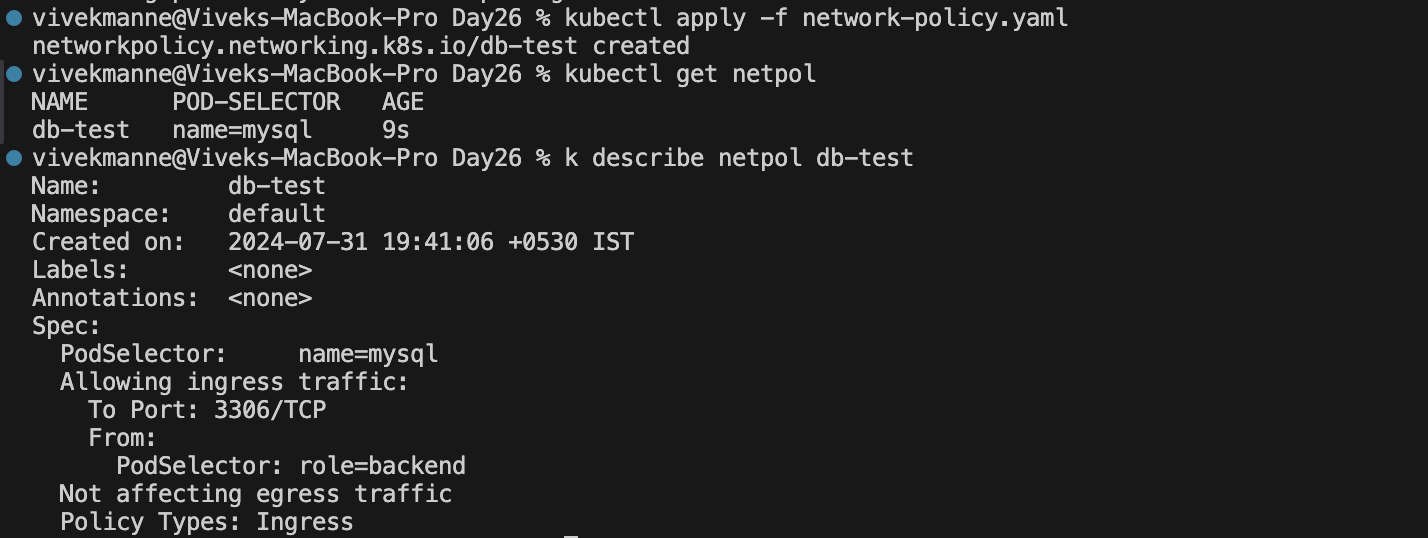

Now create a Network Policy that restricts access so that only the backend Pod can reach the db Service on port 3306.

```yaml
# This NetworkPolicy applies to Pods labeled `name: mysql` and allows inbound
# traffic only from Pods labeled `role: backend` on port 3306.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: db-test
spec:
  podSelector:
    matchLabels:
      name: mysql
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              role: backend
      ports:
        - protocol: TCP         # TCP is the default; stated explicitly for clarity
          port: 3306
```

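This Network Policy selects the mysql Pod through its `name: mysql` label and allows inbound traffic to it only from Pods labeled `role: backend`, on port 3306. Because the Pod is now selected by a policy of type Ingress, all other inbound traffic to it is denied.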
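A `podSelector` on its own inside `from`, as in the policy above, only matches Pods in the policy's own namespace. If you later extend this rule to other namespaces, the YAML indentation decides whether selectors are combined with AND or OR. The two fragments below (only the `ingress` section, not complete manifests) illustrate the difference; the `team: payments` namespace label is a hypothetical example.

```yaml
# Variant A: ONE "from" element containing both selectors => AND.
# Allows Pods labeled role: backend that live in namespaces labeled team: payments.
ingress:
  - from:
      - namespaceSelector:
          matchLabels:
            team: payments      # hypothetical namespace label
        podSelector:
          matchLabels:
            role: backend
    ports:
      - protocol: TCP
        port: 3306
---
# Variant B: TWO separate "from" elements => OR.
# Allows ANY Pod in namespaces labeled team: payments,
# OR Pods labeled role: backend in the policy's own namespace.
ingress:
  - from:
      - namespaceSelector:
          matchLabels:
            team: payments      # hypothetical namespace label
      - podSelector:
          matchLabels:
            role: backend
    ports:
      - protocol: TCP
        port: 3306
```

The only difference is the `-` in front of `podSelector`, yet Variant B admits far more traffic, so it is worth double-checking this indentation whenever a policy combines selectors.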

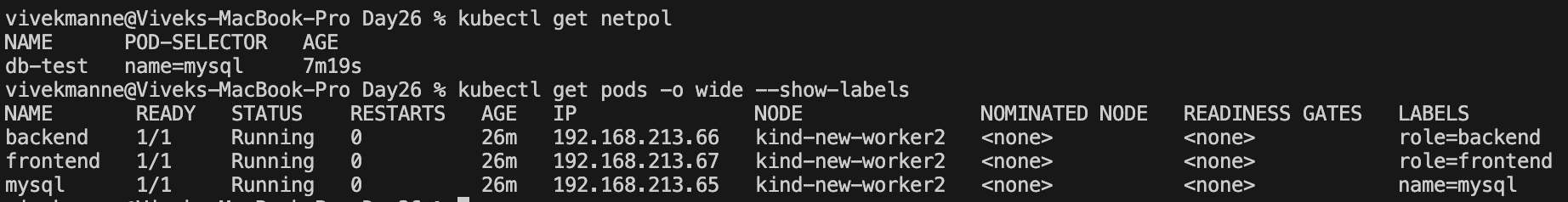

Verify the labels on the mysql Pod to confirm this:

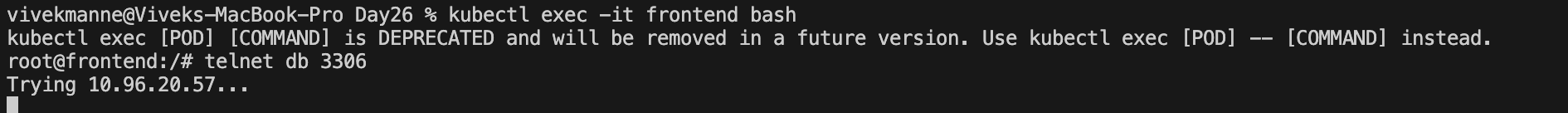

Now let's check connectivity from the backend Pod to the db Pod, and from the frontend Pod to the db Pod:

✅ backend pod ----> db pod

❌ frontend pod ----> db pod

This clearly shows that we have restricted network traffic between the frontend Pod and the db Pod, allowing only traffic from the backend Pod to access the db Pod through the configured Network Policy enforced by the Calico CNI plugin within the cluster.

#Kubernetes #NetworkPolicies #CNIPlugins #40DaysofKubernetes #CKASeries


Written by

Gopi Vivek Manne


I'm Gopi Vivek Manne, a passionate DevOps Cloud Engineer with a strong focus on AWS cloud migrations. I have expertise in a range of technologies, including AWS, Linux, Jenkins, Bitbucket, GitHub Actions, Terraform, Docker, Kubernetes, Ansible, SonarQube, JUnit, AppScan, Prometheus, Grafana, Zabbix, and container orchestration. I'm constantly learning and exploring new ways to optimize and automate workflows, and I enjoy sharing my experiences and knowledge with others in the tech community. Follow me for insights, tips, and best practices on all things DevOps and cloud engineering!