Techniques and Tools for Communication in Distributed Training

Denny Wang

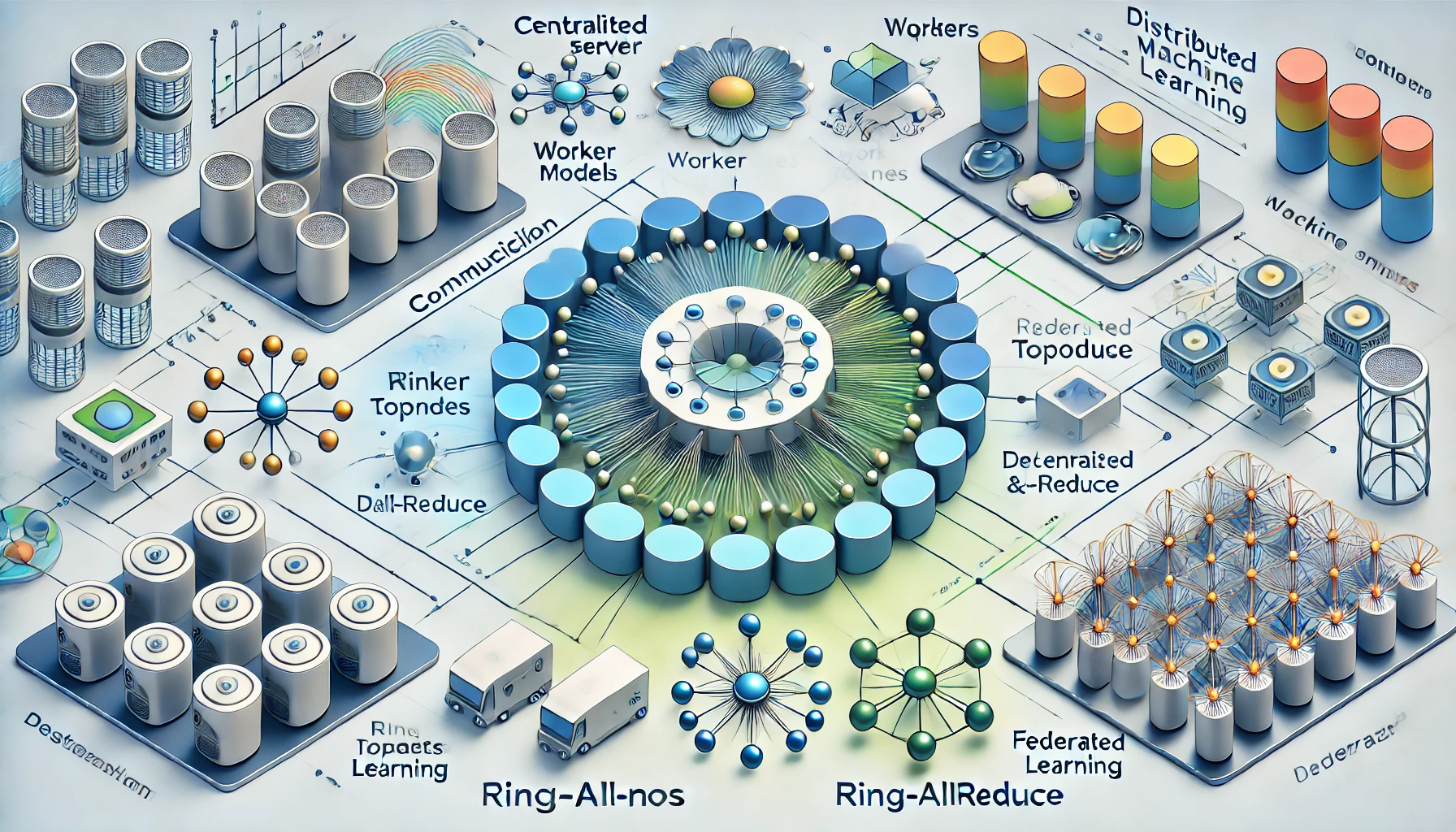

Distributed training in machine learning often involves multiple nodes working together to train a model. Effective communication between these nodes is crucial for synchronizing updates, sharing information, and ensuring consistency. Several techniques and tools facilitate this communication, each with its own strengths and use cases.

Parameter Server Model

The Parameter Server model is a common approach in distributed training, particularly in traditional data parallelism. In this setup, there are two types of nodes: parameter servers and worker nodes.

Parameter Servers: These nodes store and manage the global model parameters. They aggregate updates from worker nodes and distribute the updated parameters back to them.

Worker Nodes: Each worker processes a subset of the data, computes gradients based on its local dataset, and sends these gradients to the parameter servers. The parameter servers then use these gradients to update the global model.

This model scales to large datasets and models, but the parameter servers can become a communication bottleneck as the number of worker nodes increases, because every worker pushes gradients to and pulls parameters from the same small set of servers.
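The pull, compute, push cycle described above can be sketched in a single process. This is a toy simulation rather than a real distributed implementation: the `ParameterServer` class, the `worker_gradient` helper, the one-parameter model `y = w * x`, and the data are all assumptions made for illustration.

```python
# Toy, single-process simulation of one parameter server and three workers.
# Model: y = w * x with squared-error loss. All names here are illustrative.

def worker_gradient(w, shard):
    """Gradient of the mean squared error on this worker's local data shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

class ParameterServer:
    def __init__(self, w0, lr):
        self.w = w0      # global model parameter
        self.lr = lr     # learning rate

    def pull(self):
        # Workers fetch the current global parameter.
        return self.w

    def push(self, grads):
        # Average the workers' gradients and apply one SGD step.
        self.w -= self.lr * sum(grads) / len(grads)

# Data generated from y = 3x, split into equal shards across three workers.
data = [(x, 3.0 * x) for x in [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]]
shards = [data[0:2], data[2:4], data[4:6]]

server = ParameterServer(w0=0.0, lr=0.01)
for step in range(200):
    w = server.pull()                                        # every worker pulls
    grads = [worker_gradient(w, shard) for shard in shards]  # local compute
    server.push(grads)                                       # server aggregates

final_w = server.pull()  # approaches 3.0, the slope used to generate the data
```

In a real deployment each worker would run on its own machine and the `pull` and `push` calls would be network requests, which is where the bottleneck mentioned above comes from.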

All-Reduce and Ring-AllReduce

All-Reduce is a collective operation in which every node contributes its locally computed gradients and every node receives the combined result. The gradients are summed element-wise across all nodes and then divided by the number of nodes, so each node ends up with the same averaged gradients to update its local copy of the model.

Ring-AllReduce: A bandwidth-efficient way to implement All-Reduce that organizes the nodes in a logical ring. Each node splits its gradients into chunks and, at every step, sends one chunk to its next neighbor while receiving one from its previous neighbor. Partial sums accumulate as chunks travel around the ring, and the completed chunks are then circulated once more so that all nodes hold the same result. With N nodes, each node sends about 2(N-1)/N times the size of its gradients in total, so the per-node traffic stays nearly constant as nodes are added.

All-Reduce and Ring-AllReduce are often implemented using libraries such as NCCL (NVIDIA Collective Communication Library) or MPI (Message Passing Interface), providing efficient communication in GPU-based systems.
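To make the ring procedure concrete, here is a minimal single-process simulation of its two phases (reduce-scatter, then all-gather). It is a sketch under simplifying assumptions: the function name `ring_allreduce` is hypothetical, the vector length must divide evenly by the number of nodes, and plain Python lists stand in for GPU buffers. Production systems would call NCCL or MPI instead.

```python
def ring_allreduce(vectors):
    """Simulate ring all-reduce (sum) over equal-length vectors, one per node."""
    n = len(vectors)
    length = len(vectors[0])
    assert length % n == 0, "sketch assumes the vector splits evenly into n chunks"
    size = length // n
    # Each node holds its own copy of the data, split into n chunks.
    bufs = [[list(v[c * size:(c + 1) * size]) for c in range(n)] for v in vectors]

    # Phase 1: reduce-scatter. At each step node i sends chunk (i - step) % n
    # to node i + 1, which adds it to its own copy of that chunk.
    for step in range(n - 1):
        # Snapshot outgoing chunks so all sends in a step happen "at once".
        sends = [((i - step) % n, list(bufs[i][(i - step) % n])) for i in range(n)]
        for i, (c, chunk) in enumerate(sends):
            dst = (i + 1) % n
            bufs[dst][c] = [a + b for a, b in zip(bufs[dst][c], chunk)]
    # Now node i holds the fully summed chunk (i + 1) % n.

    # Phase 2: all-gather. Completed chunks travel around the ring and
    # overwrite the stale partial sums on each node.
    for step in range(n - 1):
        sends = [((i + 1 - step) % n, list(bufs[i][(i + 1 - step) % n])) for i in range(n)]
        for i, (c, chunk) in enumerate(sends):
            dst = (i + 1) % n
            bufs[dst][c] = chunk

    # Flatten each node's chunks back into one vector.
    return [[x for chunk in node for x in chunk] for node in bufs]

# Three nodes, each with its own local gradients (made-up values).
grads = [
    [1.0, 2.0, 3.0],
    [4.0, 5.0, 6.0],
    [7.0, 8.0, 9.0],
]
summed = ring_allreduce(grads)
averaged = [[x / len(grads) for x in node] for node in summed]
```

Every node ends with the same summed vector, and dividing by the node count gives the averaged gradients. Each node sends 2(N-1) chunks of size 1/N of the vector, which is where the near-constant per-node traffic comes from.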

Gossip Algorithms

Gossip algorithms offer a decentralized approach to communication, where each node randomly selects a few peers to exchange information. This process is repeated iteratively, allowing information to spread throughout the network.

Advantages: Gossip algorithms are robust and fault-tolerant, making them suitable for scenarios with unreliable networks or heterogeneous systems.

Challenges: Because each node only ever sees a gradually mixing, approximate average rather than the exact global result, gossip algorithms may converge more slowly than tightly synchronized collective methods like All-Reduce.
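A minimal sketch of the idea, using the simplest pairwise variant: in each round one randomly chosen pair of nodes replaces both of their values with the pair's average. The function name `gossip_average`, the fixed seed, and the starting values are assumptions for illustration; practical gossip-based training exchanges model parameters with several peers at a time.

```python
import random

def gossip_average(values, rounds, seed=0):
    """Pairwise gossip: each round, one random pair of nodes averages its values."""
    rng = random.Random(seed)   # seeded only to make the sketch repeatable
    vals = list(values)
    for _ in range(rounds):
        i, j = rng.sample(range(len(vals)), 2)   # pick two distinct nodes
        vals[i] = vals[j] = (vals[i] + vals[j]) / 2
    return vals

start = [0.0, 10.0, 20.0, 30.0, 40.0]   # one scalar "parameter" per node
after = gossip_average(start, rounds=200)

mean_after = sum(after) / len(after)    # pairwise averaging preserves the mean
spread = max(after) - min(after)        # shrinks as information mixes
```

No node ever talks to a coordinator, yet all values drift toward the global mean. A node that drops out for a few rounds does not block the others, which illustrates the robustness noted above, while the number of rounds needed illustrates the slower convergence.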

Horovod

Horovod is an open-source library designed to simplify distributed training. It abstracts the communication layer and integrates with popular deep learning frameworks such as TensorFlow, PyTorch, and MXNet. Horovod uses NCCL or MPI for communication and is built primarily around data-parallel training with All-Reduce, which lets existing single-GPU training scripts scale to many GPUs with relatively few code changes.

Federated Learning: A Decentralized Approach

Federated Learning is a unique form of distributed training where data remains decentralized across multiple devices. Instead of transferring raw data to a central server, each device trains a local model and shares only the model updates (e.g., gradients or weights) with a central aggregator. This approach is particularly beneficial for privacy-sensitive applications.
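One round of this process can be sketched as follows. The aggregation rule shown, averaging client weights in proportion to local dataset size, is the commonly used federated averaging scheme; the article does not name a specific aggregator, so treat that choice, the helper names `local_update` and `federated_round`, and the toy data as assumptions.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """Client-side training: a few gradient steps on y = w * x using only local data."""
    w = weights
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_round(global_w, client_datasets):
    """One round: clients train locally, the aggregator averages by dataset size."""
    # Only the updated weight and the sample count leave each client.
    updates = [(local_update(global_w, d), len(d)) for d in client_datasets]
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total

# Three clients with different amounts of private data, all drawn from y = 2x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(0.5, 1.0)],
    [(1.5, 3.0), (1.0, 2.0), (0.5, 1.0)],
]

global_w = 0.0
for _ in range(20):
    global_w = federated_round(global_w, clients)
# global_w approaches 2.0 without any raw (x, y) pair reaching the aggregator
```

The raw data never appears in `federated_round` except through `local_update`, which in a real system would run on the device itself.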

Key Features of Federated Learning

Data Privacy and Security: By keeping raw data on local devices, Federated Learning significantly enhances privacy. Model updates can still leak information about the data they were computed from, so techniques like differential privacy and secure aggregation are used to further protect individual data during the model update process.

Reduced Communication Costs: Only model updates are transmitted, rather than large datasets, reducing the overall communication overhead.

Scalability: Federated Learning leverages the computational power of numerous edge devices, enabling scalable training across a vast number of data sources.
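The secure aggregation technique mentioned under Data Privacy and Security can be illustrated with pairwise masking: each pair of clients agrees on a random mask, one adds it and the other subtracts it, so the masks cancel in the sum while individual updates stay hidden from the aggregator. This is a toy sketch only. The function name `masked_updates`, the integer-encoded updates, and the single shared random generator are assumptions; real protocols derive masks from per-pair secrets and must handle clients that drop out.

```python
import random

MODULUS = 2**32  # arithmetic is done modulo a fixed value so masks wrap around

def masked_updates(updates, seed=0):
    """Add pairwise masks that cancel in the sum (toy secure aggregation)."""
    rng = random.Random(seed)
    n = len(updates)
    masked = list(updates)
    for i in range(n):
        for j in range(i + 1, n):
            # Stands in for a secret known only to clients i and j.
            mask = rng.randrange(MODULUS)
            masked[i] = (masked[i] + mask) % MODULUS
            masked[j] = (masked[j] - mask) % MODULUS
    return masked

updates = [5, 11, 7]                 # integer-encoded client updates (made up)
masked = masked_updates(updates)     # what the aggregator actually receives
aggregate = sum(masked) % MODULUS    # masks cancel, leaving the true sum
```

The aggregator learns the total of the updates but sees only masked values from each individual client.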

Challenges and Considerations

Heterogeneous Data and Devices: Devices in a federated learning system may have varying computational power and data distributions, complicating the training process and model convergence.

Communication and Coordination: Despite reduced data transmission, coordinating updates across potentially millions of devices can introduce latency and require robust communication protocols.

Choosing the Right Approach

When deciding between Federated Learning and traditional data parallelism, consider factors such as data privacy requirements, the distribution of data, and the computational resources available. Federated Learning excels in environments where data privacy is paramount and data cannot be centralized. In contrast, traditional data parallelism with a Parameter Server model or All-Reduce techniques is better suited to environments where the data can be centralized and the hardware is homogeneous and well connected.

Conclusion

Both Federated Learning and traditional data parallelism offer valuable approaches to distributed machine learning, each with its own advantages and trade-offs. Understanding these techniques and tools is crucial for selecting the right strategy to meet your specific training needs and constraints.
