Local Kubernetes Cluster Setup Made Easy with k3s

Ankan Banerjee

I don't want to use the cloud 🌩!

Kubernetes (K8s) has revolutionized the way we manage containerized applications and microservices, scaling and orchestrating them with ease. However, it can seem daunting to set up, which leads most of us to consider cloud options. While cloud-based Kubernetes services offer many advantages, there are some downsides to consider:

Cost: Running Kubernetes clusters in the cloud, using services like AKS or EKS, can become expensive, especially as you scale up your resources and add more services. This is particularly important for organizations where budgeting and cost-saving are major concerns.

Vendor Lock-In: Using a specific cloud provider's Kubernetes service can lead to vendor lock-in, making it difficult to migrate to another provider or an on-premises solution.

Complexity: Managing and configuring cloud-based Kubernetes can be complex, requiring a good understanding of both Kubernetes and the specific cloud provider's infrastructure.

Limited Customization: Cloud-based Kubernetes services might have limitations in terms of customization and control compared to running your own Kubernetes cluster on-premises or in a self-managed environment.

Enter k3s – a lightweight Kubernetes distribution perfect for local development, home labs, testing and even production environments. The best part is that it comes as a distribution and doesn't require you to set up all the Kubernetes components from scratch!

Why k3s❓

Lightweight: k3s has a smaller footprint than full-fledged Kubernetes, making it ideal for resource-constrained environments.

Simple Installation: Get your cluster running with minimal configuration.

Features: k3s includes many essential Kubernetes features out-of-the-box, like networking, storage, and load balancing.

Why not Minikube?: Unlike Minikube, which is primarily designed for local development and playing around, k3s is optimized for both development and production environments. It provides a more seamless transition from development to production.

Community and Support: This is the best part about k3s. It has strong community support, was created by Rancher Labs (now part of SUSE), and is a CNCF project, which makes it a reliable and well-maintained solution. This can be a crucial factor when choosing a Kubernetes distribution for long-term projects. So if you get errors or bugs, the community has got your back!

Prerequisites

💡 Before we begin, ensure you have:

A Linux machine (virtual or physical) with at least 1 GB RAM – This guide will use Debian.

Basic understanding of Linux commands.

Installation Steps 🔧

Install k3s Server:

curl -sfL https://get.k3s.io | sh -

After installation, the kubeconfig file will be written to /etc/rancher/k3s/k3s.yaml, and the kubectl installed by K3s will automatically use it. The K3s service is set up to restart automatically if the node reboots or if the process crashes or is killed.

Update kubeconfig environment variable: Export the kubeconfig file path to the KUBECONFIG environment variable and add it to your .bashrc file.

export KUBECONFIG=/etc/rancher/k3s/k3s.yaml

Set correct permissions: Ensure your user has the necessary permissions to access the kubeconfig file.

sudo chown $(id -u):$(id -g) /etc/rancher/k3s/k3s.yaml && chmod 600 /etc/rancher/k3s/k3s.yaml

Verify Installation:

kubectl get nodes

You should see a single node with the status Ready. The node is named after the machine's hostname (server in this guide).
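The KUBECONFIG step above asks you to add the export line to your .bashrc. Here is a minimal sketch of one way to do that without appending the same line twice if you re-run it. The function name persist_kubeconfig is my own invention for illustration and is not part of k3s.

```shell
# Sketch: persist the KUBECONFIG export in a shell rc file without duplicating it.
# persist_kubeconfig is a hypothetical helper, not something k3s ships.
persist_kubeconfig() {
  local kubeconfig="$1"   # e.g. /etc/rancher/k3s/k3s.yaml
  local rcfile="$2"       # e.g. "$HOME/.bashrc"
  local line="export KUBECONFIG=${kubeconfig}"

  # -x matches the whole line, -F treats the pattern as a fixed string, -q is quiet.
  if ! grep -qxF "$line" "$rcfile" 2>/dev/null; then
    printf '%s\n' "$line" >> "$rcfile"
  fi
}

# Example usage (commented out so that sourcing this snippet changes nothing):
# persist_kubeconfig /etc/rancher/k3s/k3s.yaml "$HOME/.bashrc"
# . "$HOME/.bashrc" && kubectl get nodes
```

Running the helper a second time leaves the rc file untouched, which is handy if you rebuild your VM often.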

*P.S.: This is the default installation and includes the Traefik ingress controller. If you don't want to install Traefik, use this command:*

curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC="--disable traefik" sh -
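If you would rather not pass flags on the command line, k3s also reads its options from a config file (by default /etc/rancher/k3s/config.yaml) when the service starts. The sketch below writes an equivalent "disable Traefik" setting to a path you pass in, so you can try it against a scratch directory first. The helper name write_k3s_config is hypothetical.

```shell
# Sketch: write a k3s config file that disables Traefik.
# write_k3s_config is a hypothetical helper; the target path is an argument so
# the sketch can be tried on a scratch directory before touching /etc.
write_k3s_config() {
  local config_path="$1"   # k3s reads /etc/rancher/k3s/config.yaml by default
  mkdir -p "$(dirname "$config_path")" || return 1
  cat > "$config_path" <<'EOF'
# Equivalent to passing --disable traefik on the command line
disable:
  - traefik
EOF
}

# Example usage (needs root for the real path, so it is left commented out):
# write_k3s_config /etc/rancher/k3s/config.yaml
# curl -sfL https://get.k3s.io | sh -
```

The file has to exist before the k3s service starts, so write it before running the install script.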

For additional configuration options, the official k3s documentation is the best reference.

Adding Worker Nodes (Optional)

On your additional nodes/VMs, run the same installation script, this time with the K3S_URL and K3S_TOKEN variables set. With these set, the script installs k3s as an agent (worker) that joins your server instead of starting a new one:

curl -sfL https://get.k3s.io | K3S_URL=https://<server_ip>:6443 K3S_TOKEN=<token> sh -

Replace <server_ip> with the actual IP of your server node and <token> with the token found in /var/lib/rancher/k3s/server/node-token on the server node.

Verify Workers: Run kubectl get nodes on the server again to see the new nodes.
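Filling in the two placeholders by hand is easy to get wrong, so here is a small sketch that assembles the worker install command for you. It only prints the command and does not install anything. The helper name agent_install_command and the sample IP are placeholders of mine.

```shell
# Sketch: build the k3s agent install command from a server IP and a token.
# agent_install_command is a hypothetical helper that only prints the command;
# it does not contact the server or install anything.
agent_install_command() {
  local server_ip="$1"
  local token="$2"
  printf 'curl -sfL https://get.k3s.io | K3S_URL=https://%s:6443 K3S_TOKEN=%s sh -\n' \
    "$server_ip" "$token"
}

# On the server node, the token can be read with:
#   sudo cat /var/lib/rancher/k3s/server/node-token
# Then print the command for a worker (192.0.2.10 is a placeholder address):
#   agent_install_command 192.0.2.10 "<token>"
```

Review the printed line, then paste it into the worker's terminal.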

Alright, we are all set with the setup! Wasn't that quick and easy? Let's dive into using our shiny new cluster!

Interacting with Your Cluster

Deploy a Sample Application:

kubectl create deployment hello-world --image=nginx --port=80
kubectl expose deployment hello-world --type=LoadBalancer --port=8000 --target-port=80

❗ You might want to use --port=80 for the service, but ports 80 and 443 are already used by the Traefik ingress controller. If you disabled the controller during installation, you can safely use port 80.

Access the Application: Get the NodePort or LoadBalancer IP to access the deployed application:

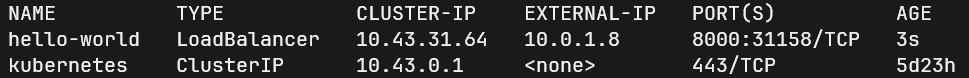

kubectl get svc

Now go to your browser at the specified external IP at port 8000 to view the Nginx default page.
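If you prefer the terminal to the browser, the same check can be scripted. This is a rough sketch, assuming curl is installed: it polls a URL until it answers or the attempts run out. The helper name wait_for_http is hypothetical, and the commented usage assumes the hello-world service from the step above.

```shell
# Sketch: poll a URL until it responds successfully.
# wait_for_http is a hypothetical helper, not part of k3s or kubectl.
wait_for_http() {
  local url="$1"
  local attempts="${2:-10}"   # how many times to try
  local delay="${3:-3}"       # seconds to wait between tries
  local i=1
  while [ "$i" -le "$attempts" ]; do
    # -f: fail on HTTP errors, -s: silent, -o /dev/null: discard the body
    if curl -fs -o /dev/null --max-time 5 "$url"; then
      return 0
    fi
    i=$((i + 1))
    if [ "$i" -le "$attempts" ]; then sleep "$delay"; fi
  done
  return 1
}

# Example usage (commented out because it needs a running cluster):
# ip="$(kubectl get svc hello-world -o jsonpath='{.status.loadBalancer.ingress[0].ip}')"
# wait_for_http "http://${ip}:8000" && echo "nginx is reachable"
```

The service can take a few seconds to get its external IP, which is why the sketch retries instead of checking once.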

Additional Tips

Learn the Basics of Kubernetes: If you bumped into Kubernetes just now, make sure you have a solid understanding of Kubernetes concepts such as pods, services, deployments, and namespaces before diving into k3s. This foundational knowledge will make it easier to work with k3s. Refer to the official Kubernetes docs.

Use a Virtual Machine: If you're new to Kubernetes and k3s, consider using a virtual machine for your initial setup. This allows you to experiment without affecting your main system and makes it easier to start over if something goes wrong.

Backup Your Configurations: Regularly back up your kubeconfig file and other important configurations. This ensures you can quickly restore your setup in case of a failure or accidental deletion.

Join the Community: Engage with the k3s community through forums, Slack channels, and GitHub. The community can provide support, share best practices, and help you resolve issues more quickly.
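As one way to act on the backup tip above, here is a minimal sketch that copies the kubeconfig into a backup directory under a timestamped name. The helper name backup_kubeconfig and the backup location are assumptions of mine, so adjust them to your setup.

```shell
# Sketch: copy a kubeconfig into a backup directory under a timestamped name.
# backup_kubeconfig is a hypothetical helper; it prints the path of the copy.
backup_kubeconfig() {
  local src="$1"        # e.g. /etc/rancher/k3s/k3s.yaml
  local dest_dir="$2"   # e.g. "$HOME/k3s-backups" (assumed location)
  local stamp
  stamp="$(date +%Y%m%d-%H%M%S)"
  local dest="${dest_dir}/k3s.yaml.${stamp}"

  mkdir -p "$dest_dir" || return 1
  cp -p "$src" "$dest" || return 1
  chmod 600 "$dest"     # the file contains cluster credentials
  printf '%s\n' "$dest"
}

# Example usage (commented out; the paths are examples):
# backup_kubeconfig /etc/rancher/k3s/k3s.yaml "$HOME/k3s-backups"
```

Keep the backups somewhere other than the node itself if you want them to survive a lost VM.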

For advanced configuration, I am providing the official docs link to go further:

k3s-docs

Conclusion

You now have a fully functional Kubernetes cluster running on your local machine! How exciting is that? Use this to experiment, learn, and build awesome applications—or maybe even use it in production! k3s makes getting started with Kubernetes super easy, opening the door to a world of possibilities for container orchestration. Let's get building! 😄

Feel free to reach out to me if you have any questions, need help, or just want to chat!
