Building a Chat Application with Streamlit, Llama Models, and Groq

Rahul Boney

In this article, we'll walk through creating a simple chat application using Streamlit, Groq, and Llama models. We'll explain the code, the models used, and how these technologies work together to create a functional chat interface.

Project Overview

Our project involves a chat application where users can interact with an AI model. The app is built using:

Streamlit: A powerful framework for building interactive web applications in Python.

Llama Models: Meta's open-weight large language models, which generate the chat responses.

Groq: An inference platform that hosts Llama models. Its Python client library (the groq package) is how the app sends requests.

You can find the complete code and project setup on GitHub.

Code Explanation

Here's the complete code for the chat application:

import os
import json

import streamlit as st
from groq import Groq

# Page title, icon, and layout for the browser tab and app shell
st.set_page_config(
    page_title="Boney Chat",
    page_icon="🐐",
    layout="centered",
    initial_sidebar_state="collapsed"
)

st.markdown(
    """
    <style>
    .main {
        background-color: #121212;
    }
    .stApp {
        color: #e0e0e0;
        font-family: 'Arial', sans-serif;
    }
    .stTitle {
        color: #bb86fc;
    }
    .chat-message.user {
        background-color: #333333;
        border-radius: 10px;
        padding: 10px;
        margin-bottom: 10px;
        border: 1px solid #444;
    }
    .chat-message.assistant {
        background-color: #424242;
        border-radius: 10px;
        padding: 10px;
        margin-bottom: 10px;
        border: 1px solid #555;
    }
    .stChatInput input {
        border-radius: 20px;
        border: 1px solid #bb86fc;
        padding: 10px;
        font-size: 16px;
        background-color: #333;
        color: #e0e0e0;
    }
    .stButton {
        background-color: #bb86fc;
        color: #121212;
        border-radius: 5px;
    }
    .stButton:hover {
        background-color: #9a67ea;
    }
    </style>
    """,
    unsafe_allow_html=True
)

# config.json is expected to sit next to this script
working_dir = os.path.dirname(os.path.abspath(__file__))
config_path = os.path.join(working_dir, 'config.json')

try:
    with open(config_path, 'r') as f:
        config_content = f.read().strip()
    if not config_content:
        raise ValueError("config.json is empty")
    config_data = json.loads(config_content)
    GROQ_API_KEY = config_data.get("GROQ_API_KEY")
    if not GROQ_API_KEY:
        raise ValueError("GROQ_API_KEY not found in config.json")
    # The Groq client reads the key from this environment variable
    os.environ["GROQ_API_KEY"] = GROQ_API_KEY
except FileNotFoundError:
    st.error("Configuration file not found.")
    st.stop()
except json.JSONDecodeError as e:
    # Must come before ValueError: JSONDecodeError is a subclass of it
    st.error(f"Error reading config.json: {e.msg}")
    st.stop()
except ValueError as e:
    st.error(str(e))
    st.stop()

client = Groq()

# Streamlit reruns the script on every interaction, so the history
# is created once and kept in session state
if "chat_history" not in st.session_state:
    st.session_state.chat_history = []

st.title("🐐 Boney Chat")

# Replay the conversation so far
for message in st.session_state.chat_history:
    with st.chat_message(message["role"]):
        st.markdown(message["content"], unsafe_allow_html=True)

user_prompt = st.chat_input("Ask the bot...")

if user_prompt:
    st.chat_message("user").markdown(user_prompt, unsafe_allow_html=True)
    st.session_state.chat_history.append({"role": "user", "content": user_prompt})

    # System prompt first, then the full history including the new user turn
    messages = [
        {"role": "system", "content": "hello"},
        *st.session_state.chat_history
    ]

    try:
        response = client.chat.completions.create(
            model="llama-3.1-8b-instant",
            messages=messages
        )
        assistant_response = response.choices[0].message.content
        st.session_state.chat_history.append({"role": "assistant", "content": assistant_response})
        with st.chat_message("assistant"):
            st.markdown(assistant_response, unsafe_allow_html=True)
    except Exception as e:
        st.error(f"Error: {e}")
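The history and message handling in the code above can be isolated into two small helpers. This is a minimal sketch, not part of the original app: get_history and build_messages are hypothetical names, and a plain dict stands in for st.session_state (which supports the same key-based access) so the snippet runs without Streamlit.

```python
SYSTEM_PROMPT = "hello"  # same placeholder system prompt the app uses


def get_history(state):
    """Return the chat history list stored in `state`, creating it on first use."""
    if "chat_history" not in state:
        state["chat_history"] = []
    return state["chat_history"]


def build_messages(history, system_prompt=SYSTEM_PROMPT):
    """Return a new list: the system message followed by every stored turn."""
    return [{"role": "system", "content": system_prompt}, *history]


# A plain dict stands in for st.session_state in this sketch.
state = {}
history = get_history(state)
history.append({"role": "user", "content": "Hi"})

messages = build_messages(history)
print(messages)
```

Because build_messages returns a new list, the system prompt is never written into the stored history, so it is not repeated on later turns.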

Key Components

Streamlit: We use Streamlit to create the interactive web interface. It allows us to easily display messages and handle user inputs.

Llama Models: These models generate each response from the conversation so far. They are developed by Meta and served through Groq's API; the app uses llama-3.1-8b-instant.

Groq: Groq hosts the models and exposes them through an API. Its Python client handles the API calls and provides a simple interface for requesting chat completions.
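To make the request and response shapes concrete, here is a small sketch of the call the app makes, wrapped in a function. ask_model and FakeCompletions are hypothetical names introduced for illustration. The fake client only mimics the chat.completions.create call and the response.choices[0].message.content path used in the app, so the snippet runs without an API key or network access.

```python
from types import SimpleNamespace


def ask_model(client, messages, model="llama-3.1-8b-instant"):
    """Send the messages to a chat-completions style client and return the reply text."""
    response = client.chat.completions.create(model=model, messages=messages)
    return response.choices[0].message.content


class FakeCompletions:
    """Stand-in for the real client: echoes the last message back."""

    def create(self, model, messages):
        last = messages[-1]["content"]
        reply = SimpleNamespace(content=f"echo: {last}")
        return SimpleNamespace(choices=[SimpleNamespace(message=reply)])


fake_client = SimpleNamespace(chat=SimpleNamespace(completions=FakeCompletions()))

reply = ask_model(fake_client, [{"role": "user", "content": "ping"}])
print(reply)  # prints "echo: ping"
```

In the app, the object passed as client would be the Groq() instance created after the API key is loaded.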

How It Works

Configuration: We load the API key from a config.json file and set it as an environment variable. This is required to authenticate requests to the Groq API.

User Interaction: Users enter text, which is sent to the Llama model together with the earlier conversation. The model's response is displayed in the chat window.

Chat History: Streamlit reruns the script on every interaction, so the chat history is kept in st.session_state to provide continuity across interactions.
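The configuration step can also be written as a reusable function. The sketch below is a variation on the app's approach rather than a copy of it: it checks the environment first and only falls back to the file, which is an assumption about how you might want to deploy. load_api_key and DEMO_API_KEY are hypothetical names, and the demo writes a throwaway config file to a temporary directory.

```python
import json
import os
import tempfile


def load_api_key(config_path, key_name="GROQ_API_KEY"):
    """Return the key from the environment if set, otherwise from the JSON config file.

    Raises FileNotFoundError if the file is missing, and ValueError if the
    file is not valid JSON (json.JSONDecodeError) or lacks the key.
    """
    key = os.environ.get(key_name)
    if key:
        return key
    with open(config_path, "r", encoding="utf-8") as f:
        config = json.load(f)
    key = config.get(key_name)
    if not key:
        raise ValueError(f"{key_name} not found in {config_path}")
    return key


# Demo with a placeholder key name and value so no real credentials are touched.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "config.json")
    with open(path, "w", encoding="utf-8") as f:
        json.dump({"DEMO_API_KEY": "not-a-real-key"}, f)

    os.environ.pop("DEMO_API_KEY", None)
    from_file = load_api_key(path, key_name="DEMO_API_KEY")

    os.environ["DEMO_API_KEY"] = "from-env"
    from_env = load_api_key(path, key_name="DEMO_API_KEY")
    os.environ.pop("DEMO_API_KEY", None)

print(from_file, from_env)
```

Checking the environment first lets the same code run in a hosted deployment, where secrets are usually provided as environment variables, without shipping a config.json.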

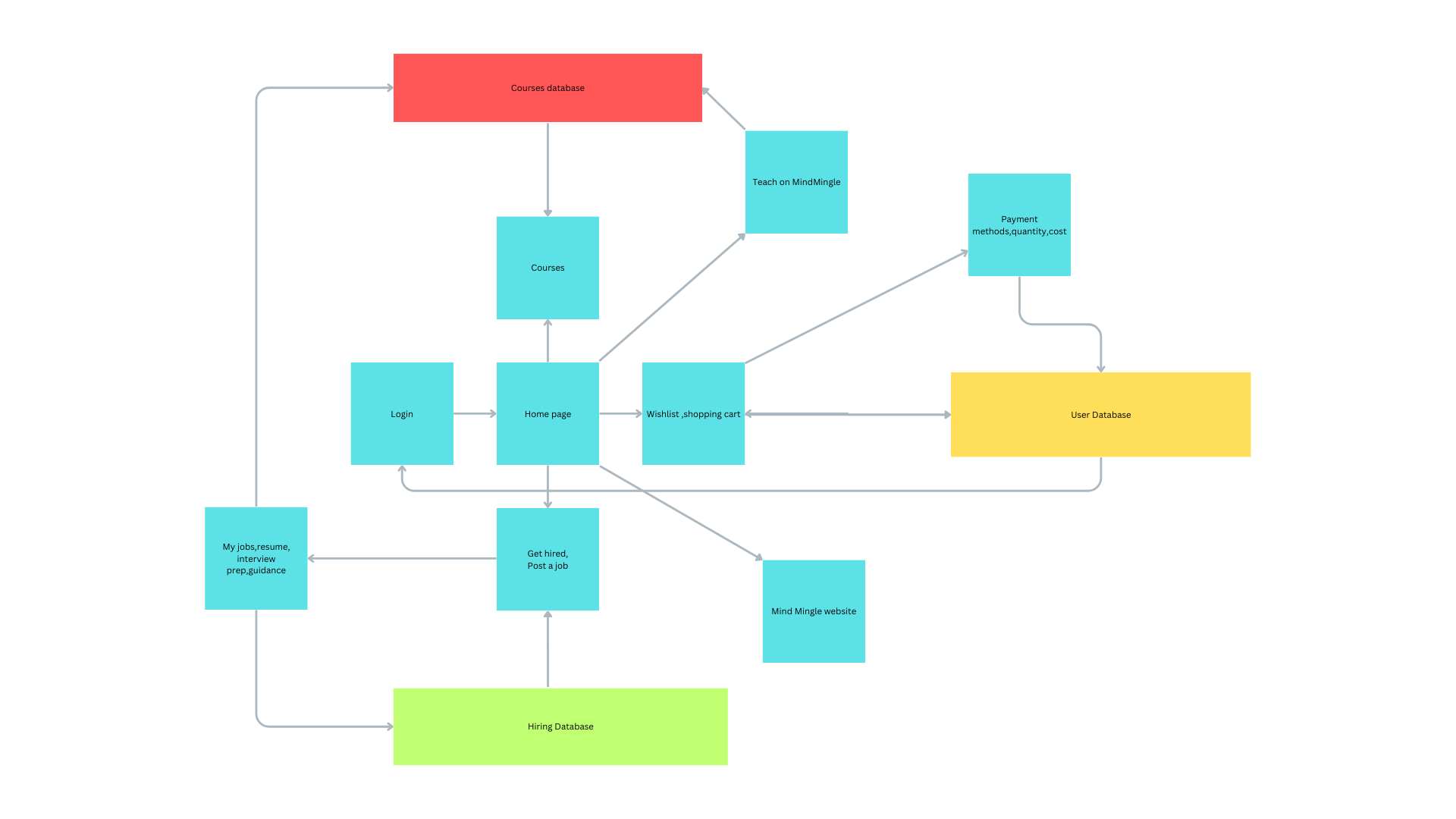

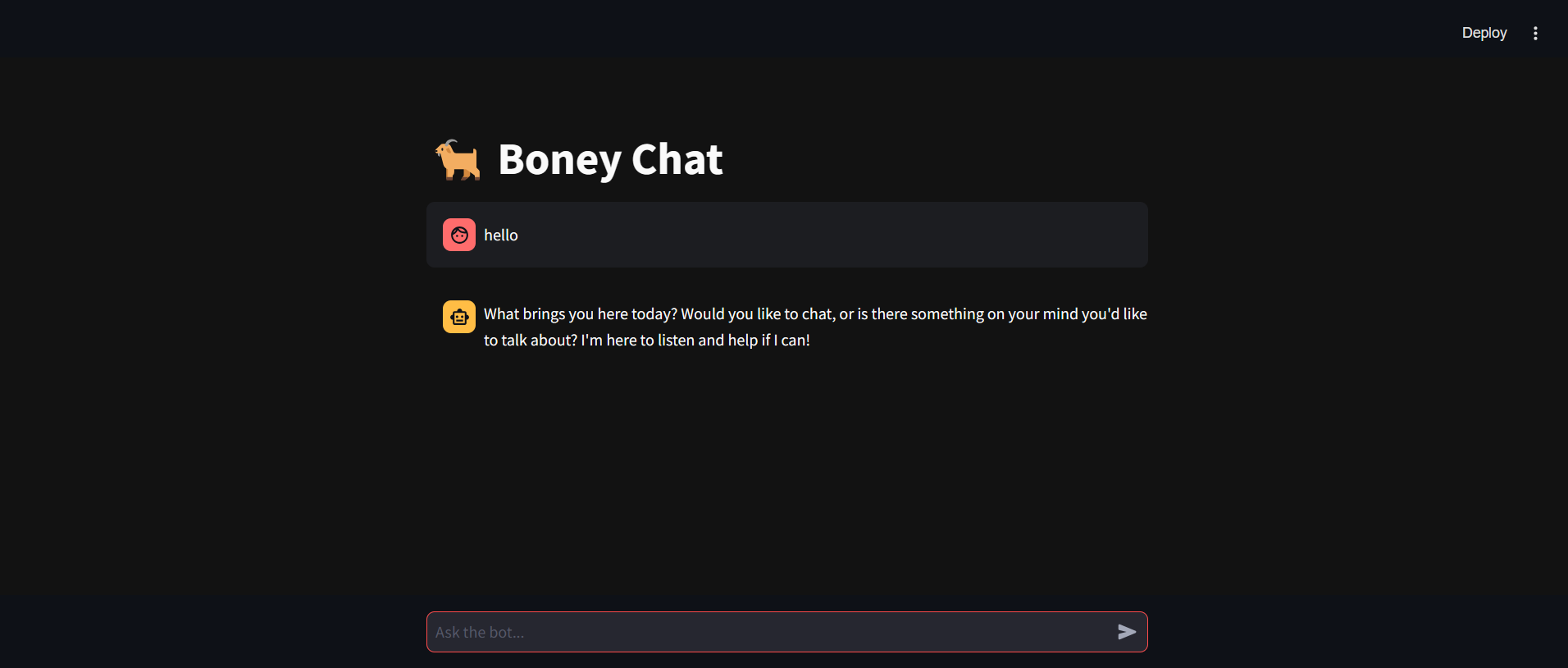

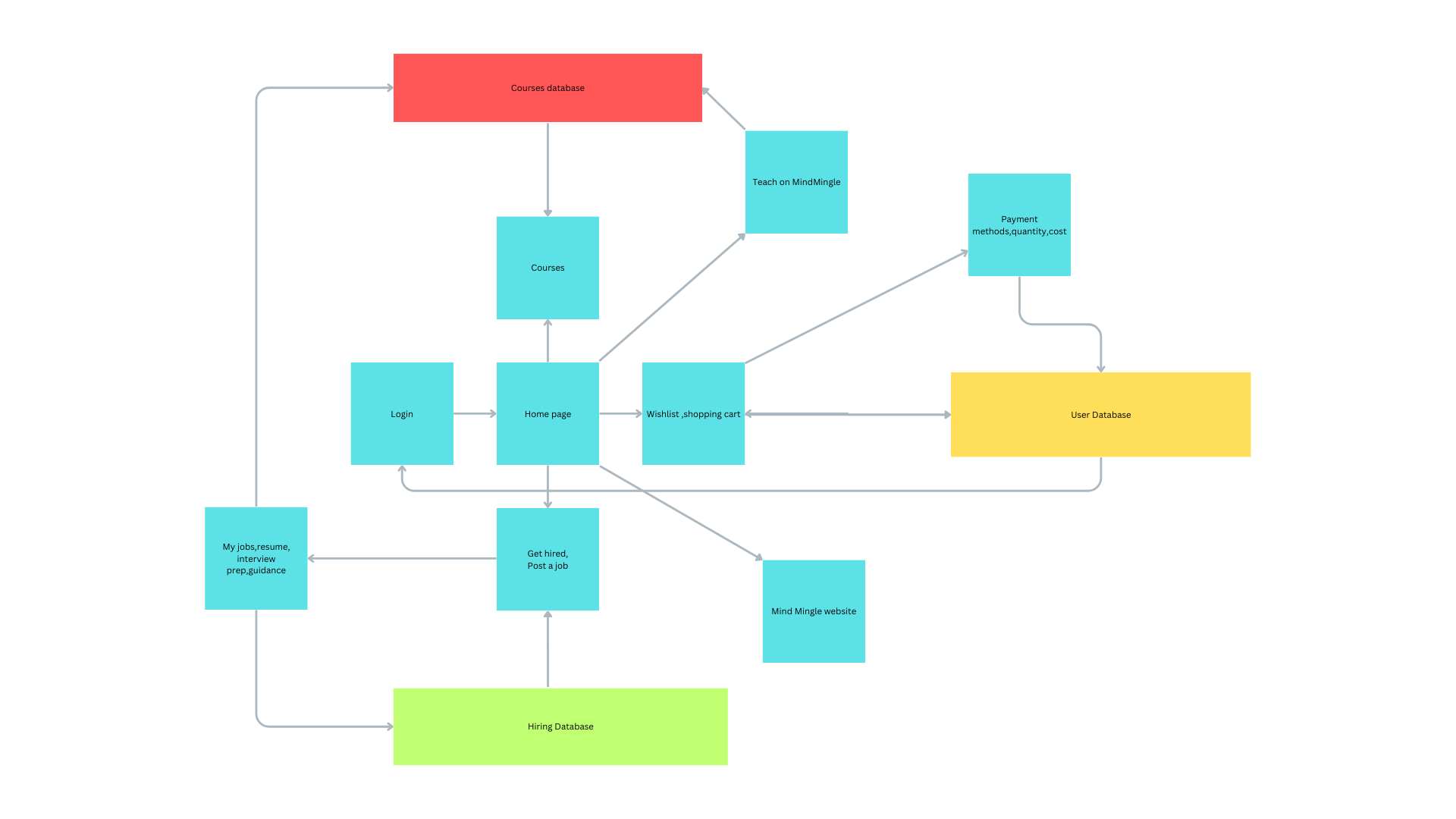

Images and Visuals

Include screenshots of your application to illustrate the design and functionality. Here are some example images you might add:

Application Interface: Show how the chat interface looks.

Code Snippets: Highlight key parts of the code.

API Interaction: Illustrate how the model generates responses.

Conclusion

In this article, we explored how to build a chat application using Streamlit, Llama models, and Groq. By integrating these technologies, we created a functional and interactive chat interface that leverages advanced AI capabilities.

Feel free to check out the complete project on GitHub and try it out for yourself!

