Deploying 3-Tier Architecture on AKS with Terraform, Jenkins

Vijay Kumar Singh


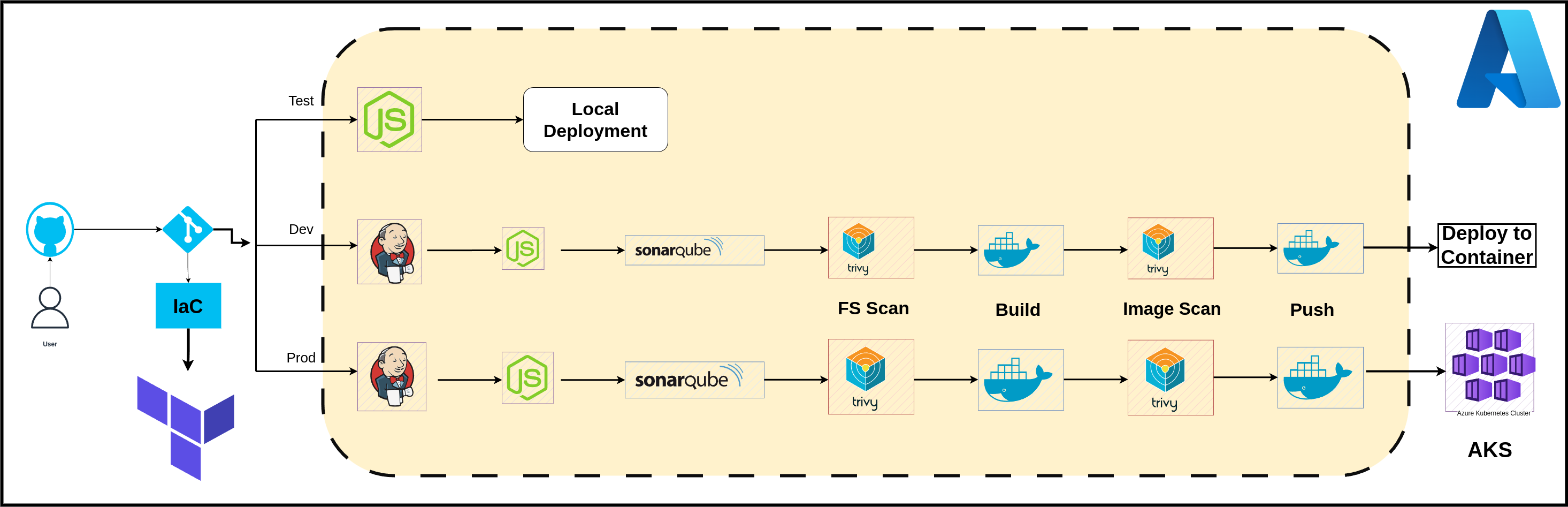

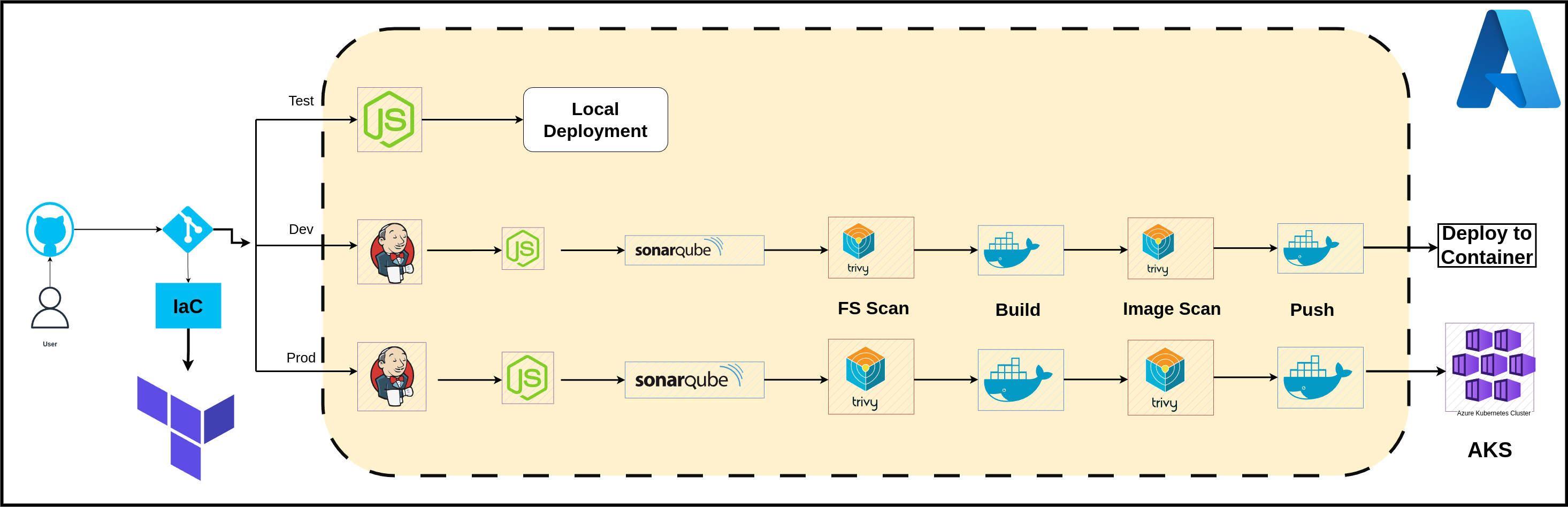

Introduction to Architecture:

YelpCamp is a 3-tier web application specifically designed for campground reviews. It boasts a variety of features such as Campground Listings, User Reviews, Photo Sharing, and User Accounts. The primary aim of YelpCamp is to assist outdoor enthusiasts in discovering the best camping spots and sharing their experiences with a community of like-minded individuals. The comprehensive features of YelpCamp make it an excellent platform for leveraging cloud and DevOps skills.

Objectives of the Project:

Provisioning Infrastructure using IaC [Terraform].

Deployment of 3-Tier App in multiple environments: Local, Dev, Prod.

Containerizing applications with Docker.

Conducting static code analysis using SonarQube and vulnerability scanning using Trivy.

Deploying the application to multiple environments, each with its own purpose: a local VM for testing, a container deployment for development, and Azure Kubernetes Service (AKS) for production.

Setting up robust CI/CD pipelines using Jenkins.

Architecture

About Infrastructure & Deployment Process:

Project Structure: Project is organized into distinct directories, each serving a specific purpose:

    $ tree -L 4
    .
    ├── src                      // Contains source code + Dockerfile + Manifests + .env
    ├── JenkinsPipeline-Dev
    ├── JenkinsPipeline-Prod
    ├── Terraform                // Terraform modular approach
    │   ├── modules
    │   │   ├── aks
    │   │   │   ├── main.tf
    │   │   │   ├── output.tf
    │   │   │   └── variables.tf
    │   │   ├── bastion
    │   │   │   ├── main.tf
    │   │   │   ├── output.tf
    │   │   │   └── variables.tf
    │   │   ├── compute
    │   │   │   ├── main.tf
    │   │   │   ├── output.tf
    │   │   │   └── variables.tf
    │   │   ├── keyvault
    │   │   │   ├── main.tf
    │   │   │   ├── output.tf
    │   │   │   └── variables.tf
    │   │   ├── network
    │   │   │   ├── main.tf
    │   │   │   ├── output.tf
    │   │   │   └── variables.tf
    │   │   ├── pip
    │   │   │   ├── main.tf
    │   │   │   ├── output.tf
    │   │   │   └── varibales.tf
    │   │   ├── resourcegroup
    │   │   │   ├── main.tf
    │   │   │   ├── output.tf
    │   │   │   └── variables.tf
    │   │   └── ServicePrincipal
    │   │       ├── main.tf
    │   │       ├── output.tf
    │   │       └── variables.tf
    │   ├── main.tf
    │   ├── variables.tf
    │   ├── local.auto.tfvars
    │   ├── dev.auto.tfvars
    │   ├── prod.auto.tfvars
    │   └── output.tf
    ├── scripts                  // Contains scripts to automate setup
    └── README.md

src/: Contains source code, Dockerfile, manifests, and environment configuration files.

JenkinsPipeline-Dev/: Jenkins pipeline configuration for the development environment.

JenkinsPipeline-Prod/: Jenkins pipeline configuration for the production environment.

Terraform/: Infrastructure as Code configuration using a modular approach.

scripts/: Automation scripts for setup and maintenance.

README.md: Documentation for the project.

Terraform Modules

I adopted a modular approach to organize Terraform code, making it reusable and easier to manage. The modules directory contains submodules for various components such as resource groups, service principals, networks, compute instances, and Azure Kubernetes Service (AKS).

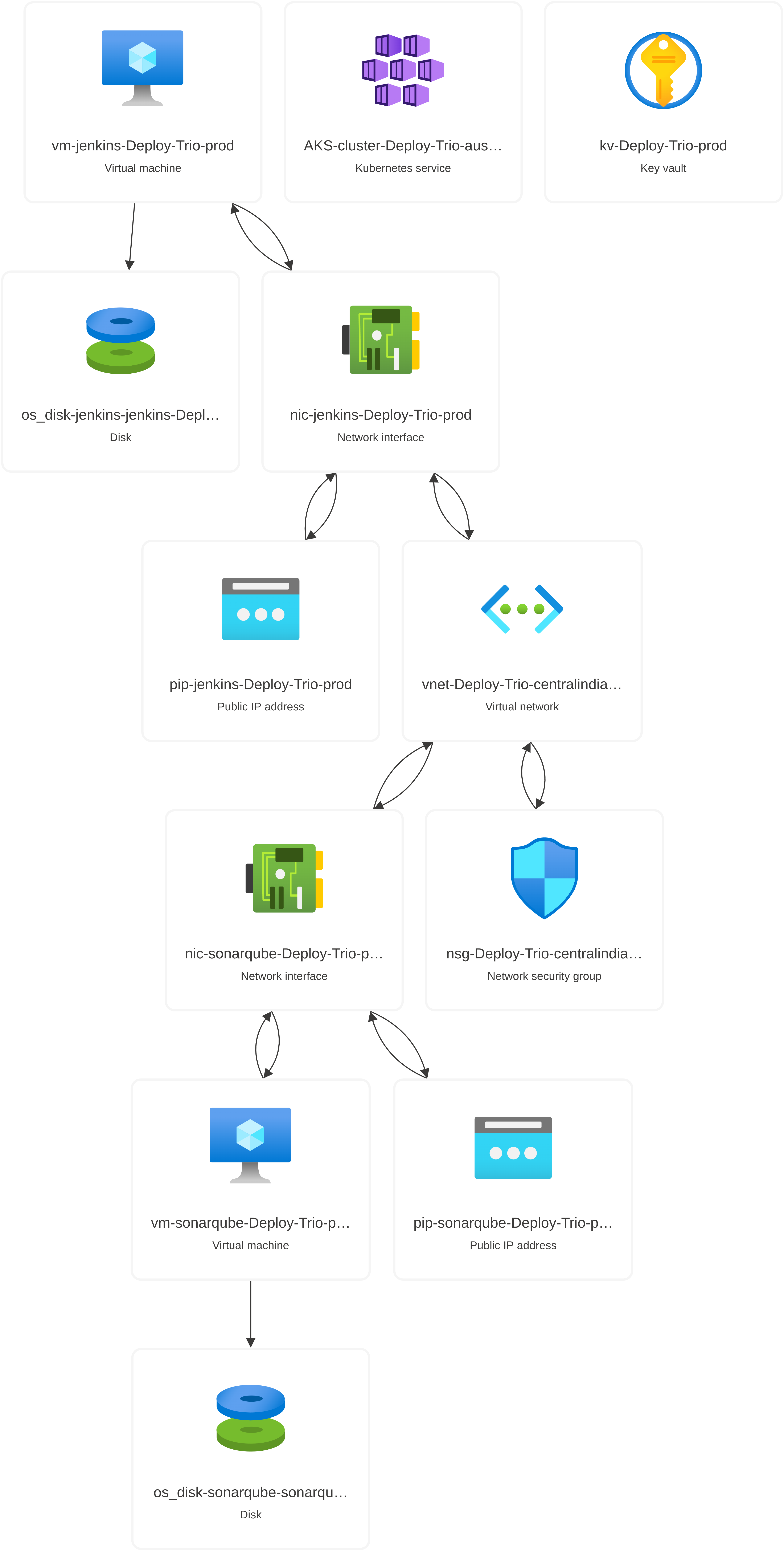

Main Infrastructure Components

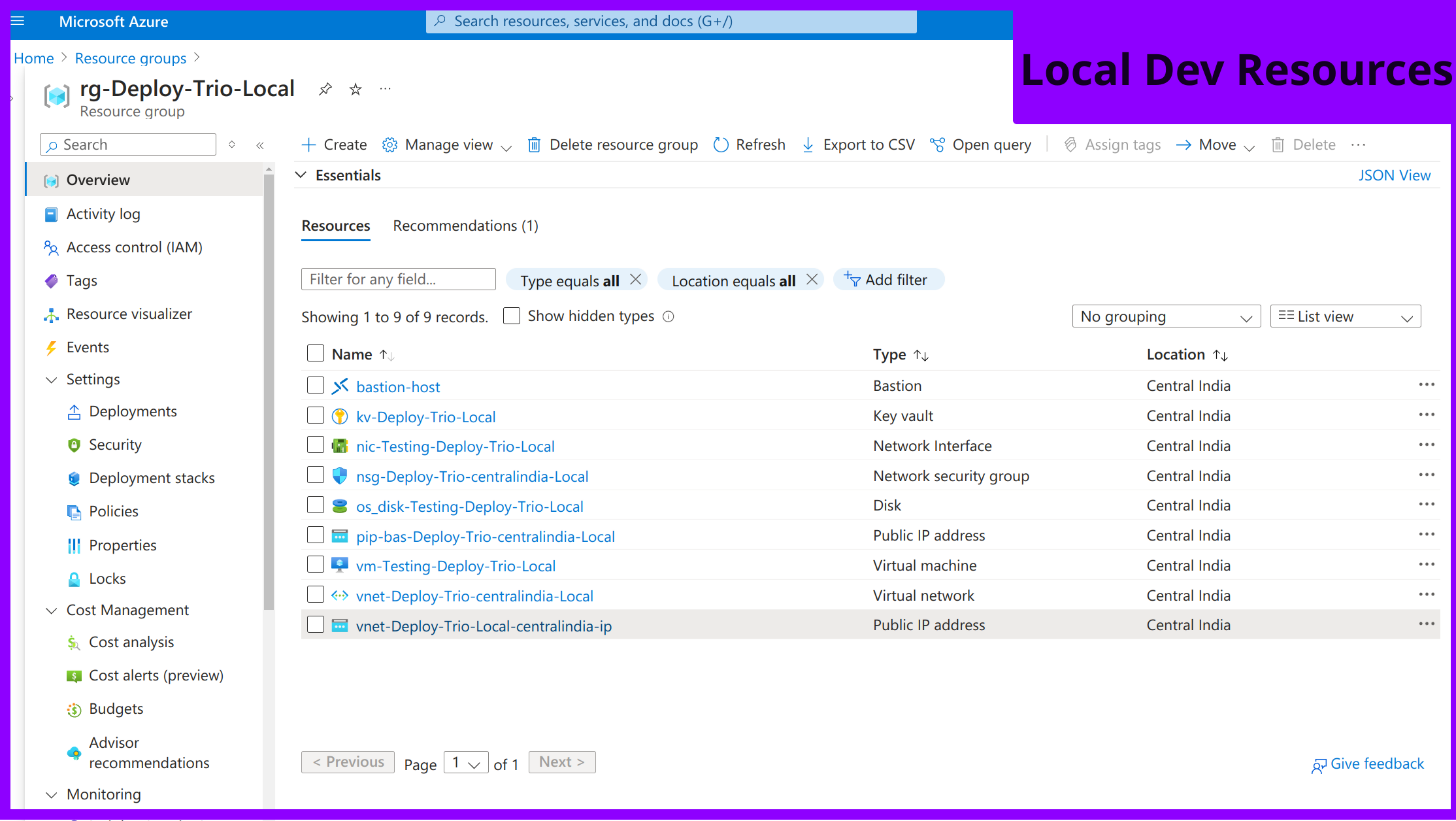

Resource Group: The foundational component where all other resources are grouped together. It is provisioned for each environment (local, dev, prod).

Service Principal: An identity registered in Azure Active Directory (now Microsoft Entra ID) that applications and automation tools use to access Azure resources. It is crucial for automating tasks and maintaining security.

Key Vault: Securely stores secrets, keys, and certificates. We store SSH keys and service principal credentials here for secure access.

Network: Defines the virtual network, subnets and NSG rules for isolating and managing resources efficiently.

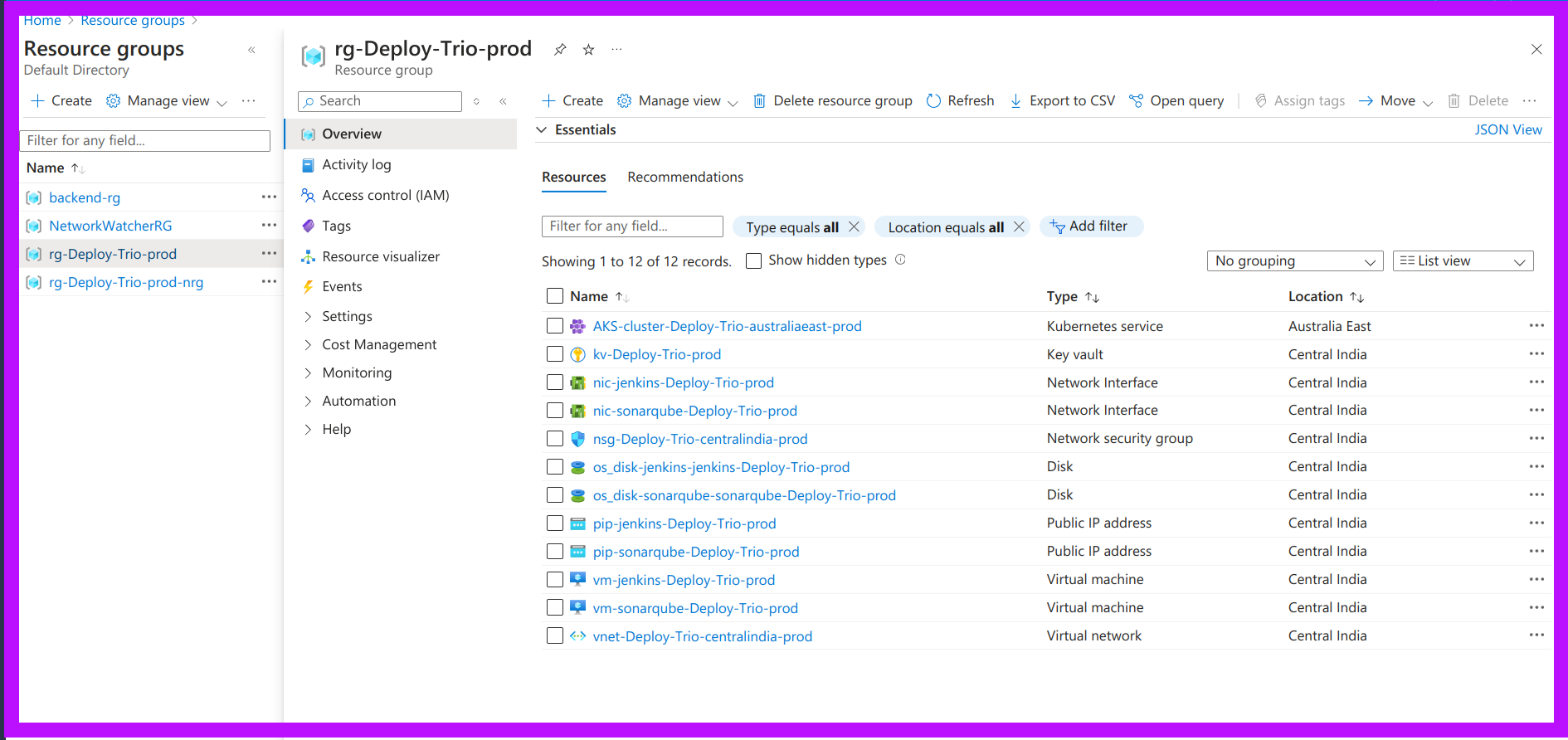

Compute Instances: Virtual machines (VMs) provisioned with specific configurations for running Jenkins and SonarQube in the development environment. In production, additional VMSS support the application workload and AKS nodes.

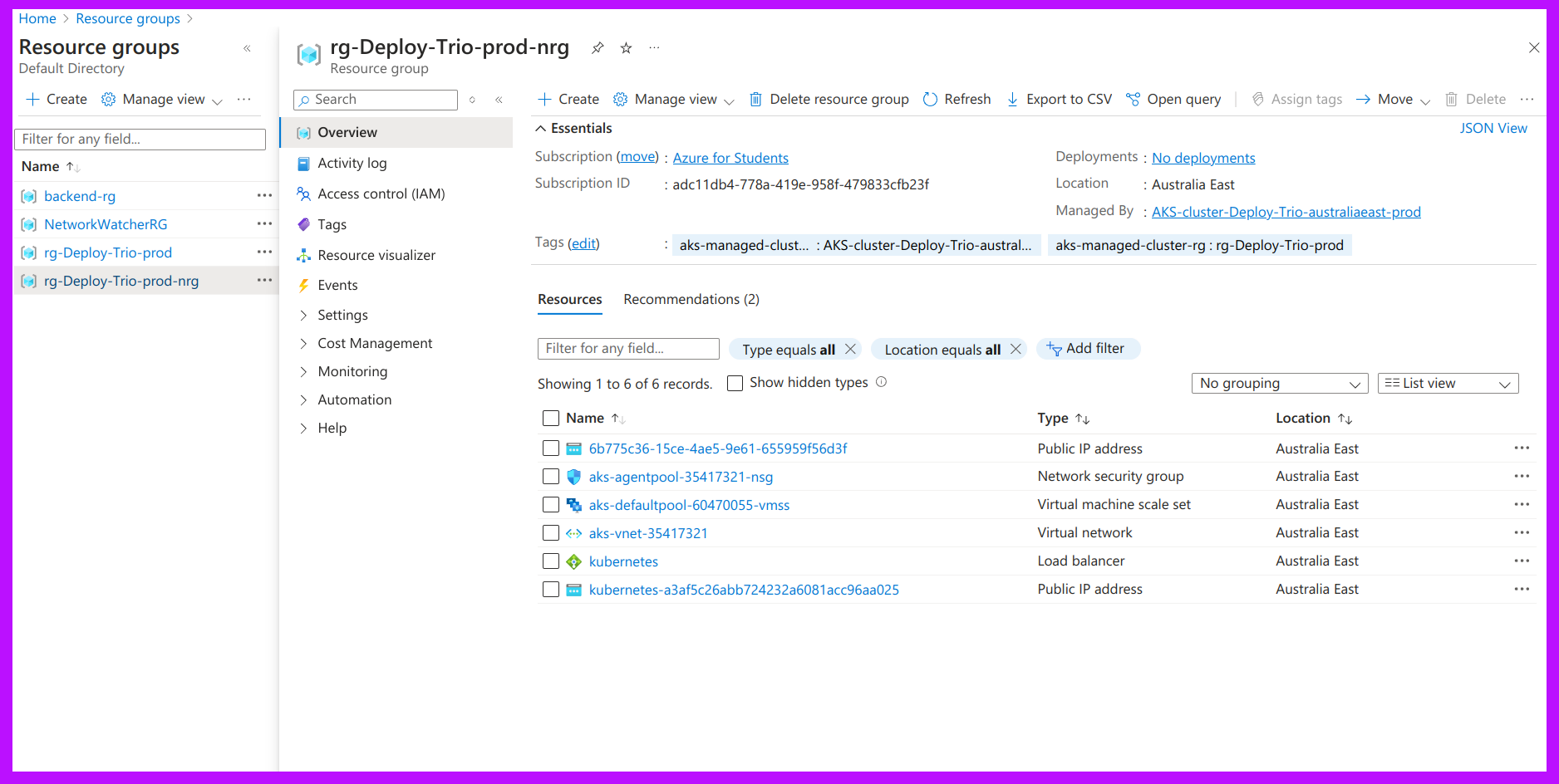

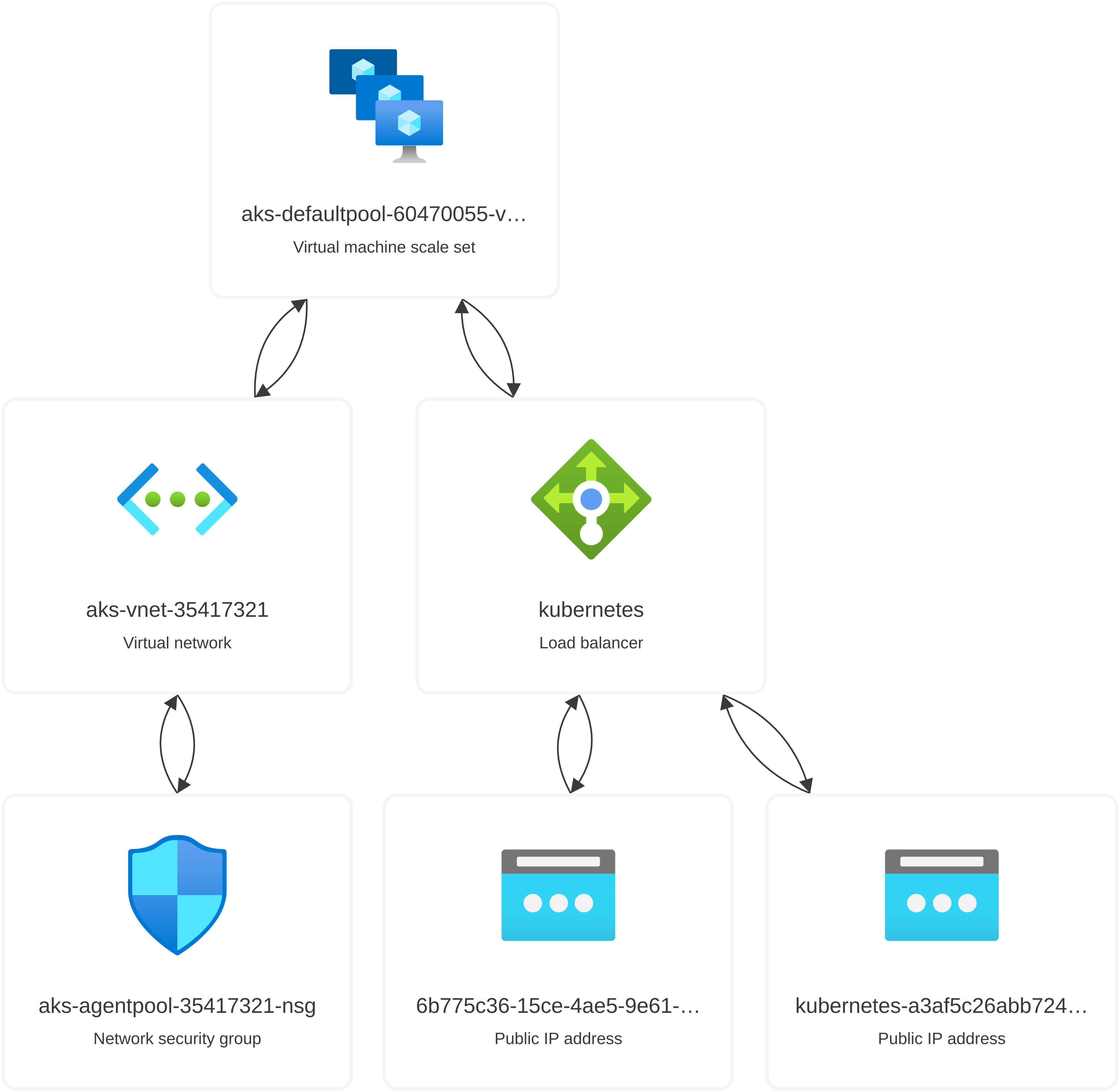

Azure Kubernetes Service (AKS): Runs our containerized applications and handles scaling and updates. It is used in the production environment to deploy the application at scale.

Environment-Specific Configuration

Local Environment: One VM to mimic the development setup for testing and debugging.

Development Environment: Two VMs - one for Jenkins (CI/CD tool) and another for SonarQube (code quality analysis).

Production Environment: Two VMs for application workload and an AKS cluster for managing containerized applications.

Infrastructure Deployment Workflow

Provision Resource Group: Each environment starts with a resource group to logically group and manage resources.

Create Service Principal: A service principal is created and granted necessary permissions to manage resources within the resource group.

Setup Key Vault: Securely store sensitive information like client IDs and secrets used by the service principal.

Configure Network: Define virtual networks and subnets to ensure proper isolation and communication between resources.

Deploy Compute Instances: Provision VMs with specific configurations (like size, OS, SSH keys) to run Jenkins, SonarQube, and other application components.

Setup AKS: In the production environment, deploy an AKS cluster to manage containerized applications, providing features like scaling and self-healing.

Terraform Workspaces

Terraform workspaces allow us to manage multiple environments within the same configuration. By using workspaces, we can separate the state files and configurations for local, development, and production environments.

Local Workspace: For testing and debugging on a single VM.

Development Workspace: For deploying Jenkins and SonarQube on separate VMs.

Production Workspace: For deploying the application workload and AKS cluster.
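As a minimal sketch of how the workspace names line up with the variable files in the project tree, the shell helpers below map a workspace to its .auto.tfvars file and select a workspace, creating it on first use. The function names tfvars_for_workspace and ensure_workspace are hypothetical and not part of the repository; only the terraform workspace select and new subcommands come from Terraform itself.

```shell
#!/bin/sh
# Hypothetical helpers, not part of the repository.

# Map a workspace name to the variable file listed in the project tree.
tfvars_for_workspace() {
    case "$1" in
        local|dev|prod) printf '%s.auto.tfvars\n' "$1" ;;
        *) printf 'unknown workspace: %s\n' "$1" >&2; return 1 ;;
    esac
}

# Select the workspace; if selecting fails (for example because it does
# not exist yet), fall back to creating it.
ensure_workspace() {
    terraform workspace select "$1" 2>/dev/null || terraform workspace new "$1"
}
```

For example, running ensure_workspace dev inside the Terraform directory switches to the dev workspace, and tfvars_for_workspace dev prints dev.auto.tfvars.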

Executing the Code

Install Terraform: Ensure Terraform is installed on your machine. You can download it from the official website.

Clone the repository:

%[https://github.com/vsingh55/3-tier-Architecture-Deployment-across-Multiple-Environments]

    git clone https://github.com/vsingh55/3-tier-Architecture-Deployment-across-Multiple-Environments.git

Initialize Terraform: Authenticate with Azure, navigate to the Terraform directory, and fill in the appropriate values in the .auto.tfvars files before initializing the configuration.

    az login
    cd Terraform
    terraform init

Select the Workspace: Create and select the appropriate workspace for your environment (local, dev, prod). A workspace must be created once with terraform workspace new before it can be selected.

    terraform workspace select local   # For local environment
    terraform workspace select dev     # For development environment
    terraform workspace select prod    # For production environment

    # Some useful commands for Terraform workspaces
    $ terraform workspace
    Usage: terraform [global options] workspace

      new, list, show, select and delete Terraform workspaces.

    Subcommands:
        delete    Delete a workspace
        list      List Workspaces
        new       Create a new workspace
        select    Select a workspace
        show      Show the name of the current workspace

Review and Apply Configuration: Plan and apply the Terraform configuration to provision the infrastructure.

💡 If you are using the workspace method, ignore the -var-file option.

    terraform plan -var-file=local.auto.tfvars    # For local environment
    terraform plan -var-file=dev.auto.tfvars      # For development environment
    terraform plan -var-file=prod.auto.tfvars     # For production environment

    terraform apply -var-file=local.auto.tfvars   # For local environment
    terraform apply -var-file=dev.auto.tfvars     # For development environment
    terraform apply -var-file=prod.auto.tfvars    # For production environment

The plan command allows you to see the changes that will be made, while the apply command provisions the resources.

CI/CD Pipeline Steps:

Install Dependencies: Ensure all necessary dependencies are installed.

Run Tests: Execute unit and integration tests.

Code Analysis: Run static code analysis with SonarQube.

File System Scan: Scan the source tree for vulnerabilities with Trivy.

Build Docker Image: Create a Docker image of the application.

Scan Docker Image: Scan the image with Trivy to ensure it is secure.

Push Image to Repository: Push the Docker image to Docker Hub.

Deploy Application: Deploy the Docker image to the target environment (a container for dev, AKS for prod).

Step-by-Step Deployment Process:

Before beginning the deployment process, it's essential to understand the components and tools you will be using. One critical decision is the type of database (DB) to deploy. You can choose between a container-based DB and a cloud-based DB. Here are the considerations:

Container-based DB: Requires manual setup of deployments, services, and volume management. This option offers more control but requires more effort for configuration and maintenance.

Cloud-based DB: Provides flexibility and ease of management but may be more expensive depending on usage. For this project, we will use a cloud-based DB (MongoDB Atlas) for its scalability and convenience.

In addition to the database, you'll need to configure several environment variables:

Cloudinary: For storing the images uploaded to the application.

Mapbox Token: For linking campground locations on the map.

Prerequisite:

Cloud Provider Account [Azure]

Knowledge of the tools used in the project: Trivy, SonarQube, Docker, Git, Jenkins, and IaC (Terraform)

Getting Cloudinary variables:

Sign Up for Cloudinary Account:

- Go to the Cloudinary website and sign up for a new account.

Access Dashboard:

- Once you have signed up and logged in to your Cloudinary account, you will be taken to the dashboard.

Find Your Credentials:

In the Cloudinary dashboard, navigate to the "Dashboard Settings" section.

You will find your CLOUDINARY_CLOUD_NAME, CLOUDINARY_KEY, and CLOUDINARY_SECRET there. These are unique for your account and should be kept secure.

Use Credentials in Your Application:

- Now that you have obtained these credentials, you will use them later in your application to connect to Cloudinary for image and video management.

Getting Mapbox token:

Sign up and log in to your Mapbox account.

Navigate to the page for creating an access token.

Enter a token name and check all the secret scopes. This is acceptable for practice, but do not grant every scope on a token used in a corporate project.

Click Create, then copy the token and save it.

Getting DB_URL:

Sign up for MongoDB Atlas:

Go to the MongoDB Atlas.

You can sign up using your Google account, or you can fill in the required information to create a new account.

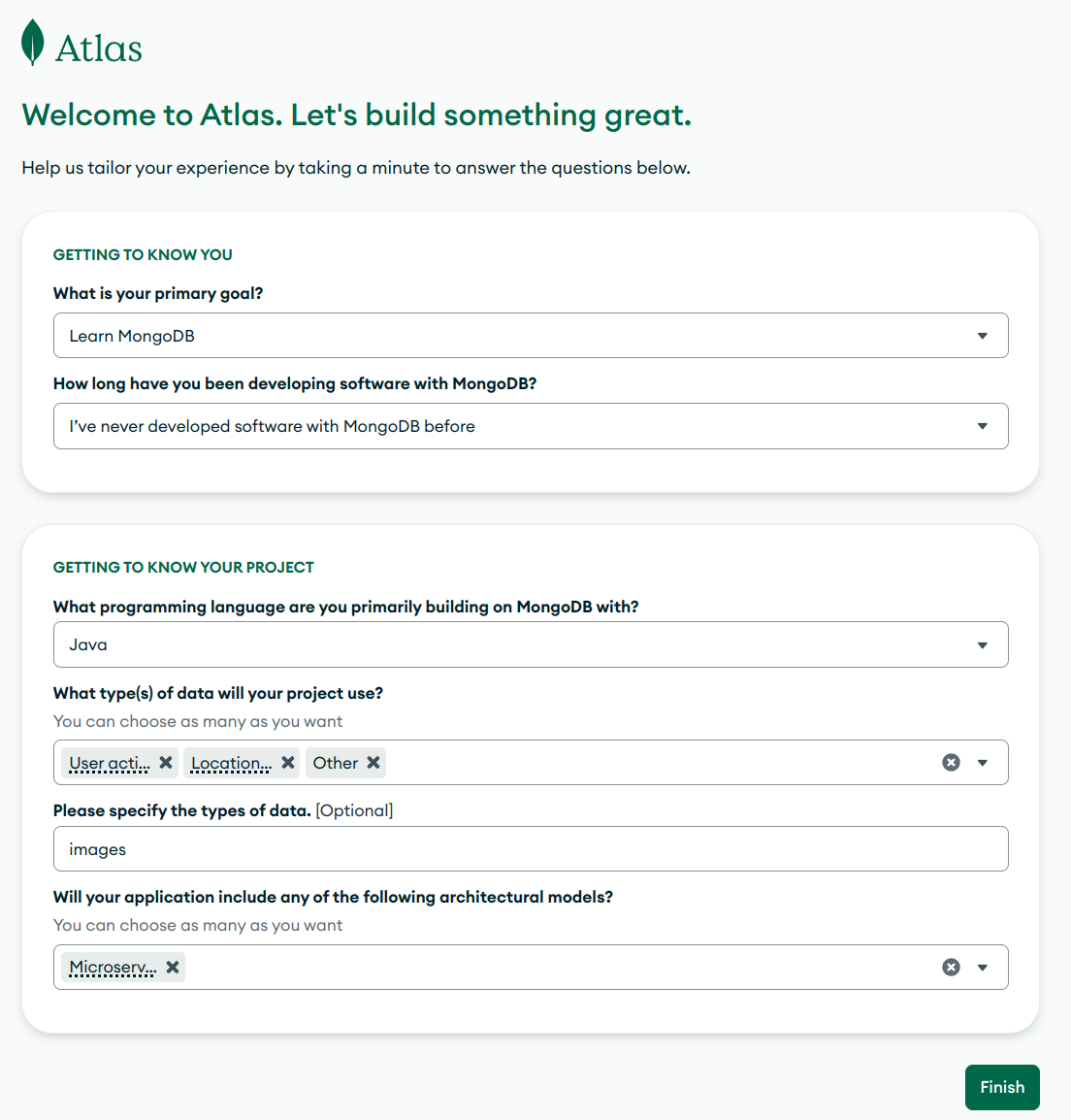

Fill in the details as shown in the figure:

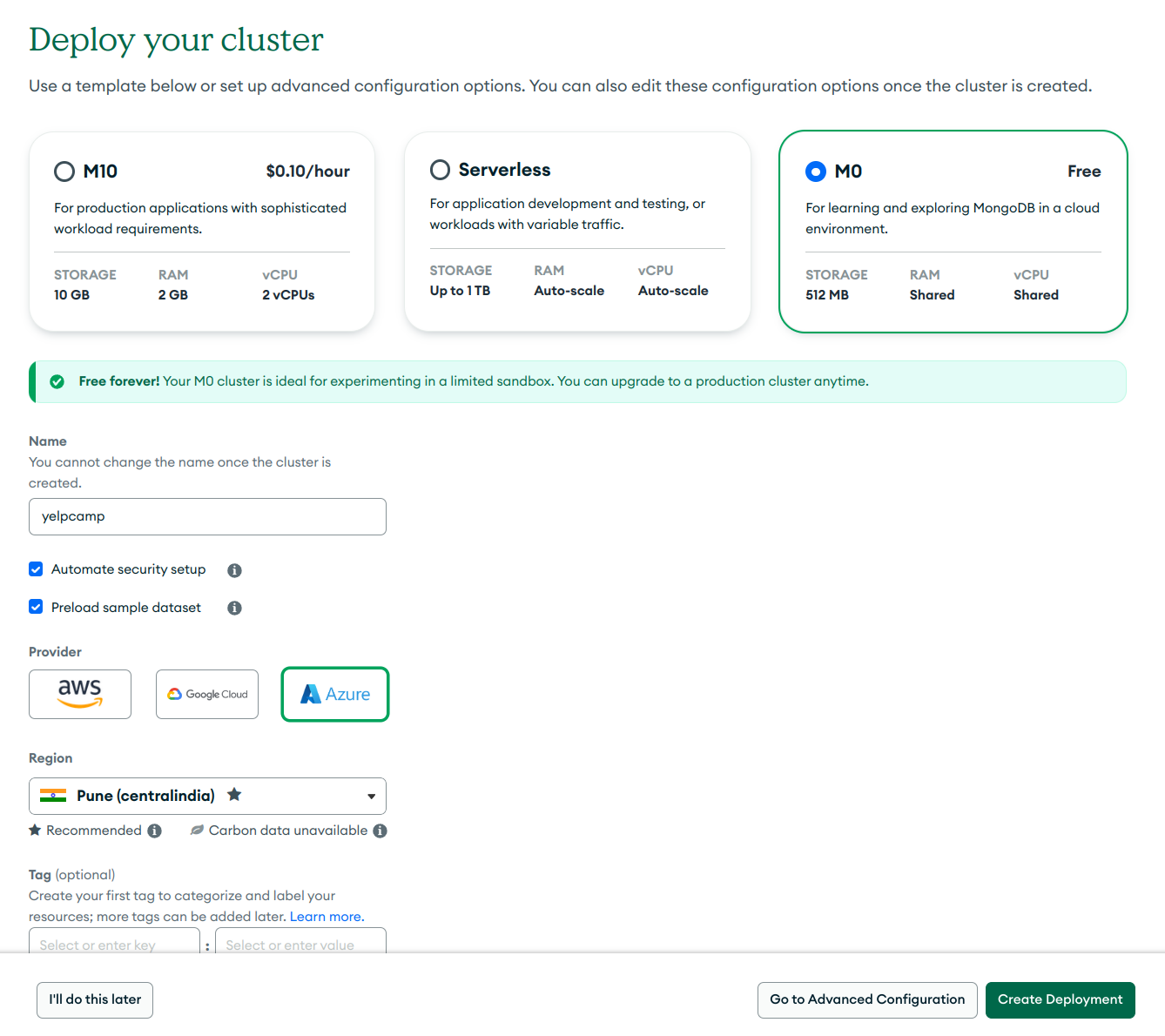

You can choose any cloud provider, but pick the region nearest to you.

Click Create Deployment and follow instructions.

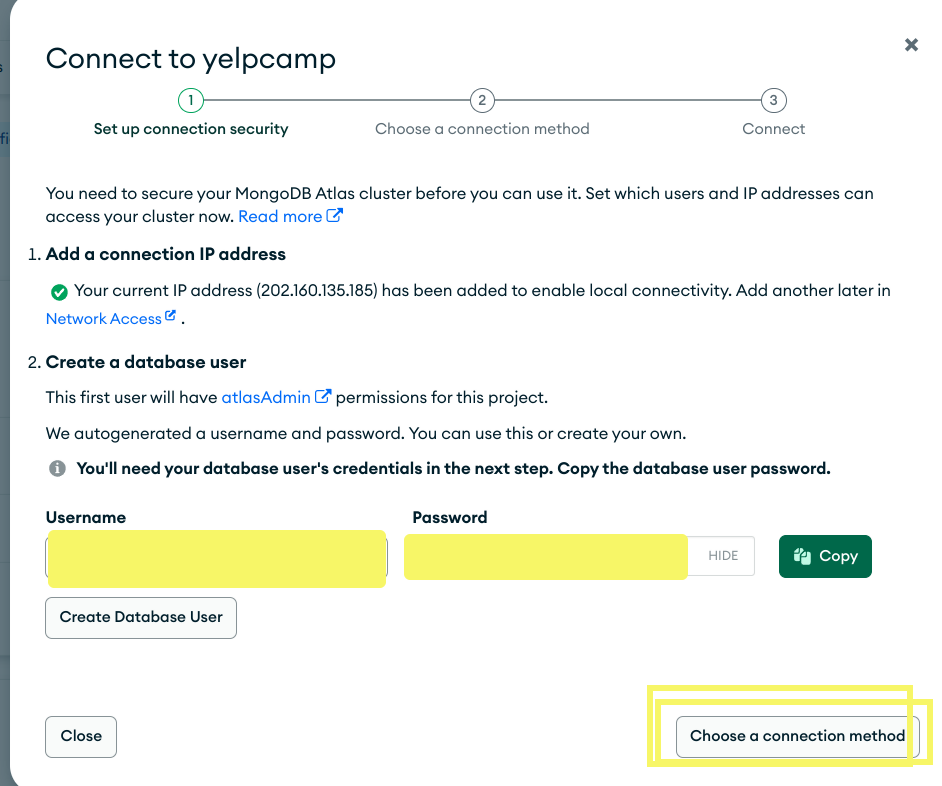

Copy the username and password into a text file and save them.

Click Create Database User, then click Choose a connection method.

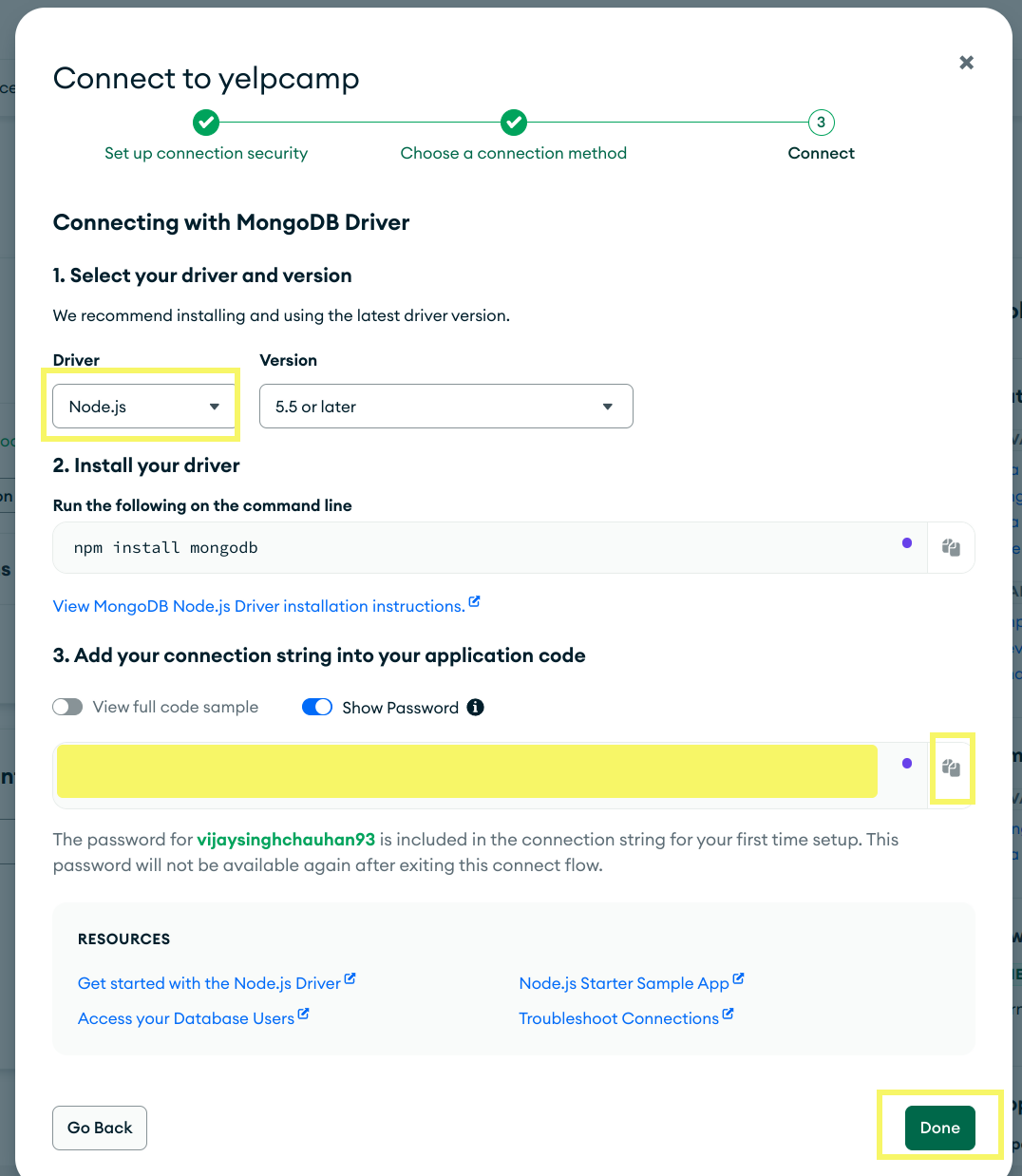

Select Driver and choose Node.js, since the application is written in Node.js.

Copy DB_URL and save it.

Local Deployment:

Local environment deployment plays a crucial role in the DevOps process for several reasons:

Local deployment enables rapid development and testing by allowing developers to quickly build, test, and debug code without remote servers, speeding up the development cycle.

It ensures consistency and reliability by mimicking the production environment, reducing environment-specific bugs.

Additionally, it is resource-efficient and cost-effective, as it eliminates the need for expensive cloud resources, leveraging local machine resources instead.

Let's proceed with deployment steps:

Step 1: Provision the infrastructure as described in the infrastructure section above.

Step 2: Put the environment variables into the .env file in the /src directory.

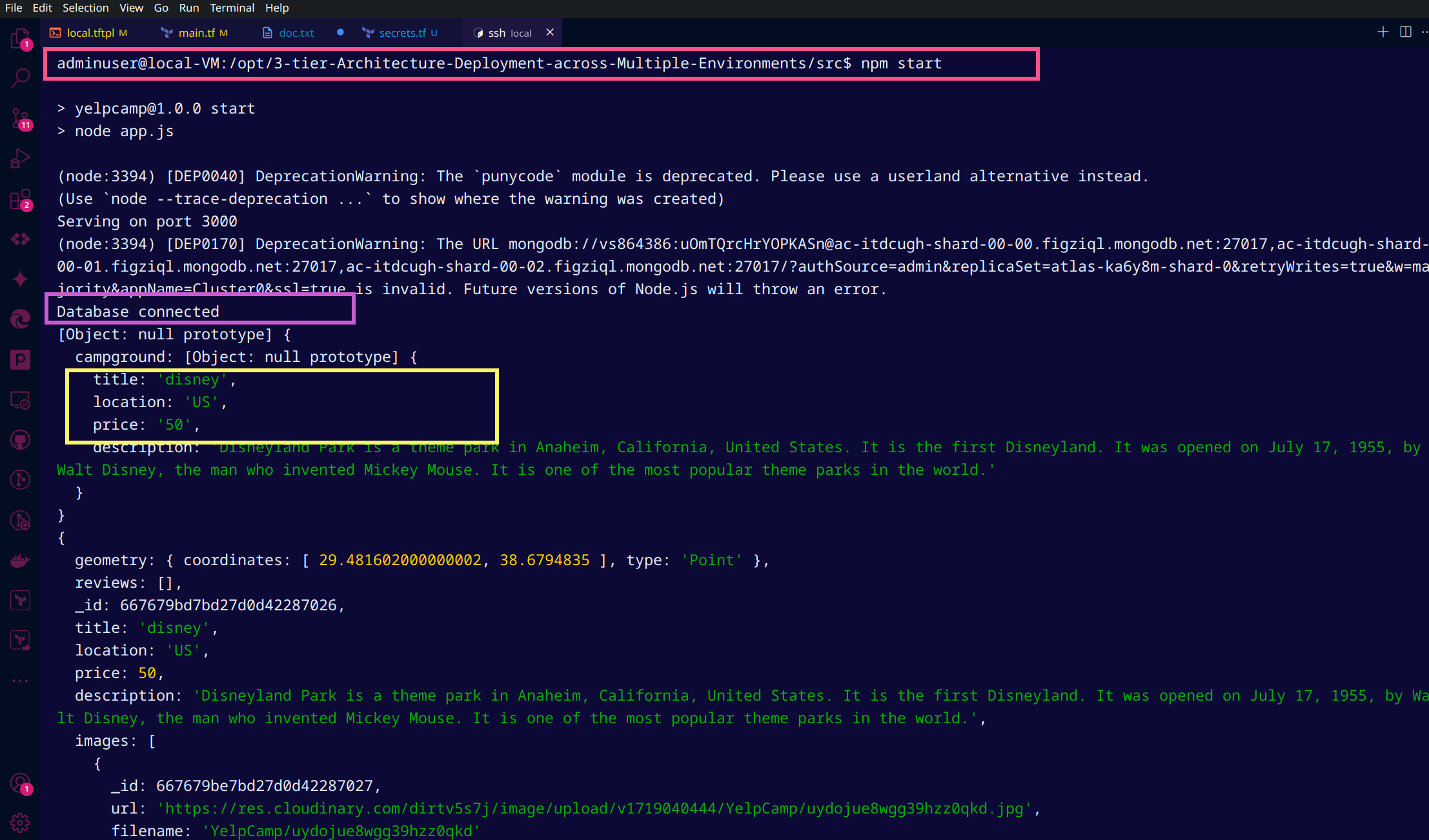
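Before starting the app, it can help to confirm that the .env file is complete. The sketch below is a hypothetical helper, not part of the repository. It assumes the .env file uses plain NAME=value lines and the same six variable names that appear in the Kubernetes secret later in this article.

```shell
#!/bin/sh
# Assumed variable names, taken from the secret keys listed for the prod deployment.
REQUIRED_VARS="CLOUDINARY_CLOUD_NAME CLOUDINARY_KEY CLOUDINARY_SECRET MAPBOX_TOKEN DB_URL SECRET"

# Report every required variable that is missing or empty in the given file.
# Returns 0 when nothing is missing, 1 otherwise.
missing_env_vars() {
    env_file="$1"
    missing=""
    for name in $REQUIRED_VARS; do
        # A variable counts as set when a line reads NAME=<non-empty value>.
        if ! grep -Eq "^${name}=.+" "$env_file"; then
            missing="$missing $name"
        fi
    done
    if [ -n "$missing" ]; then
        echo "missing:$missing"
        return 1
    fi
    echo "all variables set"
}
```

Running missing_env_vars src/.env from the repository root lists any variable that still needs a value.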

Step 3: SSH into the provisioned VM and follow these instructions:

Run ls /opt/ and make sure the repository has been cloned.

If it has been cloned, run:

    cd /opt/3-tier-Architecture-Deployment-across-Multiple-Environments/

Next, run the following command to load nvm so that npm is available:

    export NVM_DIR="/opt/nodejs/.nvm" && source "$NVM_DIR/nvm.sh"

Verify that npm is installed by running:

    npm -v

Then run:

    cd src
    npm start

A message confirming the database connection should appear in the terminal.

Access the application at http://VM_PublicIP:3000/, replacing VM_PublicIP with the actual public IP address shown for the VM in the Azure Portal.

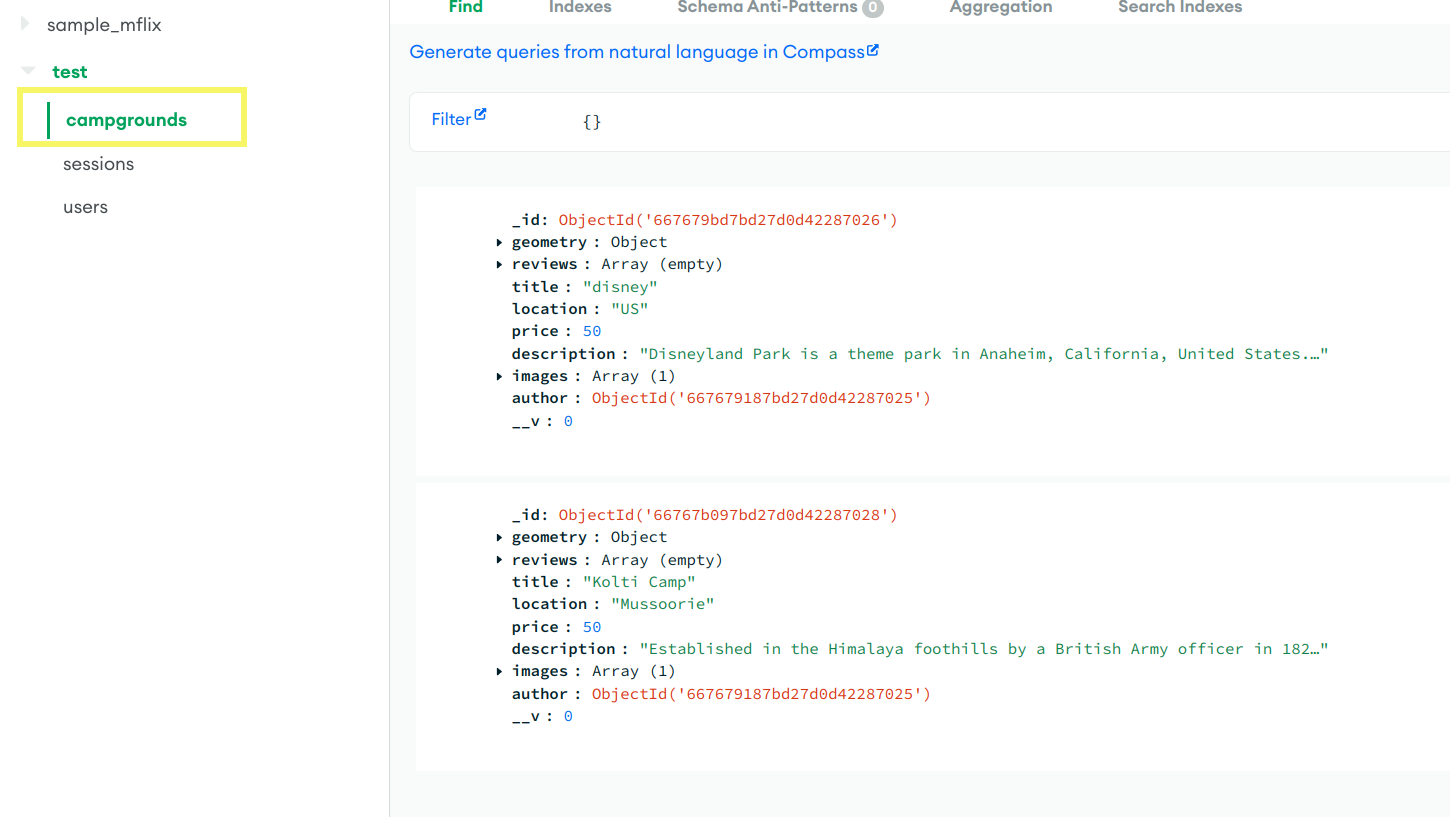

Now register and log in to the YelpCamp app, create new campgrounds, and verify that they appear in the database section of the MongoDB Atlas portal.

Congratulations🎉 you have deployed the app in the local environment.🙌

Deploying Dev Environment:

A development environment (Dev Env) is a crucial setup for software development teams. It provides a controlled space where developers can build, test, and refine applications before they are deployed to production. Setting up a reliable Dev Env ensures that developers can work efficiently and collaborate effectively.

Overview of Deployment Process

Here’s what we will cover:

1. Infrastructure Provisioning: Provision the dev infrastructure as described in the infrastructure section above.

2. Accessing & Configuring Jenkins and SonarQube: Setting up the Jenkins and SonarQube portals for continuous integration and code quality analysis is a lengthy task, so I have written up the detailed steps in a separate blog post:

%[https://blogs.vijaysingh.cloud/unlocking-jenkins]

3. Creating a Jenkins Pipeline: Writing and explaining a Jenkins Pipeline script step by step.

4. Running the Pipeline: Executing the pipeline and accessing the deployed application.

5. Troubleshooting: Tips for debugging common issues during the deployment process.

Step-by-Step Detailed Deployment Process

3. Creating a Jenkins Pipeline

Jenkins Pipeline script for deploying a Node.js application:

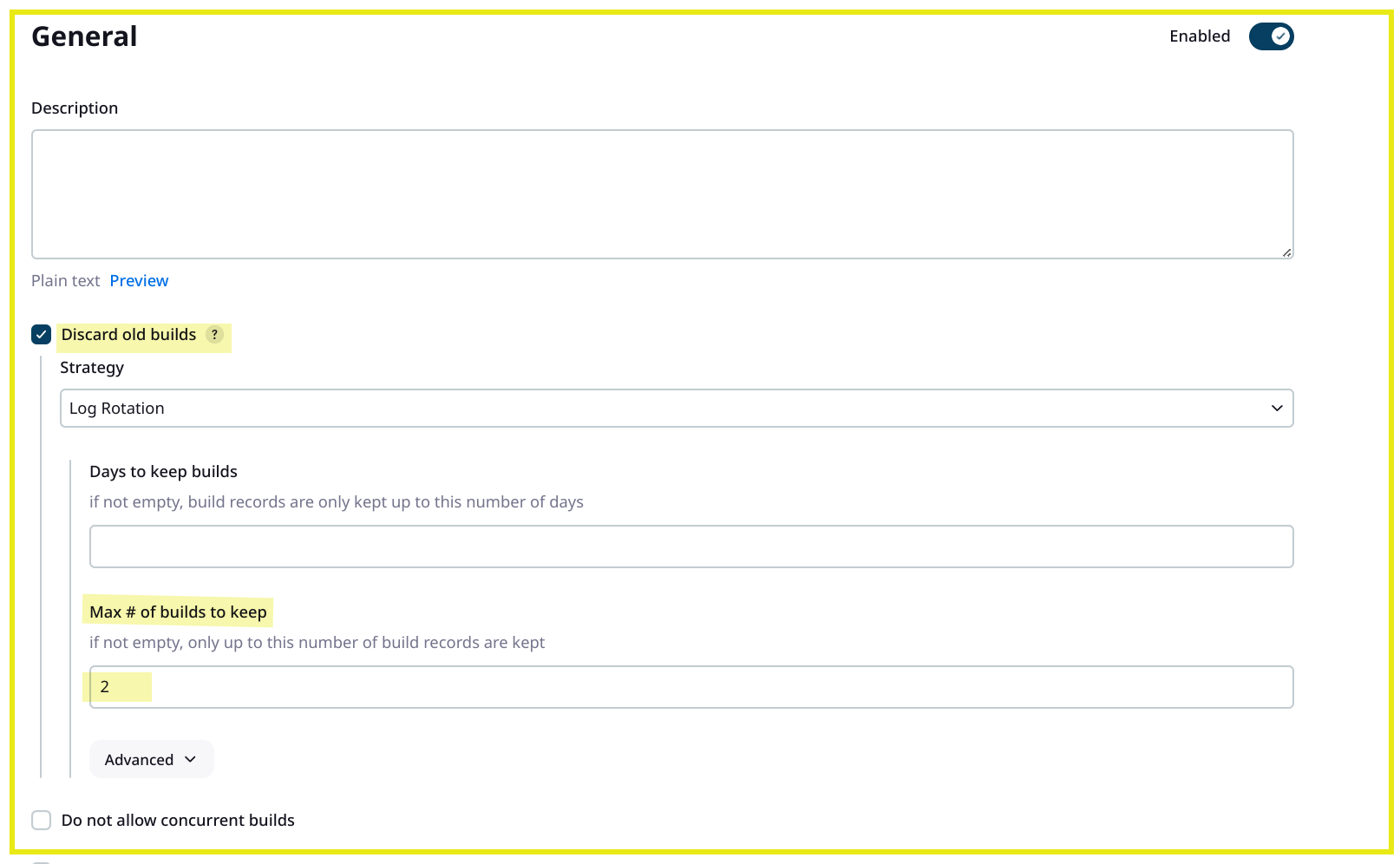

Create a New Job: Go to Jenkins Dashboard -> New Item and create a pipeline job named Deploy-Trio-Dev. I chose to keep only the two most recent builds in the job history.

Configure the Pipeline:

Pipeline:

    pipeline {
        agent any
        tools {
            nodejs 'node22'
        }
        environment {
            SCANNER_HOME = tool 'sonar-scanner'
        }
        stages {
            stage('Git Checkout') {
                steps {
                    git branch: 'main', url: 'https://github.com/vsingh55/3-tier-Architecture-Deployment-across-Multiple-Environments.git'
                }
            }
            stage('Install Package Dependencies') {
                steps {
                    dir('src') {
                        sh 'npm install'
                    }
                }
            }
            stage('Unit Test') {
                steps {
                    dir('src') {
                        sh 'npm test'
                    }
                }
            }
            stage('Trivy FS Scan') {
                steps {
                    dir('src') {
                        sh 'trivy fs --format table -o fs-report.html .'
                    }
                }
            }
            stage('SonarQube') {
                steps {
                    dir('src') {
                        withSonarQubeEnv("sonar") {
                            sh "\$SCANNER_HOME/bin/sonar-scanner -Dsonar.projectKey=Campground -Dsonar.projectName=Campground"
                        }
                    }
                }
            }
            stage('Docker Build & Tag') {
                steps {
                    script {
                        dir('src') {
                            withDockerRegistry(credentialsId: 'docker-crd', toolName: 'docker') {
                                sh "docker build -t vsingh55/camp:latest ."
                            }
                        }
                    }
                }
            }
            stage('Trivy Image Scan') {
                steps {
                    sh 'trivy image --format table -o image-report.html vsingh55/camp:latest'
                }
            }
            stage('Docker Push Image') {
                steps {
                    script {
                        withDockerRegistry(credentialsId: 'docker-crd', toolName: 'docker') {
                            sh "docker push vsingh55/camp:latest"
                        }
                    }
                }
            }
            stage('Docker Deploy To DEV Env') {
                steps {
                    script {
                        withDockerRegistry(credentialsId: 'docker-crd', toolName: 'docker') {
                            sh "docker run -d -p 3000:3000 vsingh55/camp:latest"
                        }
                    }
                }
            }
        }
    }

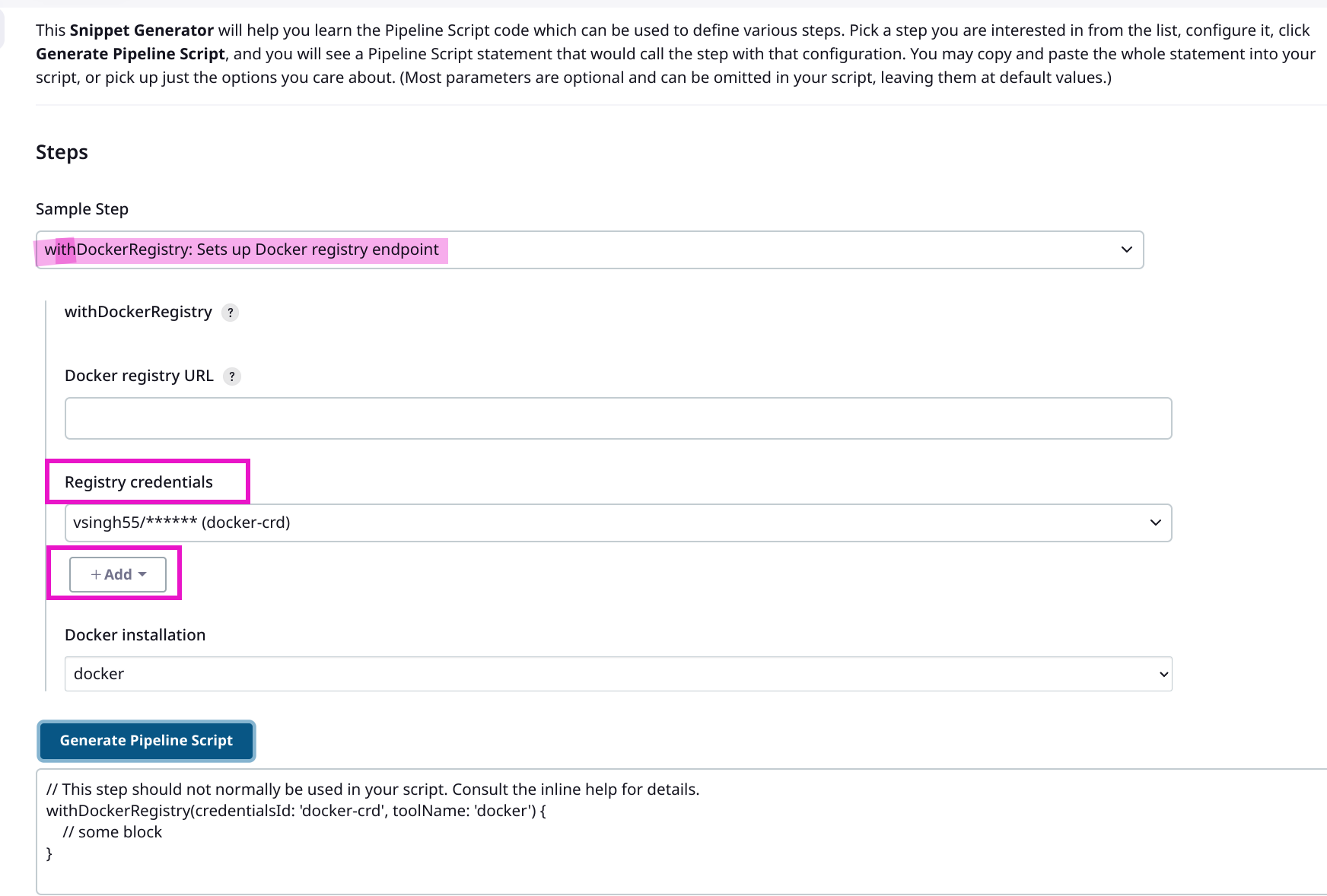

You can use the Pipeline Syntax option provided in Jenkins while writing the pipeline. The example below shows how to generate the script for Docker.

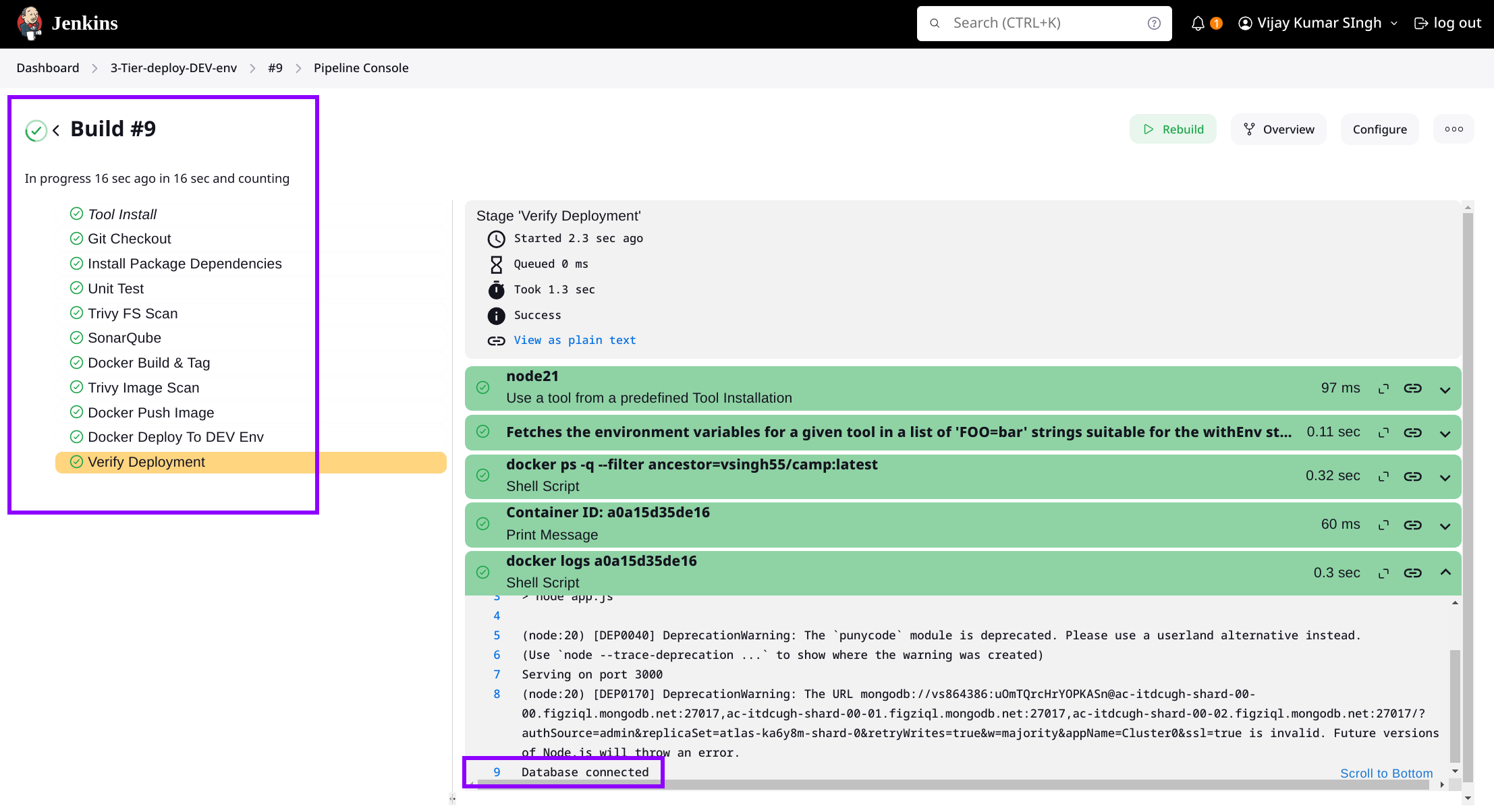

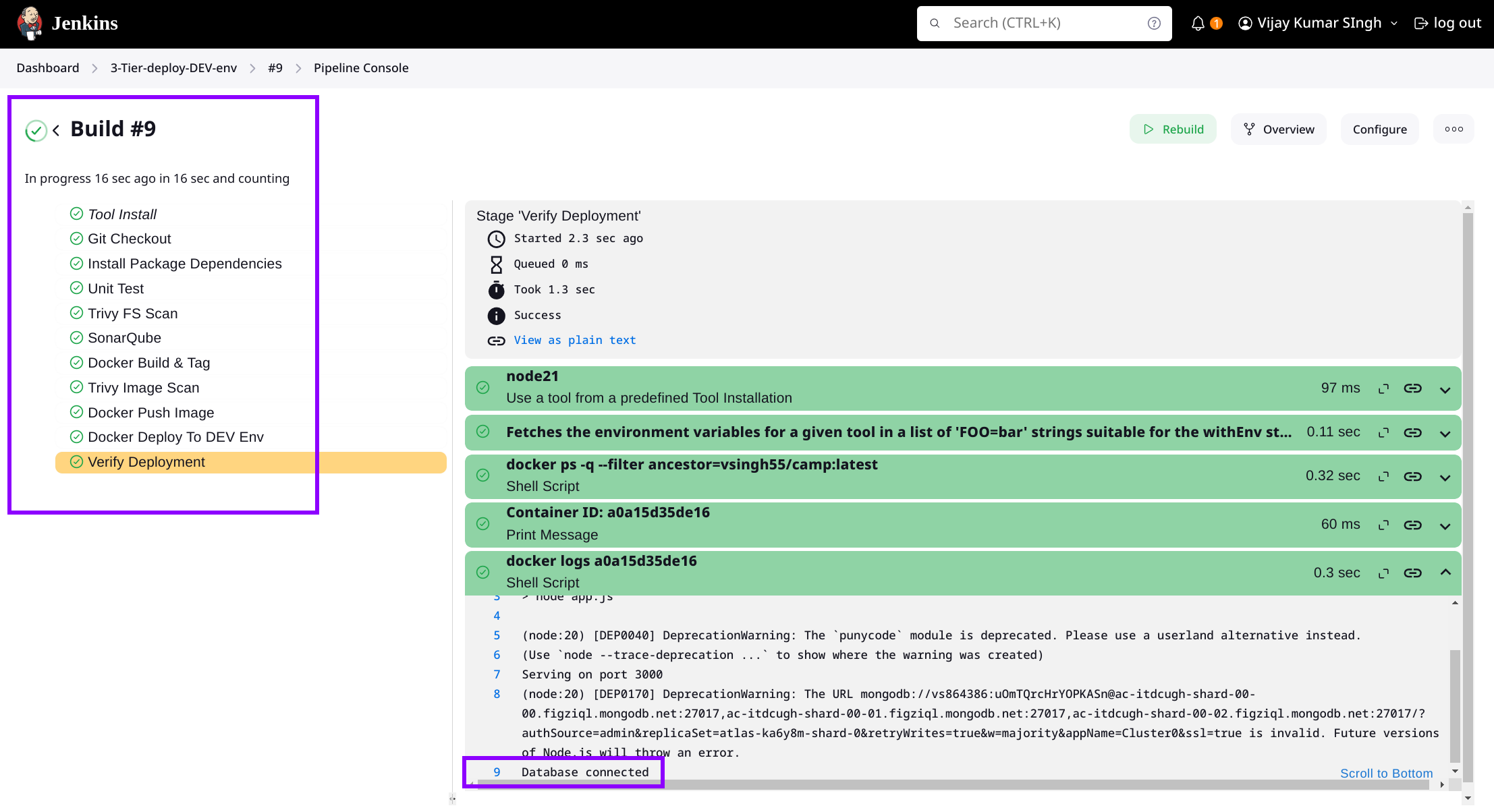

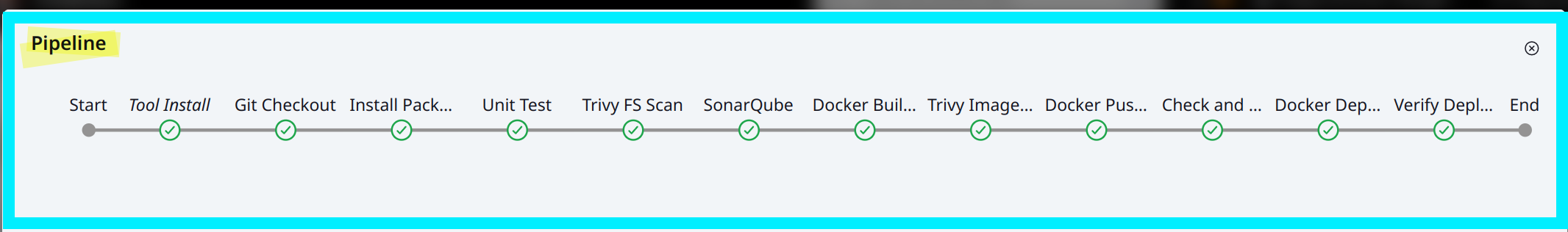

4. Running the Pipeline

Triggering the Pipeline: Commit changes to your application's Git repository to trigger the Jenkins Pipeline.

Monitoring Pipeline Execution: Watch the pipeline stages execute in Jenkins dashboard.

Accessing Deployed Application: Once deployment succeeds, access your application via

http://<Jenkins-VM-Public-IP>:3000

5. Troubleshooting Tips

Pipeline Failures: Check Jenkins console output for detailed error messages.

Infrastructure Issues: Verify resource configurations in Azure Portal or Terraform scripts.

Permission Issues: Ensure the user “jenkins” has been added to the “docker” group, then restart Jenkins so the new group membership takes effect.

sudo usermod -aG docker jenkins

Scenario: your pipeline runs successfully and the Docker container is up, but you cannot use all of the application's features because the environment variables are misconfigured. After you correct them and push the changes to Git, rerunning the pipeline fails because port 3000 is still occupied by the previously started container. To resolve this, the old container must be stopped before the new one is started on port 3000. To automate this, I added two stages to the pipeline: one before the deployment stage that stops any container publishing port 3000, and one after it that confirms the new container is running.

    stage('Checking & Stop Running Containers on Port 3000') {
        steps {
            script {
                def runningContainers = sh(script: "docker ps -q --filter 'publish=3000'", returnStdout: true).trim()
                if (runningContainers) {
                    echo "Stopping running containers on port 3000: ${runningContainers}"
                    sh "docker stop ${runningContainers}"
                } else {
                    echo "No running containers found on port 3000"
                }
            }
        }
    }

    stage('Docker Deploy To DEV Env') {
        // already provided in pipeline script
    }

    stage('Verify Deployment') {
        steps {
            script {
                try {
                    def containerId = sh(script: 'docker ps -q --filter ancestor=vsingh55/camp:latest', returnStdout: true).trim()
                    if (containerId) {
                        echo "Container ID: ${containerId}"
                        sh "docker logs ${containerId}"
                    } else {
                        error "No running container found for image vsingh55/camp:latest"
                    }
                } catch (Exception e) {
                    echo "Error during verification: ${e.getMessage()}"
                    sh 'docker ps -a'
                    error "Verification stage failed"
                }
            }
        }
    }
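When debugging directly on the Jenkins VM, outside the pipeline, the same port check can be run by hand. The function below is a hypothetical shell counterpart to the first stage above, offered as a sketch only. It uses the same docker ps publish filter as the pipeline.

```shell
#!/bin/sh
# Stop every running container that publishes the given host port.
stop_containers_on_port() {
    ids="$(docker ps -q --filter "publish=$1")"
    if [ -n "$ids" ]; then
        # $ids is intentionally unquoted so each ID becomes its own argument.
        docker stop $ids
    else
        echo "no running containers on port $1"
    fi
}
```

Calling stop_containers_on_port 3000 frees the port before a manual docker run.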

Create new campgrounds, sign up, and log in with new users.

Deploying Prod Environment:

In the production environment, the application is deployed on Azure Kubernetes Service (AKS) rather than using container deployment as in the development environment.

Deploying a Docker image stored in Docker Hub to an Azure Kubernetes Service (AKS) cluster using a Jenkins pipeline involves several steps, including setting up the AKS cluster, configuring Jenkins, and creating the Jenkins pipeline. Here are the detailed instructions:

Step 1: Set up variables:

Base64-encode the value of each environment variable using the following command. The -n flag stops echo from appending a newline, which would otherwise become part of the encoded secret:

    $ echo -n 'enter the env variable' | base64

Put the encoded values in the /src/Manifests/dss.yml file.

    # Use the same values that were generated for the local deployment.
    data:
      CLOUDINARY_CLOUD_NAME:
      CLOUDINARY_KEY:
      CLOUDINARY_SECRET:
      MAPBOX_TOKEN:
      DB_URL:
      SECRET:

Alternatively, kubectl create secret generic accepts the --from-literal flag, which takes plain values and encodes them for you, so you do not have to convert each value by hand.
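The encoding step can be sketched as a pair of small shell helpers. The names encode_secret and decode_secret are hypothetical. The sketch assumes a base64 command that accepts --decode, as the GNU coreutils version does.

```shell
#!/bin/sh
# Encode a value for the data: section of a Kubernetes Secret.
# printf '%s' avoids the trailing newline that a plain echo would add;
# tr removes the line wrapping that base64 applies to long input.
encode_secret() {
    printf '%s' "$1" | base64 | tr -d '\n'
}

# Decode a value again to confirm the round trip.
decode_secret() {
    printf '%s' "$1" | base64 --decode
}

encode_secret 'abc'   # prints YWJj
```

Decoding a value copied from dss.yml with decode_secret is a quick way to check that no stray newline was encoded along with it.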

Step 2: Create an AKS Cluster:

The AKS cluster was already provisioned with Terraform in the infrastructure section.

Configure kubectl:

Install Azure CLI and kubectl if not already installed.

Connect to your AKS cluster:

    $ az aks get-credentials --resource-group rg-Deploy-Trio-prod --name AKS-cluster-Deploy-Trio-australiaeast-prod --overwrite-existing
    $ kubectl create namespace webapps

Step 3: Configure Jenkins to Interact with AKS

Follow the same steps that have been suggested in Dev Deployment process.

Step 4: Create the Jenkins Pipeline

Create Jenkins Pipeline Job:

Create a new Jenkins pipeline job.

Use the following pipeline as a reference.

Pipeline Script:

    pipeline {
        agent any
        tools {
            nodejs 'node21'
        }
        environment {
            SCANNER_HOME = tool 'sonar-scanner'
        }
        stages {
            stage('Git Checkout') {
                steps {
                    git branch: 'main', url: 'https://github.com/vsingh55/3-tier-Architecture-Deployment-across-Multiple-Environments.git'
                }
            }
            stage('Install Package Dependencies') {
                steps {
                    dir('src') {
                        sh 'npm install'
                    }
                }
            }
            stage('Unit Test') {
                steps {
                    dir('src') {
                        sh 'npm test'
                    }
                }
            }
            stage('Trivy FS Scan') {
                steps {
                    dir('src') {
                        sh 'trivy fs --format table -o fs-report.html .'
                    }
                }
            }
            stage('SonarQube') {
                steps {
                    dir('src') {
                        withSonarQubeEnv("sonar") {
                            sh "\$SCANNER_HOME/bin/sonar-scanner -Dsonar.projectKey=Campground -Dsonar.projectName=Campground"
                        }
                    }
                }
            }
            stage('Docker Build & Tag') {
                steps {
                    script {
                        dir('src') {
                            withDockerRegistry(credentialsId: 'docker-crd', toolName: 'docker') {
                                sh "docker build -t vsingh55/campprod:latest ."
                            }
                        }
                    }
                }
            }
            stage('Trivy Image Scan') {
                steps {
                    sh 'trivy image --format table -o image-report.html vsingh55/campprod:latest'
                }
            }
            stage('Docker Push Image') {
                steps {
                    script {
                        withDockerRegistry(credentialsId: 'docker-crd', toolName: 'docker') {
                            sh "docker push vsingh55/campprod:latest"
                        }
                    }
                }
            }
            stage('Deploy to AKS Cluster') {
                steps {
                    dir('src') {
                        withCredentials([file(credentialsId: 'k8-secret', variable: 'KUBECONFIG')]) {
                            sh "kubectl apply -f Manifests/dss.yml -n webapps"
                            sh "kubectl apply -f Manifests/svc.yml -n webapps"
                            sleep 60
                        }
                    }
                }
            }
        }
    }

Step 5: Run the Jenkins Pipeline

Trigger the Pipeline:

Trigger the pipeline manually or configure it to run on Git commits.

Monitor the pipeline stages in Jenkins to ensure each step completes successfully.

Monitor Deployment:

After the deployment stage completes, monitor your AKS cluster to ensure the application is running.

You can use the following commands to check the status:

    kubectl get pods -n webapps
    kubectl get svc -n webapps
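Instead of checking by hand, the status check can be polled. The sketch below is a hypothetical helper, not part of the repository. It treats the namespace as healthy when at least one pod exists and every pod shows Running in the STATUS column of kubectl get pods. Note that Running does not guarantee the application inside the pod is ready to serve traffic.

```shell
#!/bin/sh
# Succeeds only when the namespace has at least one pod and every pod
# reports STATUS "Running" (third column of the --no-headers output).
all_pods_running() {
    kubectl get pods -n "$1" --no-headers 2>/dev/null |
        awk 'BEGIN { n = 0; bad = 0 }
             { n++; if ($3 != "Running") bad++ }
             END { exit !(n > 0 && bad == 0) }'
}

# Poll until the pods are running or the attempts are used up.
# Arguments: namespace, number of attempts, delay in seconds.
wait_for_pods() {
    namespace="$1"; attempts="$2"; delay="$3"
    i=0
    while [ "$i" -lt "$attempts" ]; do
        if all_pods_running "$namespace"; then
            echo "pods in $namespace are running"
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    echo "pods in $namespace not running after $attempts attempts" >&2
    return 1
}
```

For example, wait_for_pods webapps 12 5 checks the webapps namespace every five seconds for up to a minute.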

By following these steps, you can deploy a Docker image from Docker Hub to an AKS cluster using a Jenkins pipeline.

Conclusion:

Deploying a 3-tier architecture on Azure Kubernetes Service (AKS) using Terraform and Jenkins provides a robust and scalable solution for managing web applications like YelpCamp. By leveraging Infrastructure as Code (IaC) with Terraform, we ensure consistent and repeatable deployments across multiple environments. The integration of Docker for containerization, SonarQube for static code analysis, and Trivy for vulnerability scanning enhances the security and quality of the application. The CI/CD pipelines set up with Jenkins automate the deployment process, ensuring efficient and reliable updates to the application. This comprehensive approach not only streamlines the development and deployment process but also ensures that the application is secure, scalable, and maintainable.

References:

Refer to the following blogs to gain a better understanding of Terraform modules and Jenkins configurations:

- Terraform Module:

- Configuring Jenkins:

