Using GenAI Gateway capabilities for OpenAI

Osama Shaikh


Introduction

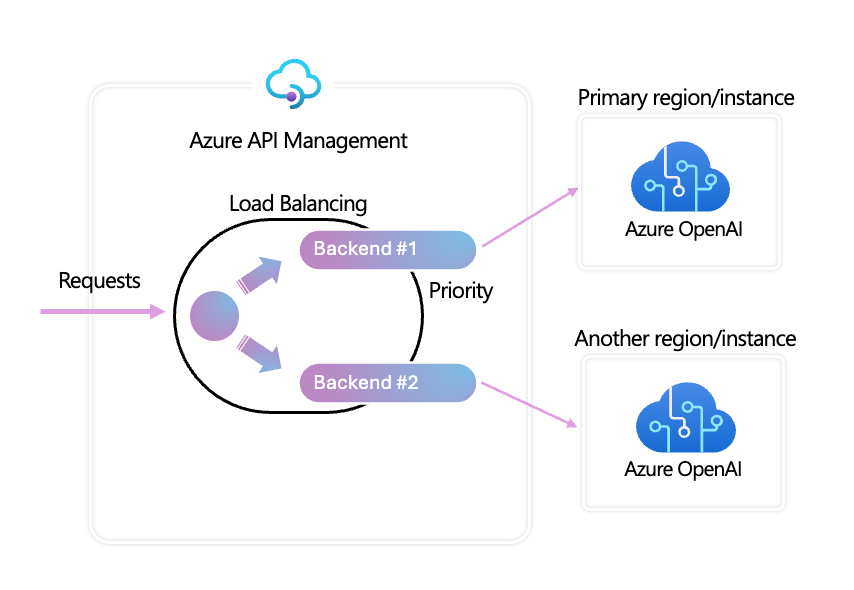

In this blog post, I will demonstrate how to leverage the newly announced GenAI gateway features in API Management (APIM) to enhance the resiliency and capacity of your Azure OpenAI deployments using circuit breaker and load balancing patterns.

Background

Previously, I shared several ways to use different routing algorithms (weighted and priority-based) to load balance traffic across multiple OpenAI endpoints for better resilience and performance. However, these custom solutions often failed under extremely heavy traffic.

Since I have already discussed the challenges around Azure OpenAI tokens-per-minute (TPM) limits and APIM patterns in a previous blog post, this post focuses directly on the solution.

Load Balancing Features in APIM for OpenAI

The solution has two main configuration parts: load balancing and the circuit breaker. The backends and the API can be set up in the APIM portal (UI), while the load balancer pool and the circuit breaker rules are configured with Bicep (Infrastructure as Code).

Configuration of APIM via Portal (UI)

Support for Load Balancing Techniques: As announced at the most recent Microsoft Build event, APIM now supports weighted and priority-based load balancing.

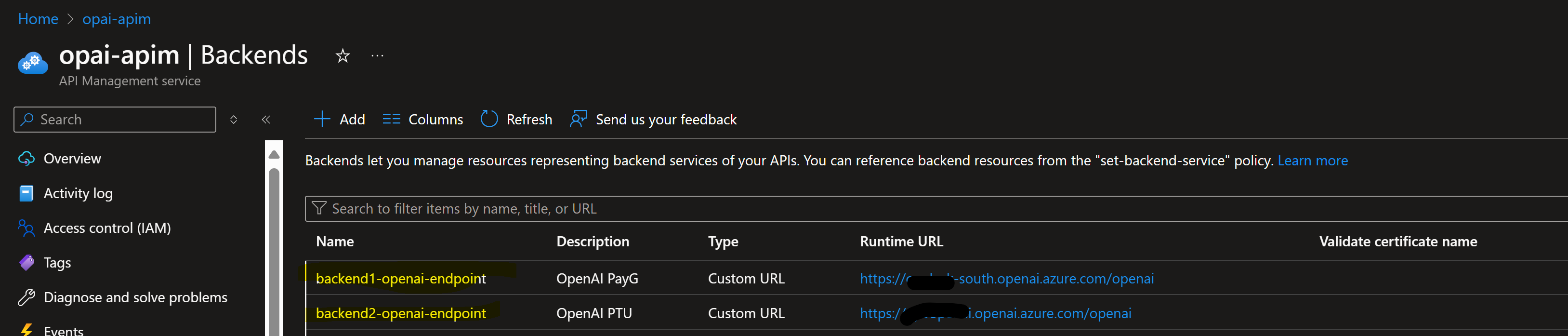

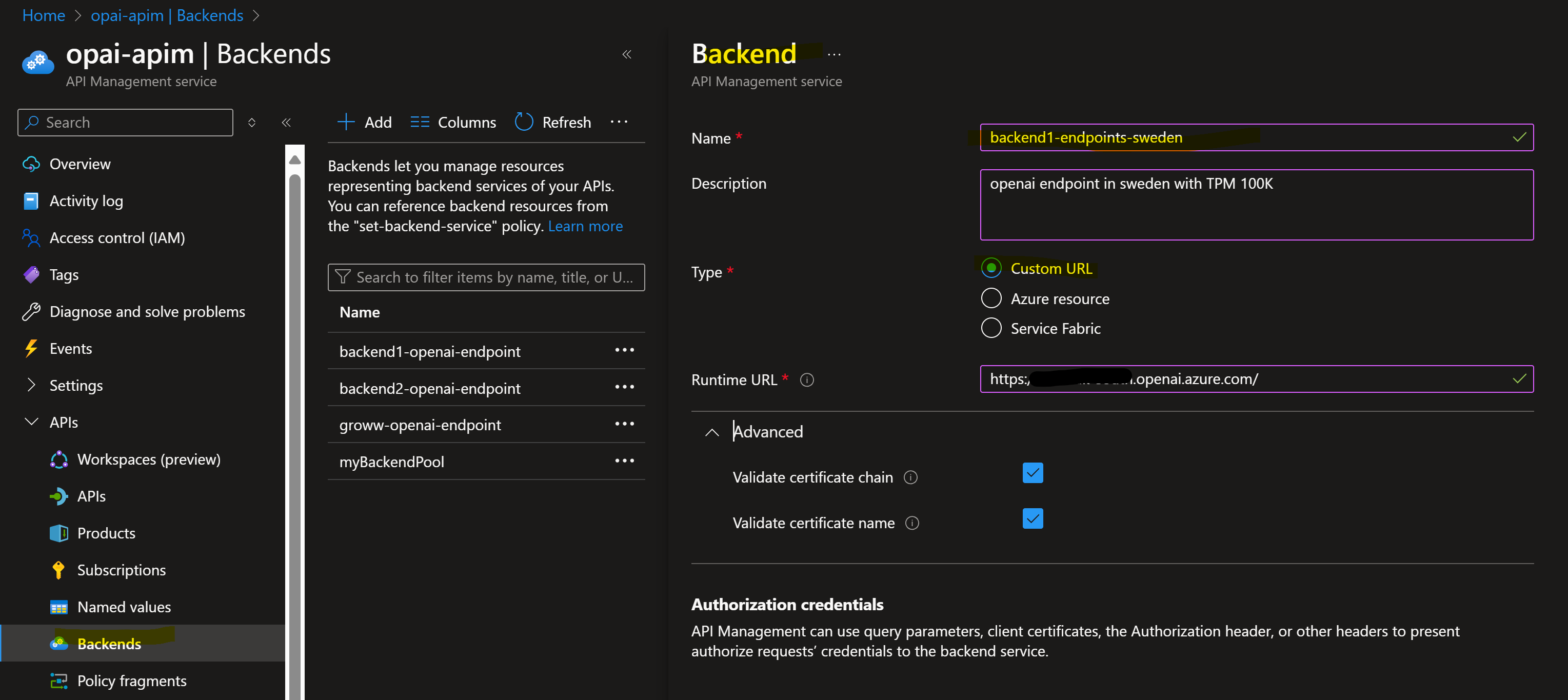
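To make the two techniques concrete, here is a minimal Python model of how a pool could pick a backend. It assumes the semantics as I understand them from the APIM documentation: the available group with the lowest priority value is used first, and within that group traffic is split in proportion to weight. It illustrates the routing rule only and is not APIM's implementation; `Backend` and `pick_backend` are hypothetical names.

```python
import random
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Backend:
    """Hypothetical model of one pool member."""
    name: str
    priority: int  # lower value = preferred group (assumed semantics)
    weight: int    # relative traffic share within the same priority group
    healthy: bool = True  # False models a backend whose circuit breaker has tripped


def pick_backend(backends: Sequence[Backend], rng: random.Random) -> Optional[Backend]:
    """Choose a backend: lowest available priority group first, then weighted random."""
    available = [b for b in backends if b.healthy]
    if not available:
        return None  # nothing left to route to
    best = min(b.priority for b in available)
    group = [b for b in available if b.priority == best]
    # random.choices picks one item with probability proportional to its weight
    return rng.choices(group, weights=[b.weight for b in group], k=1)[0]


if __name__ == "__main__":
    rng = random.Random(42)
    pool = [Backend("uksouth", priority=1, weight=1), Backend("eastus", priority=1, weight=10)]
    counts = {"uksouth": 0, "eastus": 0}
    for _ in range(11_000):
        counts[pick_backend(pool, rng).name] += 1
    print(counts)
    pool[1].healthy = False  # simulate the EastUS backend being tripped
    print(pick_backend(pool, rng).name)  # only uksouth remains
```

With weights 1 and 10, EastUS should receive roughly ten times as many requests as UKSouth; marking EastUS unhealthy sends everything to UKSouth, which is the failover behavior validated at the end of this post.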

Creating Backend Resources: Begin by creating backend resources for each OpenAI endpoint in APIM. For instance, you can add two OpenAI endpoints from different regions.

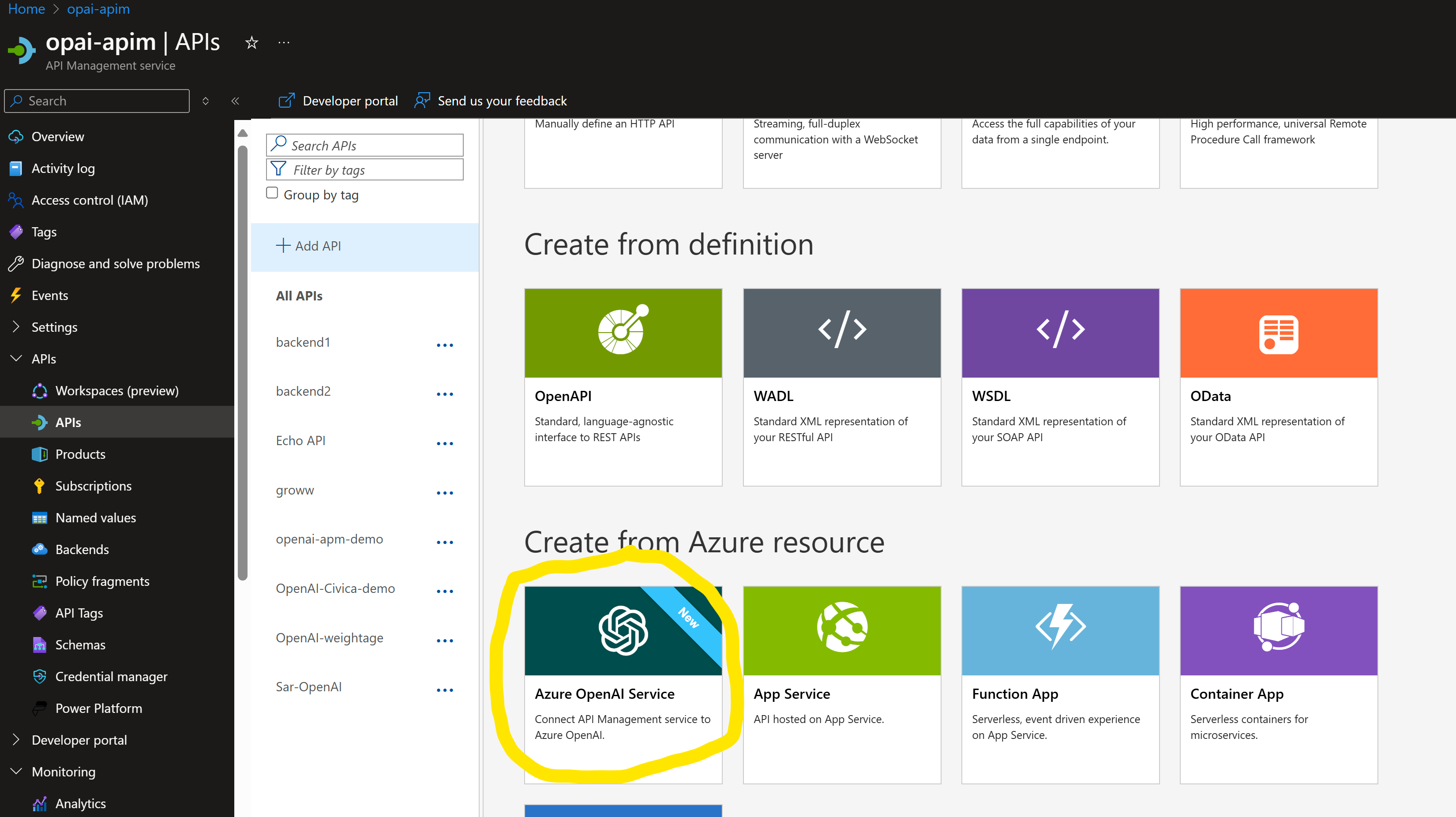

Import New API: Use the Azure OpenAI Service template to import a new API. Select an OpenAI endpoint and a base URL suffix; the suffix forms the APIM URI path, e.g., https://myapim.azure-api.net/(basesuffix).
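After the import, clients call APIM instead of the OpenAI endpoint. Assuming the template maps https://myapim.azure-api.net/(basesuffix) onto the endpoint's `/openai` path (which matches the backend URL used in the circuit breaker Bicep later in this post), a chat completions URL can be built as in this sketch. The suffix `openai`, the deployment name `gpt-4o`, and the `api-version` value are hypothetical placeholders.

```python
from urllib.parse import quote, urlencode


def apim_chat_completions_url(apim_host: str, base_suffix: str, deployment: str,
                              api_version: str) -> str:
    """Client-facing chat completions URL for an Azure OpenAI API fronted by APIM.

    Assumes APIM maps https://<apim_host>/<base_suffix> onto the endpoint's /openai path.
    """
    suffix = base_suffix.strip("/")
    path = f"/{suffix}/deployments/{quote(deployment, safe='')}/chat/completions"
    return f"https://{apim_host}{path}?{urlencode({'api-version': api_version})}"


if __name__ == "__main__":
    # Hypothetical values: APIM host, base suffix "openai", deployment "gpt-4o"
    print(apim_chat_completions_url("myapim.azure-api.net", "openai", "gpt-4o", "2024-02-01"))
    # prints https://myapim.azure-api.net/openai/deployments/gpt-4o/chat/completions?api-version=2024-02-01
```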

Add each of your Azure OpenAI endpoints as a backend resource in API Management.

Configuration of LB & Circuit Breaker via Bicep

At the time of writing, the portal offers no UI for load balancer or circuit breaker configuration, so both must be configured with Bicep (Infrastructure as Code).

Load Balancer Configuration:

Define Load Balancer Method: You can configure the load balancer to use either weight or priority, or both. In this demo, we will use weight-based load balancing, where one endpoint will have a higher weight than another.
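Weights are relative, so within a single priority group each backend's expected share of traffic is its weight divided by the sum of the weights. A small Python sketch of that arithmetic, using the weights from the pool definition below (1 for backend1, 10 for backend2); `expected_shares` is a hypothetical helper name.

```python
from fractions import Fraction


def expected_shares(weights: dict[str, int]) -> dict[str, Fraction]:
    """Expected fraction of requests per backend when all share one priority group."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {name: Fraction(w, total) for name, w in weights.items()}


if __name__ == "__main__":
    # Weights from the backend pool: backend1 = 1, backend2 = 10
    for name, share in expected_shares({"backend1": 1, "backend2": 10}).items():
        print(f"{name}: {share} (~{float(share):.1%})")
```

It prints 1/11 (about 9.1%) for backend1 and 10/11 (about 90.9%) for backend2, so while both backends are healthy roughly 91% of requests should reach backend2.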

Code Snippet Adjustments: Replace the APIM instance name and the backend pool name with your own, and reference the backends created in the previous step. This example uses two backends, but you can add more as needed.
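Each entry in the pool's `services` list references a backend by its full Azure Resource Manager ID. Generating those IDs from one set of values helps keep the APIM name inside the IDs consistent with the pool's own name. This is a hypothetical helper, not part of any Azure SDK, and the values in the example are placeholders.

```python
def backend_resource_id(subscription_id: str, resource_group: str, apim_name: str,
                        backend_name: str) -> str:
    """Full ARM ID of an APIM backend, in the form used by a pool's services list."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.ApiManagement/service/{apim_name}"
        f"/backends/{backend_name}"
    )


def pool_services(subscription_id: str, resource_group: str, apim_name: str,
                  weights: dict[str, int], priority: int = 1) -> list[dict]:
    """Build services entries (id, priority, weight) for backends sharing one priority."""
    return [
        {
            "id": backend_resource_id(subscription_id, resource_group, apim_name, name),
            "priority": priority,
            "weight": weight,
        }
        for name, weight in weights.items()
    ]


if __name__ == "__main__":
    for entry in pool_services("(subscriptionid)", "(resource-group-name)", "(APIM-Name)",
                               {"backend1-openai-endpoint": 1, "backend2-openai-endpoint": 10}):
        print(entry)
```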

resource symbolicname 'Microsoft.ApiManagement/service/backends@2023-09-01-preview' = { name: '(APIM-Name)/mybackendpool' properties: { description: 'Load balancer for multiple backends' type: 'Pool' pool: { services: [ { id: '/subscriptions/(subscripitonid)/resourceGroups/DefaultResourceGroup-SUK/providers/Microsoft.ApiManagement/service/opai-apim/backends/backend1-openai-endpoint' priority: 1 weight: 1 } { id: '/subscriptions/(subscripitonid)/resourceGroups/DefaultResourceGroup-SUK/providers/Microsoft.ApiManagement/service/opai-apim/backends/backend2-openai-endpoint' priority: 1 weight: 10 } ] } } }Deploy the Bicep Template: Deploy this Bicep template and look for changes in the backend pool to ensure it is deployed successfully.

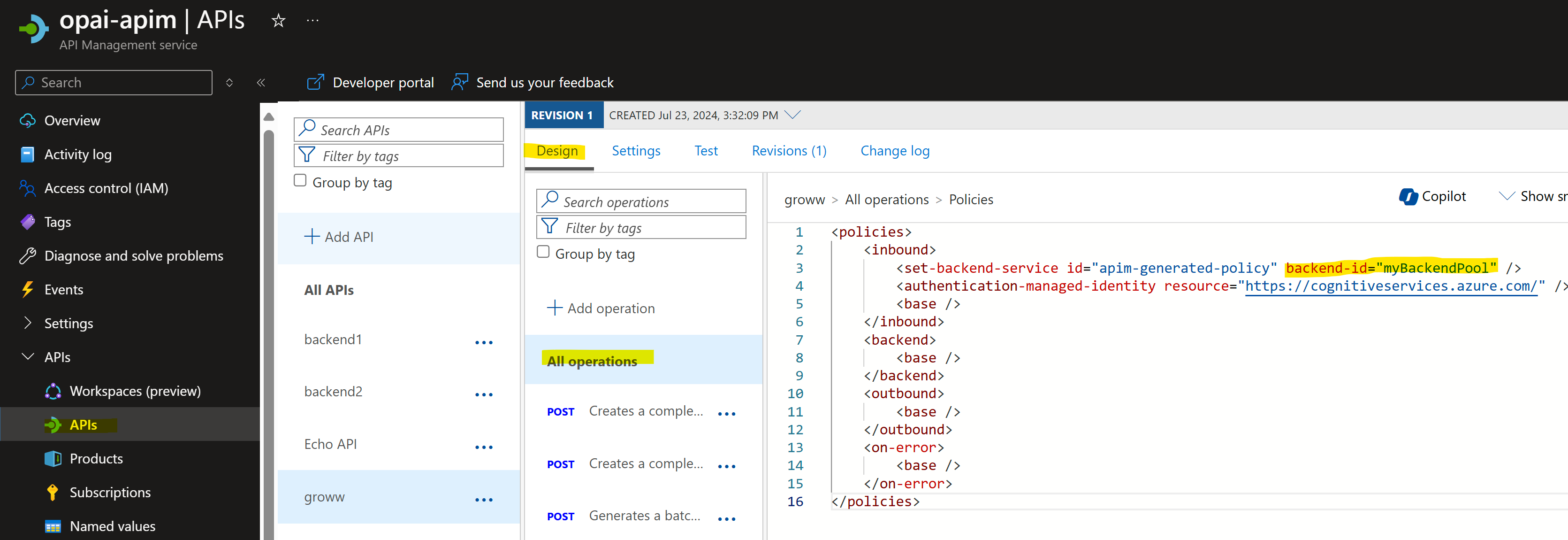

Update API with New Backend Name: Once deployed, you will see the new backend name in APIM backends. Copy that name and replace it in the API you created in the initial steps.

az deployment group create --resource-group Rresource-group-name --template-file apim.bicepCircuit Breaker Configuration:

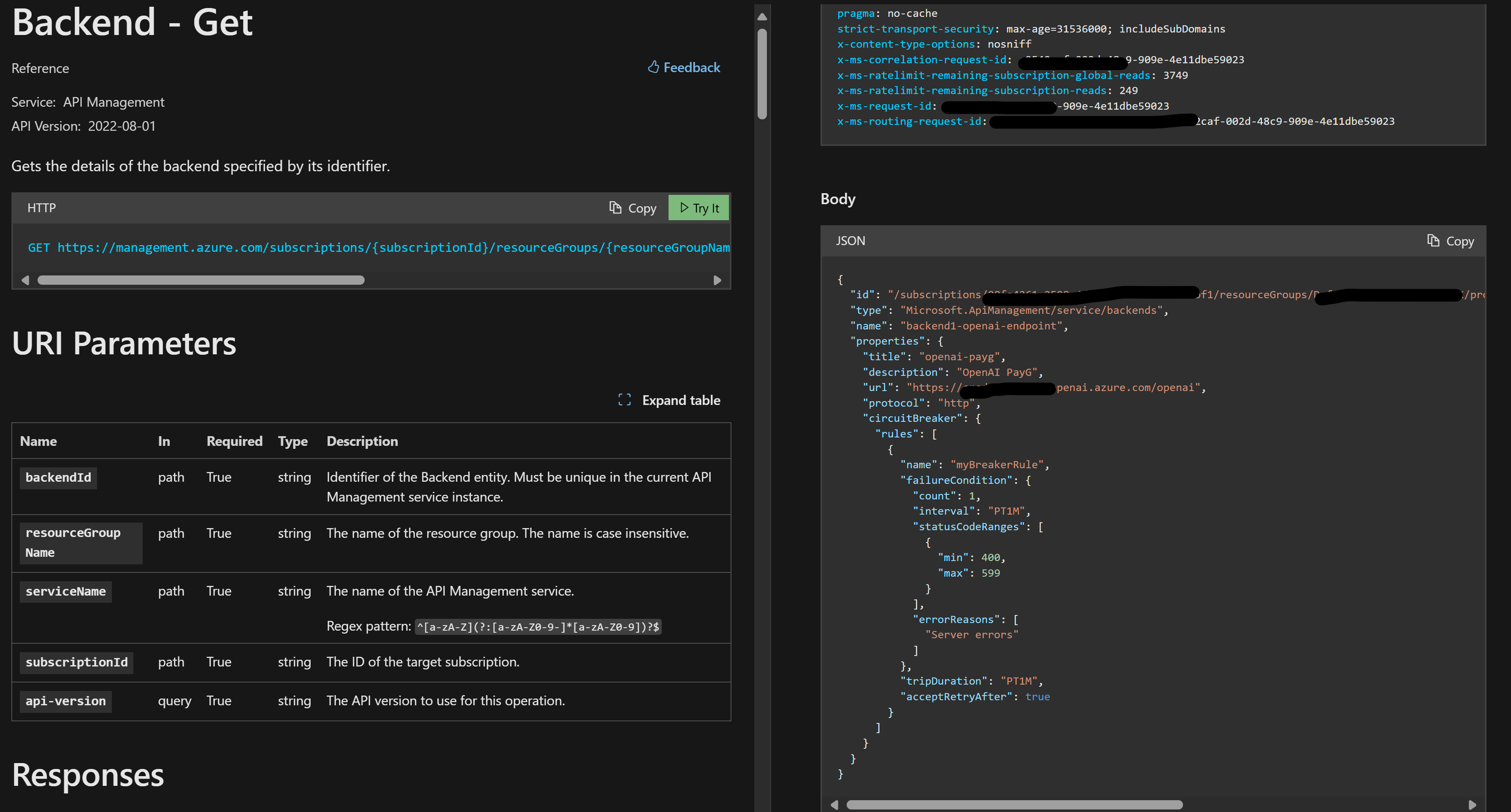

Health Probe Setup: Configure circuit breaker conditions on each backend resource (each OpenAI endpoint). A rule consists of a 'failure condition' and a 'retry after' (trip duration) period.

Failure Condition: Define how many errors within a specific interval trip the breaker (e.g., 5 errors in 1 minute), and specify the HTTP status code ranges that count as errors.

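Retry After: Specify how long APIM keeps a tripped backend out of rotation before trying it again (`tripDuration`). With `acceptRetryAfter` set to true, APIM also honors the Retry-After header returned by the backend. In this configuration, status codes 400-599 count as errors, a single error (`count: 1`) within a 1-minute interval trips the breaker, and the trip duration is 1 minute; the same rule is set on each backend.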
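To reason about how these settings interact, here is a simplified Python model of one rule: failures are status codes inside the configured range, counted over a sliding interval, and reaching `count` takes the backend out of rotation for `tripDuration`. Assumption: when `acceptRetryAfter` is true and the backend supplies a Retry-After value, the model uses that value instead of `tripDuration`; check the APIM documentation for the exact semantics. This is not APIM's implementation, and `CircuitBreaker` is a hypothetical class.

```python
from collections import deque
from typing import Optional


class CircuitBreaker:
    """Simplified model of a failureCondition / tripDuration / acceptRetryAfter rule.

    Timestamps are plain seconds supplied by the caller.
    """

    def __init__(self, count: int, interval_s: float, trip_duration_s: float,
                 status_min: int = 400, status_max: int = 599,
                 accept_retry_after: bool = True):
        self.count = count
        self.interval_s = interval_s
        self.trip_duration_s = trip_duration_s
        self.status_min = status_min
        self.status_max = status_max
        self.accept_retry_after = accept_retry_after
        self.failures = deque()  # timestamps of recent failures
        self.open_until = float("-inf")

    def is_open(self, now: float) -> bool:
        """True while the backend is tripped and should receive no traffic."""
        return now < self.open_until

    def record(self, now: float, status: int, retry_after_s: Optional[float] = None) -> None:
        """Record one response and trip the breaker if the failure condition is met."""
        if not self.status_min <= status <= self.status_max:
            return  # success, or a status code outside the configured range
        self.failures.append(now)
        # Drop failures that have fallen out of the sliding interval
        while self.failures and self.failures[0] <= now - self.interval_s:
            self.failures.popleft()
        if len(self.failures) >= self.count:
            duration = self.trip_duration_s
            if self.accept_retry_after and retry_after_s is not None:
                duration = retry_after_s  # assumed: Retry-After replaces tripDuration
            self.open_until = now + duration
            self.failures.clear()
```

With `count=1`, `interval_s=60`, and `trip_duration_s=60` (the values in the Bicep below), a single 429 in this model removes the backend from rotation for one minute.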

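```bicep
// Individual backend (one OpenAI endpoint) with a circuit breaker rule.
// Replace the placeholders with your APIM instance, backend name, and endpoint URL.
resource openaiprodbackend 'Microsoft.ApiManagement/service/backends@2023-09-01-preview' = {
  name: 'APIM-name/nameofbackend'
  properties: {
    url: 'https://openaiendpointname.openai.azure.com/openai'
    protocol: 'http'
    circuitBreaker: {
      rules: [
        {
          failureCondition: {
            count: 1          // failures within the interval that trip the breaker
            errorReasons: [
              'Server errors'
            ]
            interval: 'PT1M'  // window for counting failures (ISO 8601: 1 minute)
            statusCodeRanges: [
              {
                min: 400      // treat 400-599 responses, including 429, as failures
                max: 599
              }
            ]
          }
          name: 'myBreakerRulePTU'
          tripDuration: 'PT1M'    // how long the backend stays out of rotation once tripped
          acceptRetryAfter: true  // honor the backend's Retry-After header
        }
      ]
    }
    description: 'OpenAI PTU'
    title: 'openai-PTU'
  }
}
```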
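The `interval` and `tripDuration` values in the rule above are ISO 8601 durations (`PT1M` is one minute). If you template these values or review them in a pipeline, a small check like this sketch can catch typos before deployment. It handles only the hours/minutes/seconds form used here, and `duration_seconds` is a hypothetical helper.

```python
import re

# Time-only ISO 8601 durations such as PT1M, PT30S, or PT1H30M (whole numbers only)
_DURATION = re.compile(r"PT(?:(\d+)H)?(?:(\d+)M)?(?:(\d+)S)?")


def duration_seconds(value: str) -> int:
    """Convert a time-only ISO 8601 duration (PTnHnMnS) to whole seconds."""
    match = _DURATION.fullmatch(value)
    if not match or value == "PT":
        raise ValueError(f"unsupported duration: {value!r}")
    hours, minutes, seconds = (int(g) if g else 0 for g in match.groups())
    return hours * 3600 + minutes * 60 + seconds


if __name__ == "__main__":
    for name, value in {"interval": "PT1M", "tripDuration": "PT1M"}.items():
        print(name, duration_seconds(value))
```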

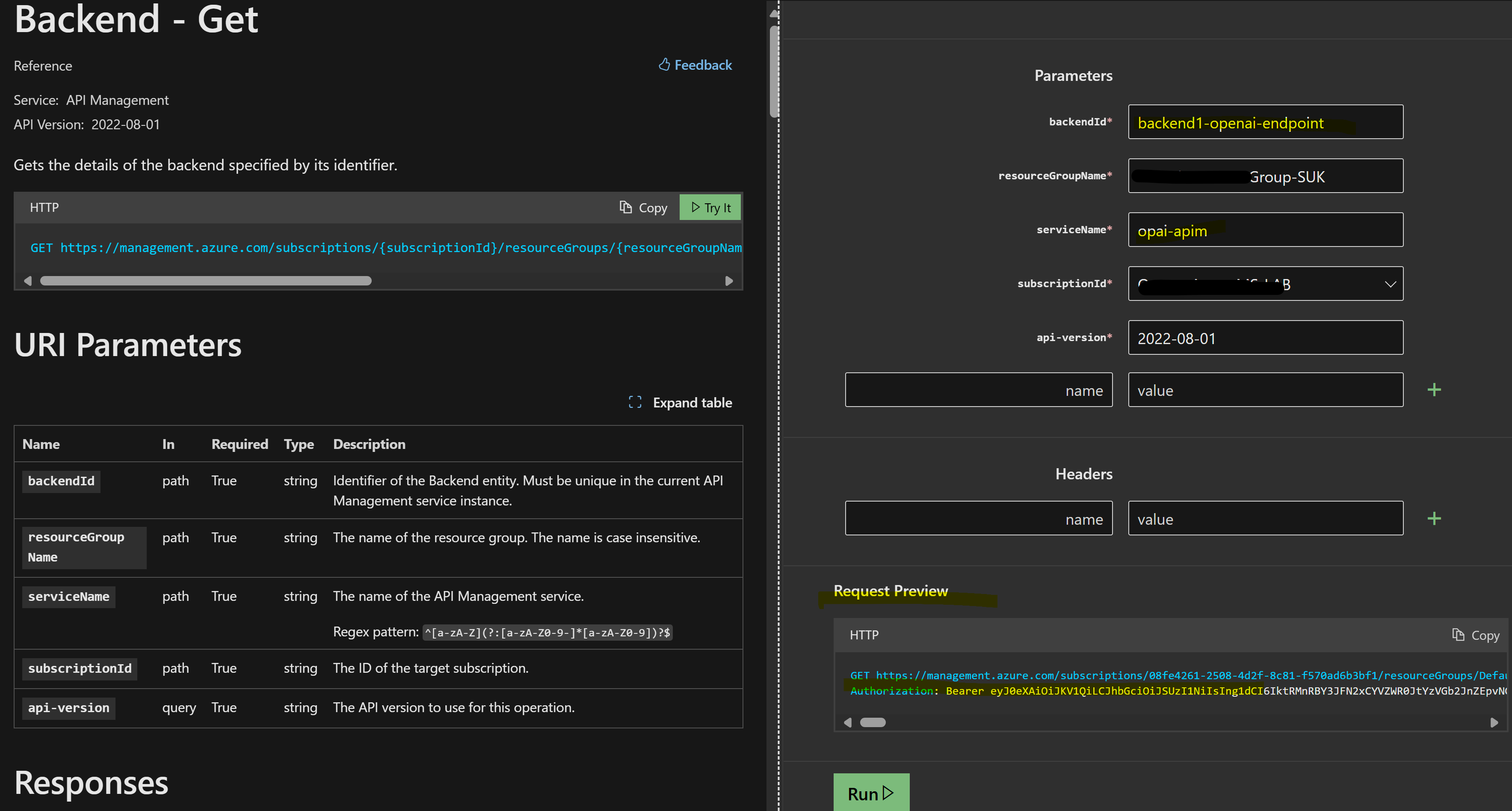

Verification via API

Since there is no UI to verify the circuit breaker configuration, use the APIM Backend REST API to confirm the changes, either from Postman or with the validation tool in the APIM REST API documentation. Specify the backend name in `backendId` and the APIM name in `serviceName`. The Bicep configuration is now complete; next, we test the load balancer and circuit breaker in action.
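If you prefer a script to Postman, the same check can be made against the Azure Resource Manager endpoint for the Backend - Get operation. This sketch builds the request with the Python standard library and extracts the circuit breaker rules from the response body. The bearer token (for example from `az account get-access-token`) and all names are placeholders, and the helper functions are hypothetical.

```python
import json
import urllib.request

ARM_ENDPOINT = "https://management.azure.com"


def backend_get_request(subscription_id: str, resource_group: str, service_name: str,
                        backend_id: str, token: str,
                        api_version: str = "2023-09-01-preview") -> urllib.request.Request:
    """Build (but do not send) a GET request for the APIM Backend - Get operation."""
    url = (
        f"{ARM_ENDPOINT}/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.ApiManagement/service/{service_name}"
        f"/backends/{backend_id}?api-version={api_version}"
    )
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"}, method="GET")


def fetch_backend(req: urllib.request.Request) -> dict:
    """Send the request and parse the JSON body (needs network access and a valid token)."""
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def circuit_breaker_rules(backend: dict) -> list:
    """Return the circuit breaker rules from a Backend - Get response body, if any."""
    props = backend.get("properties") or {}
    breaker = props.get("circuitBreaker") or {}
    return breaker.get("rules") or []


if __name__ == "__main__":
    # Placeholders only; this prints the URL without sending anything.
    req = backend_get_request("(subscriptionid)", "(resource-group-name)", "(APIM-Name)",
                              "(backend-name)", "(token)")
    print(req.full_url)
```

Calling `circuit_breaker_rules(fetch_backend(req))` with real values should list the rule deployed above, such as `myBreakerRulePTU`.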

Validation/Test

To test the circuit breaker with load balancing:

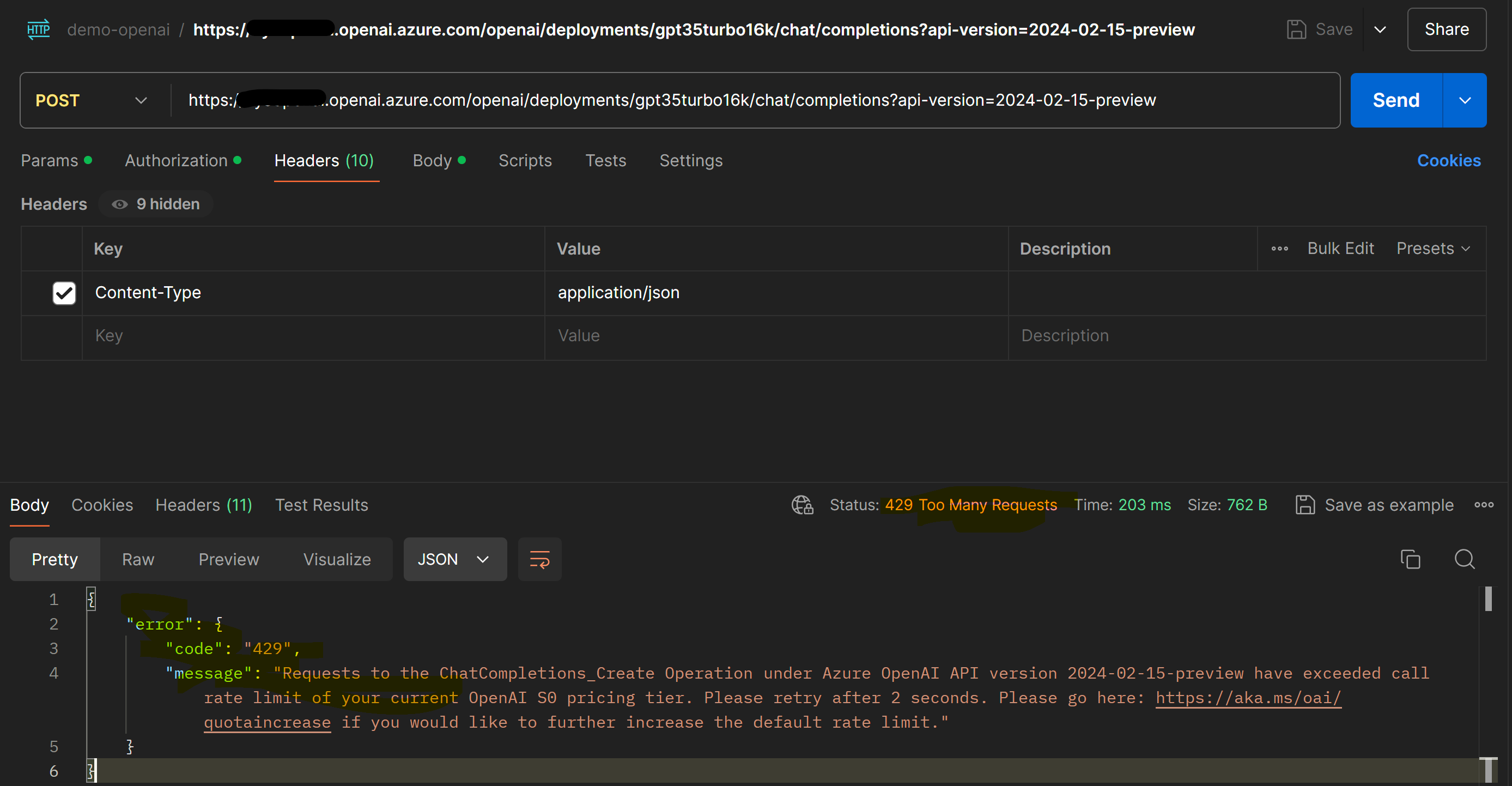

Induce Errors: Make the preferred OpenAI endpoint (by weight/priority) return errors in the 400-599 range. A stress test on that endpoint should produce HTTP 429 (Too Many Requests) errors.

Test APIM Response: Once the preferred endpoint is unresponsive, make a call via APIM to OpenAI and verify if the response is received from the second endpoint.
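Postman works for the stress test; as an alternative, this Python sketch sends many concurrent POST requests and tallies the status codes, so you can see when the preferred endpoint starts returning 429. The URL, the `api-key` header value, and the request body are placeholders you supply; `make_sender` and `stress` are hypothetical helpers.

```python
import urllib.error
import urllib.request
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def make_sender(url: str, headers: dict, body: bytes) -> Callable[[], int]:
    """Return a function that POSTs `body` to `url` once and returns the HTTP status."""
    def send() -> int:
        req = urllib.request.Request(url, data=body, headers=headers, method="POST")
        try:
            with urllib.request.urlopen(req, timeout=30) as resp:
                return resp.status
        except urllib.error.HTTPError as err:
            return err.code  # 429 and other 4xx/5xx responses raise HTTPError
        # Connection failures (URLError) are not caught and will propagate.
    return send


def stress(send: Callable[[], int], total: int, concurrency: int = 10) -> Counter:
    """Call `send` `total` times across a thread pool and count the status codes."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        statuses = list(pool.map(lambda _: send(), range(total)))
    return Counter(statuses)


# Example usage (placeholders, not executed here):
# sender = make_sender(
#     "https://openaiendpointname.openai.azure.com/openai/deployments/(deployment)/chat/completions?api-version=(api-version)",
#     {"api-key": "(key)", "Content-Type": "application/json"},
#     b'{"messages": [{"role": "user", "content": "ping"}]}',
# )
# print(stress(sender, total=200, concurrency=20))
```

Once 429 dominates the returned `Counter`, the endpoint is throttled and APIM should start routing around it.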

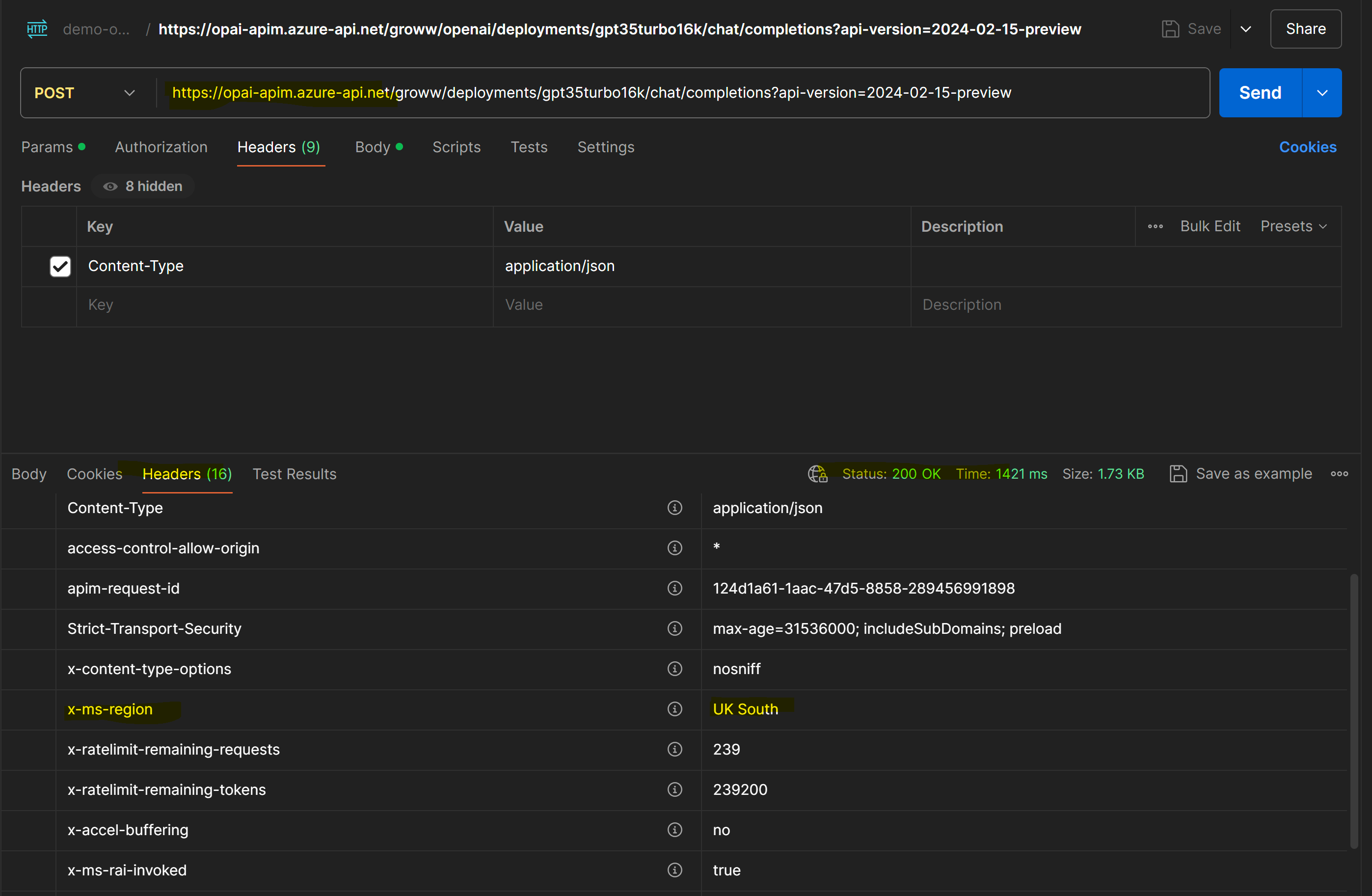

Example: In this demo, the preferred backend is the OpenAI endpoint in EastUS and the secondary backend is the OpenAI endpoint in UKSouth. The steps below stress the EastUS endpoint into a 429 state, confirm the failure with a direct call, and then confirm that APIM still answers through UKSouth.

Backend Endpoint 2: Backend endpoint 2, located in EastUS, has the higher weight. Perform a stress test on this endpoint using Postman.

Stress Test Results: The stress test should leave the EastUS OpenAI endpoint returning 429 errors.

Ad-Hoc Call Validation: Validate with an ad-hoc call to the EastUS OpenAI endpoint to confirm it is failing with a 429 error.


APIM Call Validation: Meanwhile, a POST request to APIM should still succeed, because it is handled by the secondary backend, the OpenAI endpoint in UKSouth.
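The two checks in this example, a direct call to EastUS that fails with 429 and a call through APIM that succeeds, can be combined into one sketch. Each callable is a hypothetical helper that performs a single request and returns the HTTP status code; the calls are interleaved so both observations cover the same time window.

```python
from typing import Callable


def check_failover(call_direct: Callable[[], int], call_apim: Callable[[], int],
                   attempts: int = 5) -> bool:
    """True if every direct call to the preferred endpoint returns 429
    while every call through APIM returns a 2xx status."""
    for _ in range(attempts):
        # Interleave the calls so both observations cover the same time window
        direct_status = call_direct()
        apim_status = call_apim()
        if direct_status != 429 or not 200 <= apim_status < 300:
            return False
    return True
```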

Conclusion:

In conclusion, the new GenAI gateway features in API Management can greatly improve the reliability and performance of Azure OpenAI services. Weighted or priority-based routing spreads the workload across endpoints, and the circuit breaker quickly redirects traffic away from a failing or throttled endpoint, so your services stay responsive under heavy load and your users get a smooth, reliable experience.

Appendix:

Intelligent Load Balancing with APIM for OpenAI: Weight-Based Routing - Microsoft Community Hub


Written by

Osama Shaikh


I have been working as an App/Infra Solution Architect at Microsoft for 5 years, helping a diverse set of customers across verticals (BFSI, ITES, Digital Native) in their journey to the cloud.