Day 89 - Mounting an AWS S3 Bucket on Amazon EC2 Linux Using S3FS 🚀

Nilkanth Mistry


Project-10 Overview 📋

Project Description

In this AWS mini project, you will learn how to mount an AWS S3 bucket on an Amazon EC2 Linux instance using S3FS. The project gives hands-on experience with three components: AWS S3, Amazon EC2, and S3FS.

Through practical implementation, you will gain valuable insights into securely integrating AWS services, managing data storage in S3, and leveraging S3FS to enable seamless access and interaction between EC2 instances and S3 buckets.

Hands-on Project: Mounting S3 Bucket on EC2 Linux using S3FS

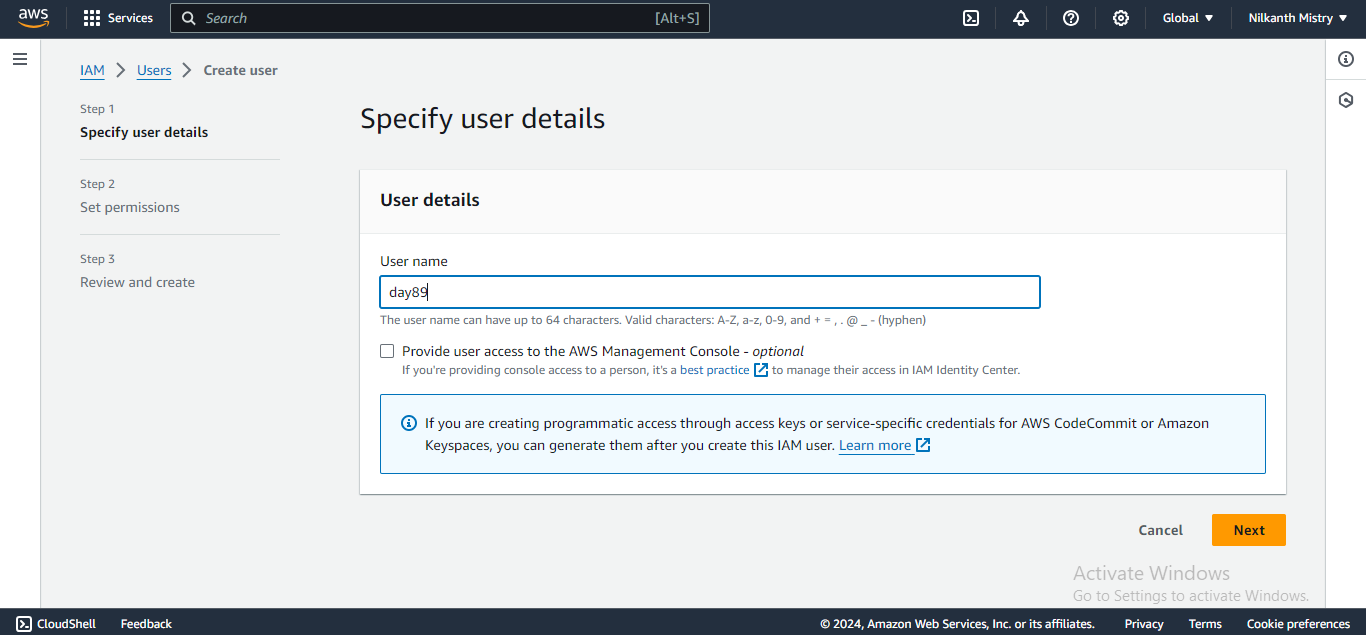

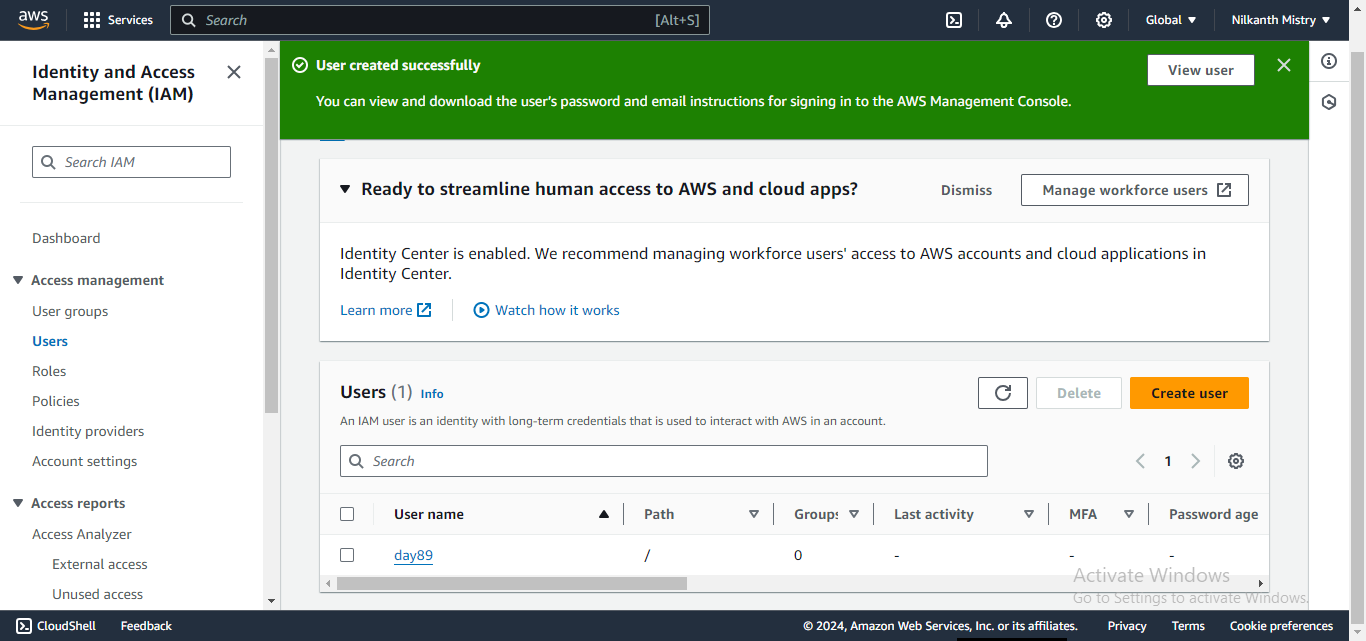

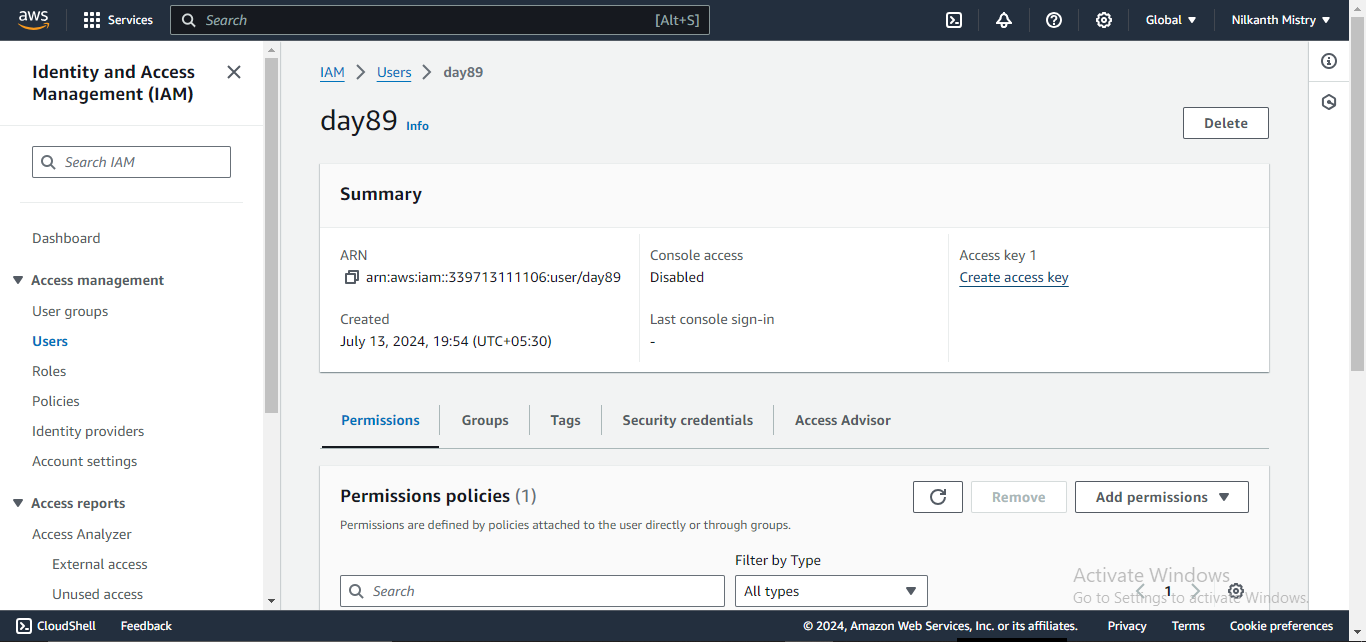

Step 1: Create a New IAM User

Begin by creating a new IAM user in the AWS console. Go to the IAM service, click on “Users,” and then “Add user.” Enter the name of the new user and proceed to the next step. 🧑‍💻

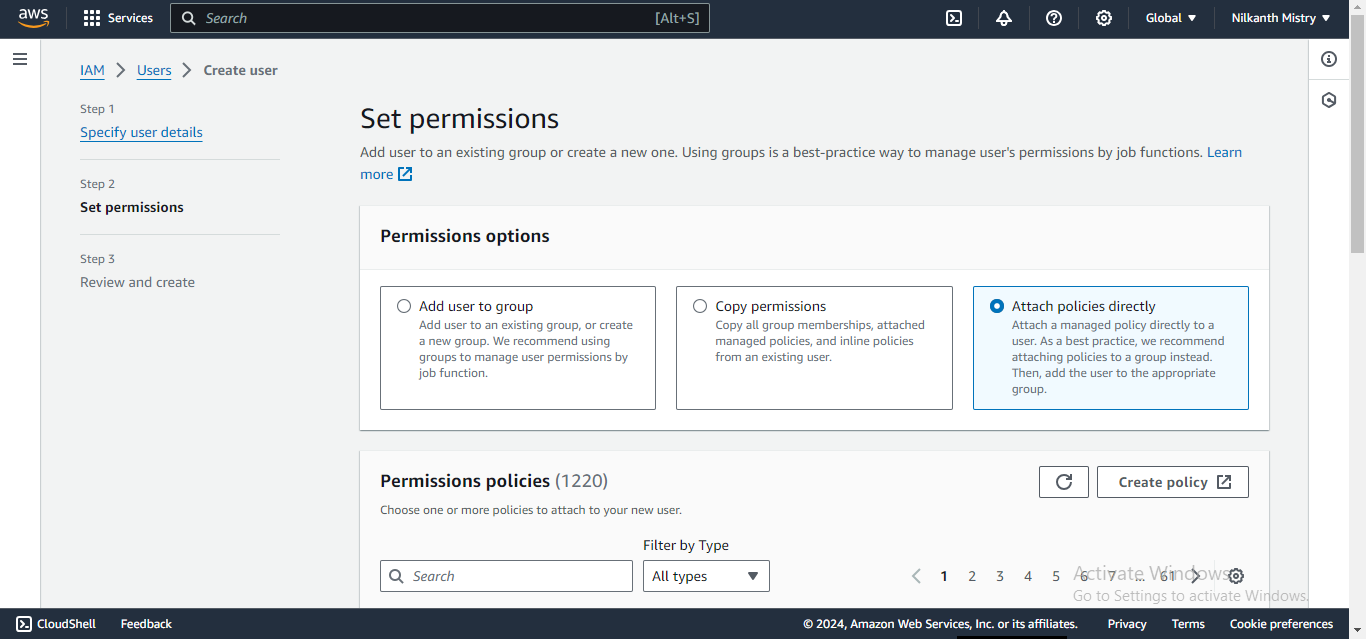

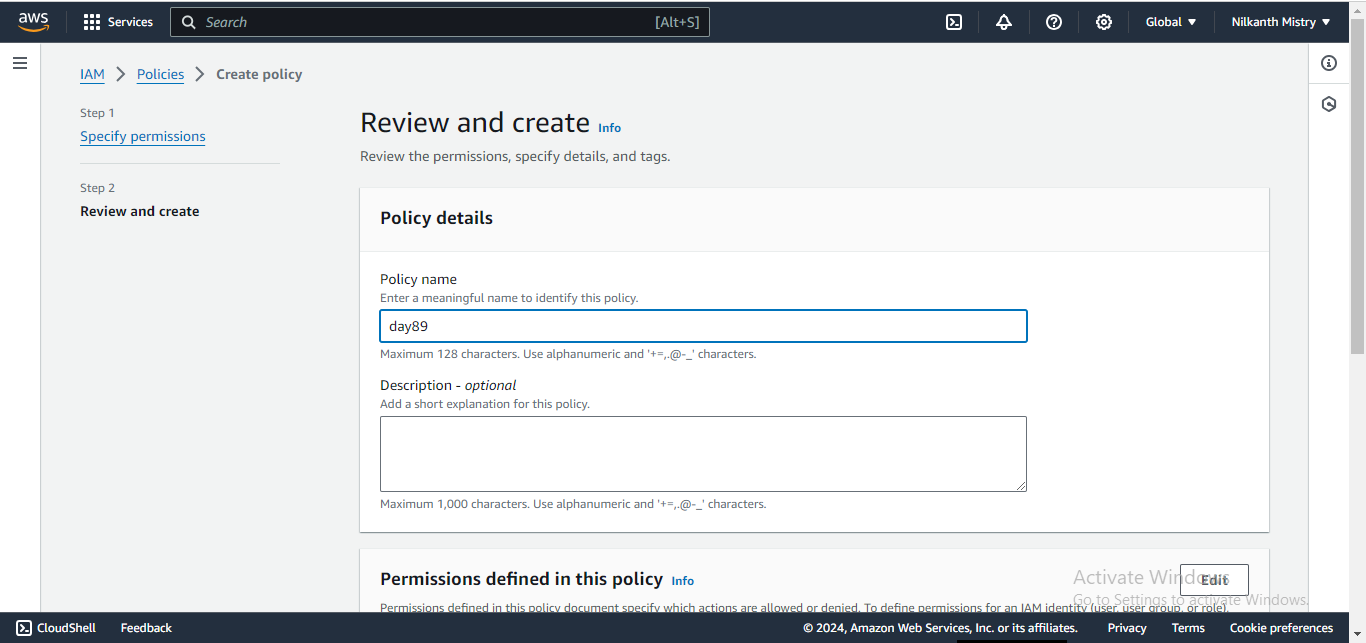

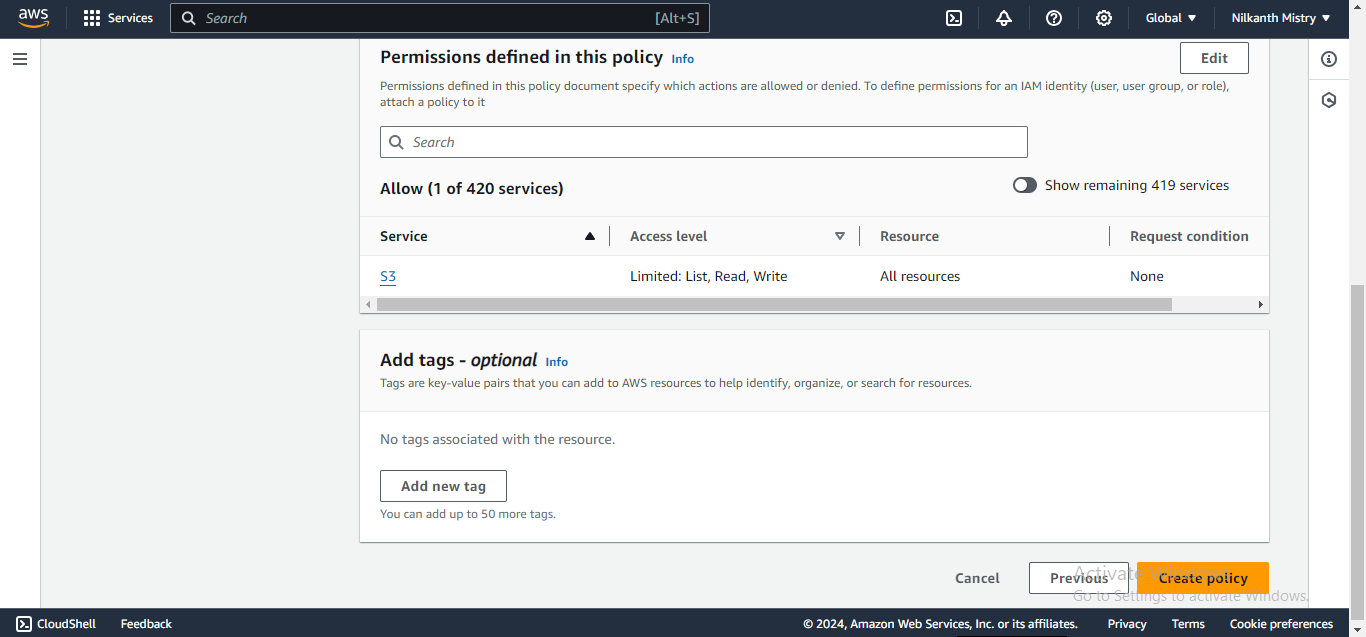

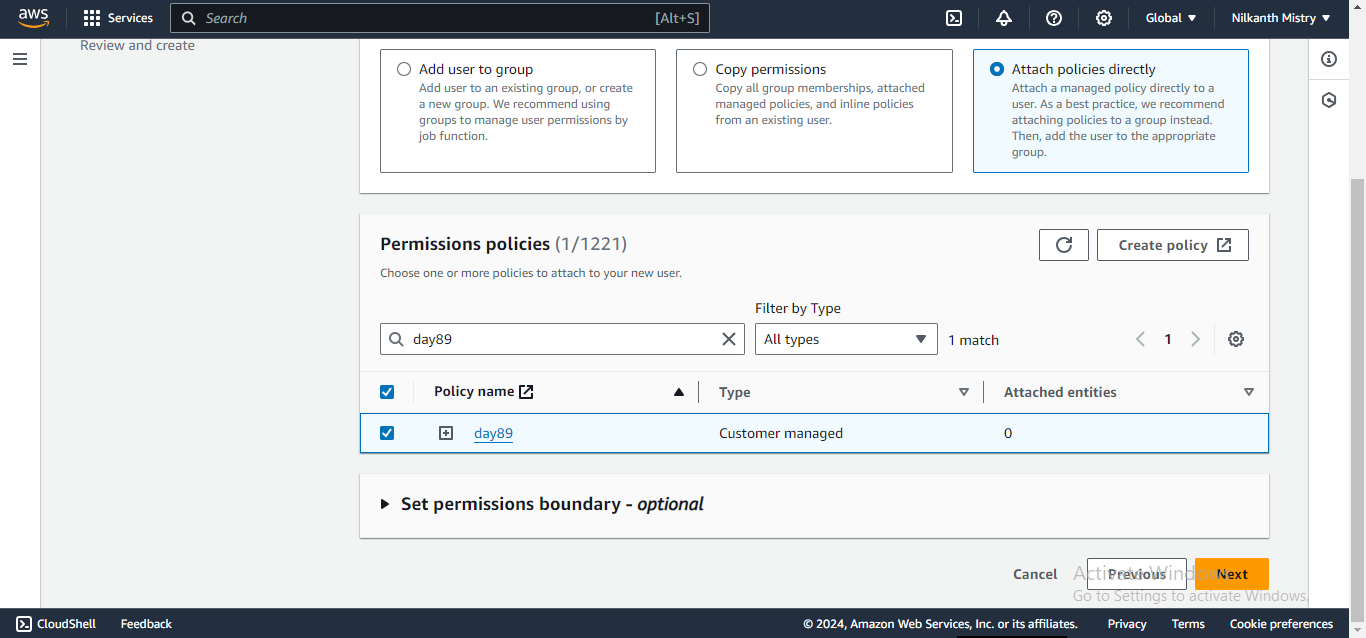

Step 2: Attach Policies to the User

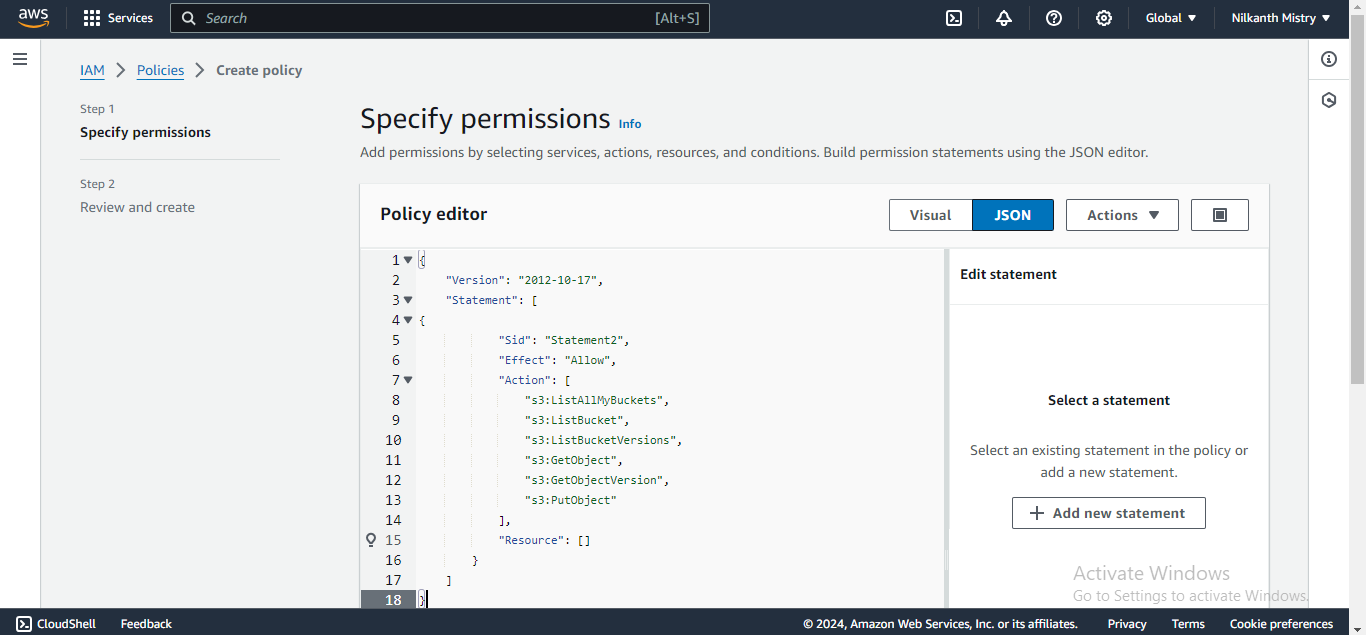

During user creation, select “Attach Policies directly” and click on “Create Policy.” Create a new policy with the settings mentioned below, focusing on S3-related actions:

Service: S3

Actions: ListAllMyBuckets, ListBucket, ListBucketVersions, GetObject, GetObjectVersion, PutObject

Resources: Specific

Bucket: Any

Object: Any 📑
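For reference, the settings above correspond roughly to a JSON policy like the one this sketch writes to a local file. The file name `s3fs-demo-policy.json` and the `Sid` values are assumptions for illustration, and the document produced by the console's visual editor may be laid out differently.

```shell
# Hypothetical file name; any path works.
POLICY_FILE="${POLICY_FILE:-s3fs-demo-policy.json}"

# The quoted heredoc delimiter keeps the shell from expanding anything in the JSON.
cat > "$POLICY_FILE" <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "ListBuckets",
      "Effect": "Allow",
      "Action": "s3:ListAllMyBuckets",
      "Resource": "*"
    },
    {
      "Sid": "BucketLevel",
      "Effect": "Allow",
      "Action": ["s3:ListBucket", "s3:ListBucketVersions"],
      "Resource": "arn:aws:s3:::*"
    },
    {
      "Sid": "ObjectLevel",
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:GetObjectVersion", "s3:PutObject"],
      "Resource": "arn:aws:s3:::*/*"
    }
  ]
}
EOF

echo "Wrote $POLICY_FILE"
```

If you prefer the CLI to the console, the same file could be passed to `aws iam create-policy --policy-name <name> --policy-document file://s3fs-demo-policy.json`. To limit access to a single bucket, replace the `*` in the two ARNs with that bucket's name.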

Step 3: Create IAM User and Attach Policy

After creating the policy, give it a suitable name and proceed to create the IAM user, attaching the newly created policy to the user. 🔑

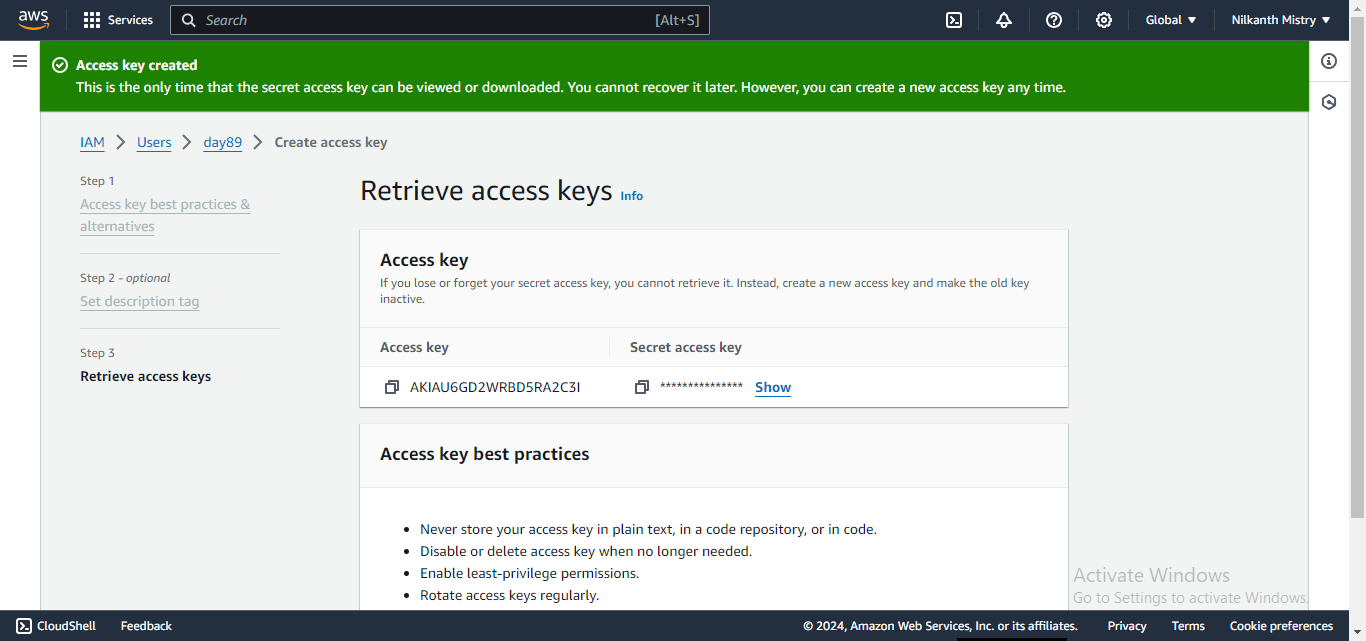

Step 4: Get Access Keys

Once the user is created, open the user's “Security credentials” tab and, under “Access keys,” click “Create access key.” Choose “Command Line Interface (CLI)” and copy the access key ID and secret access key. The secret access key is shown only once, so store it somewhere safe. 🗝️

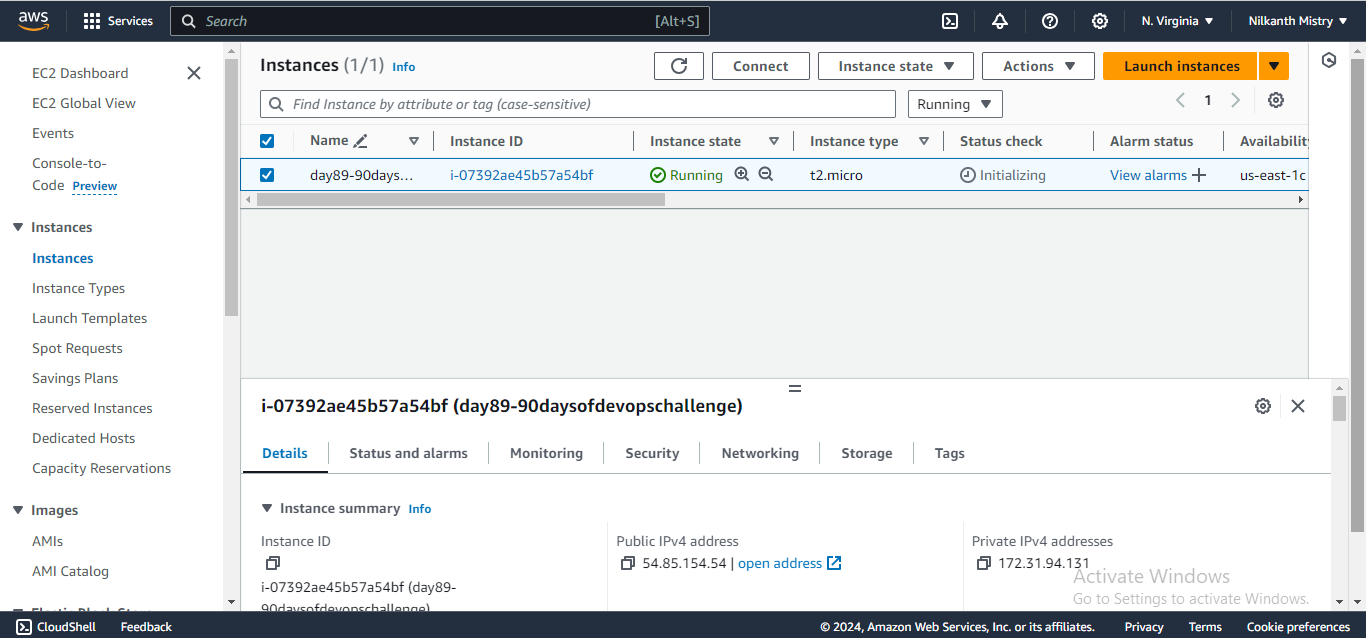

Step 5: Create EC2 Instance

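Create a new t2.micro instance on AWS EC2. The commands in the following steps assume an Ubuntu AMI, since they use apt and the /home/ubuntu directory. 🖥️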

Step 6: Install AWS CLI

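On the EC2 instance, install AWS CLI v2 with the official installer for 64-bit Linux. The unzip utility must be present; on Ubuntu, install it first with sudo apt install unzip if needed. 📦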

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

unzip awscliv2.zip

sudo ./aws/install

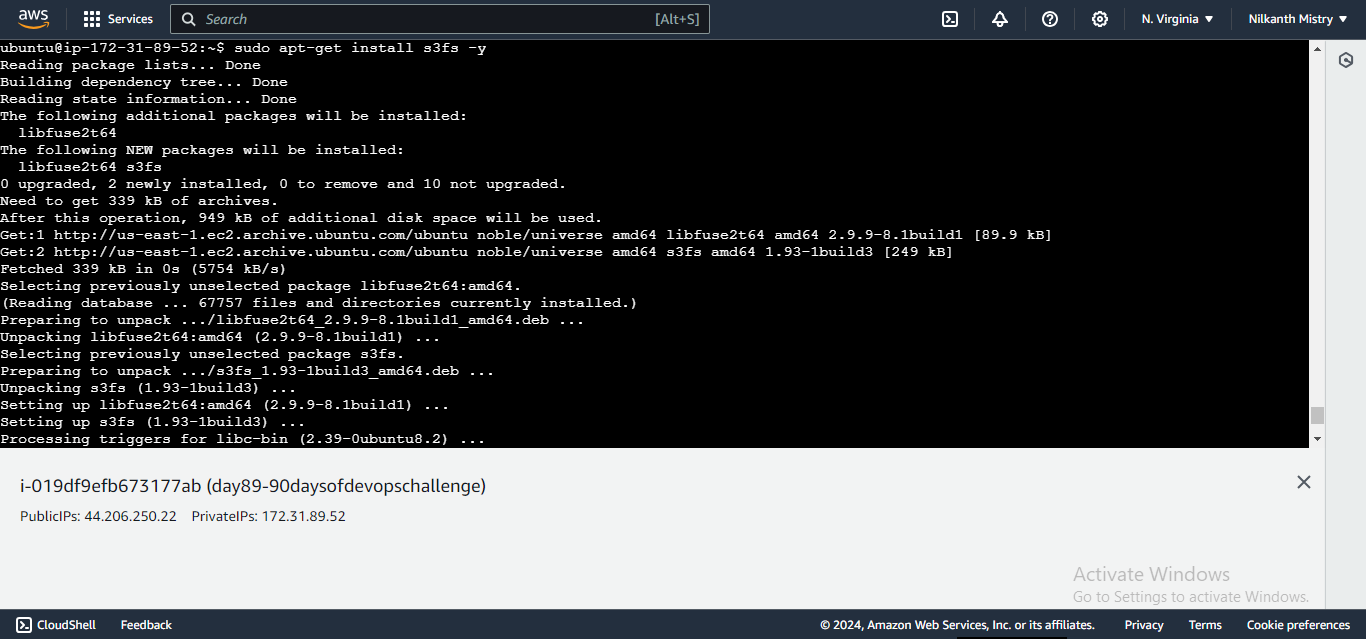

Step 7: Install S3FS

After installing AWS CLI, proceed to install S3FS on the EC2 instance. 🌐

sudo add-apt-repository ppa:ltfs/ppa

sudo apt-get update

sudo apt install s3fs -y
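Before moving on, it can help to confirm that both tools actually landed on the PATH. The helper below is a small sketch; the name `require_cmds` is hypothetical and not part of either tool.

```shell
# Report any of the given commands that are not on PATH.
# Returns 0 when all are present, 1 otherwise.
require_cmds() {
  local missing=0
  local cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

On the instance you would call it as `require_cmds aws s3fs && echo "ready"`. Running `aws --version` and `s3fs --version` afterwards shows which versions were installed.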

Step 8: Create a Folder and Add Files

Create a folder named “bucket” in /home/ubuntu on the EC2 instance and add 2–3 files to it. Run the commands below from /home/ubuntu. 📂

mkdir bucket

cd bucket

touch test1.txt test2.txt test3.txt

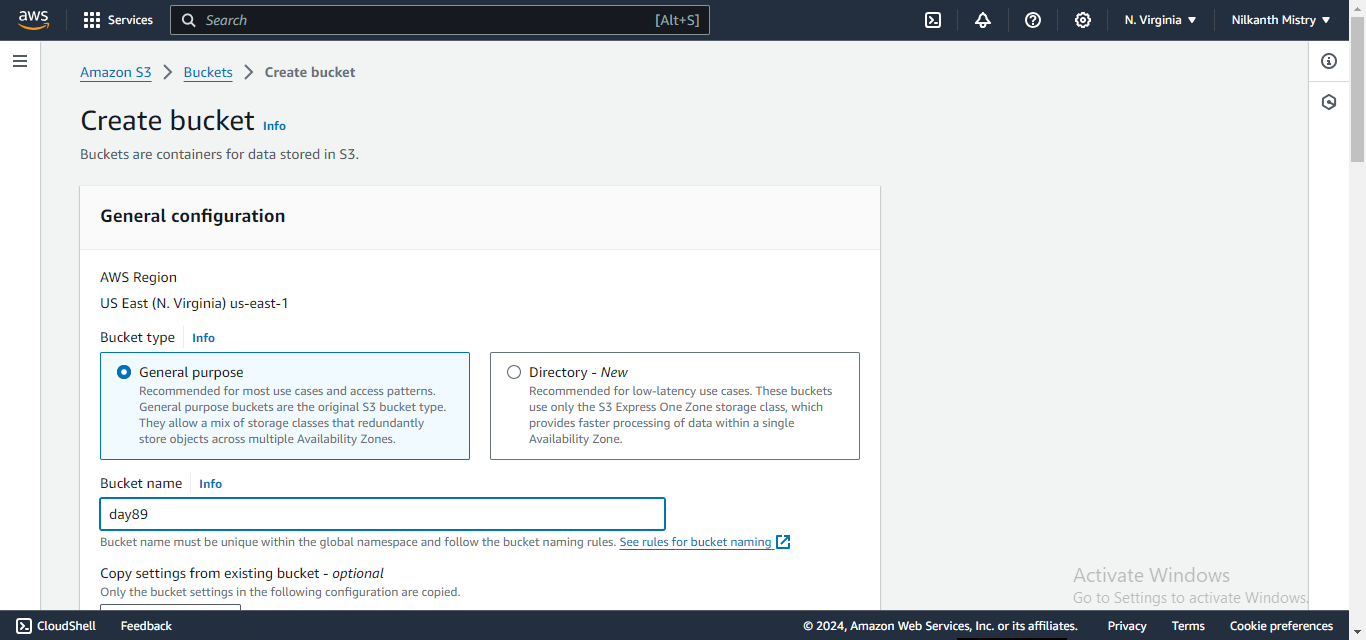

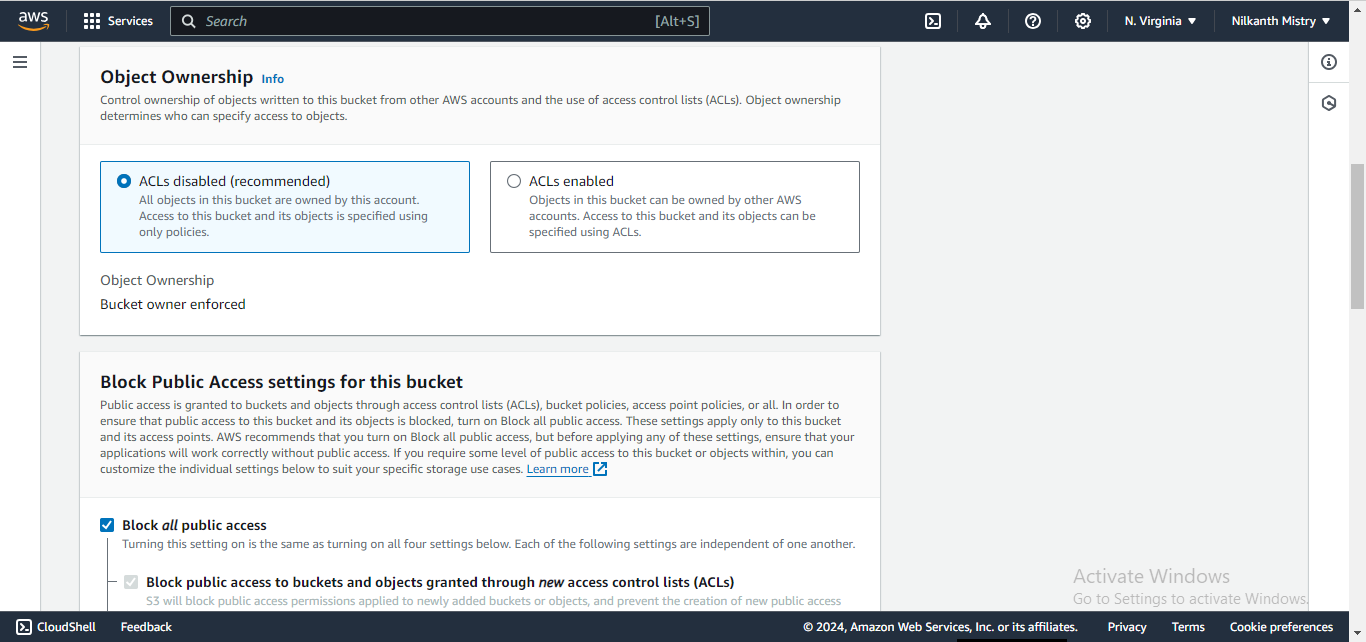

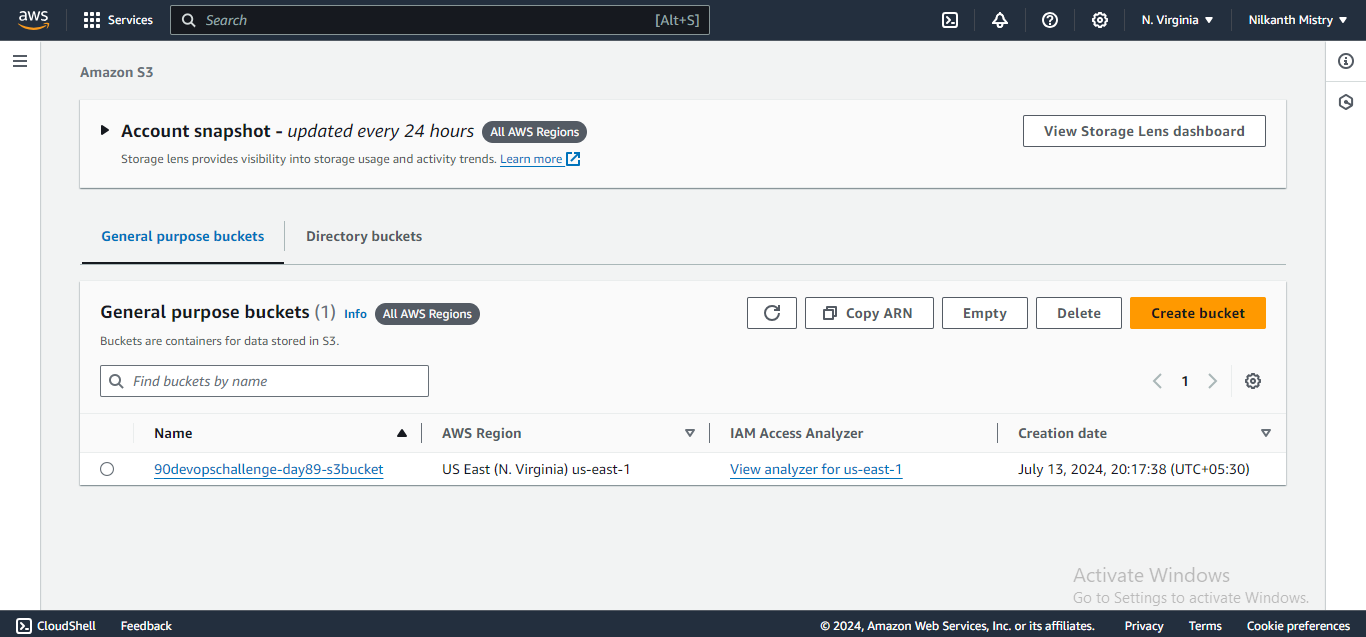

Step 9: Create S3 Bucket

In the AWS console, create an S3 bucket with a suitable name. 🪣

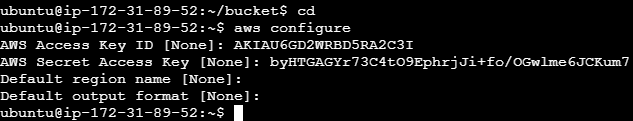

Step 10: Configure AWS CLI

On the EC2 instance, configure the AWS CLI by running aws configure and entering the access key and secret key obtained in Step 4. The command also prompts for a default region and output format. 🛠️

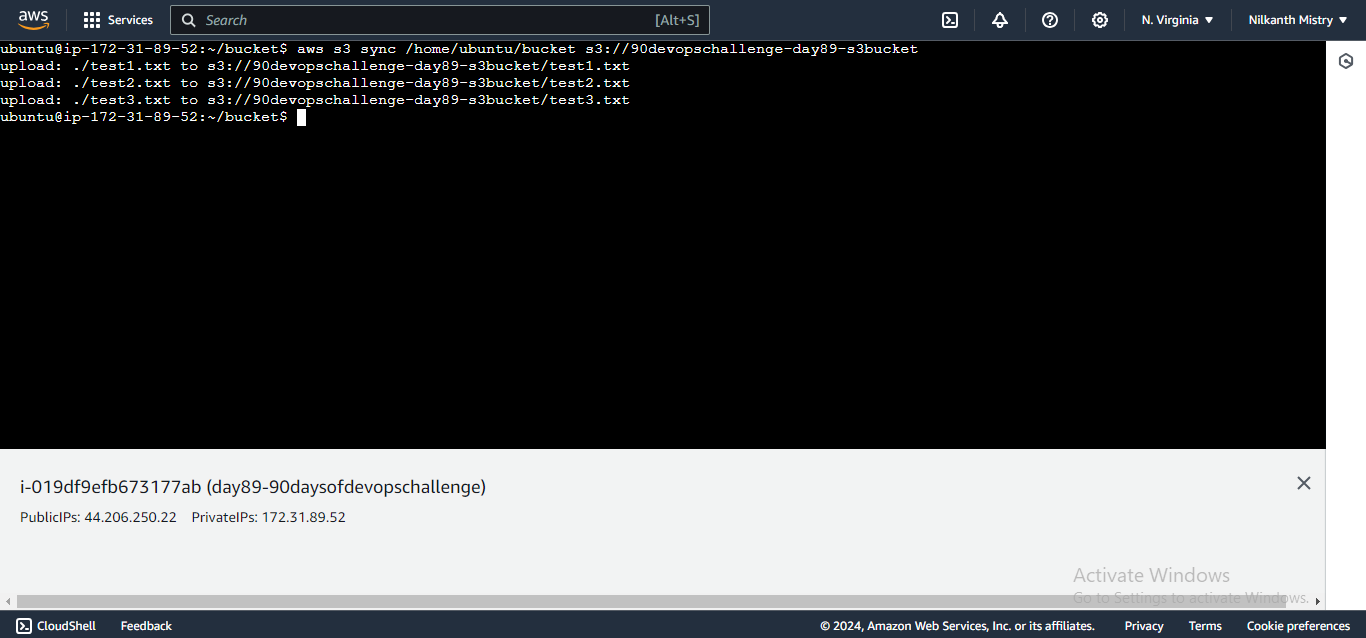
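The keys entered above are stored for the AWS CLI. s3fs can also read credentials from its own password file, by default `~/.passwd-s3fs`, which holds a single `ACCESS_KEY_ID:SECRET_ACCESS_KEY` line and must not be readable by other users. A minimal sketch of creating that file follows; the function name `write_s3fs_passwd` is hypothetical, and the values in the usage line are placeholders.

```shell
# Write an s3fs password file in the "ACCESS_KEY_ID:SECRET_ACCESS_KEY" format.
# Arguments: access key ID, secret access key, optional target path.
write_s3fs_passwd() {
  local key_id="$1"
  local secret="$2"
  local target="${3:-$HOME/.passwd-s3fs}"

  # umask 077 makes a newly created file private from the start;
  # chmod 600 also covers a file that already existed with looser permissions.
  ( umask 077 && printf '%s:%s\n' "$key_id" "$secret" > "$target" )
  chmod 600 "$target"
}
```

Usage with placeholder values: `write_s3fs_passwd YOUR_ACCESS_KEY_ID YOUR_SECRET_ACCESS_KEY`. Avoid typing real secrets where they end up in shell history on a shared machine.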

Step 11: Sync Files to S3 Bucket

Run the following command to sync the files from the folder on the EC2 instance to the S3 bucket. Replace devopschallenge-day89-s3bucket with the name of your own bucket.

aws s3 sync /home/ubuntu/bucket s3://devopschallenge-day89-s3bucket

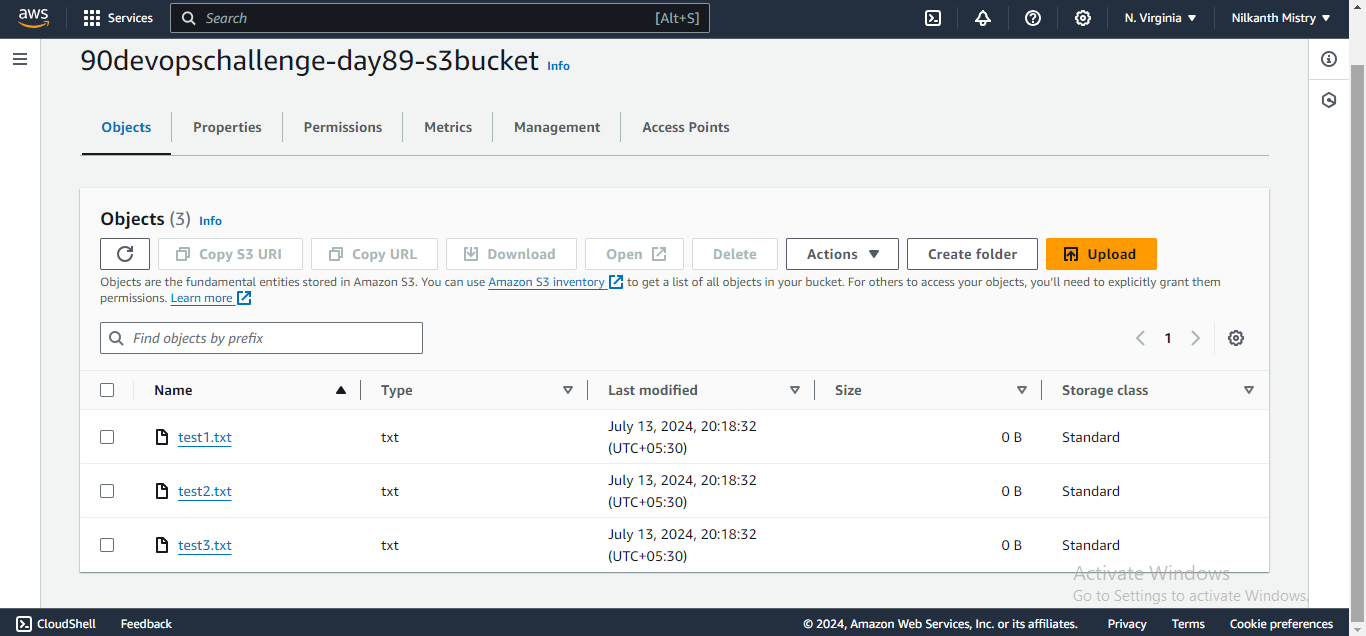
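Before copying anything, `aws s3 sync` can preview what it would upload when given the `--dryrun` flag. The wrapper below is a sketch that previews and then performs the sync; `sync_to_bucket` is a hypothetical helper name.

```shell
# Preview, then run, a one-way sync from a local directory to a bucket.
# Arguments: local directory, bucket name (without the s3:// prefix).
sync_to_bucket() {
  local src="$1"
  local bucket="$2"

  if [ ! -d "$src" ]; then
    echo "not a directory: $src" >&2
    return 1
  fi

  # --dryrun prints the planned operations without performing them.
  aws s3 sync "$src" "s3://$bucket" --dryrun
  aws s3 sync "$src" "s3://$bucket"
}
```

With the names used in this post, the call would be `sync_to_bucket /home/ubuntu/bucket devopschallenge-day89-s3bucket`. Note that sync only uploads new or changed files; it does not delete objects from the bucket unless `--delete` is added.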

Step 12: Verify the Sync

Refresh the objects inside the S3 bucket to confirm that all the files from the EC2 instance are successfully uploaded to the S3 bucket. ✔️
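The steps so far copy files with `aws s3 sync`; they do not yet mount the bucket as a filesystem. A minimal sketch of the mount itself follows, assuming s3fs is installed and a password file exists at `~/.passwd-s3fs` (mode 600, containing `ACCESS_KEY_ID:SECRET_ACCESS_KEY`). The helper name `mount_bucket` and the mount point `/home/ubuntu/s3-mount` used in the usage line are hypothetical.

```shell
# Mount an S3 bucket at a local directory with s3fs.
# Arguments: bucket name, mount point, optional password file.
mount_bucket() {
  local bucket="$1"
  local mount_point="$2"
  local passwd_file="${3:-$HOME/.passwd-s3fs}"

  # The mount point must exist before s3fs can attach to it.
  mkdir -p "$mount_point"
  s3fs "$bucket" "$mount_point" -o passwd_file="$passwd_file"
}
```

For example, `mount_bucket devopschallenge-day89-s3bucket /home/ubuntu/s3-mount && ls /home/ubuntu/s3-mount` should list the synced test files, and `fusermount -u /home/ubuntu/s3-mount` unmounts the bucket again. Because the policy from Step 2 does not include `s3:DeleteObject`, deleting files through the mount would likely be denied.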

Congratulations on completing Day 89 of the #90DaysOfDevOps Challenge! You have successfully mounted an AWS S3 bucket on an EC2 Linux instance using S3FS, gaining valuable knowledge about AWS, S3, EC2, and S3FS in the process.

I hope you found this guide helpful. If you have, don’t forget to follow and click the clap 👏 button below to show your support 😄. Subscribe to my blogs so that you won’t miss any future posts.

If you have any questions or feedback, feel free to leave a comment below. Thanks for reading and have an amazing day ahead! 🚀
