Large CSV Processing using PHP #WeekendBuild

Didik Tri Susanto

Didik Tri Susanto

Previously on my first #weekendbuild series, we already know how to process large CSV data in Go. Now as comparison, let's do the same thing use PHP. The idea and the output will be the same as first experiment in Go, no 3rd party library or dependencies will be used.

Reading CSV with PHP

Same concept for processing the CSV, we will load or open the given file and parse the csv data. Fortunately, PHP has standard lib for this called fgetcsv and we will use that function.

Load & Extract Data

Now opening the csv file in PHP is quite easy and can be achieved only using standard lib fopen

$f = fopen("../data/customers-1000000.csv", "r");

if ($f !== false) {

// process the csv

} else {

echo "Error opening file\n";

}

fopen received a file path string and "r" argument is a "read only" mode since we only need to read the files. This function will return resources when it successfully loaded or boolean false when it failed, so simple if else for error handling would do the job.

For extracting the rows, use fgetcsv and extract the row to an defined array to hold the value.

$records = [];

while (($csv = fgetcsv($file)) !== false) {

$num = count($csv);

$record = [];

for ($c=0; $c < $num; $c++) {

$record[] = $csv[$c];

}

$records[] = $record;

}

This would work but when I tried to load 1 million rows, it got out of memory error:

PHP Fatal error: Allowed memory size of 134217728 bytes exhausted (tried to allocate 20480 bytes)

Not surprised at all and It's kind of expected because php array could took many resources especially dealing with the large data. I checked php.ini it stated that current memory_limit setting was only 128M.

Workaround on this is temporary increase the memory_limit on the runtime temporarily.

<?php

ini_set("memory_limit", "512MB")

So when we run the script, we also tell PHP to set memory limit to 512 MB before executing the rest of the process. Other way we could also change "memory_limit" in php.ini as for permanent change so all of php executions will be use that as default limit.

However this is not a good idea because increasing memory limit is indicating our process is not efficient and we have to avoid it.

Generators

Instead of building an array, PHP generators might be useful.

A generator allows you to write code that uses foreach to iterate over a set of data without needing to build an array in memory, which may cause you to exceed a memory limit, or require a considerable amount of processing time to generate. Instead, you can write a generator function, which is the same as a normal function, except that instead of returning once, a generator can yield as many times as it needs to in order to provide the values to be iterated over.

How we do that?

We can create a function to build the data using generators and called it later in our main function.

$f = fopen("../data/customers-1000000.csv", "r");

if ($f !== false) {

$records = extractCsv($f);

} else {

echo "Error opening file\n";

}

function extractCsv($file) {

while (($csv = fgetcsv($file)) !== FALSE) {

$num = count($csv);

$record = [];

for ($c=0; $c < $num; $c++) {

$record[] = $csv[$c];

}

yield $record;

}

}

Thats it!

Mapping and Sorting Data

Now since we're able to iterate the data we will mapping the customer data based on city and total customers. We could initiate an array to hold the map values, something like cityMap["jakarta"] = 100

$cityMap = [];

$rows = 0;

$records = extractCsv($f); // extract csv records and load it to iterator

foreach ($records as $key => $record) {

$rows++;

if ($key == 0) {

continue; // skip header row

}

// create array map for city and total customers

if (array_key_exists($record[6], $cityMap)) {

$cityMap[$record[6]]++;

} else {

$cityMap[$record[6]] = 1;

}

}

Previously in Go version we used bubble sort algorithm to manually sort the data from map, PHP has built in sort function called arsort to sort an array value in descending order but still maintain the keys correlation.

arsort($cityMap);

echo "sorted from most customers in the city: " . json_encode($cityMap) . PHP_EOL;

Getting Memory Usage and Processing Time

We need to initiate the memory usage and a timer before executing the main process and later we calculate it at end of the process.

<?php

// start profiling

$startMemory = memory_get_usage();

$timeStart = microtime(true);

// starting main processes

// ...

// end of the process

$timeEnd = microtime(true);

$endMemory = memory_get_usage();

echo sprintf("processing time: %f (s)", ($timeEnd - $timeStart)) . PHP_EOL;

echo sprintf("memory usage: %f (Mb)", ($endMemory - $startMemory) / 1024 / 1024) . PHP_EOL;

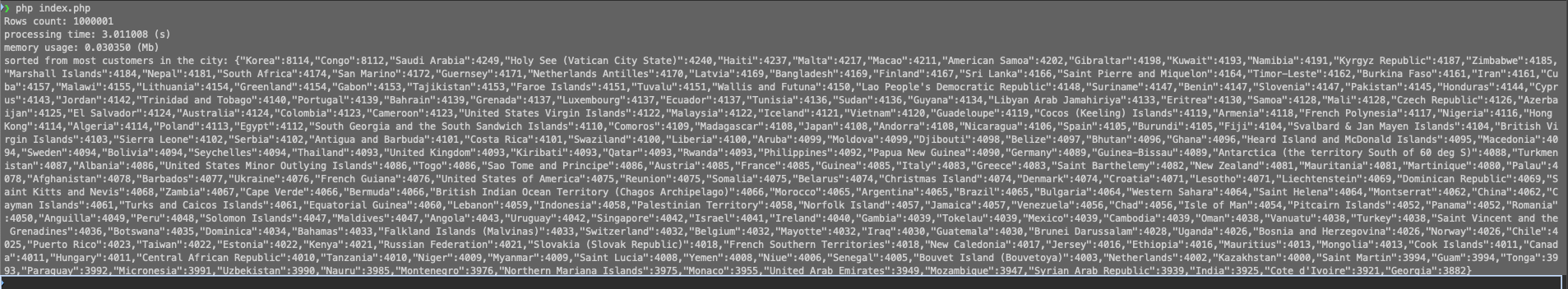

The Result

Running php application is simply to run with

php index.php

The result for loading and processing 1 million rows of csv was:

Processing time: ~2-3s

Memory usage: 0.030350 (Mb)

Not bad. Performance wise, Go is better since it's a compiled language meanwhile PHP is not.

Full source code can be checked on my Github: https://github.com/didikz/csv-processing/tree/main/php

Lesson Learned

Handling large data in PHP is quite tricky and we need to be aware about the memory usage especially when dealing with the arrays. We could increase memory_limit as "urgent" solution especially when there's an issue in production but don't do that. Optimize the application instead.

Subscribe to my newsletter

Read articles from Didik Tri Susanto directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Didik Tri Susanto

Didik Tri Susanto

Hi, I am a cat lover and software engineer from Malang, mostly doing PHP and stuff. Software Engineer Live in Malang, Indonesia Visit my resume and portfolios at didiktrisusanto.dev See you, folks!