Microservice Best Practices: Scale your Java microservices using virtual threads & async programming

TECHcommunity_SAG

Overview

This article is part of the Microservices Best Practices series. The goal of this Tech Community article series is to address the need for Best Practices for Microservices Development.

One major pattern for a Cumulocity IoT microservice is the server-side agent (check out the Getting Started guide), which should handle thousands of devices at the same time. Most likely the microservice does not communicate with each device all the time. Instead, it offers a client or endpoints that receive the updates each device pushes at an interval. The logic of such a microservice can be roughly described as:

Process incoming message → transform it into the c8y domain model → send it to a Cumulocity tenant the device is registered to.

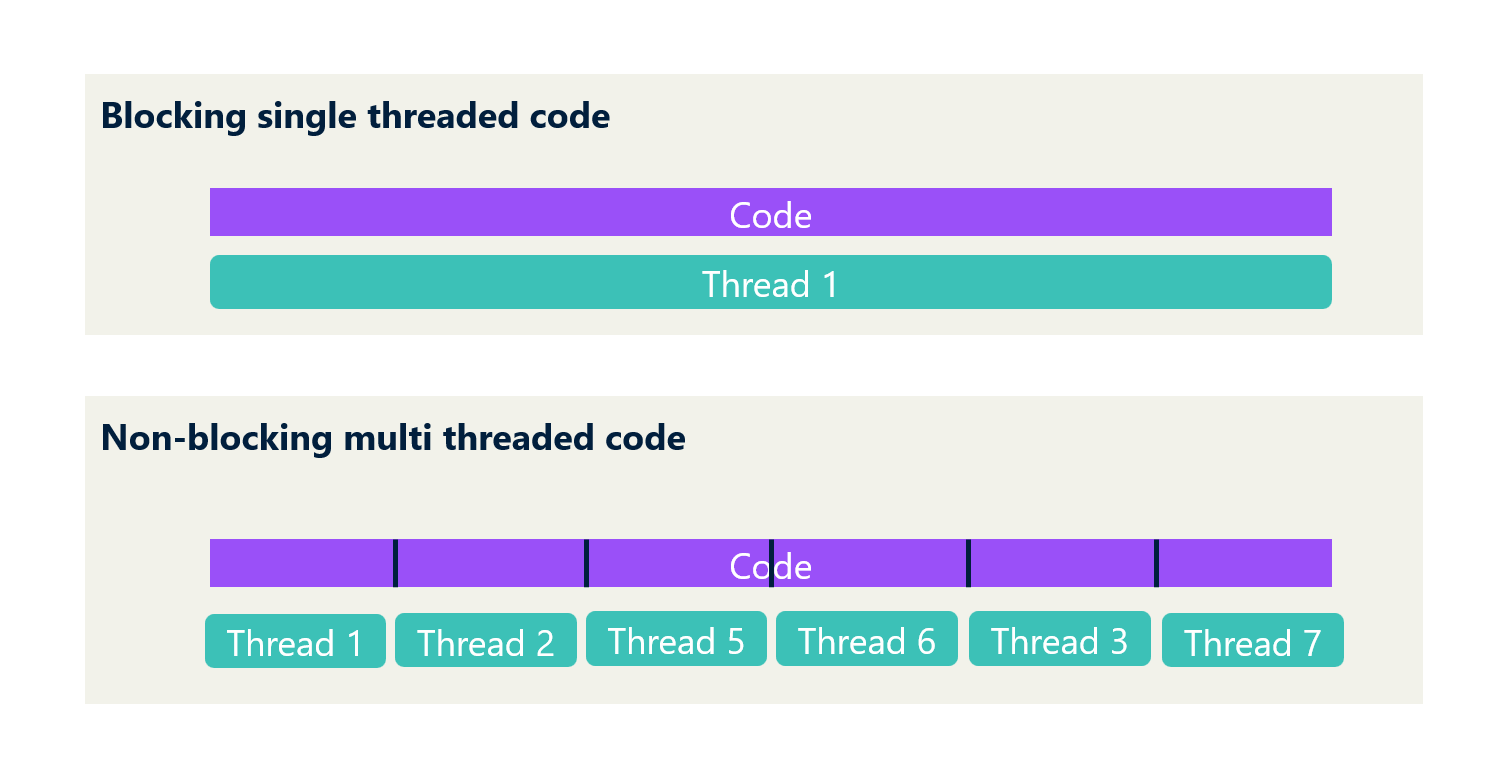

If you implement this logic in a single blocking thread, that thread is not available for other devices during that time, so your whole microservice only scales with the maximum number of available threads. Luckily there is a solution for that: asynchronous programming, which tries to parallelize the logic as much as possible.

The main goal of such a microservice is to achieve a maximum throughput of concurrent tasks so it can handle thousands of devices in parallel just with a single instance.

But first, let’s find out how threading works in Java applications.

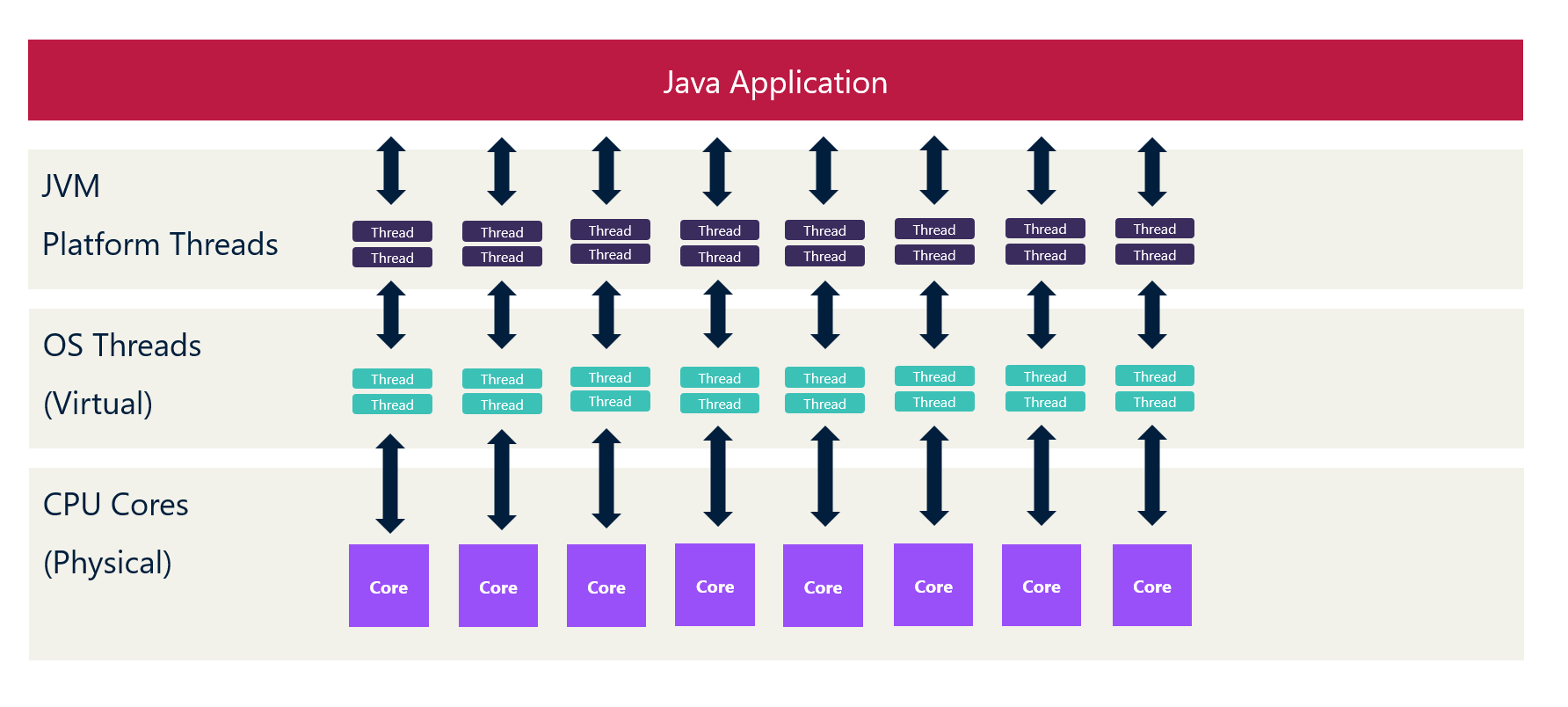

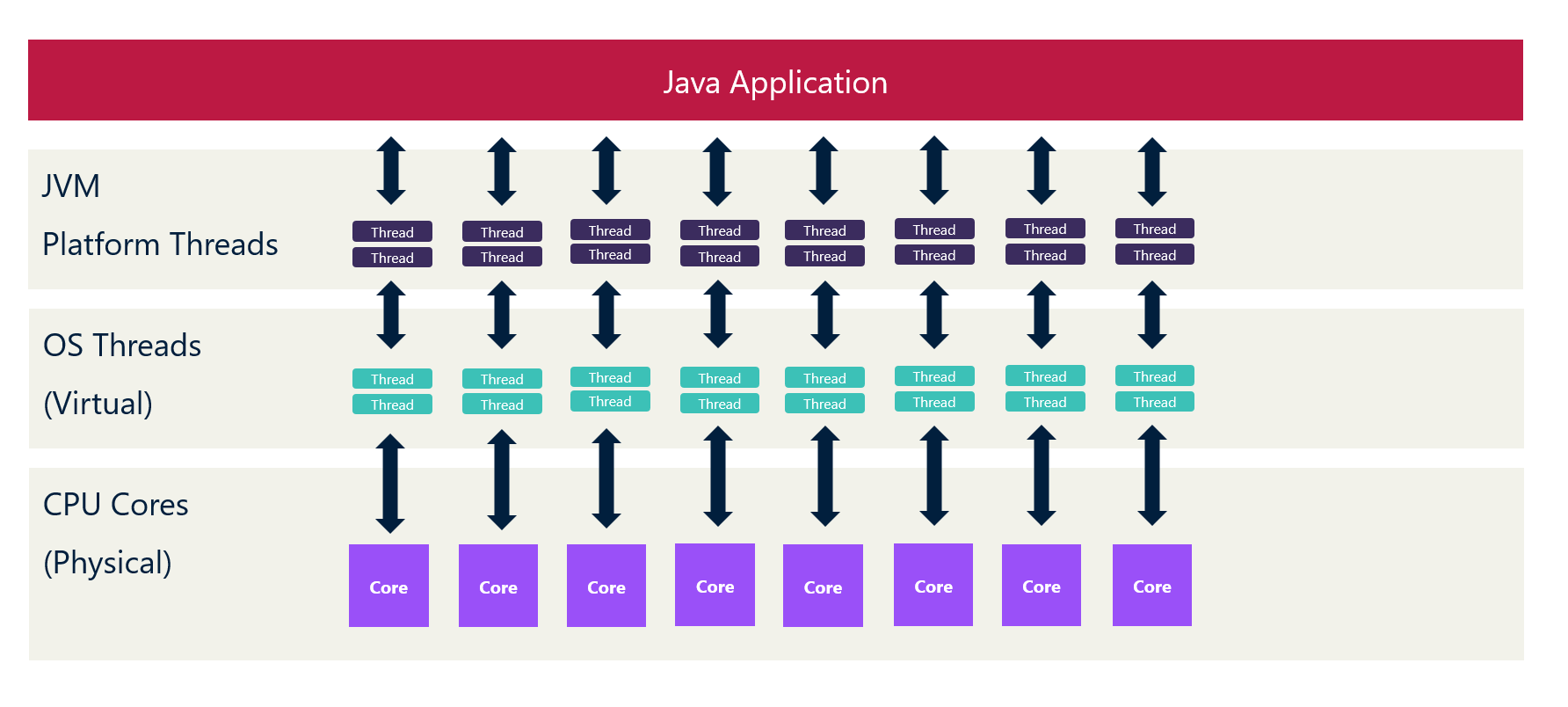

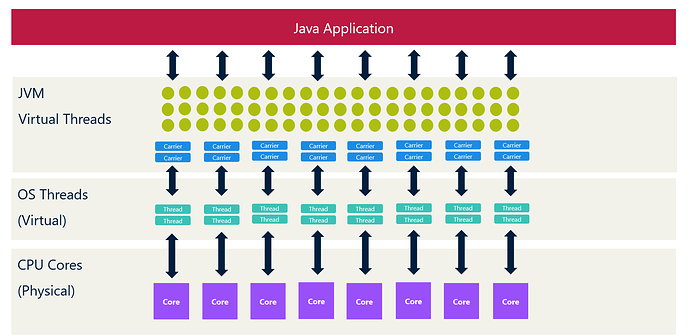

About Cores & Threads

As you might know, your CPU has a limited number of cores. Modern CPUs have multiple cores; in a desktop environment, 8 cores and 16 threads are typical. The main difference is that cores are physical units, while (hardware) threads are virtual constructs that allow one physical core to work on more than one task at a time. In our example, that is 2 threads per physical core.

The operating system (OS) schedules its own threads, the so-called OS threads, onto these hardware threads. It can create far more OS threads than the CPU has hardware threads, but in our example at most 16 of them can actually execute at the same instant.

The Java Virtual Machine (JVM) uses OS threads in a 1:1 relation and makes them available to applications as so-called platform threads. As a programmer, you can implement code to be processed in just one thread (single-threaded). After the code execution is completed, the JVM releases the thread and other code can be executed. In the worst case, all available threads are busy and new tasks have to wait until a thread is released before they can be executed.
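To make the "all threads are busy" case tangible, here is a minimal sketch. The class name `BusyThreadsDemo`, the pool size and the 50 ms sleep are assumptions chosen for illustration; the sleep merely stands in for a blocking call. It counts how many tasks ever run at the same time on a small pool of platform threads.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class BusyThreadsDemo {

    // Runs `tasks` blocking tasks on a pool of `poolSize` platform threads and
    // returns the highest number of tasks that were running at the same time.
    static int maxConcurrentTasks(int poolSize, int tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        AtomicInteger running = new AtomicInteger();
        AtomicInteger maxRunning = new AtomicInteger();
        for (int i = 0; i < tasks; i++) {
            pool.submit(() -> {
                int now = running.incrementAndGet();
                maxRunning.accumulateAndGet(now, Math::max);
                try {
                    Thread.sleep(50); // stands in for a blocking call (assumption)
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    running.decrementAndGet();
                }
            });
        }
        pool.shutdown(); // no new tasks; queued tasks still run
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return maxRunning.get();
    }

    public static void main(String[] args) {
        System.out.println("Hardware threads: " + Runtime.getRuntime().availableProcessors());
        System.out.println("Max concurrent tasks: " + maxConcurrentTasks(2, 6));
    }
}
```

With a pool of 2 threads, the remaining tasks sit in the queue until one of the two threads is released, no matter how many tasks were submitted.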

As a developer, you can optimize this by writing non-blocking code that is executed in parallel by multiple threads and not blocking one dedicated thread to execute your code sequentially. This is called asynchronous programming.

Async Programming with Java

Asynchronous programming in Java largely arrived with Java 8 back in 2014. Even before that, Java 5 introduced a basic Future interface in combination with the Executor Service, which allowed the first asynchronous code by “submitting” tasks that return a “future”.

Executor Service

The Executor Service is a service that lets you run tasks asynchronously. It is very limited in regard to flexibility and chaining tasks.

ExecutorService executor = Executors.newCachedThreadPool();

executor.submit(() -> {

System.out.println("Do something async!");

});

Callable<String> callableTask = () -> {

return "Result of async Task";

};

Future<String> exFuture = executor.submit(callableTask);

System.out.println(exFuture.get());

You can define Runnable and Callable tasks and submit them to the executor. A basic Future is returned, which lets you block the current thread with .get() to retrieve the result for further processing.
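The snippet above leaves out exception handling and shutdown. A more complete sketch could look like the following; the class `ExecutorServiceDemo`, the method `processAll` and the pool size of 4 are assumptions for illustration and not part of any SDK.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ExecutorServiceDemo {

    // Submits one Callable per device id and collects the results in submission order.
    static List<String> processAll(List<String> deviceIds) {
        ExecutorService executor = Executors.newFixedThreadPool(4);
        try {
            List<Future<String>> futures = new ArrayList<>();
            for (String id : deviceIds) {
                Callable<String> task = () -> "processed " + id;
                futures.add(executor.submit(task));
            }
            List<String> results = new ArrayList<>();
            for (Future<String> future : futures) {
                results.add(future.get()); // blocks the calling thread until the task is done
            }
            return results;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            executor.shutdown(); // otherwise the pool threads can keep the JVM alive
        }
    }

    public static void main(String[] args) {
        System.out.println(processAll(List.of("device-1", "device-2")));
    }
}
```

Note that every get() call still blocks the calling thread, which is exactly the limitation the next section addresses.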

Completable futures

With Java 8, completable futures were introduced, which give much more flexibility in how to execute code asynchronously. For example, CompletableFuture offers methods like supplyAsync, runAsync, and thenApplyAsync to easily execute any code block asynchronously. If you don’t provide an explicit executor, all asynchronous code is executed in the ForkJoinPool.commonPool().

CompletableFuture<Void> future = CompletableFuture.runAsync(() -> {

System.out.println("Do something async!");

});

In the example above we just use runAsync to execute some logic asynchronously. We can also get a return value if necessary by using supplyAsync:

CompletableFuture<String> futureString = CompletableFuture.supplyAsync(() -> {

return "Result of async processing";

});

futureString.join();

Here we don’t get the string back but a completable future of type string. With join() (or get()) we can force the current thread to block and wait until the async processing is completed, but there is a better approach. We can chain operations:

futureString.thenApply(result -> {

return result.toUpperCase();

}).thenAccept(upperCaseString -> {

System.out.println(upperCaseString);

});

After the initial future is completed, one operation converts the result to upper case and another one prints it. That is one way to do it. You can also use the methods complete() and completeExceptionally() to define in your own code when the future should be completed.

CompletableFuture<String> future1 = new CompletableFuture<>();

CompletableFuture.runAsync(() -> {

future1.complete("Operation is finished!");

});
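As a sketch of how complete() and completeExceptionally() can work together, the following example completes a future either with a value or with an error and lets the caller recover via exceptionally(). The class `ManualCompletionDemo` and its methods are hypothetical names used only for this illustration.

```java
import java.util.concurrent.CompletableFuture;

class ManualCompletionDemo {

    // Hypothetical parser: completes the future with a value or with the parsing error.
    static CompletableFuture<Integer> parseAsync(String raw) {
        CompletableFuture<Integer> result = new CompletableFuture<>();
        CompletableFuture.runAsync(() -> {
            try {
                result.complete(Integer.parseInt(raw));
            } catch (NumberFormatException e) {
                result.completeExceptionally(e);
            }
        });
        return result;
    }

    // Falls back to a default value if the future was completed exceptionally.
    static int parseOrDefault(String raw, int fallback) {
        return parseAsync(raw)
                .exceptionally(error -> fallback)
                .join();
    }

    public static void main(String[] args) {
        System.out.println(parseOrDefault("42", -1));
        System.out.println(parseOrDefault("not a number", -1));
    }
}
```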

The chaining of completable futures is especially powerful. It allows you to write your code fully asynchronously, with the drawback of making it more complex and less readable. Completable futures are also powerful enough to implement efficient code that scales across all available OS threads managed by the ForkJoinPool.
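Applied to the agent logic from the overview (process the message, transform it, send it), a chain could look roughly like this. It is only a sketch: `parse`, `toDomainModel` and `send` are hypothetical placeholders, not Microservice SDK calls, and the explicit executor is passed in to show the alternative to the common pool.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class AsyncPipelineDemo {

    // Hypothetical pipeline steps; a real agent would call the platform in send().
    static String parse(String raw) { return raw.trim(); }

    static String toDomainModel(String payload) { return "measurement:" + payload; }

    static String send(String model) { return "sent " + model; }

    // Each stage runs asynchronously on the given executor once the previous one completes.
    static CompletableFuture<String> handle(String raw, ExecutorService executor) {
        return CompletableFuture
                .supplyAsync(() -> parse(raw), executor)
                .thenApplyAsync(AsyncPipelineDemo::toDomainModel, executor)
                .thenApplyAsync(AsyncPipelineDemo::send, executor);
    }

    // Convenience wrapper that blocks only at the very end of the chain.
    static String handleAndWait(String raw) {
        ExecutorService executor = Executors.newFixedThreadPool(2);
        try {
            return handle(raw, executor).join();
        } finally {
            executor.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(handleAndWait(" 21.5 "));
    }
}
```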

As you’ve seen completable futures require you to change your code-style from synchronous to handler-based code. As already explained this could lead to less readable and more complex code.

But with Java 21 there is a new kid on the asynchronous block: virtual threads.

Virtual Threads in Java 21+

In Java 21, virtual threads were introduced. While the executor service and completable futures leverage and optimize the use of available OS threads, virtual threads abstract them one level further. A virtual thread is not tied to one specific OS thread. Instead, the JVM mounts it on a so-called carrier thread, a platform thread backed by an OS thread, and each carrier thread runs many virtual threads over time, one at a time. Once a virtual thread is completed, the carrier thread and its OS thread pick up another one. More importantly, a blocked virtual thread is unmounted from its carrier thread so that others can be processed. With that, you can theoretically have thousands, even millions, of (blocking) virtual threads running on a limited number of OS threads.

Also, virtual threads consume far fewer resources and can be created much faster than platform threads. As a result, your applications scale much better, with even more asynchronous logic executed on the same number of available OS threads.
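A small experiment illustrates this, assuming Java 21 or newer. The class `ManyVirtualThreadsDemo`, the task count and the 100 ms sleep are assumptions for illustration; the sleep stands in for a blocking call such as an HTTP request.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

class ManyVirtualThreadsDemo {

    // Starts `count` virtual threads that each block for 100 ms and
    // returns how many of them ran to completion.
    static int runBlockingTasks(int count) {
        AtomicInteger completed = new AtomicInteger();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < count; i++) {
                executor.submit(() -> {
                    try {
                        Thread.sleep(100); // blocks only the virtual thread, not its carrier
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                        return;
                    }
                    completed.incrementAndGet();
                });
            }
        } // close() waits until all submitted tasks have finished
        return completed.get();
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        int done = runBlockingTasks(10_000);
        long millis = (System.nanoTime() - start) / 1_000_000;
        System.out.println(done + " blocking tasks finished in " + millis + " ms");
    }
}
```

Because sleeping virtual threads are unmounted from their carriers, the total runtime should stay far below the 10,000 × 100 ms a single thread would need; the exact figure depends on the machine.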

Some numbers:

- A platform thread takes about 1 ms to be created, a virtual thread can be created in less than 1 µs

- A platform thread reserves ~1 MB of memory for the stack, a virtual thread starts with ~1 KB

So are they in general faster than platform threads? It depends. Virtual threads are ideal for code that contains blocking operations but does not hold thread-local variables. A virtual thread is not as fast as a platform thread if it mostly uses the CPU for computation without blocking much. Also, if your code is already mostly asynchronous, you will not benefit much from virtual threads.

There are also some risks when using virtual threads. Because virtual threads are cheap and practically unlimited in number, you risk running into the memory limits of the JVM. You can reduce that risk by using immutable objects where possible, which can be shared across threads. Also, thread-local variables shouldn’t be used extensively in virtual threads.
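As a rough illustration of sharing one immutable object instead of keeping a copy per thread, consider the following sketch. It assumes Java 21; the record `TenantSettings`, its fields and its values are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Immutable by construction: record fields are final and there are no setters.
record TenantSettings(String tenantId, String baseUrl) {}

class SharedImmutableDemo {

    // All virtual threads read the same instance; no per-thread copy or ThreadLocal is needed.
    static int readFromManyThreads(int threadCount) {
        TenantSettings shared = new TenantSettings("t12345", "https://example.invalid");
        AtomicInteger reads = new AtomicInteger();
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < threadCount; i++) {
            threads.add(Thread.startVirtualThread(() -> {
                if (shared.tenantId().equals("t12345")) {
                    reads.incrementAndGet();
                }
            }));
        }
        for (Thread thread : threads) {
            try {
                thread.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return reads.get();
    }

    public static void main(String[] args) {
        System.out.println(readFromManyThreads(1_000) + " threads read the shared settings");
    }
}
```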

If you keep that in mind, you can build very efficient, high-throughput applications with them without changing your coding style. It is even desirable to write your code in a synchronous, blocking style, as virtual threads scale by their number and no longer block any OS threads.

Please note: Combining virtual threads with asynchronous completable future chains is less efficient. The chains would not benefit much from virtual threads, and they might use thread-local variables in the background, consuming a lot of memory.

All of this makes them a perfect fit for applications that have a lot of concurrency and want to achieve a high throughput, which is normally the case for server-side agents that need to handle thousands of concurrent requests.
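The agent logic from the overview can then be written as plain sequential code, with one virtual thread per incoming message. The following is a sketch assuming Java 21; `parse`, `toDomainModel` and `send` are hypothetical placeholders, and the sleep in `send` stands in for a blocking platform call.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class BlockingStyleDemo {

    static String parse(String raw) { return raw.trim(); }

    static String toDomainModel(String payload) { return "measurement:" + payload; }

    static String send(String model) {
        try {
            Thread.sleep(50); // stands in for a blocking HTTP call (assumption)
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "sent " + model;
    }

    // Plain synchronous code: no futures, no callbacks.
    static String handle(String raw) {
        String payload = parse(raw);
        String model = toDomainModel(payload);
        return send(model);
    }

    // One virtual thread per message; results are returned in submission order.
    static List<String> handleAll(List<String> rawMessages) {
        List<Future<String>> futures = new ArrayList<>();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (String raw : rawMessages) {
                Callable<String> task = () -> handle(raw);
                futures.add(executor.submit(task));
            }
        } // close() waits until every submitted task has finished
        List<String> results = new ArrayList<>();
        for (Future<String> future : futures) {
            try {
                results.add(future.get());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            } catch (ExecutionException e) {
                throw new IllegalStateException(e.getCause());
            }
        }
        return results;
    }

    public static void main(String[] args) {
        System.out.println(handleAll(List.of(" 21.5 ", "7")));
    }
}
```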

Virtual threads are supported as of Spring Boot 3.2. If we enable them via

spring.threads.virtual.enabled=true

the embedded Tomcat and Jetty servers will automatically handle requests on virtual threads. Spring MVC also profits from this setting, for example with the @Async annotation.

Unfortunately, the Microservice SDK currently uses Spring Boot 2.7.x. Still, we can use virtual threads natively by just increasing the Java version to 21.

Using virtual threads

You can easily create a new virtual thread:

Thread.startVirtualThread(() -> {

System.out.println("Do something async using a virtual Thread!");

});

Or you use the Thread.Builder and define a task.

Please note: By default, virtual threads don’t have names, which makes them hard to monitor. On creation, you can assign a thread name with .name("threadName"):

Thread.Builder builder = Thread.ofVirtual().name("virtThread");

Runnable task = () -> {

System.out.println("Do something async using a virtual Thread!");

};

Thread t = builder.start(task);

t.join();
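If many virtual threads are created, a numbered name per thread is usually easier to monitor than one shared name. The builder also accepts a prefix and a start value and can be turned into a ThreadFactory. The following sketch assumes Java 21; the prefix "device-worker-" and the class name are arbitrary choices for illustration.

```java
import java.util.concurrent.ThreadFactory;

class NamedVirtualThreadsDemo {

    // Starts `count` virtual threads from one factory and records the name each thread sees.
    static String[] startNamed(int count) {
        // Produces the names device-worker-0, device-worker-1, ...
        ThreadFactory factory = Thread.ofVirtual().name("device-worker-", 0).factory();
        String[] observed = new String[count];
        Thread[] threads = new Thread[count];
        for (int i = 0; i < count; i++) {
            int slot = i;
            threads[i] = factory.newThread(() -> observed[slot] = Thread.currentThread().getName());
            threads[i].start();
        }
        for (Thread thread : threads) {
            try {
                thread.join(); // join also makes the written names visible to this thread
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return observed;
    }

    public static void main(String[] args) {
        for (String name : startNamed(3)) {
            System.out.println(name);
        }
    }
}
```

Such a factory can also be handed to Executors.newThreadPerTaskExecutor(factory) to get an executor whose virtual threads are all named.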

If you used the Executor Service before, you can very easily switch it to virtual threads:

//ExecutorService using Virtual Threads

ExecutorService virtExecutorService = Executors.newVirtualThreadPerTaskExecutor();

It follows the same concept to assign tasks etc.

Let’s now check how completable futures and virtual threads can be used for the Microservice SDK. Read full topic here.
