Use AI Skills As Cell Magic In Fabric Notebook

Sandeep Pawar

The public preview of AI Skills in Microsoft Fabric was announced yesterday. AI Skills allows Fabric developers to create their own GenAI experience using data in the lakehouse. Unlike Copilot, which is an AI assistant, AI Skills lets users build a validated Q&A application that queries lakehouse data by converting natural language questions into T-SQL queries. It is only available on paid F64 or higher SKUs. You can watch the video below for an overview of Copilot, AI Skills and the GenAI experiences in Fabric:

You can use the AI Skills UI in Fabric to build and consume the application. Once an AI Skill is published, it exposes an endpoint that can be queried programmatically (as far as I know, only from within Fabric, not externally yet). In this blog, I will show how to call it using Semantic Link and how to create a cell magic so you can use the published AI Skill in a notebook.

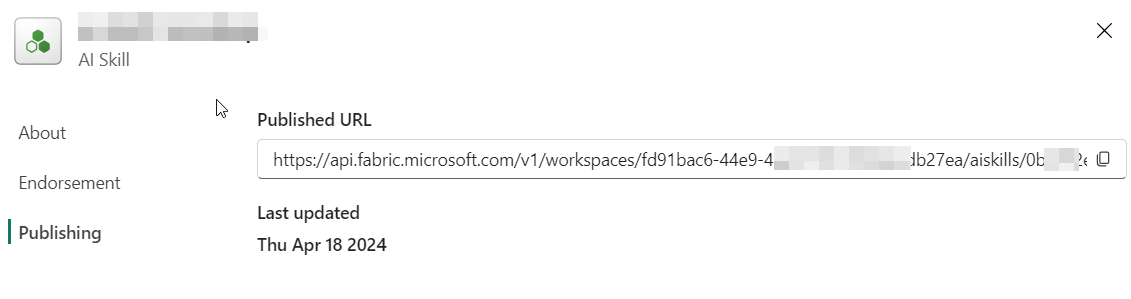

Endpoint: Get the endpoint of the published AI Skill from its settings. Copy the workspace id and the AI Skill id from the URL:
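If you would rather not copy the two ids by hand, a small helper can pull them out of the URL. This is a minimal sketch, not part of the original post: `ids_from_url` is a hypothetical name, and it assumes the workspace GUID appears in the URL before the AI Skill GUID.

```python
import re
from typing import Tuple

# Matches a canonical 8-4-4-4-12 hexadecimal GUID
GUID_RE = re.compile(
    r"[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}"
)


def ids_from_url(url: str) -> Tuple[str, str]:
    """Return (workspace_id, aiskill_id) from an AI Skill URL.

    Assumption: the first GUID in the URL is the workspace id and the
    second GUID is the AI Skill id.
    """
    guids = GUID_RE.findall(url)
    if len(guids) < 2:
        raise ValueError(f"expected at least two GUIDs in the URL, found {len(guids)}")
    return guids[0], guids[1]
```

Any query string after the ids is ignored, so the whole URL from the browser address bar can be pasted in as-is.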

Call the endpoint: In a Fabric notebook, call the endpoint. You can use `requests`, but Semantic Link makes it easier, plus you don't have to create an authentication token. A few things about the code below:

- Calling any Fabric API is very easy; I have written about it here before.
- The code below is long because I am using Pydantic to parse the JSON response. When working with LLM responses, especially if you are going to use them in another application, it's very important to create structured responses that can be validated. Pydantic helps define the schema of the response explicitly and handle type and data errors gracefully. This is completely optional, but I recommend using Pydantic (or a similar library). (Use this if you want the shorter version without Pydantic.)
- The function needs three parameters: the workspace id or name of the AI Skill, the AI Skill id, and the question. Currently, the question must be in English. The API also supports a few additional parameters, which I am skipping for now.
- There are no published API rate limits; the only limitation would be your capacity size.
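The shorter, Pydantic-free route can be sketched with the standard library alone. This is a minimal sketch, not the linked version: `build_query` and `rows_from_response` are hypothetical names, the endpoint path and the `userQuestion` payload are taken from the function below, and it assumes `ResultRows` is a list of rows whose values follow the order of `ResultHeaders`.

```python
from typing import Any, Dict, List, Optional, Tuple


def build_query(workspace_id: str, aiskill_id: str, question: str) -> Tuple[str, Dict[str, str]]:
    """Return the relative endpoint path and the JSON payload for one question."""
    path = f"v1/workspaces/{workspace_id}/aiskills/{aiskill_id}/query/deployment"
    return path, {"userQuestion": question}


def rows_from_response(result: Dict[str, Any]) -> Optional[List[Dict[str, Any]]]:
    """Turn the endpoint's JSON body into a list of row dicts, or None if it looks wrong.

    Only the two keys needed to build a table are checked; all other fields are ignored.
    """
    headers = result.get("ResultHeaders")
    rows = result.get("ResultRows")
    if not isinstance(headers, list) or not isinstance(rows, list):
        return None
    # Assumption: every row is a list/tuple with one value per header
    if any(not isinstance(row, (list, tuple)) or len(row) != len(headers) for row in rows):
        return None
    return [dict(zip(headers, row)) for row in rows]
```

In a Fabric notebook the pieces would be joined as `client.post(path, json=payload).json()`, followed by `pd.DataFrame(rows_from_response(body))`. The trade-off is that only the table-shaped part of the response is checked, whereas the Pydantic model below validates every field.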

```python
%pip install semantic-link pydantic -q

import sempy.fabric as fabric
from pydantic import BaseModel
from typing import List, Optional, Union, Any
import pandas as pd
import requests

client = fabric.FabricRestClient()


def ask_aiskill(workspace: str, aiskill: str, question: str) -> Optional[pd.DataFrame]:
    """
    Sandeep Pawar | fabric.guru | Aug-07-2024 | v0.1

    Returns results from AI Skills as a pandas dataframe

    Parameters:
    - workspace (str): The workspace name or id
    - aiskill (str): AI skill id
    - question (str): The user question to pass to the AI skill in English.

    Returns:
    - pandas dataframe or None in case of an error
    """

    # Model behavior settings returned with every response
    class ModelDetails(BaseModel):
        sqlQueryVariations: int
        showExecutedSQL: bool
        includeSQLExplanation: bool
        enableExplanations: bool
        enableSemanticMismatchDetection: bool
        executeSql: bool
        enableBlockAdditionalContextByLength: bool
        additionalContextLanguageDetection: bool
        fewShotExampleCount: int

    # Schema of the JSON body returned by the query endpoint
    class Response(BaseModel):
        artifactId: str
        promptContextId: str
        queryEndpoint: str
        result: Union[str, float]
        ResultRows: Any
        ResultHeaders: List[str]
        ResultTypes: List[str]
        executedSQL: str
        additionalMessage: Optional[Union[str, dict]] = None  # default keeps it optional in Pydantic v2
        prompt: str
        userQuestion: str
        modelBehavior: ModelDetails
        additionalContext: str

    payload = {"userQuestion": question}

    # resolve_workspace_id accepts either a workspace name or a workspace id
    try:
        wsid = fabric.resolve_workspace_id(workspace)
    except Exception:
        print("Check workspace name/id")
        return None  # without an id there is nothing to call

    url = f"v1/workspaces/{wsid}/aiskills/{aiskill}/query/deployment"

    try:
        response = client.post(url, json=payload)
        response.raise_for_status()  # turn 4xx/5xx into requests.exceptions.HTTPError
        result = response.json()
        aiskill_response = Response(**result)  # validate the JSON against the schema

        # responses
        result_header = aiskill_response.ResultHeaders
        result_rows = aiskill_response.ResultRows
        result_sql = aiskill_response.executedSQL

        # model settings
        result_context = aiskill_response.additionalContext
        result_fewshotcount = aiskill_response.modelBehavior.fewShotExampleCount

        # only return the df
        df = pd.DataFrame(result_rows, columns=result_header)
        return df

    except requests.exceptions.HTTPError as h_err:
        print(f"HTTP error: {h_err}")
    except requests.exceptions.RequestException as r_err:
        print(f"Request error: {r_err}")
    except Exception as err:
        print(f"Error: {err}")

    return None


# ask a question
ask_aiskill(workspace, aiskill, question)
```

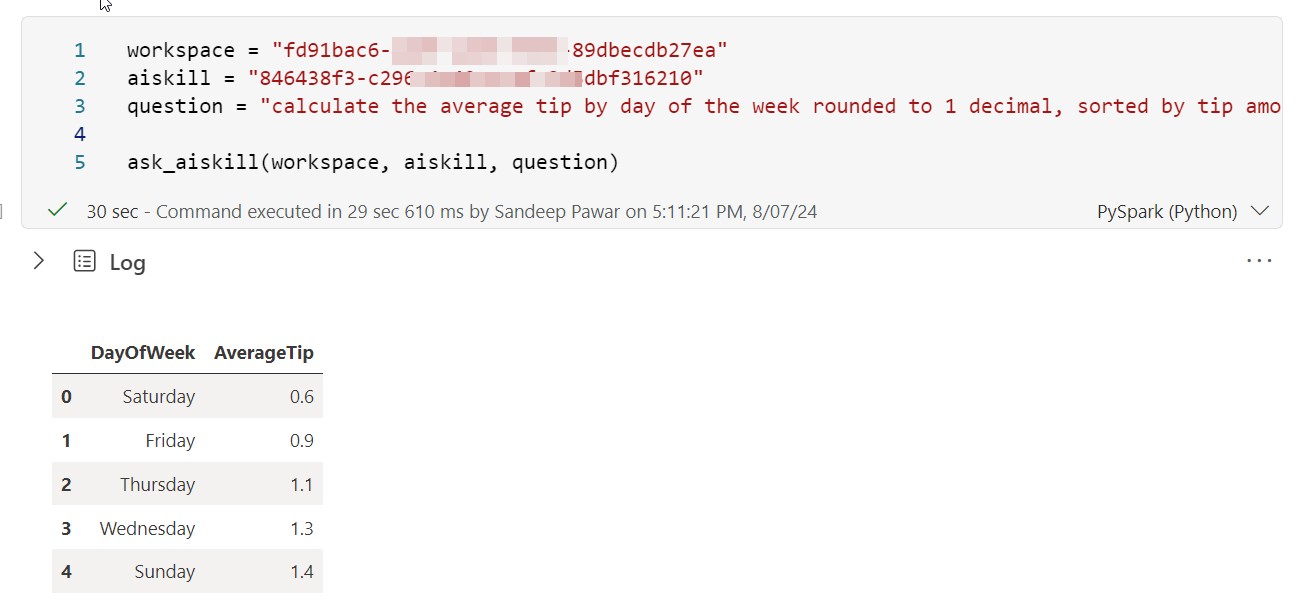

Example:

```python
workspace = "fd91bac6-xxxxxx-89dbecdb27ea"
aiskill = "846438f3-xxxxxx-3d5dbf316210"
question = "calculate the average tip by day of the week rounded to 1 decimal, sorted by tip amount"

ask_aiskill(workspace, aiskill, question)
```

Create cell magic: This part is easy. I just registered the above function as a cell magic, and that's it. You can save this in your environment so that all the notebooks attached to the environment can use it after loading the extension. Users can ask natural language questions and get the data back as a pandas dataframe.

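```python
from IPython.core.magic import register_cell_magic


# Registered under the name "aiskill"; the Python function gets a different name so it
# does not overwrite a variable called `aiskill` (such as the AI Skill id in the example above)
@register_cell_magic("aiskill")
def aiskill_magic(line, cell):
    # the whole cell body is sent to the AI Skill as the question
    return ask_aiskill(workspace="xxx", aiskill="xxx", question=cell.strip())
```

Example: start a cell with `%%aiskill` and write the question on the lines below it; the cell returns the answer as a pandas dataframe.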
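The magic above hard-codes the workspace and AI Skill ids. A possible variation, not from the original post, is to read them from the magic line itself (for example `%%aiskill "My Workspace" <AI Skill id>`) and fall back to defaults when they are omitted. `parse_magic_line` is a hypothetical helper; `shlex.split` is used so that a quoted workspace name containing spaces stays a single argument.

```python
import shlex
from typing import Tuple


def parse_magic_line(line: str, default_workspace: str, default_aiskill: str) -> Tuple[str, str]:
    """Read optional workspace and AI Skill ids from the text after %%aiskill.

    ""                       -> both defaults
    "<workspace>"            -> given workspace, default AI Skill id
    "<workspace> <skill id>" -> both taken from the line
    """
    args = shlex.split(line)
    if len(args) > 2:
        raise ValueError("usage: %%aiskill [workspace] [aiskill_id]")
    workspace = args[0] if len(args) >= 1 else default_workspace
    aiskill_id = args[1] if len(args) == 2 else default_aiskill
    return workspace, aiskill_id
```

Inside the magic body, the two values from `parse_magic_line(line, "xxx", "xxx")` would be passed to `ask_aiskill` together with `cell.strip()`. Because `ask_aiskill` resolves the workspace with `fabric.resolve_workspace_id`, either a workspace name or an id works on the magic line.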

Thanks to Nellie Gustafsson (Principal PM, Fabric) for the discussion and congratulations to the team on the launch.



Principal Program Manager, Microsoft Fabric CAT helping users and organizations build scalable, insightful, secure solutions. Blogs, opinions are my own and do not represent my employer.