Simplifying Cloud Storage: Node.js and AWS S3 Integration

Pulkit

I was recently working on a project (image-tweaker) that required an image upload feature, so I needed a reliable storage system for the images. After researching various options, I decided to use a cloud storage service for its scalability and ease of integration. This choice allowed me to focus on the core functionality of the project rather than worrying about storage limitations.

Among the available cloud storage services, AWS S3 stood out due to its features, reliability, and widespread adoption in the industry. AWS S3 provides a highly durable, scalable, and secure solution for storing and retrieving any amount of data from anywhere on the web. Its seamless integration with Node.js made it an ideal choice for my project, enabling efficient image uploads and management. In this article, I will walk you through the process of integrating AWS S3 with a Node.js application, simplifying the steps to help you get started quickly.

Let's Start

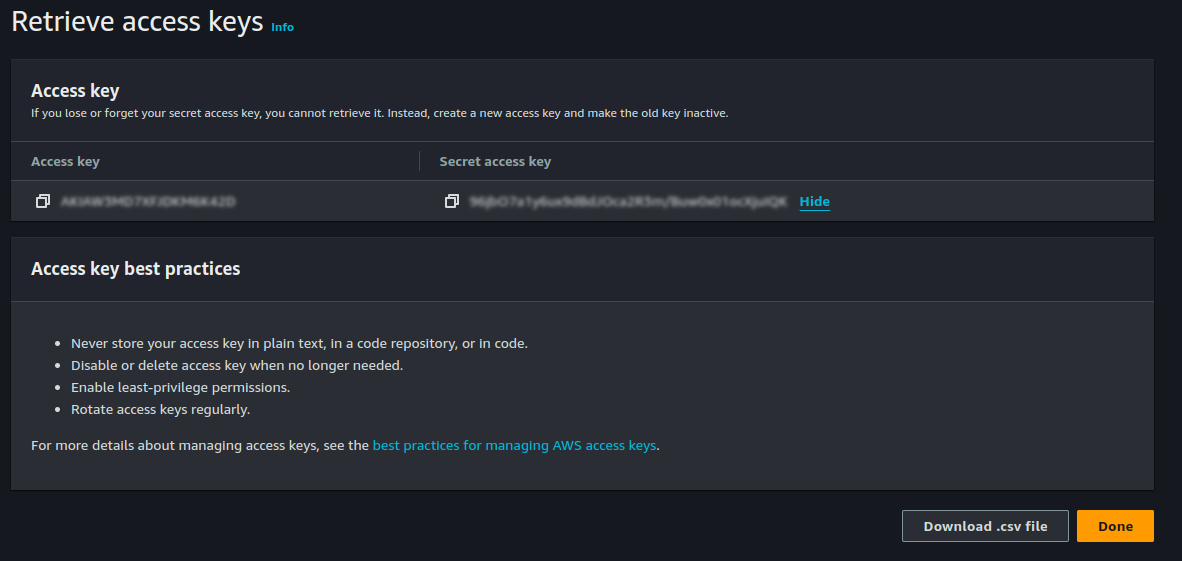

To integrate S3 with Node.js, you will need an Access key and a Secret access key. So let's start by creating one.

Creating Access key and Secret access key

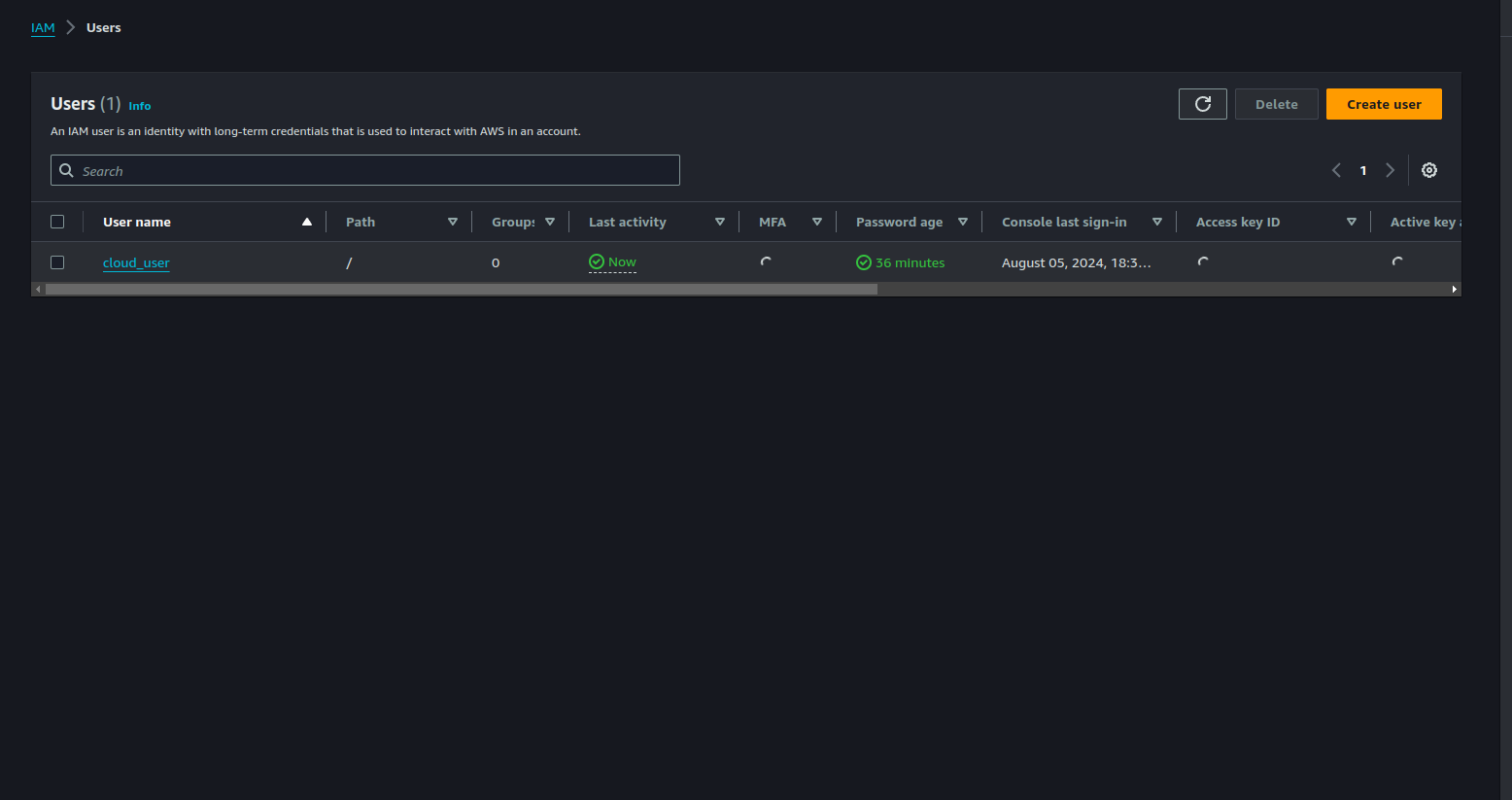

Head over to your AWS IAM dashboard

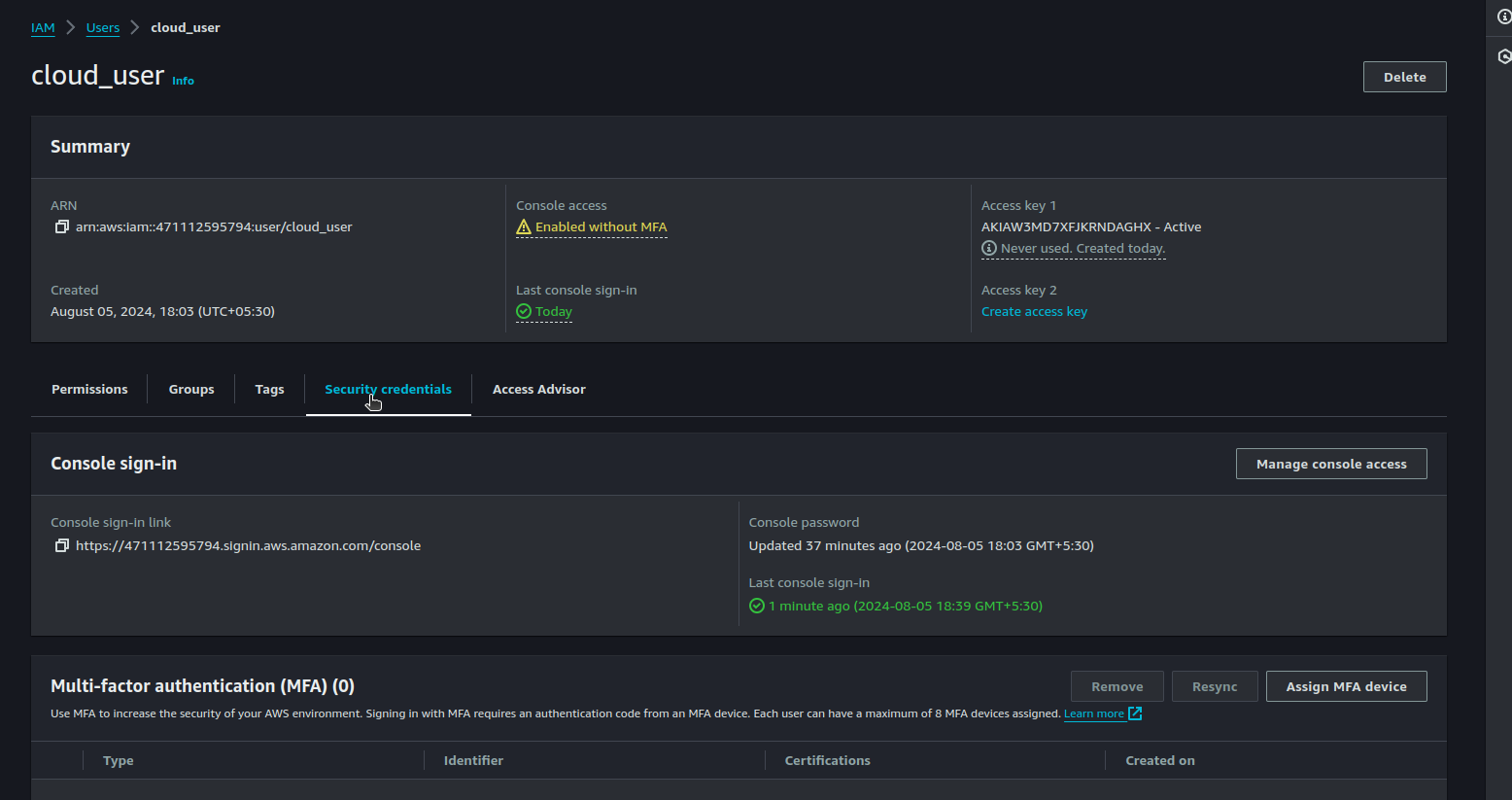

Go to your IAM user, and click on the Security credentials tab.

Note: You can also create a new user with the necessary permissions for S3 only.

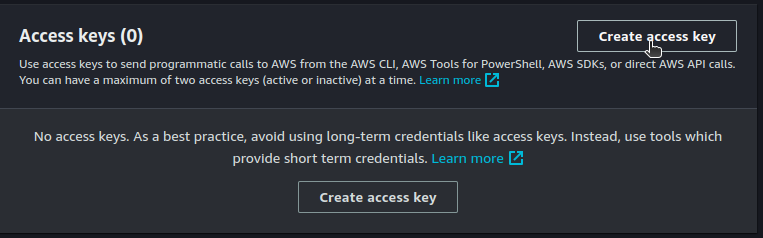

Create a new access key

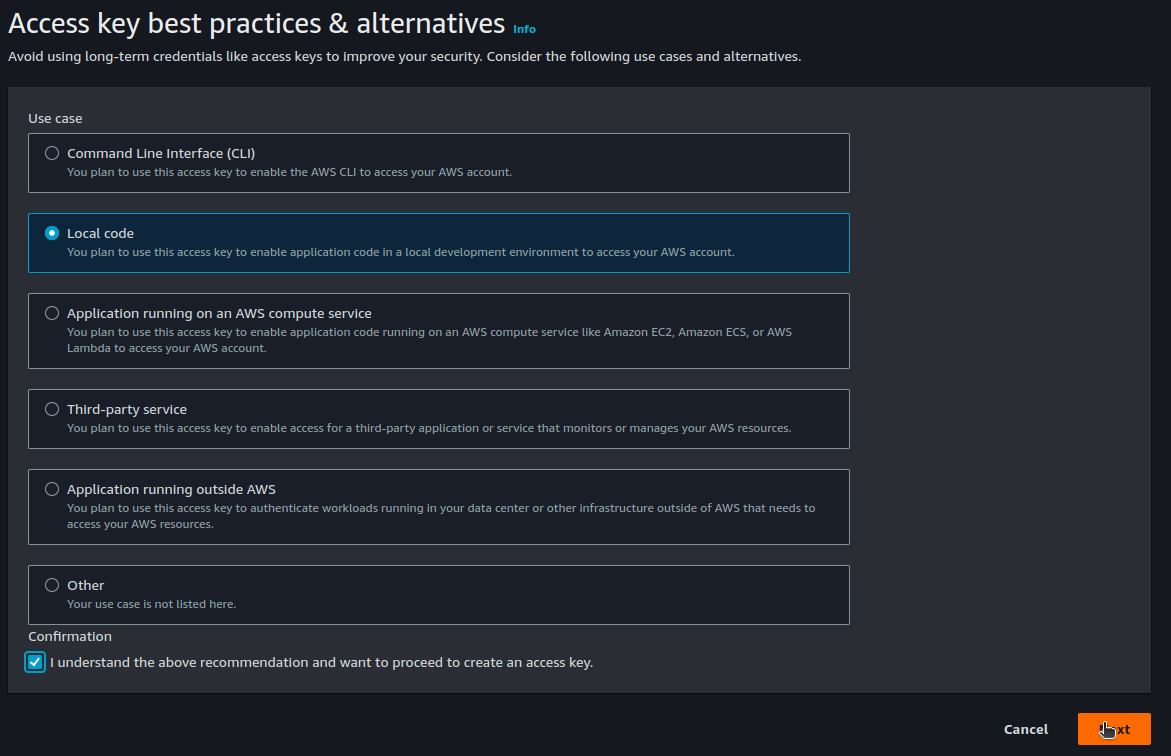

Add local code to access the S3 bucket

Now you have both the Access key and the Secret access key.

Note: Save both keys carefully. The Secret access key is shown only once, and you won't be able to view it again on your dashboard.

Initialize a new Node.js Project

So let's kick things off by initializing a new Node.js project!

    npm init -y

Next up, add @aws-sdk/client-s3 and dotenv as dependencies in your project!

    npm i @aws-sdk/client-s3 dotenv

Starting with the S3 client

Let's dive right in and create the S3 client in its own file, so the rest of the code can reuse it.

    // client.js
    const { S3Client } = require('@aws-sdk/client-s3');

    const s3 = new S3Client({
      region: 'us-east-1',
      credentials: {
        accessKeyId: process.env.AWS_ACCESS_KEY_ID,
        secretAccessKey: process.env.AWS_SECRET_ACCESS_KEY,
      },
    });

    module.exports = s3;

Make sure to save both AWS keys in the .env file in the following format:

    AWS_ACCESS_KEY_ID=
    AWS_SECRET_ACCESS_KEY=

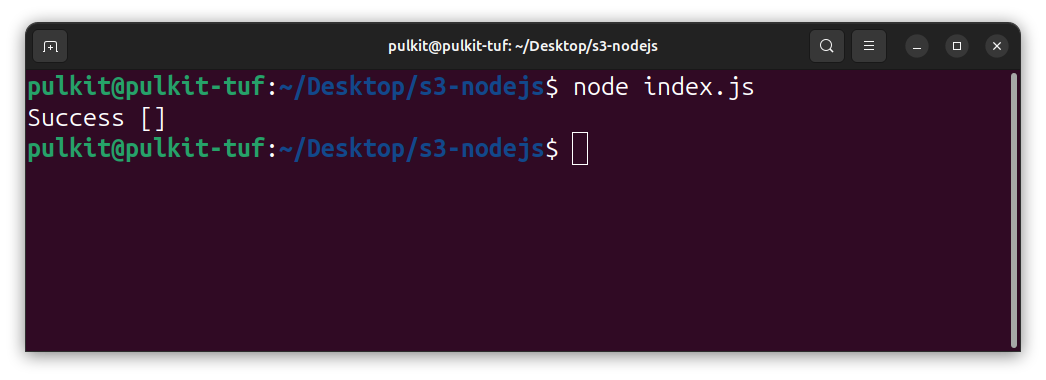
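Since client.js reads both keys from process.env, a missing or misspelled entry in .env only surfaces later as a credentials error. Here is a minimal sketch of an early check. The helper name `requireEnv` and the file name are my own assumptions for illustration; they are not part of dotenv or the AWS SDK.

```javascript
// env-check.js (hypothetical helper, not part of dotenv or the AWS SDK)

// Returns the requested variables as an object,
// or throws if any of them is missing or empty.
function requireEnv(names, env = process.env) {
  const missing = names.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
  return Object.fromEntries(names.map((name) => [name, env[name]]));
}

module.exports = { requireEnv };
```

One way to use it would be to call `requireEnv(['AWS_ACCESS_KEY_ID', 'AWS_SECRET_ACCESS_KEY'])` right after `require("dotenv").config()`, before the S3 client is created.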

Listing S3 buckets

Listing S3 buckets is a great way to verify that our setup is working correctly. Let's write some code to list all the S3 buckets in our account.

    // index.js
    require("dotenv").config();
    const s3 = require("./client.js");
    const { ListBucketsCommand } = require("@aws-sdk/client-s3");

    const listBuckets = async () => {
      const data = await s3.send(new ListBucketsCommand({}));
      console.log("Success", data.Buckets);
    };

    listBuckets();

And guess what? We get an empty array because I don't have any S3 buckets in my account yet!

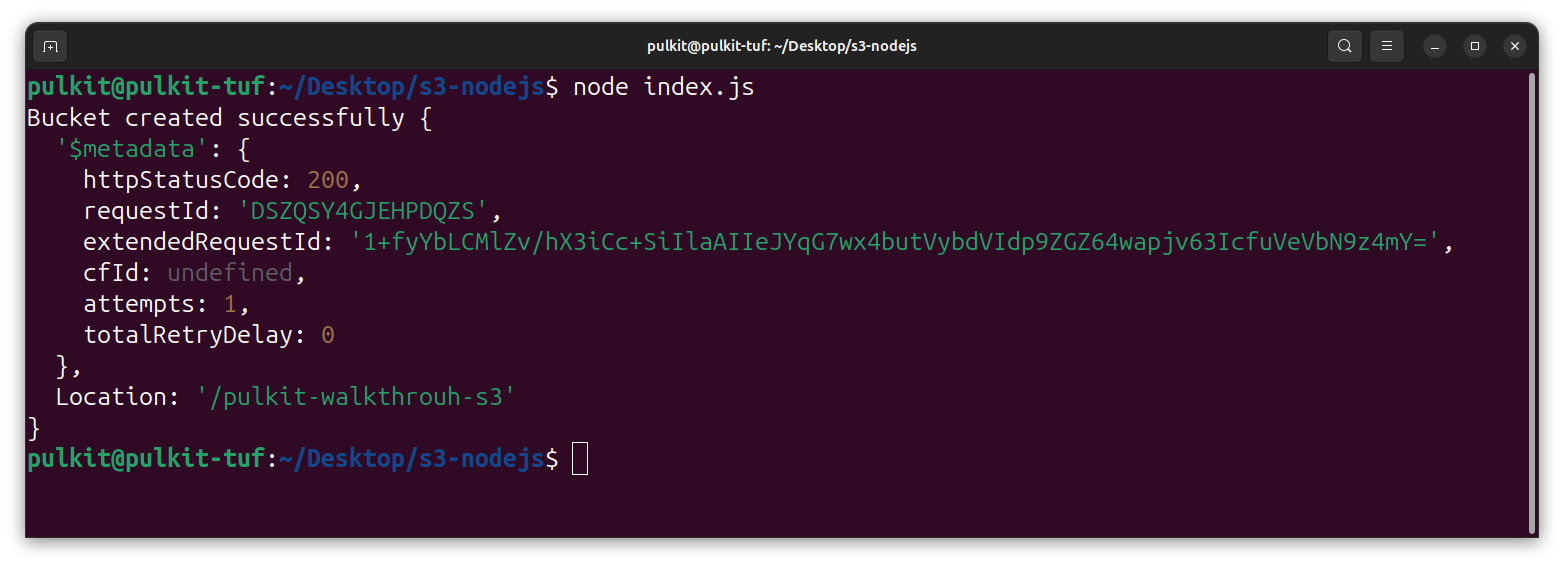
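If the keys are wrong or the user lacks permissions, `s3.send` rejects and the bare `listBuckets()` call ends in an unhandled rejection. The sketch below shows one possible way to report such failures. The helpers `describeS3Error` and `run` are hypothetical names of mine; the `name` and `$metadata.httpStatusCode` fields are what the SDK sets on service errors.

```javascript
// Turns an error thrown by s3.send() into a short, readable message.
// Plain Error objects (for example network failures) have no $metadata,
// so the HTTP status is only included when it is present.
function describeS3Error(err) {
  const status = err.$metadata?.httpStatusCode;
  const name = err.name || 'Error';
  return status
    ? `${name} (HTTP ${status}): ${err.message}`
    : `${name}: ${err.message}`;
}

// Runs an async task and logs a failure instead of crashing the process.
async function run(task) {
  try {
    return await task();
  } catch (err) {
    console.error(describeS3Error(err));
    return undefined;
  }
}
```

With this in place, `run(listBuckets)` could replace the bare `listBuckets()` call.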

Let's create a new method to add a bucket.

    const { CreateBucketCommand } = require("@aws-sdk/client-s3");

    const createBucket = async (bucketName) => {
      const data = await s3.send(new CreateBucketCommand({ Bucket: bucketName }));
      console.log("Bucket created successfully", data);
    };

    createBucket("pulkit-walkthrouh-s3");

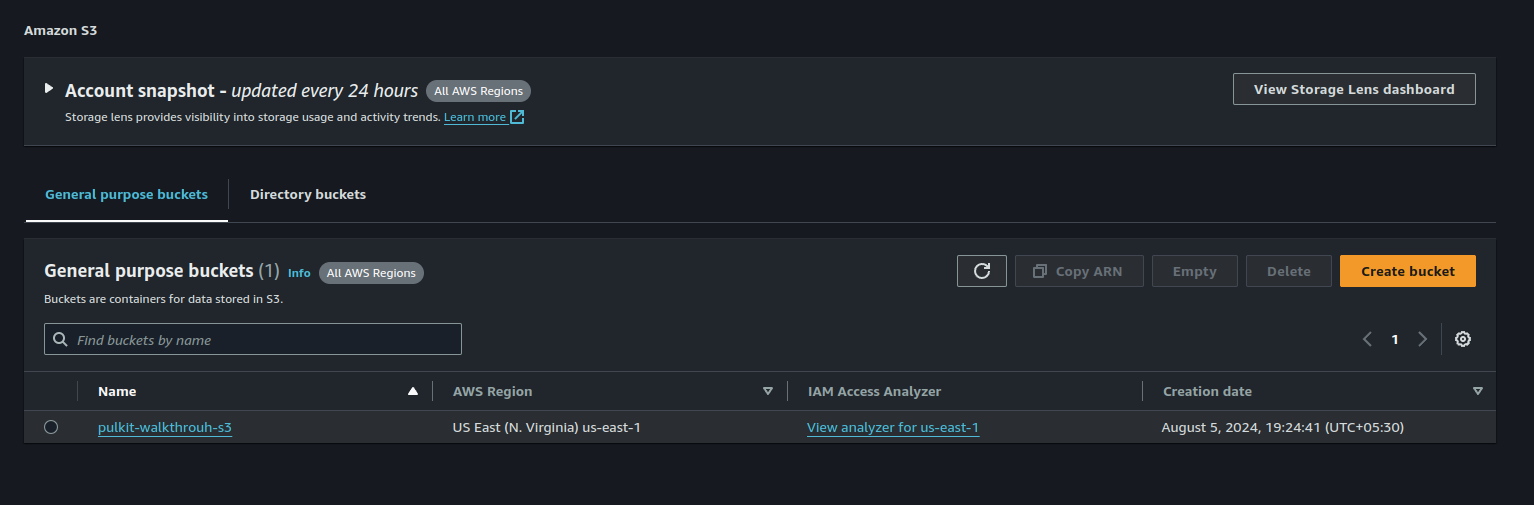

And there it is: the new bucket shows up in the S3 bucket list in the AWS console.
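The call above works as-is because the client uses us-east-1. For any other region, CreateBucket also expects a `CreateBucketConfiguration.LocationConstraint`, and bucket names have to follow the S3 naming rules. Below is a rough sketch of building the command input with both in mind. `isValidBucketName` and `buildCreateBucketParams` are hypothetical helpers, and the name check covers only the basic rules, not every edge case.

```javascript
// Basic check against the S3 naming rules for general purpose buckets:
// 3-63 characters, lowercase letters, digits, dots and hyphens,
// starting and ending with a letter or digit. Not exhaustive.
function isValidBucketName(name) {
  return /^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$/.test(name);
}

// Builds the input object for CreateBucketCommand. Outside us-east-1 the
// request needs a LocationConstraint; for us-east-1 it is left out.
function buildCreateBucketParams(bucketName, region = 'us-east-1') {
  if (!isValidBucketName(bucketName)) {
    throw new Error(`Invalid bucket name: ${bucketName}`);
  }
  const params = { Bucket: bucketName };
  if (region !== 'us-east-1') {
    params.CreateBucketConfiguration = { LocationConstraint: region };
  }
  return params;
}
```

The result can be passed straight to the command, for example `new CreateBucketCommand(buildCreateBucketParams("pulkit-walkthrouh-s3", "eu-west-1"))`, as long as the client is configured for the same region.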

If we have a createBucket method, why not have a deleteBucket method too?

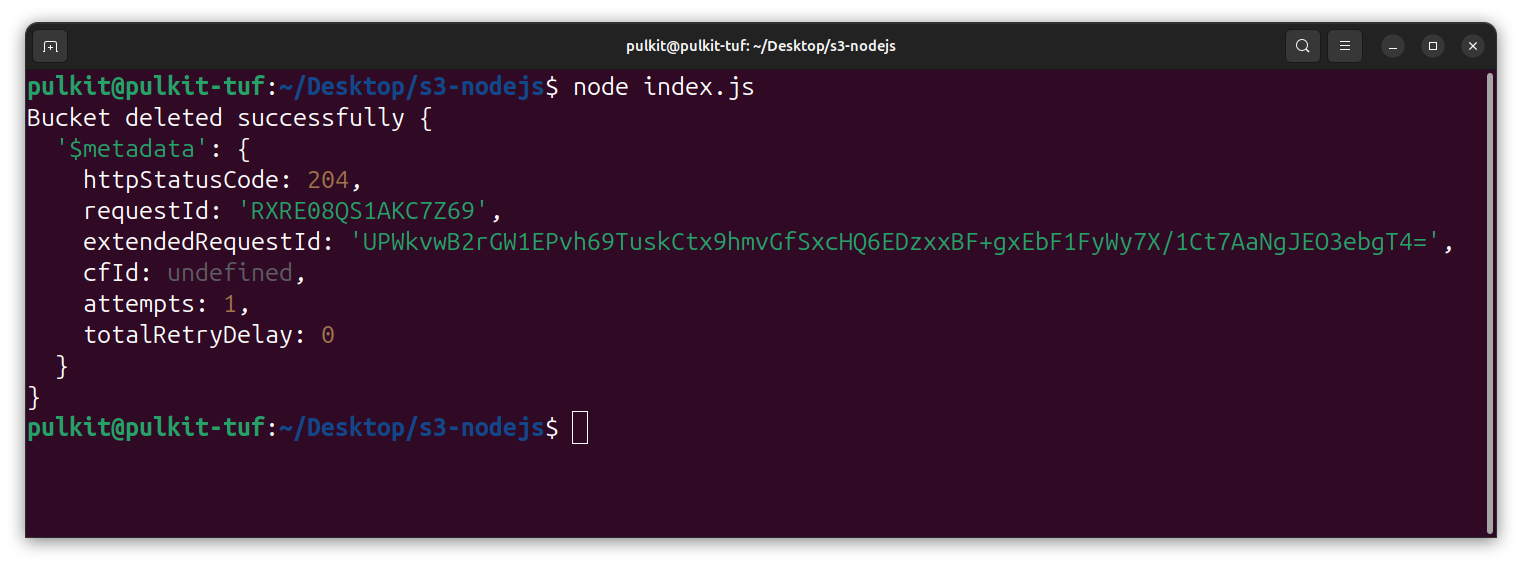

    const { DeleteBucketCommand } = require("@aws-sdk/client-s3");

    const deleteBucket = async (bucketName) => {
      const data = await s3.send(new DeleteBucketCommand({ Bucket: bucketName }));
      console.log("Bucket deleted successfully", data);
    };

    deleteBucket("pulkit-walkthrouh-s3");
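One thing to keep in mind: S3 only deletes a bucket that is empty, so `deleteBucket` fails on a bucket that still holds objects. The objects can be removed in bulk with `DeleteObjectsCommand`, which accepts at most 1000 keys per request. The sketch below only prepares those request inputs. `buildDeleteBatches` is a hypothetical helper, and the list of keys is assumed to come from a listing call.

```javascript
// Splits a list of object keys into DeleteObjectsCommand inputs,
// each holding at most `batchSize` keys (the S3 limit is 1000).
function buildDeleteBatches(bucketName, keys, batchSize = 1000) {
  const batches = [];
  for (let i = 0; i < keys.length; i += batchSize) {
    const chunk = keys.slice(i, i + batchSize);
    batches.push({
      Bucket: bucketName,
      // Quiet: true asks S3 to report only the keys that failed.
      Delete: { Objects: chunk.map((Key) => ({ Key })), Quiet: true },
    });
  }
  return batches;
}
```

Each element could then be sent with `s3.send(new DeleteObjectsCommand(batch))` before calling `deleteBucket`.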

Okay, so basic create and delete are done with the SDK. Now let's hop into a specific bucket and play around with some objects in it.

Before that, let's move the final code to a separate file: bucket.js

Uploading Objects to S3

To upload an object to S3, we need the PutObjectCommand class provided by the AWS SDK. Here's how you can import it:

    const { PutObjectCommand } = require("@aws-sdk/client-s3");

To read the file from disk for uploading, we need the fs module. Here's how you can import it:

    const fs = require('fs');

Finally, the uploadObject method looks like this:

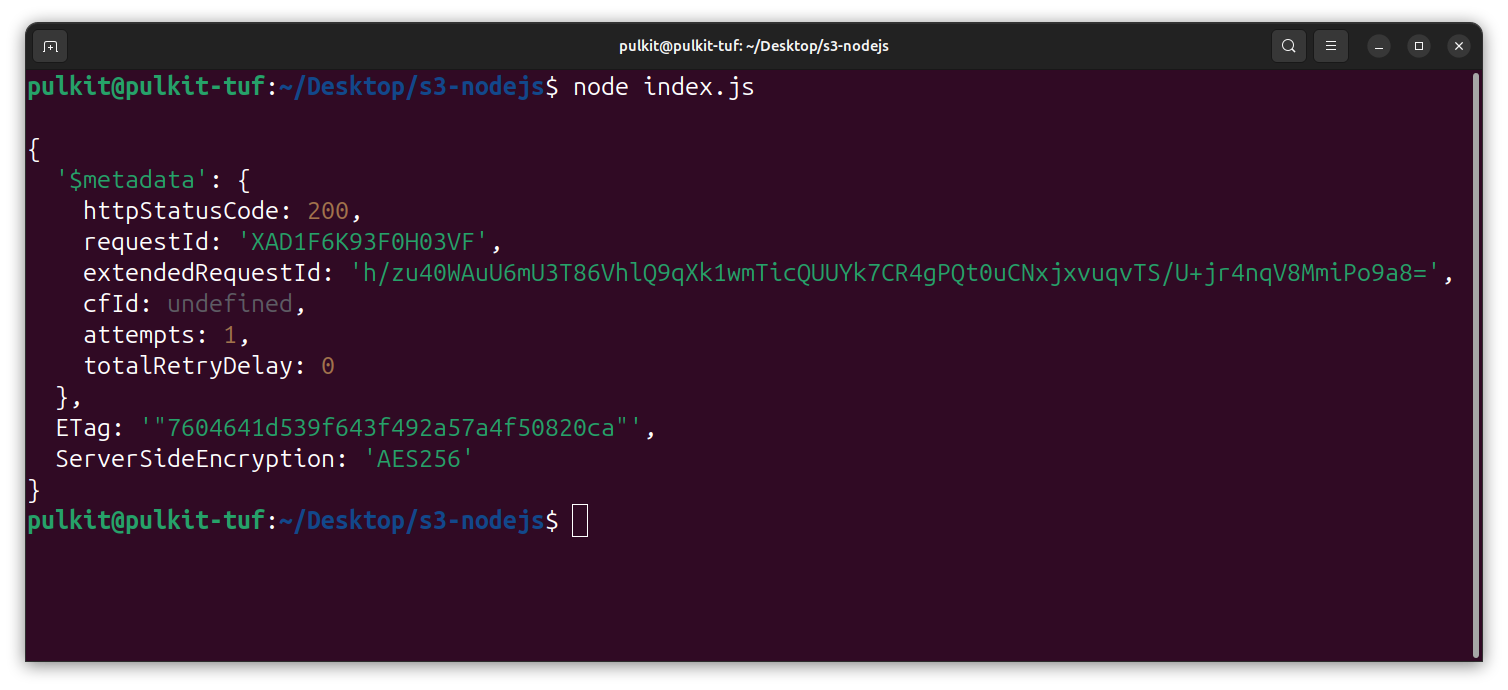

    async function uploadObject(pathToFile, key) {
      const uploadParams = {
        Bucket: 'pulkit-walkthrouh-s3',
        Key: key,
        Body: fs.createReadStream(pathToFile)
      };
      const command = new PutObjectCommand(uploadParams);
      const response = await s3.send(command);
      console.log(response);
    }

    uploadObject("./test.txt", "test.txt");
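The upload above sends no `ContentType`, so the object is stored with a generic binary type. For an image upload feature it is usually worth setting it, so that browsers render the file instead of downloading it. Here is a small sketch that derives the type from the file extension. The mapping table and the helpers `contentTypeFor` and `buildUploadParams` are assumptions of mine, and a real project might prefer a dedicated MIME library.

```javascript
const path = require('path');

// A deliberately small extension-to-MIME table (illustrative only).
const CONTENT_TYPES = {
  '.png': 'image/png',
  '.jpg': 'image/jpeg',
  '.jpeg': 'image/jpeg',
  '.gif': 'image/gif',
  '.webp': 'image/webp',
  '.txt': 'text/plain',
};

// Looks up the MIME type by extension, falling back to a generic binary type.
function contentTypeFor(filePath) {
  const ext = path.extname(filePath).toLowerCase();
  return CONTENT_TYPES[ext] || 'application/octet-stream';
}

// Builds the PutObjectCommand input with ContentType filled in.
function buildUploadParams(bucketName, key, filePath, body) {
  return {
    Bucket: bucketName,
    Key: key,
    Body: body,
    ContentType: contentTypeFor(filePath),
  };
}
```

Inside `uploadObject`, the params object could then come from `buildUploadParams('pulkit-walkthrouh-s3', key, pathToFile, fs.createReadStream(pathToFile))`.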

One interesting observation: if we upload an object with the same key, it will overwrite the existing object in the S3 bucket. This can be useful for updating files but be cautious as it will replace the old content.
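When overwriting is not what you want, for example when two users upload files with the same name, one simple option is to make every key unique. This is a minimal sketch, assuming a hypothetical helper `buildUniqueKey` and an `uploads` prefix that I picked for illustration. `crypto.randomUUID` is built into recent Node.js versions.

```javascript
const crypto = require('crypto');
const path = require('path');

// Prefixes the file name with a random UUID so that two uploads of
// the same file name end up under different keys.
function buildUniqueKey(fileName, prefix = 'uploads') {
  const base = path.basename(fileName);
  return `${prefix}/${crypto.randomUUID()}-${base}`;
}
```

For example, `uploadObject("./test.txt", buildUniqueKey("./test.txt"))` would store each upload under its own key.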

Next, to delete an object from S3, we need the DeleteObjectCommand class provided by the AWS SDK. Here's how you can use it:

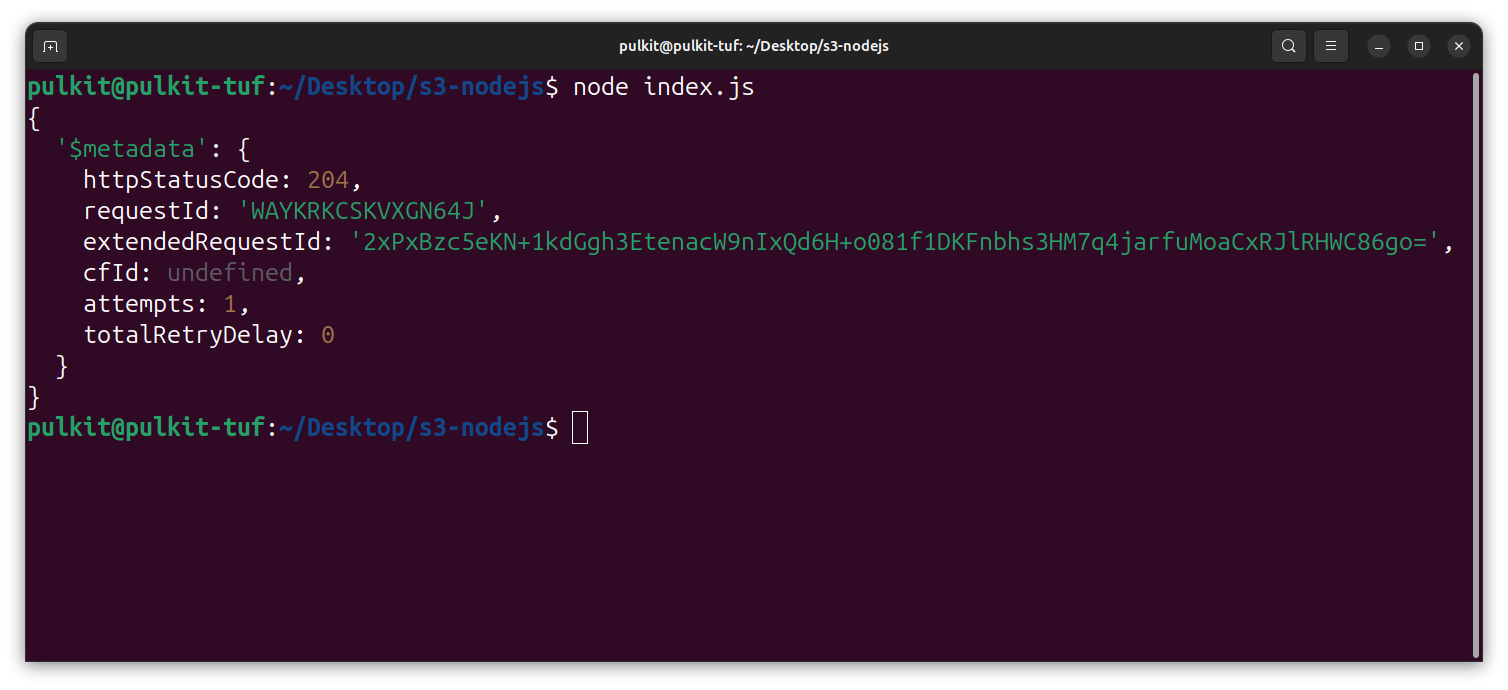

    const { DeleteObjectCommand } = require("@aws-sdk/client-s3");

    async function deleteObject(key) {
      const deleteParams = {
        Bucket: 'pulkit-walkthrouh-s3',
        Key: key
      };
      const command = new DeleteObjectCommand(deleteParams);
      const response = await s3.send(command);
      console.log(response);
    }

    deleteObject("test.txt");

Listing Objects in an S3 bucket

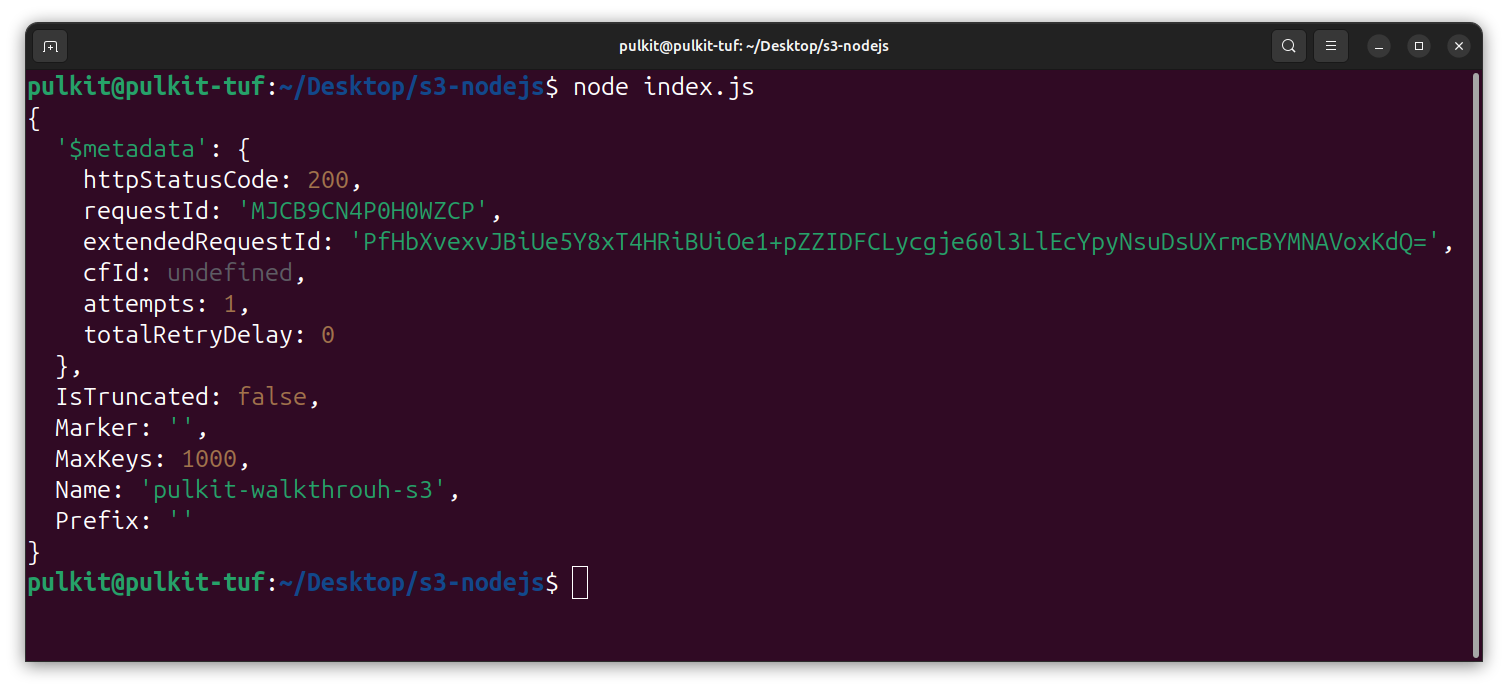

To list objects in a bucket, we need the ListObjectsCommand class provided by the AWS SDK. Here's how you can use it:

    const { ListObjectsCommand } = require("@aws-sdk/client-s3");

    async function listObjects() {
      const listParams = {
        Bucket: 'pulkit-walkthrouh-s3'
      };
      const command = new ListObjectsCommand(listParams);
      const response = await s3.send(command);
      console.log(response.Contents);
    }

    listObjects();
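A single list request returns at most 1000 objects, so larger buckets need pagination. AWS recommends the newer `ListObjectsV2Command` for this, which hands back a `NextContinuationToken` while `IsTruncated` is true. The sketch below follows those tokens until the listing is complete. `listAllKeys` and its `fetchPage` callback are hypothetical names of mine, and the SDK wiring is shown only as a comment.

```javascript
// Collects every object key by following continuation tokens.
// `fetchPage(token)` is a hypothetical callback that performs one
// ListObjectsV2 request and resolves to its response.
async function listAllKeys(fetchPage) {
  const keys = [];
  let token;
  do {
    const page = await fetchPage(token);
    // Contents is absent when the page (or the bucket) has no objects.
    for (const object of page.Contents ?? []) {
      keys.push(object.Key);
    }
    token = page.IsTruncated ? page.NextContinuationToken : undefined;
  } while (token);
  return keys;
}

// Possible wiring with the SDK (inside an async function):
// const { ListObjectsV2Command } = require("@aws-sdk/client-s3");
// const keys = await listAllKeys((token) =>
//   s3.send(new ListObjectsV2Command({
//     Bucket: 'pulkit-walkthrouh-s3',
//     ContinuationToken: token,
//   }))
// );
```

Keeping the request behind a callback also makes the loop easy to try out with fake pages, without touching a real bucket.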

I hope this guide helps you seamlessly integrate AWS S3 with your Node.js application, making your upload feature robust and efficient. Happy coding!

Written by

Pulkit

Hi there! 👋 I'm Pulkit, a passionate web and app developer specializing in MERN and React Native technologies. With a keen interest in open-source contributions and competitive programming, I love building innovative solutions and sharing my knowledge with the community. Follow along for insights, tutorials, and updates on my coding journey!