Machine Learning : Dimensionality Reduction - Linear Discriminant Analysis (LDA) (Part 33)

Md Shahriyar Al Mustakim Mitul

LDA is commonly used as a dimensionality reduction technique. It's used in the pre-processing step for pattern classification and machine learning algorithms, and its goal is to project a data set onto a lower-dimensional space.

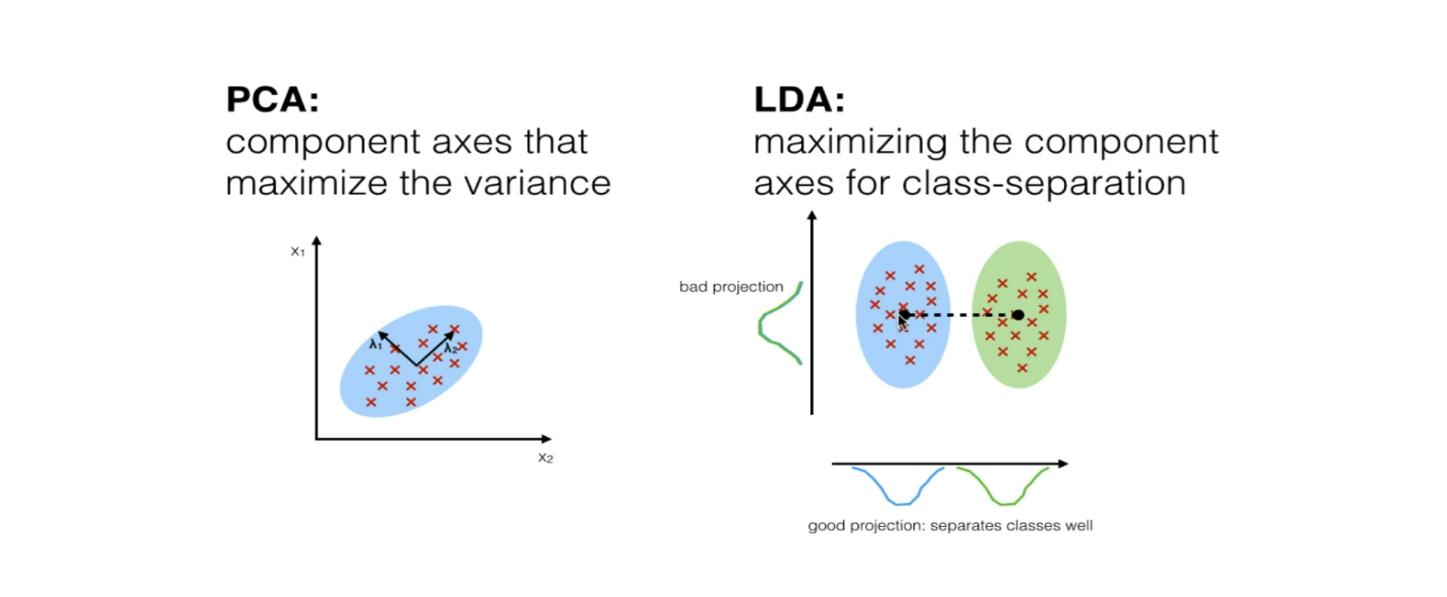

But what's the difference with PCA then?

With LDA, in addition to finding the component axes, we are interested in the axes that maximize the separation between multiple classes.

The goal of LDA is to project a feature space onto a smaller subspace while maintaining the class-discriminatory information.
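To make that goal a bit more concrete, here is a minimal NumPy sketch of one common way to compute such a projection (Fisher's criterion: maximize between-class scatter relative to within-class scatter). This is not the code used later in this post. The function name `lda_projection` and the toy data are made up for illustration, and scikit-learn's own implementation works differently internally.

```python
import numpy as np

def lda_projection(X, y, n_components):
    """Return a (n_features, n_components) projection matrix (illustrative sketch)."""
    classes = np.unique(y)
    n_features = X.shape[1]
    overall_mean = X.mean(axis=0)

    S_w = np.zeros((n_features, n_features))  # within-class scatter
    S_b = np.zeros((n_features, n_features))  # between-class scatter
    for c in classes:
        X_c = X[y == c]
        mean_c = X_c.mean(axis=0)
        centered = X_c - mean_c
        S_w += centered.T @ centered
        diff = (mean_c - overall_mean).reshape(-1, 1)
        S_b += X_c.shape[0] * (diff @ diff.T)

    # Eigenvectors of pinv(S_w) @ S_b give the most class-separating directions
    eigvals, eigvecs = np.linalg.eig(np.linalg.pinv(S_w) @ S_b)
    order = np.argsort(eigvals.real)[::-1]  # largest eigenvalue first
    return eigvecs.real[:, order[:n_components]]

# Toy data (hypothetical): three classes in 4 dimensions, 30 samples each
rng = np.random.default_rng(0)
X_toy = np.vstack([rng.normal(loc=m, scale=1.0, size=(30, 4)) for m in (0.0, 3.0, 6.0)])
y_toy = np.repeat([0, 1, 2], 30)

W = lda_projection(X_toy, y_toy, n_components=2)
X_toy_lda = X_toy @ W  # project 4 features down to 2
print(X_toy_lda.shape)  # (90, 2)
```

The labels `y_toy` are used to build both scatter matrices, which is what makes the projection class-aware.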

Both PCA and LDA are linear transformation techniques used for dimensionality reduction.

PCA is an unsupervised algorithm, but LDA is supervised because it uses the dependent variable (the class labels).
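In scikit-learn, this difference shows up directly in how the two are fitted. The sketch below assumes scikit-learn's built-in Wine dataset (`load_wine`) as a stand-in; the dataset used in this series may differ.

```python
from sklearn.datasets import load_wine
from sklearn.decomposition import PCA
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis as LDA

# Assumption: the built-in Wine data (178 samples, 13 features, 3 classes)
X, y = load_wine(return_X_y=True)

# PCA is unsupervised: only the features are passed in
X_pca = PCA(n_components=2).fit_transform(X)

# LDA is supervised: the class labels y are needed as well
X_lda = LDA(n_components=2).fit_transform(X, y)

print(X_pca.shape, X_lda.shape)  # (178, 2) (178, 2)
```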

In PCA, we're looking for the principal component axes, the directions along which the data varies the most, without taking the classes into account.

In LDA, we're looking for class separation, and I think this visualization makes the difference between the two most clear.

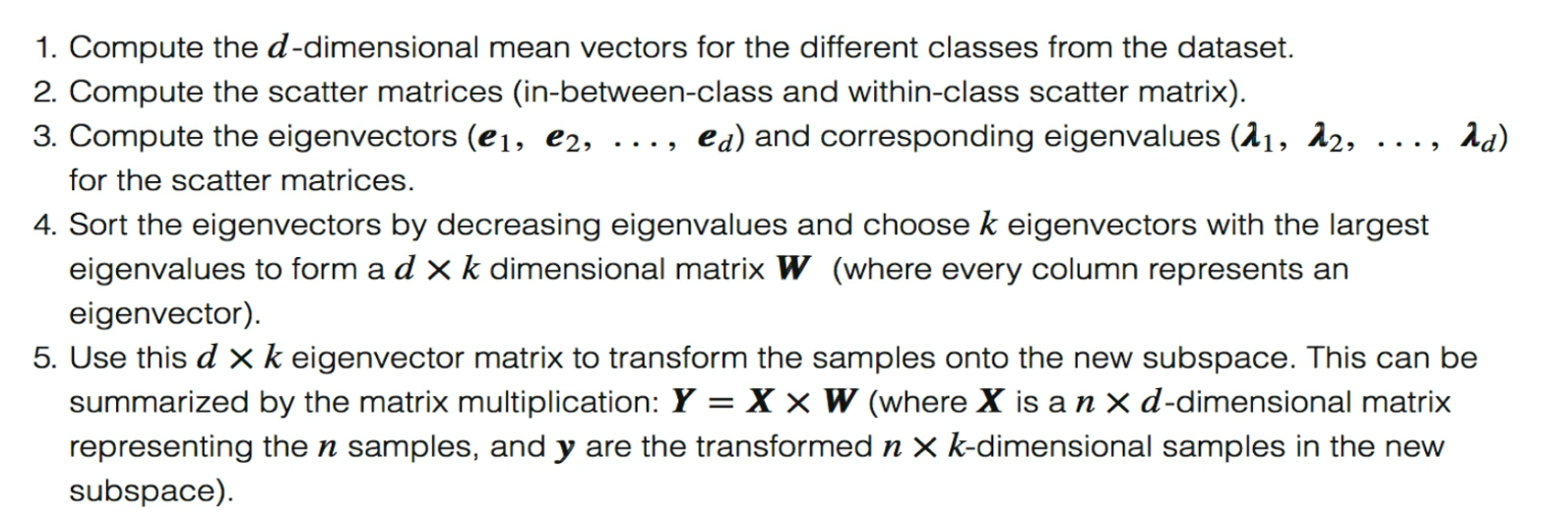

Steps:

Let's code this down

Problem Statement

Check the PCA problem statement, and the reason why we set n_components to 2, here

This is the code for LDA

Import the LDA class

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis as LDA

Create the lda object

lda = LDA(n_components = 2)
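One thing worth knowing about `n_components` in LDA: it cannot be larger than min(number of features, number of classes - 1). Here is a small sketch of that limit, again assuming the built-in Wine dataset (3 classes) as a stand-in and a recent scikit-learn version, where going over the limit raises a `ValueError` at fit time.

```python
import numpy as np
from sklearn.datasets import load_wine
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis as LDA

X, y = load_wine(return_X_y=True)  # assumption: 13 features, 3 classes

# Upper bound on the number of discriminant axes
max_components = min(X.shape[1], len(np.unique(y)) - 1)
print(max_components)  # 2

try:
    LDA(n_components=3).fit(X, y)  # one more than the limit
    too_many_ok = True
except ValueError as err:
    too_many_ok = False
    print("rejected:", err)
```

So with three classes, 2 is the most LDA can give us.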

Fit and transform X_train

X_train = lda.fit_transform(X_train, y_train)

Transform X_test

X_test = lda.transform(X_test)
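Put together, the steps above might look like the following self-contained sketch. The data loading, the split parameters, and the scaling step are assumptions here (built-in Wine dataset, 80/20 split), since the original setup lives in the PCA post.

```python
from sklearn.datasets import load_wine
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis as LDA
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Assumption: built-in Wine data as a stand-in for the dataset in this series
X, y = load_wine(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y
)

# Feature scaling, fitted on the training set only
sc = StandardScaler()
X_train = sc.fit_transform(X_train)
X_test = sc.transform(X_test)

# LDA learns the projection from the training features and labels...
lda = LDA(n_components=2)
X_train = lda.fit_transform(X_train, y_train)
# ...and the test set is only transformed, never used for fitting
X_test = lda.transform(X_test)

print(X_train.shape, X_test.shape)
```

Fitting only on the training set keeps information from the test set out of the projection.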

Why we did that was explained in the PCA blog as well.

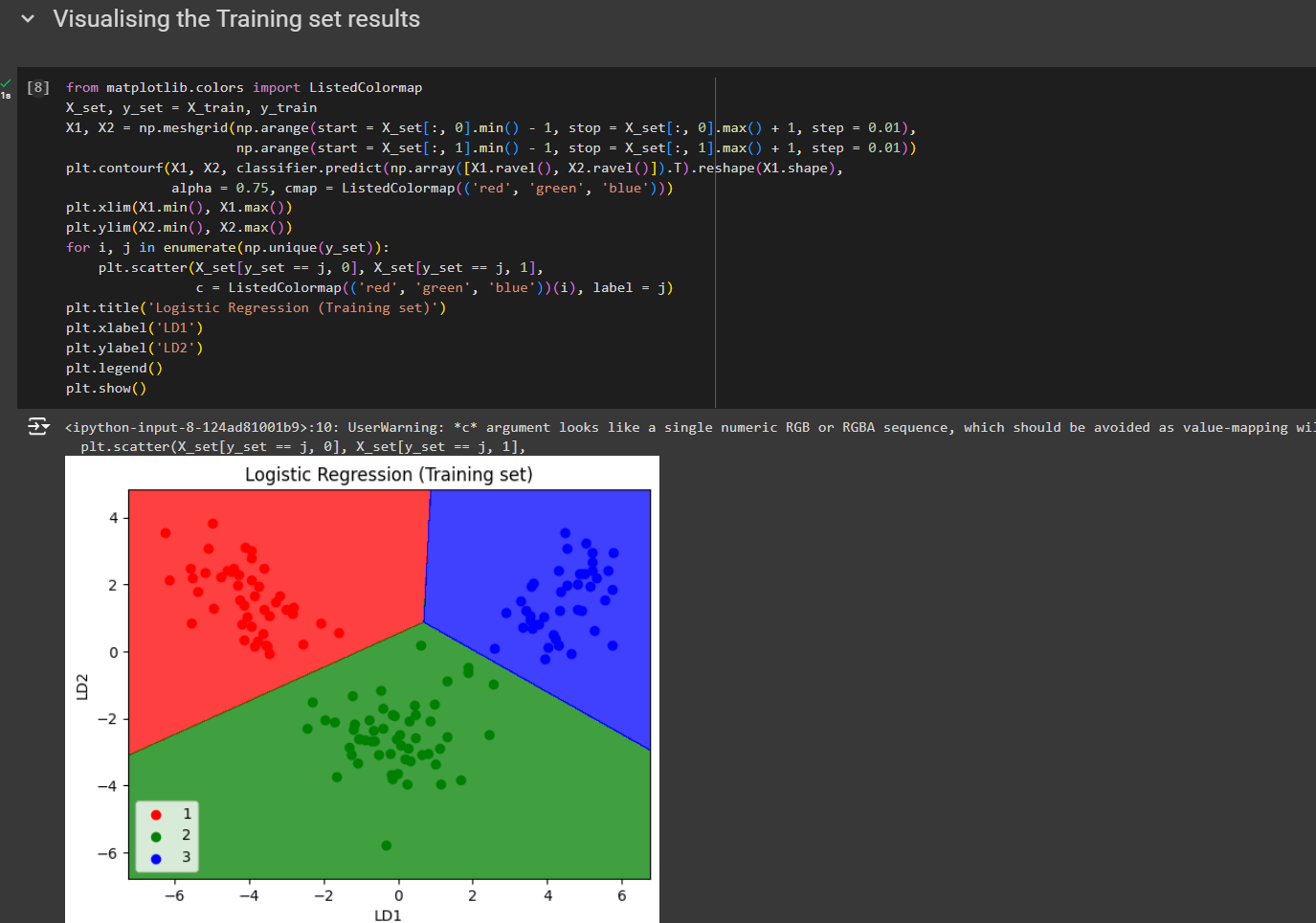

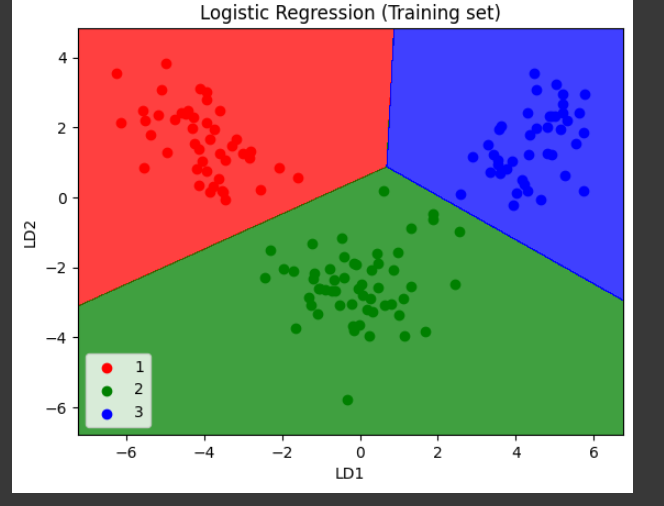

Let's check the results now for X_train

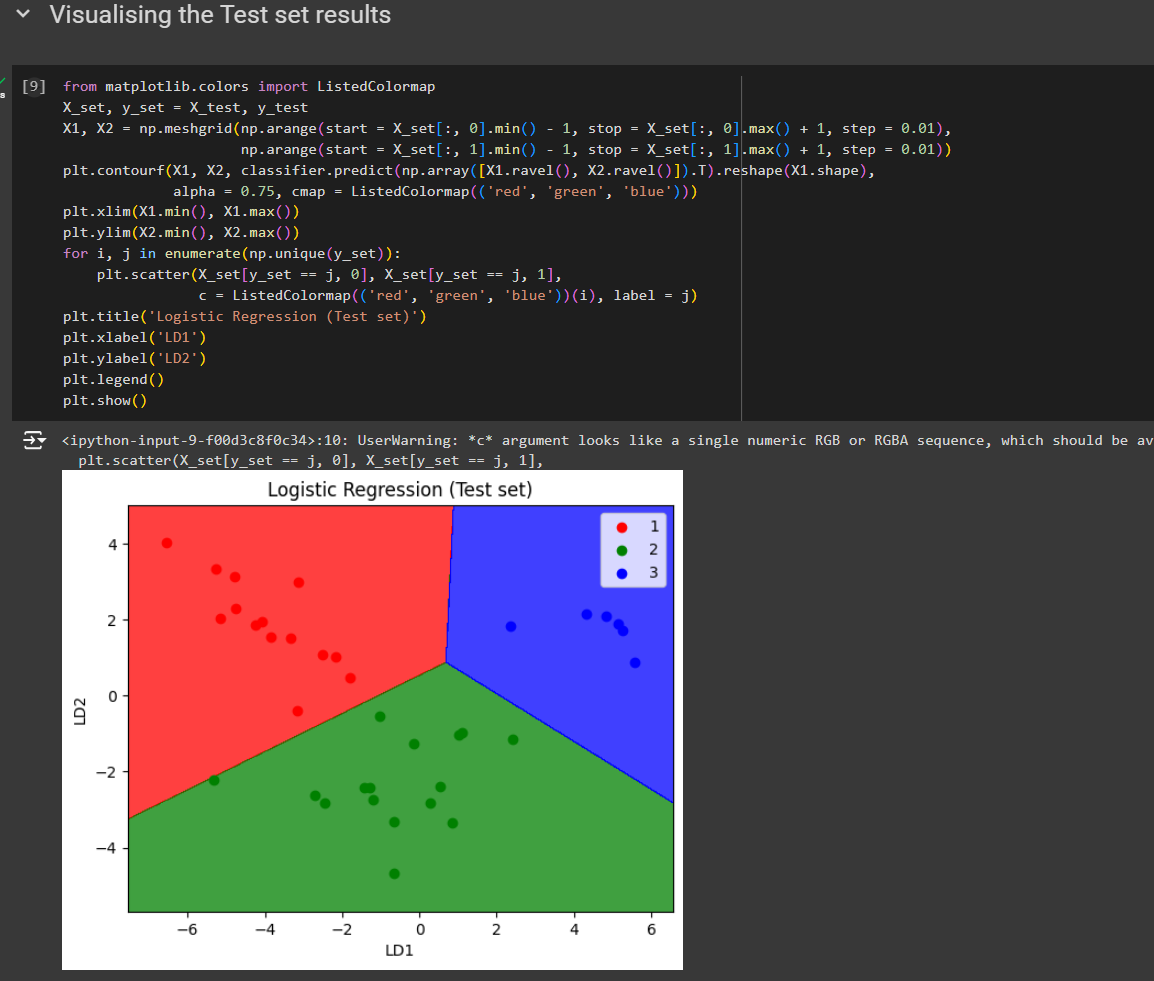

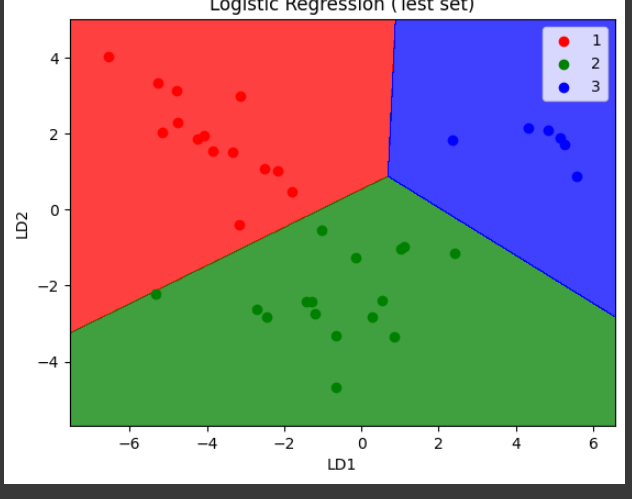

For X_test

Now, the question is why LDA when we have PCA?

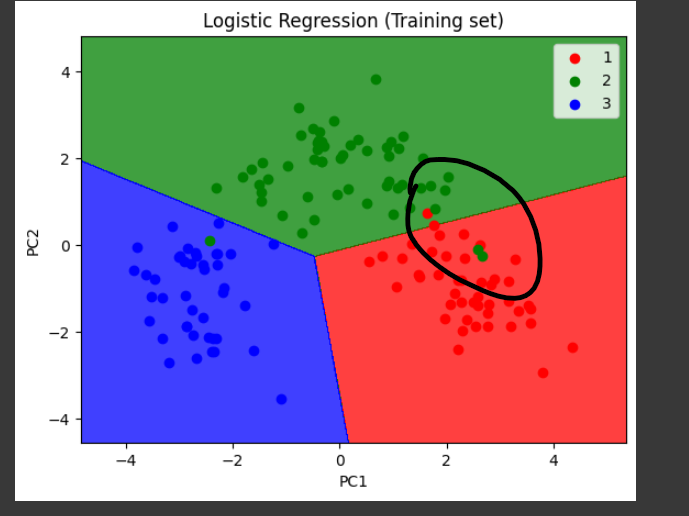

If you visualize PCA, you can see that the training cluster was like this

We had some anomalies there, but with LDA we don't have them.

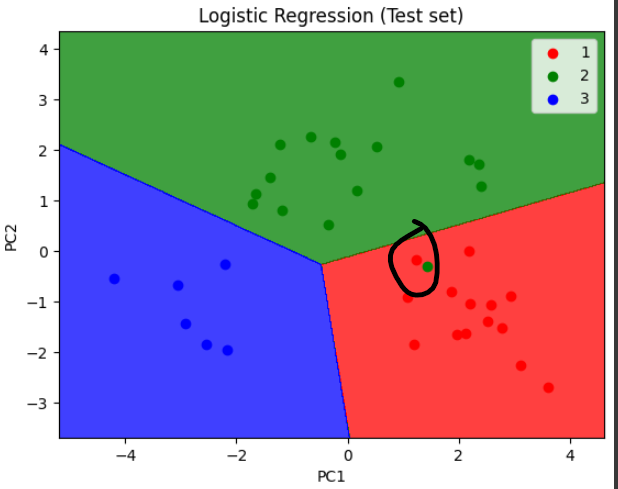

Again, for the test cluster we had this in PCA:

Again, some anomalies.

But this didn't happen with LDA.

Awesome!

So, we reduced the dimensions, and on this dataset the LDA result is more accurate than the PCA one.
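If you want to check that kind of comparison with numbers instead of plots, something like the sketch below could work. Everything here is an assumption for illustration: the built-in Wine dataset, the 80/20 split, and logistic regression as the classifier. The scores you get depend on the data and the split, so LDA coming out ahead is not guaranteed in general.

```python
from sklearn.datasets import load_wine
from sklearn.decomposition import PCA
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis as LDA
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_wine(return_X_y=True)  # assumed stand-in dataset
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y
)

scores = {}
for name, reducer in [("PCA", PCA(n_components=2)), ("LDA", LDA(n_components=2))]:
    # Same scaler and classifier for both; only the reduction step differs
    model = make_pipeline(StandardScaler(), reducer, LogisticRegression(random_state=0))
    model.fit(X_train, y_train)
    scores[name] = accuracy_score(y_test, model.predict(X_test))

print(scores)  # test-set accuracy for each reduction method
```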

Code from here
