Tesla T4: Analyzing Its Role in AI and Machine Learning

Spheron Network

Table of contents

- Introduction to the Tesla T4

- The Role of the Tesla T4 in AI

- Machine Learning and the Tesla T4

- Key Features of the Tesla T4

- Performance Benchmarks

- Deployment Scenarios

- The Impact of the Tesla T4 on AI and ML Development

- Tesla T4 vs. Competitors

- Challenges and Considerations

- Future Prospects for the Tesla T4

- Conclusion

- FAQs

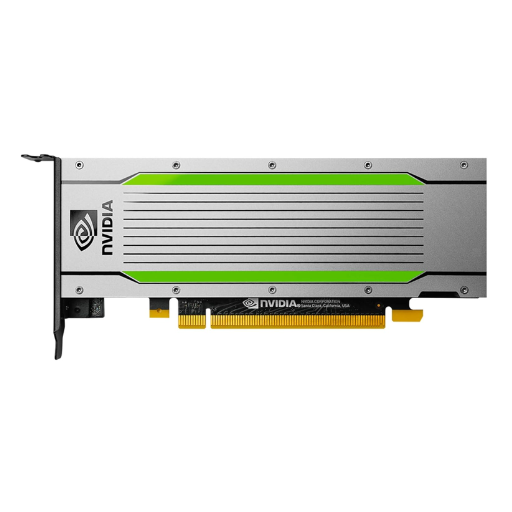

The rapid advancements in artificial intelligence (AI) and machine learning (ML) have brought forth a slew of powerful tools and technologies designed to optimize and accelerate these processes. Among these is the Tesla T4, an AI inference accelerator from NVIDIA that has become a crucial player in AI and ML. This article delves into the capabilities of the Tesla T4, its importance in AI and ML, and how it stands out in a crowded market of AI accelerators.

Introduction to the Tesla T4

The Tesla T4 is built on NVIDIA's Turing architecture and is designed to deliver high performance and efficiency for AI inference workloads. Unlike its predecessors, the T4 is optimized for inference, that is, running a trained model to make predictions. It supports various AI applications, from image and video processing to natural language understanding and recommender systems.

Here’s a specification chart for the NVIDIA Tesla T4:

| Specification | Details |
| --- | --- |
| Architecture | NVIDIA Turing |
| Tensor Cores | 320 |
| CUDA Cores | 2,560 |
| GPU Memory | 16 GB GDDR6 |
| Memory Bandwidth | 320 GB/s |
| FP16 Performance | 65 TFLOPS |
| INT8 Performance | 130 TOPS |
| INT4 Performance | 260 TOPS |
| Form Factor | Single-slot, low-profile PCIe card |
| Power Consumption | 70 Watts |
| Multi-Precision Computing | FP32, FP16, INT8, INT4 |
| Peak FP32 Performance | 8.1 TFLOPS |
| NVENC/NVDEC | Yes, supports H.264 and HEVC |
| Thermal Solution | Passive |
| Bus Interface | PCI Express 3.0 x16 |
| Operating System Support | Linux, Windows |
| Dimensions | 6.6" x 2.7" (167.65 mm x 68.9 mm) |
| Weight | 285 g (0.63 lbs) |
| Deployment Environments | Data Centers, Edge, AI Inference, HPC |
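To put the peak figures from the table in perspective, the short sketch below derives two ratios: throughput per watt and the speedup of each precision over FP32. The numbers are copied from the table; the function names are illustrative, and because these are theoretical peaks, real workloads will usually land below them.

```python
# Peak figures copied from the specification table above.
PEAK_THROUGHPUT = {
    "FP32": 8.1,    # TFLOPS
    "FP16": 65.0,   # TFLOPS
    "INT8": 130.0,  # TOPS
    "INT4": 260.0,  # TOPS
}
BOARD_POWER_WATTS = 70.0


def peak_per_watt(precision: str) -> float:
    """Return peak tera-operations per second per watt for a precision."""
    return PEAK_THROUGHPUT[precision] / BOARD_POWER_WATTS


def speedup_over_fp32(precision: str) -> float:
    """Return the ratio of a precision's peak rate to the FP32 peak rate."""
    return PEAK_THROUGHPUT[precision] / PEAK_THROUGHPUT["FP32"]


if __name__ == "__main__":
    for name in PEAK_THROUGHPUT:
        print(
            f"{name}: {peak_per_watt(name):.2f} tera-ops/s per watt, "
            f"{speedup_over_fp32(name):.1f}x the FP32 peak"
        )
```

Each halving of the precision width doubles the quoted peak rate from FP16 down to INT4, which is why lower-precision inference is the main lever for this card.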

The Role of the Tesla T4 in AI

AI requires substantial computational power, and inference, where trained models are deployed in real-time applications, adds tight latency constraints. The Tesla T4, with its 320 Tensor Cores, is specifically engineered to accelerate inference workloads, making it a valuable asset for AI-driven companies. It is designed to deliver high throughput and low latency so that AI applications run smoothly and efficiently.

Machine Learning and the Tesla T4

Once trained, machine learning models need to be deployed efficiently to provide real-time results. The Tesla T4 excels in this area, offering performance several times faster than that of traditional CPUs. Its support for multi-precision computing, spanning FP32, FP16, INT8, and INT4 formats, lets it handle a wide range of ML workloads. This makes it an ideal choice for businesses looking to scale their ML operations.
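The trade-off behind reduced precision can be seen without a GPU. The sketch below uses only Python's standard `struct` module to store a value in 32-bit and 16-bit IEEE 754 formats and read it back. The helper names and the sample weight are illustrative, not taken from any NVIDIA tooling.

```python
import struct


def round_trip(value: float, fmt: str) -> float:
    """Store a Python float in the given struct format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def to_fp16(value: float) -> float:
    """Round a value to IEEE 754 half precision (16-bit)."""
    return round_trip(value, "<e")


def to_fp32(value: float) -> float:
    """Round a value to IEEE 754 single precision (32-bit)."""
    return round_trip(value, "<f")


if __name__ == "__main__":
    weight = 0.1234567  # hypothetical model weight
    for label, convert in (("FP32", to_fp32), ("FP16", to_fp16)):
        stored = convert(weight)
        print(f"{label}: stored={stored!r} abs_error={abs(stored - weight):.2e}")
```

FP16 halves the storage per value at the cost of a larger rounding error, which many inference workloads tolerate well.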

Key Features of the Tesla T4

One of the standout features of the Tesla T4 is its versatility. It supports a wide range of AI and ML frameworks, including TensorFlow, PyTorch, and ONNX, making it easy to integrate into existing workflows. Additionally, the T4's compact size and energy efficiency allow it to be deployed in various environments, from data centers to edge devices. Its multi-precision capabilities further enhance flexibility, allowing it to handle everything from high-precision scientific calculations to high-throughput inferencing tasks.
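As a rough illustration of what running at INT8 involves, here is a minimal sketch of symmetric quantization with a single scale factor. It is a simplification under stated assumptions: production toolchains typically choose scales through calibration on representative data, and the function names here are hypothetical.

```python
def quantize_int8(values):
    """Map floats to int8 codes in [-127, 127] with one symmetric scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes, scale):
    """Recover approximate float values from int8 codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [-0.82, -0.11, 0.0, 0.37, 0.95]  # hypothetical weights
    codes, scale = quantize_int8(weights)
    restored = dequantize_int8(codes, scale)
    for original, code, approx in zip(weights, codes, restored):
        print(f"{original:+.4f} -> {code:+4d} -> {approx:+.4f}")
```

Each value is stored in one byte instead of four, and the reconstruction error stays within half a quantization step.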

Performance Benchmarks

When it comes to performance, the Tesla T4 delivers impressive results. In AI inference benchmarks, it consistently and significantly outperforms traditional CPU-based systems. For example, in image recognition tasks, the T4 can process thousands of images per second, while in natural language processing, it can serve requests in real time, providing rapid and accurate results.
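Figures such as images per second depend heavily on the model, batch size, and precision, so it is worth measuring them directly. The following is a minimal timing harness; `fake_infer` is a hypothetical stand-in for a real model call, and on a GPU the timed call would also need to wait for the device to finish before the clock is stopped.

```python
import statistics
import time


def benchmark(infer, batch, batch_size, warmup=3, iterations=20):
    """Time repeated calls to infer(batch) and summarize the results."""
    for _ in range(warmup):
        infer(batch)  # warm-up runs are not timed

    latencies = []
    for _ in range(iterations):
        start = time.perf_counter()
        infer(batch)
        latencies.append(time.perf_counter() - start)

    total = sum(latencies)
    return {
        "items_per_second": batch_size * iterations / total,
        "median_latency_ms": statistics.median(latencies) * 1000,
        "max_latency_ms": max(latencies) * 1000,
    }


def fake_infer(batch):
    """Hypothetical stand-in for a model: sums each item in the batch."""
    return [sum(item) for item in batch]


if __name__ == "__main__":
    batch = [[0.5] * 1024 for _ in range(32)]
    print(benchmark(fake_infer, batch, batch_size=len(batch)))
```

Reporting both throughput and latency matters because larger batches usually raise the former while lengthening the latter.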

Deployment Scenarios

The Tesla T4 is designed to be versatile and can be deployed in various scenarios. In data centers, it can accelerate AI workloads across a wide range of applications, from healthcare to finance. At the edge, its compact size and energy efficiency make it ideal for deployment in autonomous vehicles, smart cities, and IoT devices, where real-time processing is crucial.
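A simple way to reason about deployment is to ask how many cards fit within a given power envelope. The sketch below uses the 70 W figure from the specification table; the budget and host overhead values are hypothetical inputs, and the function name is illustrative.

```python
T4_BOARD_POWER_WATTS = 70  # from the specification table


def cards_within_budget(budget_watts, host_overhead_watts,
                        card_watts=T4_BOARD_POWER_WATTS):
    """Return how many cards fit after reserving power for the host system."""
    available = budget_watts - host_overhead_watts
    if available <= 0:
        return 0
    return int(available // card_watts)


if __name__ == "__main__":
    # Hypothetical server: 1,000 W budget, 300 W reserved for CPU, RAM, fans.
    print(cards_within_budget(1000, 300))
```

The count is limited in practice by available PCIe slots and chassis airflow as well, which this sketch does not model.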

The Impact of the Tesla T4 on AI and ML Development

The Tesla T4 has significantly impacted the development of AI and ML. Its ability to deliver high performance in a compact and energy-efficient package has enabled businesses to deploy AI at scale, driving innovation across industries. From improving customer experiences through enhanced recommendation systems to enabling real-time decision-making in autonomous systems, the Tesla T4 is helping to push the boundaries of what is possible with AI.

Tesla T4 vs. Competitors

In a market crowded with AI accelerators, the Tesla T4 stands out due to its balance of performance, efficiency, and versatility. While other high-performance accelerators like Google’s TPU and AMD’s Radeon Instinct exist, the Tesla T4’s ability to support a wide range of frameworks and its superior inference capabilities give it an edge in many applications. Its adoption by leading tech companies and its deployment in various AI-driven industries underscore its importance.

Challenges and Considerations

Despite its many advantages, deploying the Tesla T4 comes with challenges. One of the primary considerations is cost, as deploying multiple T4 units in a data center can be a significant investment. Additionally, while each card draws only 70 W, its passive thermal solution depends on server airflow, so large deployments still require adequate power and cooling infrastructure, which can be a limiting factor in some scenarios. Furthermore, as AI models continue to grow in complexity, demand for more powerful hardware keeps rising, and newer, more advanced accelerators may surpass the T4.
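Model growth can be made concrete with a back-of-the-envelope memory check against the card's 16 GB. The sketch below estimates weight storage per precision; the 20% reserve for activations and workspace is a hypothetical placeholder rather than a measured figure, and 16 GB is treated as 16 GiB for simplicity.

```python
BYTES_PER_PARAMETER = {"FP32": 4.0, "FP16": 2.0, "INT8": 1.0, "INT4": 0.5}
T4_MEMORY_BYTES = 16 * 1024**3  # 16 GB from the spec table, assumed to mean GiB


def weight_bytes(num_parameters, precision):
    """Return the bytes needed to store the model weights alone."""
    return num_parameters * BYTES_PER_PARAMETER[precision]


def fits_on_t4(num_parameters, precision, overhead_fraction=0.2):
    """Check whether weights fit after reserving a fraction of memory.

    overhead_fraction is a hypothetical reserve for activations and workspace.
    """
    usable = T4_MEMORY_BYTES * (1 - overhead_fraction)
    return weight_bytes(num_parameters, precision) <= usable


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical 7-billion-parameter model
    for precision in BYTES_PER_PARAMETER:
        size_gib = weight_bytes(params, precision) / 1024**3
        print(f"{precision}: {size_gib:.1f} GiB, fits={fits_on_t4(params, precision)}")
```

Under these assumptions, a model that does not fit at FP16 may still fit at INT8, which ties memory limits back to the card's multi-precision support.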

Future Prospects for the Tesla T4

The Tesla T4 is poised to remain a critical component in AI and ML infrastructure. The demand for efficient and powerful inference accelerators will only increase as AI continues to evolve. NVIDIA’s commitment to ongoing development and improvement of its hardware ensures that the T4 will continue to be relevant, even as newer technologies emerge. Moreover, with the increasing adoption of AI across industries, the T4’s role in facilitating these advancements cannot be overstated.

Conclusion

The Tesla T4 has firmly established itself as a leader in the AI and ML landscape. Its powerful performance, versatility, and efficiency make it an essential tool for businesses looking to leverage AI and ML in their operations. As AI continues to permeate various industries, the T4’s ability to accelerate inference workloads will be crucial in driving innovation and maintaining competitive advantage.

FAQs

What makes the Tesla T4 different from other AI accelerators?

The Tesla T4 is optimized for AI inference, offering a balance of high performance, efficiency, and versatility that sets it apart from other accelerators.

Can the Tesla T4 be used to train AI models?

While the T4 can be used for some training tasks, it is primarily designed for inference, where it excels in delivering real-time AI processing.

What industries benefit most from the Tesla T4?

The Tesla T4 is used across various industries, including healthcare, finance, automotive, and retail, where AI-powered applications require efficient inference processing.

Is the Tesla T4 suitable for edge deployment?

Yes, the Tesla T4’s compact size and energy efficiency make it ideal for edge deployment in environments like autonomous vehicles and IoT devices.

What are the future developments expected for the Tesla T4?

As AI technology evolves, the Tesla T4 will continue to be enhanced with improved performance and capabilities, ensuring its relevance in the ever-changing AI landscape.
