Python Open-Source Development: How I Build and Maintain Open-Source Repositories!

Sadra Yahyapour

Right now, you're probably using several open-source tools and benefiting from them. As an open-source enthusiast, I often follow a clear and reliable method for developing and maintaining my Python repositories.

In this article, I will walk through the steps I take to create a clean and impressive Python repository with over 90% code coverage and an excellent Developer Experience (DX).

First, I Ask...

Before I take any steps, I have to ask myself a few questions. Initially, I think about my users and determine who I am releasing the tool for. Am I developing a tool for developers, like a package or library? Or am I working on a service for users with little to no programming knowledge?

Will I be the only developer working on this tool, or will it be open for contributions from developers of all experience levels? Which development flow will I follow?

Regardless of the tools, these are the basic initial questions I ask before starting. Today, we'll explore the steps for creating a sample open-source repository that other developers can contribute to and develop.

We will explore the tools I use, how I use them, and the value they bring to your workspace and repository.

I Use Git & GitHub

There are many version control systems out there. I prefer Git because GitHub is built around it and it's easy for beginners to learn. Some other tools on my machine also rely on Git, so for me it's the perfect solution.

DX Matters A Lot

A good developer experience is something not all open-source contributors get to enjoy, often because of poor code quality and painful development flows in some projects.

For some repositories, it takes days to understand the project's flow and build momentum to contribute. On the other hand, there are very neat projects where it only takes a few minutes to find the area you want to contribute to.

It's about documentation, clean code, excellent code structure, and a solid design. These key concepts help you find the issue you want to fix in someone's repository very quickly.

Tips For Improving the Development Experience

As a new contributor, what bothers you the most about a repository you just switched to? What features would you like that new repository to have to make you feel great about returning and contributing even later?

It's very common not to know the entire codebase of a repository. It might even be a whole framework you want to contribute to, and studying all of it before making a change is not practical. So how would you know whether your change is safe and doesn't break another part of the codebase?

In the next sections, we'll explore tools and best practices to help you maintain a clean, solid Python repository that every developer would love to open pull requests on.

Testing & Code Coverage

In the open-source world, you don't know much about your contributors' expertise levels. That's why reliability is crucial. Testing every change in your repository helps ensure your code's reliability. While it's not a perfect solution, it will catch many issues and vulnerabilities at the right time.

It doesn't really matter which development flow you follow, whether it's TDD or DDD, or if you write tests after your components are working. Make sure all related components are tested with each change.

Now, whenever someone makes a change in your repository on their local machine, they can test that change by running a single command to ensure everything is fine and the codebase is still working as expected.

They don't have to manually test every part of the project, and neither do you. But how do I achieve this in my repositories?

In a Python repository, I usually use either the standard unittest library or pytest, which is an excellent tool for writing unit tests.
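To make this concrete, here is a minimal sketch of what a pytest-style test file can look like. The `normalize_username` helper is hypothetical and only exists for illustration; it is not taken from any of my repositories. The tests use plain `assert` statements, which is the style pytest collects from functions named `test_*`.

```python
# Hypothetical function under test: a tiny helper a project might ship.
def normalize_username(raw: str) -> str:
    """Trim surrounding whitespace and lowercase a username."""
    cleaned = raw.strip().lower()
    if not cleaned:
        raise ValueError("username must not be empty")
    return cleaned


# pytest collects functions named test_* and treats a plain assert as the check.
def test_normalize_username_strips_and_lowercases():
    assert normalize_username("  Sadra ") == "sadra"


def test_normalize_username_rejects_blank_input():
    # Written without pytest.raises so the sketch has no third-party imports.
    try:
        normalize_username("   ")
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for blank input")
```

Saved as something like tests/test_usernames.py (a made-up path), running pytest from the repository root would pick up both tests.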

On top of that, I use Coverage.py to check how much of my code is covered by tests. It's not the smartest solution, but it works well for small to medium-sized projects.
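If the idea of line coverage is new to you, the toy sketch below may help. It is not how Coverage.py is implemented and is far less capable; it only uses the standard `sys.settrace` hook to record which lines of one function ran. The `classify` function and the `executed_offsets` helper are made up for this illustration.

```python
import sys


def classify(n):
    if n < 0:
        return "negative"
    return "non-negative"


def executed_offsets(func, *args):
    """Run func(*args) and return the line offsets (from the def line) that executed."""
    code = func.__code__
    hit = set()

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None  # ignore every other function
        if event == "line":
            hit.add(frame.f_lineno - code.co_firstlineno)
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(previous)  # restore whatever tracer was active before
    return hit


# A "test suite" that only exercises the non-negative path...
covered = executed_offsets(classify, 5)
# ...never reaches offset 2, the `return "negative"` line.
missed = {1, 2, 3} - covered
print(f"covered {len(covered)}/3 lines, missed offsets: {sorted(missed)}")
```

A coverage report is essentially this bookkeeping done for every file in the project: the missed lines tell you which behavior no test has exercised yet.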

If you want to use a third-party tool with more options, details, and great accessibility in your repository, Codecov is a good solution for your Python projects.

Linting & Formatting

I don't think the importance of linting and formatting needs much explanation. For me, Ruff is hands down the best tool for keeping formatting and linting consistent across an entire repository. I love it because it handles both jobs, so I don't need a separate linter and formatter. Most of the time, I end up ignoring a few PEP 8 rules, but that's fine.

How do I get contributors to use Ruff and deliver their changes formatted and linted? That's covered in the next section.

I Use Pre-commit for Automated Checks

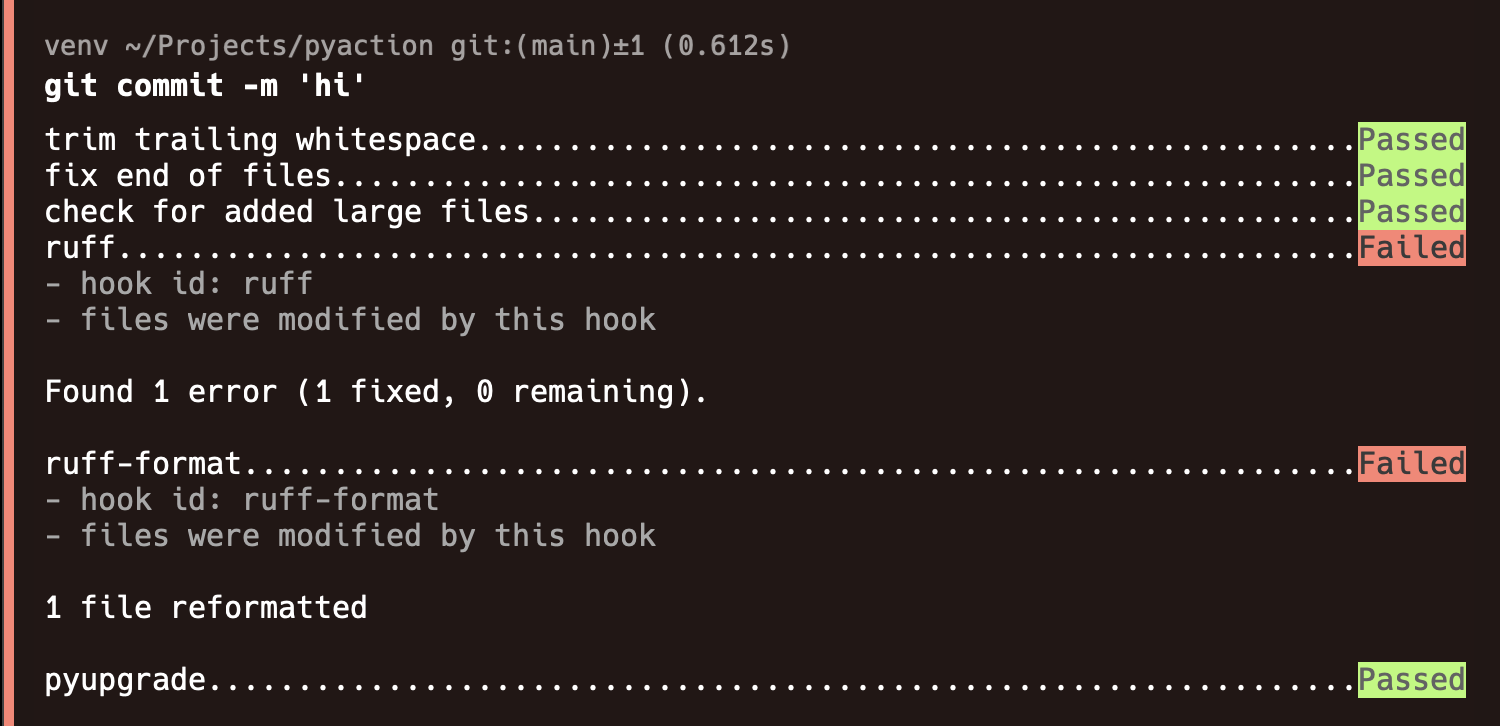

Pre-commit is a framework for managing Git hooks. Once it is enabled in a clone, it runs the configured checks automatically on every git commit. How does it help with open-source maintenance?

I use pre-commit to run Ruff and check the format and lint of any changes made by contributors. Actually, I don't run it myself; the contributors use it on their own!

As the maintainer of the repository, all I need to do is add a pre-commit configuration file to the root of my repository. Contributors then have to install pre-commit and enable it. From that point on, commits will only go through if Ruff runs successfully. If Ruff fails, the commit won't happen.
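The "commit only goes through if the check succeeds" behavior comes from how Git treats hooks: a pre-commit hook that exits with a non-zero status aborts the commit. The sketch below illustrates that rule with a hand-written runner. It is not how the pre-commit framework is implemented, and `run_checks` is a hypothetical helper; the stand-in commands exist only so the example runs without Ruff installed.

```python
import subprocess
import sys


def run_checks(commands):
    """Run each command in order; return the first non-zero exit code, else 0."""
    for command in commands:
        result = subprocess.run(command)
        if result.returncode != 0:
            return result.returncode
    return 0


# In a real hook the list could hold commands such as
# ["ruff", "check", "."] and ["ruff", "format", "--check", "."],
# assuming Ruff is installed, and the script would end with
# sys.exit(run_checks(...)).

# Stand-in commands (hypothetical) that simply succeed or fail:
passing = [sys.executable, "-c", "raise SystemExit(0)"]
failing = [sys.executable, "-c", "raise SystemExit(3)"]

print(run_checks([passing]))           # 0: the commit would proceed
print(run_checks([passing, failing]))  # 3: the commit would be blocked
```

With pre-commit you never write this script yourself; the configuration file lists the hooks and the framework installs and runs them for every contributor in the same way.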

If there are contributors who don't want to install pre-commit and add any hooks to the .git/hooks/ directory, that's still fine. We can set up a CI/CD pipeline to run pre-commit hooks and catch the linting and formatting issues when a PR is opened. We'll discuss that later.

Multiple Python Version Support

There are times when I want my repository to work in environments with different Python versions. Naturally, before any deployment, my project should be tested in all those environments, right?

I use Tox for that purpose. Think of tox as a manager for virtual environments (venvs) in your repository. You define a list of environments and a set of commands, and tox creates those virtual environments and runs the commands in each of them.

    [tox]
    env_list = py3{8,9,10,11,12}
    skipsdist = true
    skip_install = true

    [testenv]
    description = run tests
    deps = -rdev_requirements.txt
    commands =
        coverage run -m pytest {posargs:tests}
        coverage report -m
        coverage erase

This is a very basic tox.ini config file. It shows that I have five environments: py38, py39, py310, py311, and py312. For all these environments, the following process will occur.

1. Tox creates the environment.
2. Uses pip to install the dependencies listed in dev_requirements.txt (the -r dev_requirements.txt entry).
3. Runs coverage run -m pytest {posargs:tests}
4. Runs coverage report -m
5. Runs coverage erase

This procedure happens for each environment, so a single tox run goes through these steps five times, once per Python version.
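If the py3{8,9,10,11,12} notation looks cryptic, the following sketch shows how such a brace expression expands into the five environment names. It is a simplified illustration, not tox's actual parser, which supports more syntax than this; `expand_factors` is a hypothetical helper.

```python
import itertools
import re


def expand_factors(spec: str) -> list[str]:
    """Expand one tox-style generative name such as 'py3{8,9}' into concrete names."""
    # Split into literal text and {a,b,c} groups, keeping the groups.
    parts = re.split(r"(\{[^{}]*\})", spec)
    choices = [
        part[1:-1].split(",") if part.startswith("{") else [part]
        for part in parts
    ]
    # Every combination of one choice per part forms one environment name.
    return ["".join(combo) for combo in itertools.product(*choices)]


print(expand_factors("py3{8,9,10,11,12}"))
# ['py38', 'py39', 'py310', 'py311', 'py312']
```

Each resulting name maps to a Python version, which is why adding a new supported version is usually a one-character change in env_list.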

There is a cool fact about Tox. It creates virtual environments for each environment defined inside the tox.ini file. Running the tox command goes through the process, so if you've already run that command, you should have a .tox/ directory in the root of your project, containing all the environments.

    .tox/
    ├── py38/
    ├── py39/
    ├── py310/
    ├── py311/
    └── py312/

It makes the further tox calls way faster because the dependencies are already installed in the environments. Note that if you want to test your changes in all these environments, you should have all these Python versions installed on your machine so that Tox can create those environments and test your changes. I use pyenv for that purpose.

CI/CD Pipelines

What if the contributors forget to install pre-commit or run tox to make sure their changes are tested in all supported environments and don't break anything in the repository?

Well, we need to double-check and ensure everything happens correctly. Since I'm developing my repository on GitHub, I use GitHub Actions. Almost all open-source repositories follow the Fork Flow strategy for contributions. This means if you want to contribute to someone's repository, you first need to fork it, make your changes in your fork, and then open a pull request.

As a maintainer, you can run both tox and pre-commit inside those pull requests. But wait a second. Why not run pre-commit inside a new tox environment? That way, your pipeline would be easier to follow. If a contributor skips installing pre-commit locally, it's still part of the tox configuration.

    [tox]
    env_list = py3{8,9,10,11,12}, pre-commit # <- new
    ...

    [testenv:pre-commit]
    description = run pre-commit
    deps = pre-commit
    commands = pre-commit run --all-files --show-diff-on-failure

Now, our tox configuration has six environments, and in the last one, it first installs pre-commit and then runs pre-commit run --all-files --show-diff-on-failure. Nice!

There is a useful feature of Tox that I forgot to mention earlier. When you run tox, it only runs the environments for which you have the corresponding Python interpreter version installed on your machine. What does that mean?

If you only have Python 3.8 installed and you run tox, only the py38 test environment will be created, and the codebase will be tested in that environment. Tox skips the environments whose interpreters are missing and still exits with status code 0 as long as the environments it did run succeeded (this behavior is controlled by the skip_missing_interpreters setting). That's great.
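To get a feel for which environments would run on your machine, you can check which interpreters are on your PATH. The sketch below is only an approximation: tox's own interpreter discovery is more thorough, and the pythonX.Y naming assumed here is the Unix-style convention. `find_interpreters` is a hypothetical helper.

```python
import shutil


def find_interpreters(versions):
    """Map each 'X.Y' version to the path of pythonX.Y on PATH, or None if absent."""
    return {version: shutil.which(f"python{version}") for version in versions}


supported = ["3.8", "3.9", "3.10", "3.11", "3.12"]
for version, path in find_interpreters(supported).items():
    status = path if path else "missing (tox would skip this environment)"
    print(f"py{version.replace('.', '')}: {status}")
```

The output depends entirely on what is installed locally, which is exactly why the CI pipeline, where every version is available, has the final say.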

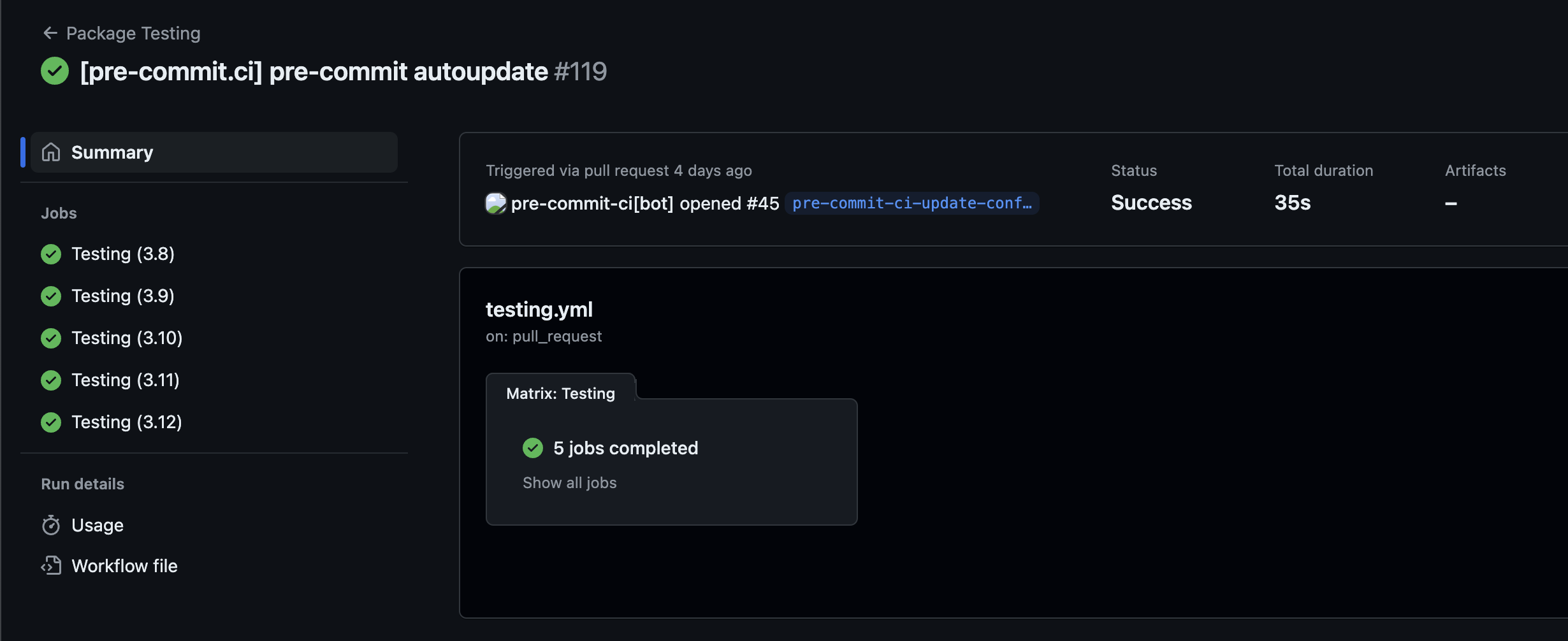

I use a matrix strategy in my GitHub Actions workflow to run tox once for each Python version.

    name: Testing
    on:
      pull_request:
        branches: [main]
    jobs:
      Testing:
        runs-on: ubuntu-latest
        strategy:
          matrix:
            python: ["3.8", "3.9", "3.10", "3.11", "3.12"]
        steps:
          - uses: actions/checkout@v4
          - name: Setup Python
            uses: actions/setup-python@v5
            with:
              python-version: ${{ matrix.python }}
          - name: Install tox
            run: pip install tox
          - name: Run tox
            run: tox -e py

Now, whenever someone opens a new pull request, a pipeline will get triggered and test the changes in all the environments.

We're almost there. The next section is optional. If you want to include documentation for your Python repository, then don't skip it.

Documentation

I've used Sphinx with reStructuredText (rST) format before. It's a good solution if you want to add quick documentation. You can gather and display information about internal APIs effectively.
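As a rough idea of what documenting an internal API looks like, here is a function with a docstring written in the reStructuredText field-list style that Sphinx can render when docstrings are pulled in through its autodoc extension. The `clamp` function is hypothetical and only serves as an example.

```python
def clamp(value: float, low: float, high: float) -> float:
    """Restrict ``value`` to the closed interval [``low``, ``high``].

    :param value: The number to restrict.
    :param low: The smallest allowed result.
    :param high: The largest allowed result.
    :raises ValueError: If ``low`` is greater than ``high``.
    :returns: ``value`` when it lies inside the interval, otherwise the nearest bound.
    """
    if low > high:
        raise ValueError("low must not be greater than high")
    return max(low, min(value, high))
```

Keeping the description next to the code means the rendered API reference is regenerated from the source, which makes it harder for the two to drift apart.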

You can then deploy your documentation on ReadTheDocs for free. But if you're looking for more customization and flexibility, my best advice would be MkDocs.

Material for MkDocs has taken the project further by adding more flexibility, color options, and visual plugins. Both MkDocs and Material for MkDocs support Markdown, which can speed up your workflow if you're not familiar with rST.

See in Practice

If you want to see all the tools and practices described in this article in action, I highly recommend checking out this repository of mine.

Conclusion

In conclusion, adopting best practices and utilizing tools to maintain Python repositories not only enhances developer experience (DX) but also contributes to the overall efficiency and quality of the software development process. By prioritizing simplicity, clean code, and thoughtful design decisions, developers can streamline their workflows, reduce technical debt, and foster a collaborative and inclusive development environment. Embracing these principles not only benefits the individual developer but also the entire team and the long-term success of the project.

Ultimately, investing in maintaining clean and well-organized code will pay off in the form of increased productivity, easier maintenance, and more robust and resilient software applications.

Written by

Sadra Yahyapour

A passionate software developer who enjoys sharing his thoughts with others and learning from them in return!