Quick Test : Fabric AI Skills As A Function

Sandeep Pawar

Sandeep PawarIn the last blog I wrote, I showed how to call the AI Skills endpoint in a Fabric notebook. Being able to call the endpoint programmatically creates many opportunities to integrate AI Skills in different applications. One that I thought of was using AI Skills as a function. Function Calling or Tools is a specific use case in Gen AI to create structured output based on a function or behavior instructed by the user. I am not referring to that as AI Skills can only return a table. Instead, what if you created a number of these AI Skills that are grounded in your data with specific functions built to get the intended output? You could serve/share these with end users who can call these functions to generate data/results. Think of these as macros in Excel. See the example below:

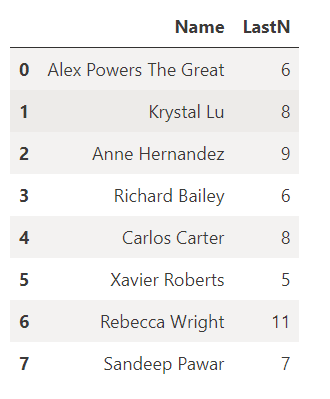

I have a table of customers and I need to get the total order amount for each customer for last 5 transactions and their last order date. Yes, you can easily write a Python function to achieve this but what if I could use natural language to get the data I need for my specific use case without knowing Python or any other language? AI Skills to the rescue.

| Name | LastN | |

| 0 | Krystal Lu | 11 |

| 1 | Anne Hernandez | 9 |

| 2 | Richard Bailey | 8 |

| 3 | Carlos Carter | 3 |

| 4 | Xavier Roberts | 12 |

| 5 | Rebecca Wright | 6 |

Structured Output

Below function is similar to the one I shared in the last blog with couple of changes:

Still using

Pydanticbut this time I am including schema and data validation to ensure the amount returned cannot be negative and date can be parse as a date. AI Skills returns the date as a string. This ensures that my downstream application receives valid (not necessarily accurate) data.The function returns the results as a JSON in the format:

#sample output { "headers": ["TotalOrderAmount", "LastPurchaseDate"], "data": [ { "TotalOrderAmount": 4347.0, "LastPurchaseDate": "2021-10-18" }, { "TotalOrderAmount": 1234.56, "LastPurchaseDate": null }, { "TotalOrderAmount": 5678.9, "LastPurchaseDate": "2023-05-12" } ] }This is to ensure I can use it further downstream in another applications, just a nice to have.

Ask AI Skills:

%pip install semantic-link pydantic --q import sempy.fabric as fabric from pydantic import BaseModel, field_validator from typing import List, Optional, Union, Any import pandas as pd import requests from datetime import datetime client = fabric.FabricRestClient() def ask_aiskill(workspace: str, aiskill: str, question: str) -> Optional[dict]: """ Sandeep Pawar | fabric.guru | Aug-08-2024 | v0.5 Returns results from AI Skills as a valid JSON object Parameters: - workspace (str): The workspace id - aiskill (str): AI skill id - question (str): The user question to pass to the AI skill in English. Returns: - JSON object with headers and validated values """ class ModelDetails(BaseModel): sqlQueryVariations: int showExecutedSQL: bool includeSQLExplanation: bool enableExplanations: bool enableSemanticMismatchDetection: bool executeSql: bool enableBlockAdditionalContextByLength: bool additionalContextLanguageDetection: bool fewShotExampleCount: int class Response(BaseModel): artifactId: str promptContextId: str queryEndpoint: str result: Union[str, float] ResultRows: Any ResultHeaders: List[str] ResultTypes: List[str] executedSQL: str additionalMessage: Optional[Union[str, dict]] prompt: str userQuestion: str modelBehavior: ModelDetails additionalContext: str class ValidatedRow(BaseModel): ## for schema and dta validation TotalOrderAmount: Optional[float] LastPurchaseDate: Optional[str] @field_validator('TotalOrderAmount') def check_total_order_amount(cls, value): if value is not None and value < 0: raise ValueError('TotalOrderAmount cannot be negative') return value @field_validator('LastPurchaseDate', mode='before') def validate_last_purchase_date(cls, value): if value is None: return value try: datetime.strptime(value, '%Y-%m-%d') except ValueError: raise ValueError('LastPurchaseDate must be a valid date in yyyy-mm-dd format or null') return value payload = {"userQuestion": question} try: wsid = fabric.resolve_workspace_id(workspace) except: print("Check workspace name/id") url = f"v1/workspaces/{wsid}/aiskills/{aiskill}/query/deployment" try: response = client.post(url, json=payload) result = response.json() aiskill_response = Response(**result) # Responses result_header = aiskill_response.ResultHeaders result_rows = aiskill_response.ResultRows # Validate and construct JSON validated_data = [] for row in result_rows: validated_row = ValidatedRow( TotalOrderAmount=row[0], LastPurchaseDate=row[1] ) validated_data.append(validated_row.dict()) return {'headers': result_header, 'data': validated_data} except requests.exceptions.HTTPError as h_err: print(f"HTTP error: {h_err}") except requests.exceptions.RequestException as r_err: print(f"Request error: {r_err}") except Exception as err: print(f"Error: {err}") return NoneUse AI Skills as a Function:

Apply above AI Skills as a function to below df:

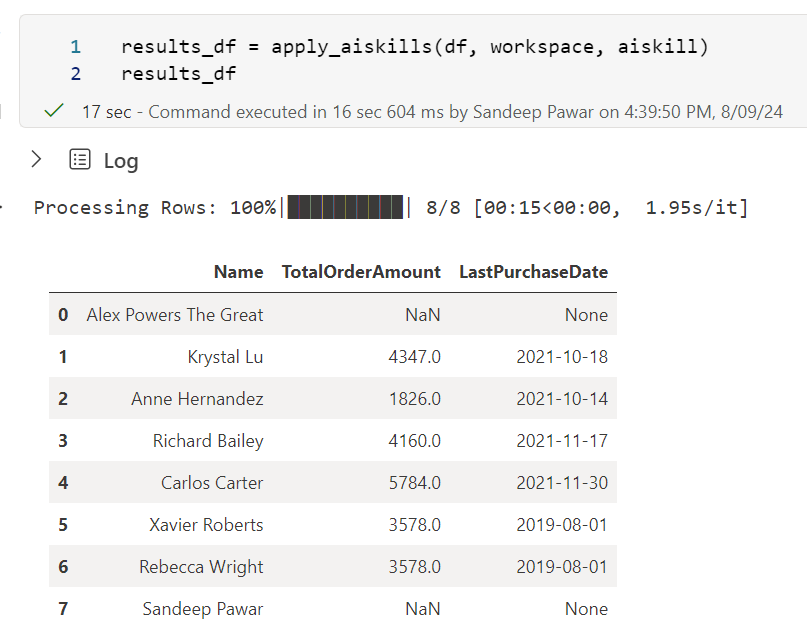

from tqdm import tqdm import pandas as pd def apply_aiskills(df, workspace, aiskill): """ Apply the AI Skills to the specified df to generate results, experimental. """ all_results = [] for _, row in tqdm(df.iterrows(), total=df.shape[0], desc="Processing Rows"): name = row['Name'] last_n = row['LastN'] question = f"total order amount by {name} and date of last purchase based on last {last_n} orders" result = ask_aiskill(workspace, aiskill, question) if result and 'data' in result: for item in result['data']: result_df = pd.DataFrame({ 'Name': [name], 'TotalOrderAmount': [item.get('TotalOrderAmount')], 'LastPurchaseDate': [item.get('LastPurchaseDate')] }) all_results.append(result_df) else: result_df = pd.DataFrame({ 'Name': [name], 'TotalOrderAmount': [None], 'LastPurchaseDate': [None] }) all_results.append(result_df) # Combne teh dfs results_df = pd.concat(all_results, ignore_index=True) return results_df workspace = "fd91c6-xxxxx-89dbecdb27ea" aiskill = "846438f3-xxxxx-6210" results_df = apply_aiskills(df, workspace, aiskill) results_df

Here is the SQL query generated by AI Skills:

SELECT SUM(CAST(Quantity AS DECIMAL) * CAST(UnitPrice AS DECIMAL)) AS TotalOrderAmount, MAX(CAST(OrderDate AS DATE)) AS LastOrderDate

FROM (

SELECT TOP 5 Quantity, UnitPrice, OrderDate

FROM [dbo].[sales]

WHERE FirstName = 'Krystal' AND LastName = 'Lu'

ORDER BY OrderDate DESC

) subquery;

There you have it. Again, in this case it would have been easier to just write a Python function but here are the applications where I think this could potentially be used for :

Being able to ask natural language questions and generate structured data

Integrate in other applications

This AI Skills is grounded in lakehouse data but like Azure AI Copilot Studio or Google AI Studio what if AI Skills also supported unstructured data? That would be a game changer. Being able to generate structured responses like above from varying data sources (Lakehouse, Files, SharePoint, Onedrive etc.) would be fantastic.

Based on my tests, I am 99% sure I can build this as a Python function in Excel. In a notebook, you could use sparkSQL but what if you just published this AI Skills and user could just enter this as a function in an Excel cell and get the results? Amazing, right !!!

Notes

Take a look at the SQL query. There two columns in the table

FirstNameandLastName. I did not instruct the model to combine these two columns. My table has had just name, NL2SQL model combined it to meanName. I did add additional context that unit price and quantity are varchar in the table and sales amount, order amount, revenue should be calculated as quantity * unit price.This was more of an experiment than anything else. I am super excited for all the applications and opportunities that are possible.

I had to add instructions and give several SQL examples as few shot to ensure I get the response I want.

Here it is assumed that the user will systematically validate AI Skills before publishing. I will write more about it soon. It's a heavy and important topic so need to find more time.

I am not sure if I can batch the API calls to reduce latency, if anyone knows, let me know.

I would like to see an AI Skills Hub or Library in Fabric for discoverability. The hub can have all the published AI Skills I have access to, lineage of data sources used, example use cases, prompts used, examples, examples of where it doesn't work (based on developer's or user's submissions), validation etc. Making it open, discoverable, auditable and transparent will help gain trust and adoption.

I have few more experiments & applications in mind, stay tuned!

Subscribe to my newsletter

Read articles from Sandeep Pawar directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Sandeep Pawar

Sandeep Pawar

Principal Program Manager, Microsoft Fabric CAT helping users and organizations build scalable, insightful, secure solutions. Blogs, opinions are my own and do not represent my employer.