AI Power Crisis: The Hidden Costs of Our Future's Intelligence

nidhinkumar

Whoa! How cool is this image? It's a masterpiece. Was this music really generated using AI? It sounds so realistic. AI helps me write and copy code faster, and makes me dumber. Voila, AI helps me think like a human. How great is that!

"Listen, AI will be the future, and if you aren't in the race, I think you are lost as a human." These are the words you might hear from the visionless visionaries who think only about their profits rather than the next generations.

There are plenty of fools everywhere who have not learned from history.

In the generative AI race among OpenAI, Gemini, Copilot, and many others, you can witness the rise of datacenters springing up everywhere, which means the power needed to run and cool them is increasing day by day.

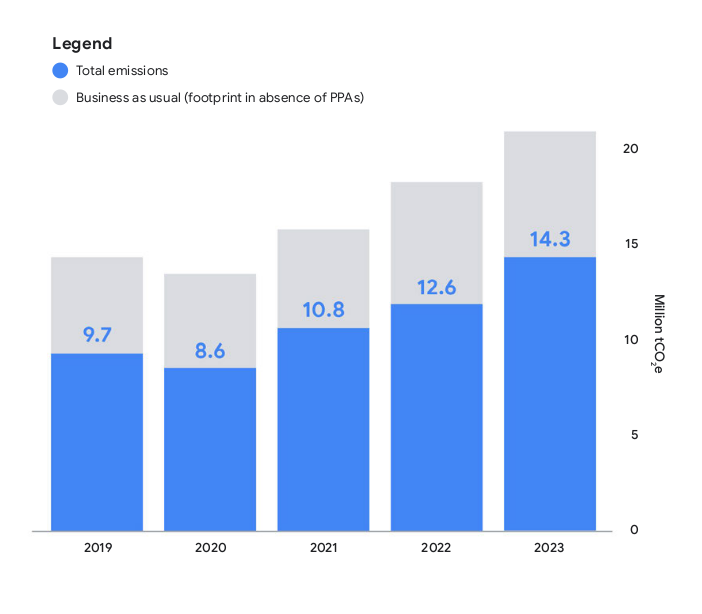

Hyperscalers building datacenters to accommodate AI have seen emissions skyrocket. This is not a new problem: back in 2019, estimates found that training one large language model produced as much CO2 as five gas-powered cars over their entire lifetimes.

Well, that's fine, so what's the problem? Just generate more power and the problem is solved, right? If that's your reply, think about the scenario below.

Even if we can generate enough power, our aging grids are unable to handle that load. Imagine a hot summer day when the demand for power is at its peak and the datacenters don't reduce their load, which leads to a blackout. Will you sit through a hot summer day without turning on the AC?

To know more about this crisis, let's understand the problem in depth. Welcome to the AI Power Crisis.

Power Chase

There are around 8,000 data centers globally, with the highest number in the US. That is still not enough for the demand coming from AI-specific applications, and demand is expected to increase steadily by 15 to 20% every year until 2030.
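To get a feel for what 15 to 20% yearly growth means once it compounds, here is a rough back-of-the-envelope sketch. The base year of 2024 and the function name `demand_multiplier` are my own assumptions for illustration; the article only gives the growth range and the 2030 horizon.

```python
def demand_multiplier(annual_growth: float, years: int) -> float:
    """Return how many times larger demand is after compounding yearly growth."""
    return (1.0 + annual_growth) ** years


BASE_YEAR = 2024    # assumption: not stated in the article
TARGET_YEAR = 2030  # horizon mentioned in the article
years = TARGET_YEAR - BASE_YEAR

# The article's range: 15% to 20% growth per year.
for rate in (0.15, 0.20):
    multiplier = demand_multiplier(rate, years)
    print(f"{rate:.0%} per year for {years} years -> {multiplier:.2f}x demand")
```

Under these assumptions, demand ends up somewhere between roughly 2.3 and 3 times today's level by 2030, which is why a "steady" yearly increase is a bigger deal than it sounds.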

Google's latest environmental report states that its greenhouse gas emissions rose nearly 50% from 2019 to 2023.

Microsoft's total emissions rose nearly 30% from 2020 to 2024. Even this increase in emissions won't stop the datacenters; they will do whatever they can to get the power capacity.

One approach they are looking at is building datacenters where resources such as renewable energy (wind or solar) are more plentiful. Some AI companies and data centers are even generating power on-site using this approach.

Renewable Energies

AI companies and data centers are making efforts to generate their own power on-site, but they still face the challenge of transmitting that power from where it's generated to where it's consumed. Some companies are experimenting with renewable and low-carbon energy sources like solar, nuclear, and geothermal power to generate electricity on-site. For instance, OpenAI's CEO Sam Altman has invested in solar and nuclear startups like Oklo. Microsoft and Google have also signed deals with nuclear fusion and geothermal startups like Helion and Fervo, respectively.

Hardening the Grid

Data centers themselves are also generating their own power, such as Vantage's 100-megawatt natural gas power plant in Virginia. However, even when enough power can be generated, the aging grid is often ill-equipped to handle transmitting it, leading to the need for grid hardening and solutions like predictive software to reduce transformer failures. VIE Technologies provides one such solution, which can predict failures and determine which transformers can handle more load. The bottleneck often occurs in getting power from the site where it's generated to where it's consumed, and adding new transmission lines is a costly and time-consuming solution. The aging transformers, which are a common cause of power outages, are expensive and slow to replace.

Image Source: VIE Technologies

VIE's business has tripled since ChatGPT came onto the scene in 2022.

Cooling down servers

The massive power draw of generative AI is also a concern because of its water intensity. The water needs of AI computing have shocked researchers: every 10 to 50 ChatGPT prompts burn through roughly what's found in a standard 16-ounce water bottle. Training AI draws even more power and generates even more heat, and cooling the servers is essential to prevent overheating. Global AI demand is projected to account for up to 6.6 billion cubic meters of water withdrawal by 2027.
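The bottle figure is easier to picture per prompt. Here is a small sketch that converts the "10 to 50 prompts per 16-ounce bottle" estimate into millilitres per prompt. The helper name `water_per_prompt_ml` is made up for this example, and I am assuming US fluid ounces (about 29.57 ml each).

```python
ML_PER_US_FL_OZ = 29.5735  # assumption: the bottle size is in US fluid ounces


def water_per_prompt_ml(bottle_oz: float, prompts_per_bottle: int) -> float:
    """Millilitres of water per prompt if one bottle covers the given number of prompts."""
    return bottle_oz * ML_PER_US_FL_OZ / prompts_per_bottle


BOTTLE_OZ = 16  # standard bottle size mentioned in the article

# The article's range: one bottle per 10 to 50 prompts.
for prompts in (10, 50):
    ml = water_per_prompt_ml(BOTTLE_OZ, prompts)
    print(f"1 bottle per {prompts} prompts -> about {ml:.1f} ml per prompt")
```

So, under these assumptions, each prompt costs somewhere between about 9.5 ml and 47 ml of water. That is tiny for one question, but not when you multiply it by the number of prompts sent every day.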

Cooling data centers for the rapidly growing AI industry is a challenge in terms of both water and power consumption. The industry is exploring alternative cooling methods, such as applying cool liquid directly to chips or submerging servers under the ocean.

Microsoft Underwater Datacenter (Image Source: Microsoft)

ARM Based Chips

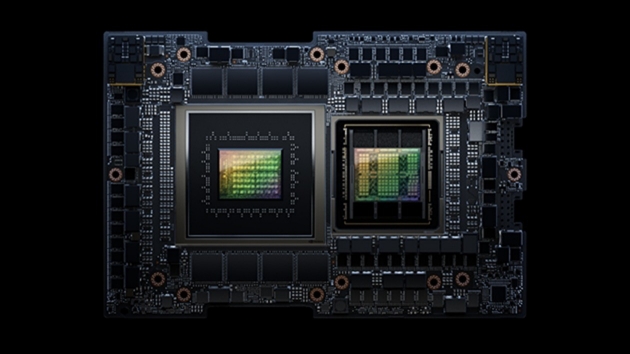

However, the speed at which this industry is growing makes it challenging to implement these solutions. Companies like Microsoft, Amazon, and Google are adopting ARM-based specialized processors for their data centers to save power. ARM's power efficiency has made it increasingly popular in the tech industry, with significant power savings reported by companies like AWS and Nvidia. The potential energy savings from running AI on devices instead of servers could help data centers build more and catch up with AI's insatiable appetite for power. Despite the challenges, there is a lot of growth ahead in this industry.

NVIDIA GH200 Grace Hopper Superchip (Image Source: NVIDIA)

No matter what new innovation comes to ease our lives, the moment it starts to affect living beings and nature, it will go back to square one, because

Nature knows how to heal on its own
