Rate limiting AWS Lambda invocation with AWS SQS event source

Aparna Vikraman

Overview

AWS Lambda gives developers serverless compute with built-in scalability and is designed for agility, performance, cost efficiency, and security. But it comes with its own challenges. In this blog, we will look at the current scaling behavior of AWS Lambda with AWS SQS as an event source for asynchronous processing, and at how to avoid unnecessary cost and impact on your downstream systems.

What is concurrency?

Concurrent means “existing, happening, or done at the same time”. Lambda concurrency is not a rate or transactions per second (TPS) limit but the number of invocations in flight at any given time.

For example, consider a fixed concurrency of 1000 where each invocation runs for 1 second. In a given second, you can initiate only 1000 invocations. Those execution environments remain busy for the entire second, which blocks any new invocations.

Similarly, consider a fixed concurrency of 1000 where each invocation runs for 500 ms. In a given second, you can initiate up to 2000 invocations: the first 1000 stay busy for only half a second, so another 1000 can start within that same second.
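The arithmetic in the two examples above can be written as a small helper. This is only a sketch: the function name `maxInvocationsPerSecond` is made up for illustration, and it assumes every invocation takes exactly the same time and that a freed slot is reused immediately.

```typescript
// Upper bound on invocations started per second for a fixed concurrency.
// Assumption: every invocation lasts exactly `durationMs` and a freed
// concurrency slot is reused immediately.
function maxInvocationsPerSecond(concurrency: number, durationMs: number): number {
  if (concurrency <= 0 || durationMs <= 0) {
    throw new RangeError("concurrency and durationMs must be positive");
  }
  // Each slot can serve (1000 / durationMs) invocations in one second.
  return concurrency * (1000 / durationMs);
}

console.log(maxInvocationsPerSecond(1000, 1000)); // 1000
console.log(maxInvocationsPerSecond(1000, 500)); // 2000
```

The concurrency stays at 1000 in both cases; only the duration changes how many invocations fit into a second.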

Scaling behavior with AWS SQS FIFO queues

A single Lambda invocation processes a batch of messages from the same message group, which preserves the processing order within that group.
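One way to picture this is to partition messages by message group: messages inside a group have to be handled in order, so the number of distinct groups is what bounds useful parallelism. The sketch below is an illustration only; the `FifoMessage` type and `groupByMessageGroup` function are hypothetical names, not part of any AWS SDK.

```typescript
// Hypothetical, simplified shape of a FIFO message (not an AWS SDK type).
interface FifoMessage {
  messageGroupId: string;
  body: string;
}

// Group message bodies by message group, keeping arrival order inside each group.
function groupByMessageGroup(messages: FifoMessage[]): Map<string, string[]> {
  const groups = new Map<string, string[]>();
  for (const message of messages) {
    const bodies = groups.get(message.messageGroupId) ?? [];
    bodies.push(message.body);
    groups.set(message.messageGroupId, bodies);
  }
  return groups;
}

const groups = groupByMessageGroup([
  { messageGroupId: "A", body: "a1" },
  { messageGroupId: "B", body: "b1" },
  { messageGroupId: "A", body: "a2" },
]);
// Two groups, so at most two batches can be processed in parallel
// without breaking the order inside a group.
console.log(groups.size);
```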

Scaling behavior with AWS SQS Standard Queues

The event source mapping polls the queue to consume messages and can start with five concurrent batches handled by five Lambda function instances. As more messages arrive, Lambda keeps scaling to meet the demand, adding up to 60 function instances per minute, up to a total of 1000 instances consuming messages.
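To get a feel for how quickly this ramps up, here is a rough model using the figures quoted above (start at 5, add 60 per minute, cap at 1000). It assumes a simple linear ramp with a permanently full queue, which is a simplification, and the constant and function names are made up for this sketch.

```typescript
// Figures taken from the paragraph above; treat them as assumptions of this model.
const INITIAL_CONCURRENCY = 5;
const ADDED_PER_MINUTE = 60;
const MAX_CONCURRENCY = 1000;

// Concurrency after a number of whole minutes of sustained backlog.
function concurrencyAfter(minutes: number): number {
  if (minutes < 0) {
    throw new RangeError("minutes must not be negative");
  }
  const ramped = INITIAL_CONCURRENCY + ADDED_PER_MINUTE * Math.floor(minutes);
  return Math.min(MAX_CONCURRENCY, ramped);
}

// Whole minutes needed before the model reaches `target` concurrent functions.
function minutesToReach(target: number): number {
  if (target > MAX_CONCURRENCY) {
    return Infinity; // never reached under this model
  }
  return Math.max(0, Math.ceil((target - INITIAL_CONCURRENCY) / ADDED_PER_MINUTE));
}

console.log(concurrencyAfter(10)); // 605
console.log(minutesToReach(1000)); // 17
```

Under these assumptions, a sustained backlog takes the function from 5 to the 1000 cap in about 17 minutes, which is the growth the rest of this post tries to keep under control.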

Problem

Account concurrency quota limit

Scaling out Lambda as the number of messages increases can use up the Lambda concurrency quota of the account.

Overloading downstream systems

An application is often a combination of server-based and serverless components. A server-based system with fixed capacity and resources can get overloaded when the serverless component of the application scales up to match the demand.

Even with strict rate limiting on the server-based component to prevent the overload, messages are returned to the queue because of the downstream resource limitation. They are then retried based on the re-drive policy, expired based on the retention policy, or sent to an SQS dead-letter queue (DLQ). While sending unprocessed messages to a DLQ is a good way to preserve them, it requires a separate mechanism to inspect and process the messages in the DLQ.

Cost

Serverless scales quickly, and a piece of poorly written code can scale up and consume resources, making it difficult to control costs.

Possible Solutions

Reserved concurrency

We can set reserved concurrency limits for individual lambda functions to avoid the above problems. This ensures the lambda can scale only up to the set reserved concurrency limit.

Once the concurrency limit is reached, the message is returned to the queue and retried based on the re-drive policy, expired based on its retention policy, or sent to another SQS dead-letter queue (DLQ) based on the SQS queue configuration. Even though sending unprocessed messages to the DLQ is a good option, a separate mechanism must be defined to re-drive the messages in the DLQ.
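The retry and DLQ behavior described above can be illustrated with a toy simulation. It is a deliberately simplified model, not how SQS or Lambda are implemented: each message is treated as one invocation, the pollers hand over `attemptsPerRound` messages per round, only `reservedConcurrency` of them get a slot, and a throttled message goes to the DLQ once it has been received `maxReceiveCount` times. All names are hypothetical.

```typescript
interface ThrottleResult {
  processed: number;
  deadLettered: number;
}

function simulateThrottling(
  messageCount: number,
  attemptsPerRound: number,
  reservedConcurrency: number,
  maxReceiveCount: number,
): ThrottleResult {
  if (attemptsPerRound < 1 || reservedConcurrency < 1 || maxReceiveCount < 1) {
    throw new RangeError("attemptsPerRound, reservedConcurrency and maxReceiveCount must be >= 1");
  }
  // Each queue entry is the receive count of one waiting message.
  let queue: number[] = [];
  for (let i = 0; i < messageCount; i++) {
    queue.push(0);
  }
  let processed = 0;
  let deadLettered = 0;
  while (queue.length > 0) {
    // Receiving a message increments its receive count.
    const received = queue.slice(0, attemptsPerRound).map((count) => count + 1);
    const waiting = queue.slice(attemptsPerRound);
    // Only as many invocations as the reserved concurrency allows succeed.
    const succeeded = Math.min(received.length, reservedConcurrency);
    processed += succeeded;
    const retry: number[] = [];
    for (const count of received.slice(succeeded)) {
      if (count >= maxReceiveCount) {
        deadLettered++; // throttled too often: moved to the DLQ
      } else {
        retry.push(count); // returned to the queue for another attempt
      }
    }
    queue = waiting.concat(retry);
  }
  return { processed, deadLettered };
}

// 20 messages, 10 attempts per round, reserved concurrency 2, maxReceiveCount 2
console.log(simulateThrottling(20, 10, 2, 2)); // { processed: 8, deadLettered: 12 }
```

In this model, healthy messages end up in the DLQ purely because the pollers keep handing over more work than the function is allowed to run.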

This solution addresses only the second and third problems listed above (overloading downstream systems and cost).

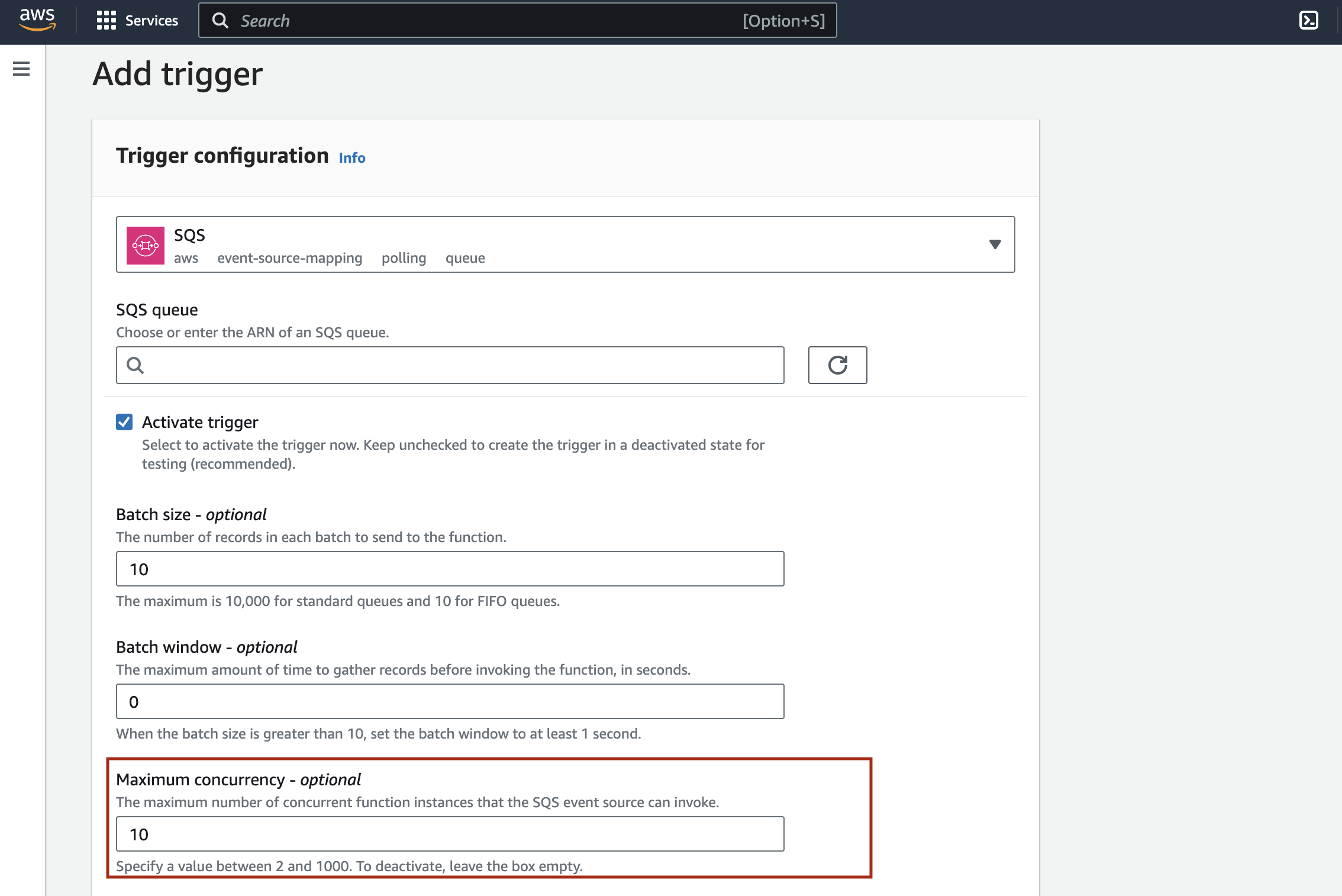

Max Concurrency for SQS event source

Maximum Lambda concurrency can also be controlled at the SQS event source. This allows you to define the max concurrency on the SQS event source and not on the Lambda function.

This doesn’t change the Lambda scaling or batching behavior with SQS. The configuration caps the number of concurrent Lambda invocations per SQS event source. Once Lambda reaches the max concurrency limit, no more message batches are consumed from the queue. This feature also gives you the flexibility to control concurrency per SQS source when a Lambda function consumes messages from different SQS queues.
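A toy model of this behavior: batches are only taken from the queue while there is a free slot under the max concurrency, so nothing is received and then throttled. The sketch assumes all invocations in a round take the same time and that batches are filled whenever enough messages are available; `drainWithMaxConcurrency` is a made-up name for illustration.

```typescript
interface DrainResult {
  rounds: number;
  peakInFlight: number;
}

function drainWithMaxConcurrency(
  messageCount: number,
  batchSize: number,
  maxConcurrency: number,
): DrainResult {
  if (batchSize < 1 || maxConcurrency < 1) {
    throw new RangeError("batchSize and maxConcurrency must be >= 1");
  }
  let remaining = messageCount;
  let rounds = 0;
  let peakInFlight = 0;
  while (remaining > 0) {
    // Batches that could be formed from the messages still in the queue.
    const batchesAvailable = Math.ceil(remaining / batchSize);
    // The event source never hands over more batches than the limit allows.
    const inFlight = Math.min(batchesAvailable, maxConcurrency);
    peakInFlight = Math.max(peakInFlight, inFlight);
    remaining -= Math.min(remaining, inFlight * batchSize);
    rounds++;
  }
  return { rounds, peakInFlight };
}

// 100 messages, batch size 5, max concurrency 7
console.log(drainWithMaxConcurrency(100, 5, 7)); // { rounds: 3, peakInFlight: 7 }
```

The backlog simply waits in the queue until a slot frees up, so receive counts are not spent on throttled attempts.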

This solution could solve all three problems listed above.

Setting Max concurrency

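- From CDK code, you can set the max concurrency as below (the imports assume AWS CDK v2)

    import { Duration } from 'aws-cdk-lib';
    import { SqsEventSource } from 'aws-cdk-lib/aws-lambda-event-sources';

    // Inside the construct that defines this.lambdaFunction and this.sqsQueue
    this.lambdaFunction.addEventSource(
      new SqsEventSource(this.sqsQueue, {
        batchSize: 5, // up to 5 messages per invocation
        maxBatchingWindow: Duration.minutes(1), // wait up to 1 minute to fill a batch
        maxConcurrency: 7, // at most 7 concurrent invocations from this queue
        reportBatchItemFailures: true, // let the function report which messages in a batch failed
      }),
    );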

- From the AWS console, while setting up the trigger for the Lambda function, set Maximum concurrency to the required value.
