Unlocking the secrets of cybersecurity: easily spotting a crawler in disguise

小狼

Synopsis

In the vast realm of the Internet, hundreds of millions of web pages and data records flow through unseen channels every day. As data grows in importance, cybersecurity has become a key challenge for every website operator. A website is like a virtual fortress: it must stay open to real users while defending against "enemies" hidden in the data stream. These "enemies" may be harmless crawlers or malicious intruders, and they often masquerade as ordinary visitors while trying to steal information, damage systems or consume resources. When a website comes under this kind of pressure, accurately distinguishing real users from crawlers by their IP addresses becomes a top priority of website protection.

Crawlers: Hidden Visitors

Before we explore how to distinguish between real users and crawlers, we first need to understand what these crawlers are and their behavioural characteristics. Crawlers, or web spiders, are tools used to automatically visit websites. They are ubiquitous on the Internet and act as information gatherers. Some of these crawlers are "friendly" visitors that bring traffic and exposure to our websites; however, others are unwanted invaders that can cause great damage to our websites.

Useful crawlers: movers and shakers of the Internet

Firstly, let's take a look at the "friendly" crawlers that help our websites to be recognised by the Internet in a more positive way. These include search engine crawlers, marketing crawlers, monitoring crawlers, information streaming crawlers, link checking crawlers, tool crawlers and vulnerability scanning crawlers. Each of them has its own role to play and provides valuable data to webmasters.

Search Engine Crawlers: These crawlers are the foundation of search engines. Crawlers such as Googlebot and Bingbot visit your website regularly to collect and index its content so that it can be displayed in search engine results pages. Visits from search engine crawlers are critical to improving the visibility of your website.

Marketing Crawlers: These crawlers are widely used by companies for market research. They automatically crawl competitors' website data, such as product prices, promotions and user reviews, to support the company's marketing decisions.

Monitoring Crawlers: Web monitoring tools use these crawlers to monitor the operational status of the website in real-time, including page loading speed, availability, performance, etc. With the help of these crawlers, webmasters are able to identify and fix potential problems in time.

Information Streaming Crawlers: These crawlers grab the latest content from news sites and social media platforms and integrate it into an information stream, so that users see new content as soon as it is published. For example, RSS aggregators use crawlers to fetch content from subscribed websites and push it to users.

Link Checking Crawlers: These crawlers scan all links within a website to find and report broken links or 404 error pages, helping administrators to maintain the health of the website and improve the user experience.

Tool Crawlers: These crawlers are usually used for tasks such as page performance testing and structural analysis. They help administrators improve the performance of a website by accessing and analysing pages and providing a series of optimisation recommendations.

Vulnerability Scanning Crawlers: These crawlers are used to discover potential security vulnerabilities of a website, such as SQL injection points, XSS attack points, etc. By fixing these vulnerabilities in time, the website can effectively resist hacker attacks and safeguard data security.

Malicious crawlers: a hidden threat

However, not all crawlers are harmless. Some malicious crawlers pose a threat to website security by disguising themselves as legitimate visitors and carrying out activities such as data theft, illegal crawling and resource abuse. These malicious crawlers include scraping crawlers, counterfeit crawlers, resource-consuming crawlers and data-stealing crawlers.

Scraping Crawlers: These crawlers scrape website content at large scale without authorisation and copy it to other platforms. This not only infringes on a website's copyright, but can also lead to a drop in SEO rankings, which can seriously affect a website's traffic and reputation.

Counterfeit Crawlers: These crawlers impersonate legitimate search engine crawlers, such as Googlebot and Bingbot, by forging User-Agent headers. Their aim is to bypass firewalls and other security measures in order to carry out malicious activities such as Distributed Denial-of-Service (DDoS) attacks and data theft.

Resource-Consuming Crawlers: These crawlers consume a website's server resources through frequent visits, increasing the load on the server and degrading the website's performance, or even making the website unavailable. For small websites, this kind of attack is especially destructive.

Data-Stealing Crawlers: These crawlers specialise in crawling sensitive information on websites, such as user login information, payment data and personal information. The stolen data may be used for illegal transactions, identity theft or other malicious purposes, posing a huge security risk to users and websites.

Crawler detection from server logs

To detect crawlers from server logs, look at the User-Agent field and the IP address of each entry in the log file. Common crawler User-Agents such as Googlebot and Bingbot help you determine whether requests claim to come from legitimate crawlers. You can then run a reverse DNS lookup on the IP address and analyse the access frequency and access patterns to determine whether the User-Agent is spoofed or the visitor is a malicious crawler.
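As a starting point, the log fields mentioned above can be pulled out with a regular expression. The sketch below assumes the server writes the common "combined" access-log layout (the same layout as the log entry shown later in this article). The names `LOG_PATTERN`, `parse_log_line`, `requests_per_ip` and `SAMPLE_LINES` are made up for illustration, and the sample lines are invented entries that use documentation-range IPs.

```python
import re
from collections import Counter

# Assumed "combined" log layout:
# ip ident user [time] "request" status bytes "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_log_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    return match.groupdict() if match else None


def requests_per_ip(lines):
    """Count how many parseable requests each client IP made."""
    counts = Counter()
    for line in lines:
        entry = parse_log_line(line)
        if entry is not None:
            counts[entry["ip"]] += 1
    return counts


# Invented sample entries (203.0.113.0/24 is reserved for documentation).
SAMPLE_LINES = [
    '203.0.113.7 - - [19/May/2021:06:25:52 +0800] "GET / HTTP/1.1" 200 512 '
    '"-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    '203.0.113.7 - - [19/May/2021:06:25:53 +0800] "GET /about HTTP/1.1" 200 734 '
    '"-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    '203.0.113.20 - - [19/May/2021:06:26:10 +0800] "GET / HTTP/1.1" 200 512 '
    '"-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    'this line is not a valid log entry',
]

if __name__ == "__main__":
    for ip, count in requests_per_ip(SAMPLE_LINES).most_common():
        print(ip, count)
```

Lines that do not match the assumed layout are skipped rather than raising an error, so a log with a different format would simply yield empty counts and the pattern would need adjusting.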

In the logs, you will see a large number of IPs. You can make an initial judgement about which are crawlers and which are normal users based on the User-Agent. Examples:

- Mozilla/5.0 (compatible; SemrushBot/7~bl; +http://www.semrush.com/bot.html)

This is the Semrush crawler.

- Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)

This is Bing's crawler.

- Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/90.0.4430.97 Mobile Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)

This is Google's crawler.

However, the User-Agent can be easily forged, so on its own it cannot reliably tell you whether a visitor really is the crawler it claims to be. A more reliable approach is to check it in combination with the IP address.
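The first-pass User-Agent check can be sketched as a simple substring match. The table `KNOWN_BOT_TOKENS` and the function `claimed_crawler` are hypothetical names, and the token list only covers the three crawlers shown in the examples above. Because the header can be forged, the result is only what the visitor claims to be, not a verified identity.

```python
# Lower-case tokens taken from the example User-Agents, mapped to a label.
KNOWN_BOT_TOKENS = {
    "googlebot": "Googlebot",
    "bingbot": "Bingbot",
    "semrushbot": "SemrushBot",
}


def claimed_crawler(user_agent):
    """Return the crawler a User-Agent claims to be, or None if no token matches."""
    lowered = user_agent.lower()
    for token, label in KNOWN_BOT_TOKENS.items():
        if token in lowered:
            return label
    return None


if __name__ == "__main__":
    ua = "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"
    print(claimed_crawler(ua))
```

A real deployment would likely need a longer token list, and any IP whose claim matters (for example, one that is exempted from rate limits) should still be verified by IP.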

For example, suppose you see the following entry in the log:

66.249.71.19 - - [19/May/2021:06:25:52 +0800] "GET /history/16521060410/2019 HTTP/1.1" 302 257 "-" "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/90.0.4430.97 Mobile Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

This record shows the IP 66.249.71.19 with a User-Agent that claims to be Googlebot. To confirm its authenticity, run a reverse DNS lookup on the IP and check that its hostname is crawl-66-249-71-19.googlebot.com. Then resolve that hostname back to an IP (for example with the ping command, or with a DNS lookup tool such as nslookup) and confirm that it matches the IP in the log. Only if both checks pass does the IP actually belong to Google's crawler. If there is still any doubt about the IP, you can check it further with a crawler identification tool.
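The two-step check described above (IP to hostname, then hostname back to IP) might be automated roughly as follows, using Python's standard `socket` module. The function names and the `GOOGLE_SUFFIXES` tuple are assumptions made for this sketch. The suffix list reflects the googlebot.com hostname mentioned above plus google.com, and should be checked against Google's current documentation before relying on it.

```python
import socket

# Hostname suffixes assumed here for genuine Google crawlers.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")


def reverse_lookup(ip):
    """Return the reverse-DNS hostname for an IP, or None if there is none."""
    try:
        return socket.gethostbyaddr(ip)[0]
    except OSError:
        return None


def forward_lookup(hostname):
    """Return the IPv4 addresses a hostname resolves to (empty list on failure)."""
    try:
        return socket.gethostbyname_ex(hostname)[2]
    except OSError:
        return []


def is_verified_crawler(ip, suffixes=GOOGLE_SUFFIXES,
                        reverse=reverse_lookup, forward=forward_lookup):
    """True only if reverse DNS gives an allowed hostname that resolves back to ip."""
    hostname = reverse(ip)
    if hostname is None:
        return False
    hostname = hostname.rstrip(".").lower()
    if not hostname.endswith(suffixes):
        return False
    # The forward lookup guards against a forged reverse-DNS record.
    return ip in forward(hostname)


if __name__ == "__main__":
    # Performs real DNS queries, so the result depends on the network.
    print(is_verified_crawler("66.249.71.19"))
```

The `reverse` and `forward` parameters exist so the logic can be exercised with stand-in resolvers, without touching the network. Since DNS lookups are slow, results would normally be cached per IP rather than computed on every request.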

Managing IP Access with Third-Party Services: Improving Defence Efficiency

Manually managing crawler IPs is both time-consuming and inefficient in the face of increasingly complex cyber threats. As a result, more and more organisations are using third-party services to automate this process.

IP Anti-Crawl Proxies: Blocking Malicious Access

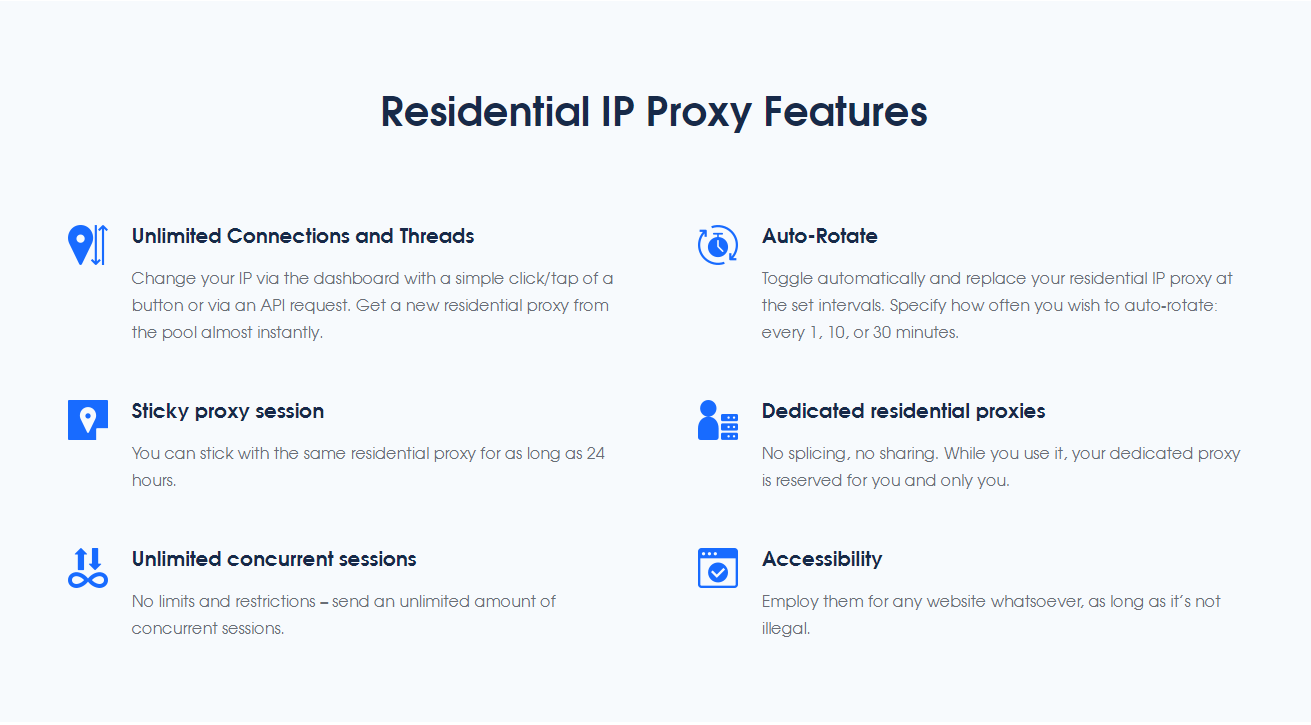

IP anti-crawl proxies are services designed specifically to keep malicious crawlers at bay. Proxy4Free's Residential Proxies is one of the widely used anti-crawl proxy services in the industry. It provides IPs worldwide and updates its IP pool on a regular basis. These IPs help organisations effectively block access by malicious crawlers while ensuring that normal access by legitimate crawlers and real users is not affected.

Services such as Proxy4Free improve the overall performance and security of websites by redirecting malicious crawlers to other IPs, reducing their consumption of server resources. Such services are particularly valuable for organisations that need to process large amounts of data, as they automate the management of large-scale IPs while providing efficient security.

Automated log analysis tools: real-time monitoring and protection

In addition to IP anti-crawl proxy services, automated log analysis tools are an effective means of keeping malicious crawlers out. These tools can analyse server logs in real time, identify abnormal access behaviour and automatically blacklist suspicious IPs to prevent further access.
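One simple form of "abnormal access behaviour" is a request rate that is too high for a human visitor. The sketch below shows a sliding-window counter that blacklists an IP once it exceeds a threshold. The class name `RateBlacklist` and the default limits are illustrative assumptions, not values taken from any particular tool, and timestamps are assumed to arrive in non-decreasing order.

```python
from collections import defaultdict, deque


class RateBlacklist:
    """Blacklist IPs that make more than max_requests within window_seconds."""

    def __init__(self, max_requests=100, window_seconds=60):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.recent = defaultdict(deque)  # ip -> timestamps inside the window
        self.blacklist = set()

    def record(self, ip, timestamp):
        """Register one request; return True if the IP is blacklisted."""
        if ip in self.blacklist:
            return True
        hits = self.recent[ip]
        hits.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while hits and hits[0] <= timestamp - self.window_seconds:
            hits.popleft()
        if len(hits) > self.max_requests:
            self.blacklist.add(ip)
            del self.recent[ip]
            return True
        return False


if __name__ == "__main__":
    detector = RateBlacklist(max_requests=3, window_seconds=10)
    for second in range(5):
        print(second, detector.record("203.0.113.5", second))
```

Verified search engine crawlers would usually be exempted before this check, since a legitimate crawler can also produce bursts of requests. A production tool would also expire blacklist entries after some time, which this sketch leaves out.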

Some advanced log analysis tools also have the ability to integrate with an organisation's existing security system for comprehensive network protection. For example, when an IP's behaviour is identified as malicious, the system can automatically trigger a security alert and take appropriate action, such as disabling the IP or notifying administrators.

Not only do these tools improve the security of your website, they also reduce the workload of administrators, allowing them to focus on other, more important tasks.

Conclusion

As technology advances, malicious crawlers behave more and more covertly, and the threat they pose to websites keeps growing. By understanding the behavioural patterns of crawlers in depth and combining several technical means of IP identification and management, webmasters can effectively strengthen a website's defences and protect its security and normal operation.
