Amazon AppRunner - The perfect sidekick for the high-code OutSystems developer

Stefan Weber

This article details the setup of an AWS AppRunner service to host a Python-based REST API using the spaCy NLP library. It provides a step-by-step guide on configuring AppRunner with source code from GitHub and integrating the deployed REST API with OutSystems.

Sometimes I need to add custom backend code to OutSystems, whether it's a custom code extension in OutSystems 11 or an external logic function in OutSystems Developer Cloud. However, on both platforms I am limited to C#. In OutSystems 11, I am even limited to .NET Framework 4.8, which makes it impossible to use libraries that require a newer .NET version.

Wrapping existing libraries of all kinds is my primary use case for custom code in OutSystems. Unfortunately, not all of them are .NET-based; many are written in JavaScript, Java, or Python.

AWS Lambda

My personal preference, provided my customers agree, is to use AWS Lambda functions. These allow me to build in almost any programming language (if need be, by using a custom runtime container) and call them from my OutSystems application, either with a REST call to a Lambda function URL (or via API Gateway) or with the AWS C# SDK for Lambda, for which I created an O11 Forge component.

Developing with Lambda, of course, comes with a cost. You need to have a good understanding of AWS Lambda configurations. It's also helpful to know IAM, API Gateway, and a toolset like AWS CDK or SAM. Lastly, you should know how to set up a deployment pipeline for your Lambda functions and attached resources.

In short, AWS Lambda might not be suitable for you, and in some cases, it isn't suitable for me either.

Amazon AppRunner

In this article we take a look at another Amazon Web Service: AppRunner.

AppRunner is a fully managed service for containerized web applications and APIs. The beauty of AppRunner is that it supports automated builds and deployment directly from a GitHub source code repository (public or private) or container images published to AWS Elastic Container Registry.

For source code builds and deployments, you configure an AppRunner service, connect it to your GitHub repository, and define settings like scaling options and health checks. AppRunner takes care of the rest. You can also set up a custom domain or attach your service to a VPC. Additionally, you can choose to automatically redeploy your service when your code changes.

Overall, AppRunner is one of the most user-friendly services in the AWS ecosystem. Given its ease of use, you could say that AppRunner was essentially designed for low-coders like us.

What we build

In this walkthrough, we will create an AppRunner service that hosts a simple REST API written in Python. This API identifies and extracts named entities from text using the spaCy NLP library.

You have two options:

Lazy Option - If you just want to launch an AppRunner service with a prebuilt REST API, start with Fork the Sample Repository.

Coding Option - If you prefer to code the REST API yourself, skip Fork the Sample Repository and start reading at Code the REST API.

Prerequisites

For both options you will need

GitHub account - AppRunner will pull the source code from a repository in your account.

AWS AppRunner - You need permission to create an AppRunner service configuration.

Only for the coding option

Python 3.11 - AppRunner uses Python 3.11 for its runtime environment.

Poetry - We will use Poetry for dependency management. To install Poetry follow the installation tutorial in the Poetry documentation.

Visual Studio Code - For editing the source files. Install extensions for Python and DevContainers as well.

Fork the Sample Repository

If you just want to configure an AppRunner service with the pre-built REST API, simply fork my sample repository to your GitHub account:

Click on Fork in the spacy-service repository

Enter a name for the copy in your GitHub account

Make sure the Copy main branch only checkbox is selected

Click on Create fork

Skip the following section and read on with AppRunner Configuration File.

Code the REST API

Let's start by initializing a Poetry-based Python project. Open a terminal window and execute the following commands.

mkdir spacy-service

cd spacy-service

poetry init

Leave the default values for Package Name, Version, Description, Author, License and Compatible python versions. Enter no for Would you like to define your main dependencies interactively? and Would you like to define your development dependencies interactively?. Then confirm generation with yes.

This will generate a pyproject.toml file with the default values. Open the toml file in an editor of your choice and append the following line to the [tool.poetry] section.

package-mode = false

We are not going to use Poetry for publishing, and setting package-mode to false turns off this feature along with all the validation checks.

Next, install the required packages. Make sure you are still in the spacy-service directory, then run

poetry add fastapi uvicorn spacy pydantic

fastapi - A modern, fast web framework for building APIs with Python.

spacy - An industrial-strength Natural Language Processing (NLP) library in Python.

uvicorn - A lightning-fast ASGI server implementation.

pydantic - A data validation library

Your pyproject.toml should now look like this:

[tool.poetry]
name = "spacy-service"
version = "0.1.0"
description = "REST API Service using spaCy NLP for Named Entity Recognition"
authors = ["Stefan Weber <stefan.weber@spatium.one>"]
license = "MIT"
readme = "README.md"
package-mode = false

[tool.poetry.dependencies]
python = "^3.11"
fastapi = "^0.112.0"
uvicorn = "^0.30.5"
spacy = "^3.7.5"
pydantic = "^2.8.2"

[build-system]
requires = ["poetry-core"]
build-backend = "poetry.core.masonry.api"

Time for some coding. In the project directory, create a new folder called src and within it, create the following Python code files.

models.py

from pydantic import BaseModel
from typing import List

class Entity(BaseModel):
    text: str
    label: str
    start: int
    end: int

class RecognitionRequest(BaseModel):
    text: str

class RecognitionResponse(BaseModel):
    entities: List[Entity]

These Pydantic models are used to define the structure of the input and output data for the named entity recognition operation.
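To make the wire format concrete, here is a minimal standard-library sketch of the JSON shapes these models describe. The sample values and the helper name matches_response_shape are made up for illustration, and the check is deliberately rough. In the service itself, Pydantic takes care of validation.

```python
import json

# Shape of a request body accepted by RecognitionRequest.
request_body = {"text": "Stefan works with OutSystems."}

# Shape of a response body described by RecognitionResponse.
# The entity below is hand-written for illustration, not real model output.
response_body = {
    "entities": [
        {"text": "OutSystems", "label": "ORG", "start": 18, "end": 28}
    ]
}

# Field names and types of the Entity model.
ENTITY_FIELDS = {"text": str, "label": str, "start": int, "end": int}


def matches_response_shape(payload: dict) -> bool:
    """Return True if payload has the fields and types of RecognitionResponse."""
    entities = payload.get("entities")
    if not isinstance(entities, list):
        return False
    for entity in entities:
        # Hypothetical strict check: exactly the four Entity fields.
        if not isinstance(entity, dict) or set(entity) != set(ENTITY_FIELDS):
            return False
        if not all(isinstance(entity[k], t) for k, t in ENTITY_FIELDS.items()):
            return False
    return True


print(json.dumps(request_body))
print(matches_response_shape(response_body))
```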

services.py

import spacy
from .models import Entity
from typing import List

class EntityService:
    def __init__(self):
        self.nlp = spacy.load("en_core_web_sm")

    def process(self, text: str) -> List[Entity]:
        doc = self.nlp(text)
        return [
            Entity(
                text=ent.text,
                label=ent.label_,
                start=ent.start_char,
                end=ent.end_char
            )
            for ent in doc.ents
        ]

entity_service = EntityService()

This code defines a class EntityService that uses the spaCy library to perform named entity recognition (NER) on input text. The process method takes a string of text, passes it through a pre-loaded NLP model (en_core_web_sm), and returns a list of Entity objects representing the detected named entities, along with their text, label, and character positions within the input text.
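The character positions are what make the result useful on the consuming side, because text[start:end] gives you back the entity text. The following sketch shows one possible use: marking the entities inline. The annotate helper and the sample entities are hypothetical and hand-written, so it runs without spaCy installed.

```python
from typing import Dict, List


def annotate(text: str, entities: List[Dict]) -> str:
    """Wrap each entity span in brackets followed by its label."""
    parts = []
    cursor = 0
    # Process spans from left to right so the slices line up.
    for ent in sorted(entities, key=lambda e: e["start"]):
        parts.append(text[cursor:ent["start"]])
        parts.append(f"[{text[ent['start']:ent['end']]}|{ent['label']}]")
        cursor = ent["end"]
    parts.append(text[cursor:])
    return "".join(parts)


# Hand-written sample in the shape returned by EntityService.process.
sample_text = "Stefan works with OutSystems."
sample_entities = [
    {"text": "Stefan", "label": "PERSON", "start": 0, "end": 6},
    {"text": "OutSystems", "label": "ORG", "start": 18, "end": 28},
]

print(annotate(sample_text, sample_entities))
# prints: [Stefan|PERSON] works with [OutSystems|ORG].
```

This relies on the spans not overlapping, which holds for the entities spaCy returns in doc.ents.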

main.py

from fastapi import FastAPI
from fastapi.openapi.utils import get_openapi
from .models import RecognitionRequest, RecognitionResponse
from .services import entity_service

app = FastAPI()

@app.post("/ner", response_model=RecognitionResponse)
async def extract_entities(request: RecognitionRequest):
    entities = entity_service.process(request.text)
    return RecognitionResponse(entities=entities)

def openapi_spec():
    if app.openapi_schema:
        return app.openapi_schema
    openapi_schema = get_openapi(
        title="spaCy Named Entity Recognition",
        version="1.0.0",
        description="Detects and extracts named entities from text",
        routes=app.routes,
    )
    app.openapi_schema = openapi_schema
    return app.openapi_schema

app.openapi = openapi_spec

Here we set up a FastAPI application with a single route, /ner, that accepts POST requests with text data. The extract_entities function processes the text using the entity_service object and returns a RecognitionResponse object with the recognized named entities. The code also customizes the title, version, and description of the generated OpenAPI specification, which FastAPI serves under /openapi.json by default, with an interactive Swagger UI under the /docs route.

Before starting our service, we need to download the pre-trained spaCy model using the following command:

poetry run python -m spacy download en_core_web_sm

Now it is time to start the service with

poetry run uvicorn src.main:app --reload

After a successful start, open http://localhost:8000/docs in your browser to view the generated Swagger documentation and try out the named entity recognition.
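If you prefer a script over the browser, the endpoint can also be called with nothing but the Python standard library. This is a minimal sketch: the function names build_ner_request and extract_entities are my own choice for this example, and it assumes the service is reachable under the base URL you pass in.

```python
import json
import urllib.request


def build_ner_request(base_url: str, text: str) -> urllib.request.Request:
    """Build a POST request for the /ner endpoint with a JSON body."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/ner",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_entities(base_url: str, text: str) -> list:
    """Send the request and return the list of entity dicts."""
    request = build_ner_request(base_url, text)
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)["entities"]


# Usage (requires the service to be running locally):
# for entity in extract_entities("http://localhost:8000", "Stefan works with OutSystems."):
#     print(entity["label"], entity["text"])
```

Building the request separately from sending it keeps the payload easy to inspect without a running server.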

AppRunner Configuration File

Add a file named apprunner.yaml to the root directory of your project and paste the following:

version: 1.0
runtime: python311
build:
  commands:
    build:
      - echo "Building..."
run:
  runtime-version: 3.11
  pre-run:
    - pip3 install poetry
    - poetry config virtualenvs.create false
    - poetry install
    - poetry run python3 -m spacy download en_core_web_sm
  command: poetry run uvicorn src.main:app --host 0.0.0.0 --port 8000 --reload
  network:
    port: 8000

apprunner.yaml for AppRunner source code builds is similar to a Dockerfile for Docker images.

Although you can configure all the settings in the AWS AppRunner console, it is more efficient to use a configuration file in your repo.

The apprunner.yaml file sets the runtime to Python version 3.11.

In the pre-run phase, it executes the following commands:

Installs poetry using pip

Configures poetry to not create a virtual environment

Installs the dependencies

Downloads the spaCy model used for named entity recognition

The command starts our REST API listening on port 8000.

Under network, we tell AppRunner that our application runs internally on port 8000.
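The port in the start command and the port under network have to match, otherwise AppRunner cannot reach the application. As a small sanity check, the following sketch pulls the port out of the command string and compares it with the network port. The helper port_from_command is hypothetical, and it only understands the "--port 8000" form used here, not "--port=8000".

```python
import shlex
from typing import Optional


def port_from_command(command: str) -> Optional[int]:
    """Return the value following --port in a start command, if present."""
    tokens = shlex.split(command)
    # Stop one token early so tokens[i + 1] always exists.
    for i, token in enumerate(tokens[:-1]):
        if token == "--port":
            return int(tokens[i + 1])
    return None


# Values copied by hand from the apprunner.yaml above.
start_command = "poetry run uvicorn src.main:app --host 0.0.0.0 --port 8000 --reload"
network_port = 8000

print(port_from_command(start_command) == network_port)
```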

To finalize the coding part, add a .gitignore file to the root of your project. Go to gitignore.io, generate exclusions for Python, and copy the contents to the file.

Initialize a git repository in the root directory of your project, then commit and push it to your GitHub account.

AppRunner

With our code repository prepared we are now ready to go to the AWS AppRunner console.

Connect GitHub Account

The first task we perform is to connect our GitHub account to AppRunner.

In the AppRunner menu (left) select Connected accounts and then click on Add new.

Make sure that GitHub is selected in the dropdown then click Add.

Follow the instructions to link your GitHub account and provide a connection name.

Create an AppRunner Service

Next we create an AppRunner service

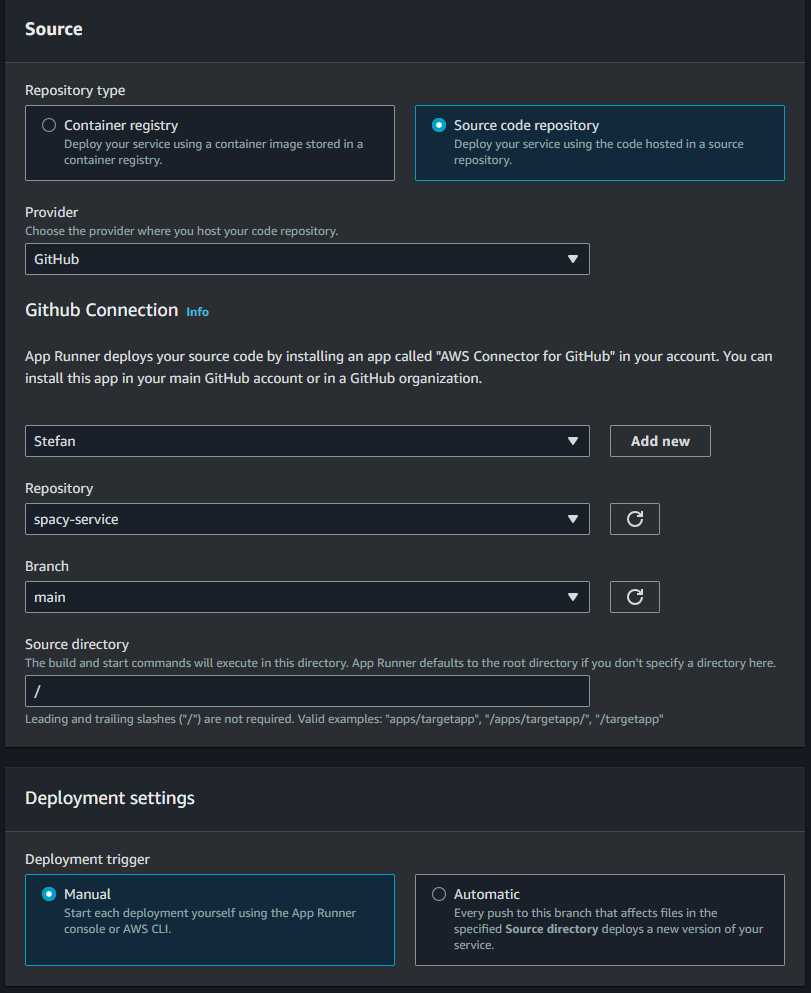

In the Services menu click Create service

Select Source code repository

Choose GitHub, your connected GitHub account and your repository

For the Deployment trigger, it's up to you. Choose Manual to manually redeploy the service, or Automatic to auto-deploy whenever you push to your repository.

- Click Next.

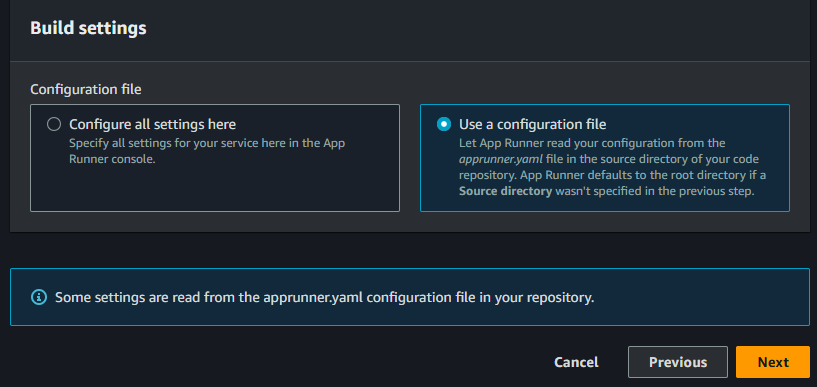

In Configure Build

- Select Use a configuration file - We added a configuration file directly in our repository that defines the necessary AppRunner configurations.

- Click Next.

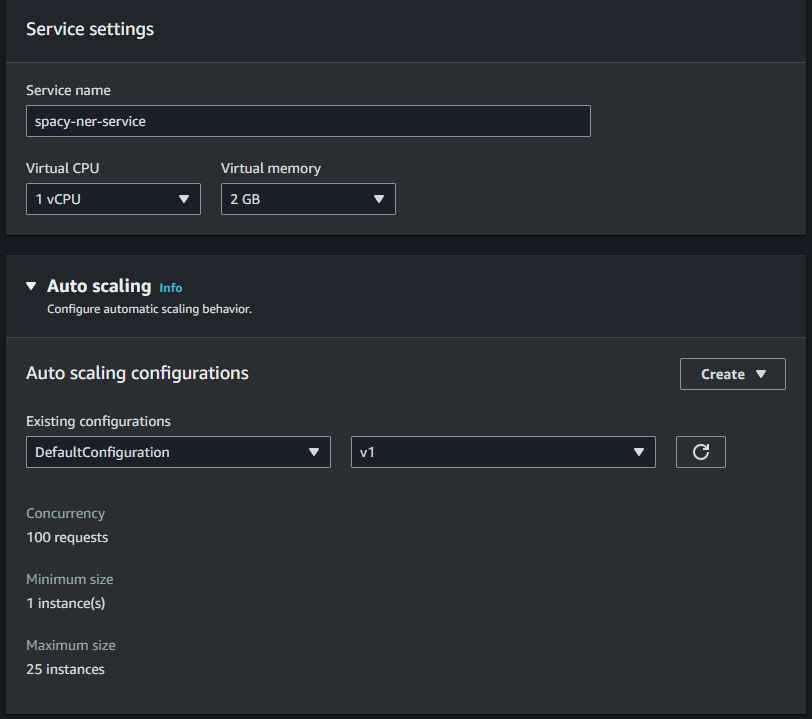

In Configure service

Enter a name for the service e.g. spacy-ner-service

Note the default configuration in Auto Scaling. You may create another Autoscaling configuration for this test.

Leave all other configurations as is.

- Click Next.

Finally on the Review page click Create & deploy to start the service provisioning.

Provisioning the service will take a few minutes. You can track the progress on the service details page and you will also have access to application logs here.

Access AppRunner Service

On the service detail page, copy the Default domain name URL and paste it into your browser's address bar. Add /docs to the domain to view the OpenAPI documentation UI.

Try the /ner endpoint with some text to see if the response works as expected.
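To check the deployment from a script instead of the browser, you could fetch the specification that FastAPI serves under /openapi.json and list what it exposes. The sketch below assumes that default path. The names summarize_spec and fetch_spec are my own, and the sample specification is a hand-written, heavily trimmed stand-in so the example runs without a deployed service.

```python
import json
import urllib.request

HTTP_METHODS = {"get", "post", "put", "patch", "delete", "head", "options", "trace"}


def summarize_spec(spec: dict) -> dict:
    """Extract the OpenAPI version and the operations from a spec document."""
    operations = sorted(
        f"{method.upper()} {path}"
        for path, item in spec.get("paths", {}).items()
        for method in item
        if method in HTTP_METHODS  # skip non-operation keys such as "parameters"
    )
    return {"openapi": spec.get("openapi"), "operations": operations}


def fetch_spec(base_url: str) -> dict:
    """Download the OpenAPI document from a running service."""
    url = base_url.rstrip("/") + "/openapi.json"
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)


# Hand-written, trimmed sample; a real spec contains much more detail.
sample_spec = {"openapi": "3.1.0", "paths": {"/ner": {"post": {}}}}
print(summarize_spec(sample_spec))

# Against the deployed service, replace the placeholder with your default domain:
# print(summarize_spec(fetch_spec("https://<your-default-domain>")))
```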

AppRunner Summary

Setting up a basic AppRunner Service from a GitHub source repository only takes a few minutes, including an optional automatic deployment pipeline. AppRunner offers many features, such as adding a custom domain to your services, making the service private by configuring a private endpoint to one of your VPCs, and much more.

Review the documentation, especially the sections on Autoscaling and Security.

Regarding security, the sample service does not have any form of authorization. In a real use case, you definitely want to add some form of authorization, whether the service is public or in a private environment.
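What form of authorization fits depends on your environment, and this article does not prescribe one. Just to illustrate the idea, here is a sketch of one simple option, a shared API key compared in constant time. The header name x-api-key and the helper is_authorized are assumptions for this example and not part of the sample service.

```python
import hmac
from typing import Mapping

API_KEY_HEADER = "x-api-key"  # hypothetical header name


def is_authorized(headers: Mapping[str, str], expected_key: str) -> bool:
    """Compare the presented key with the expected one in constant time."""
    if not expected_key:
        # Never authorize when no key is configured.
        return False
    # Header names are case-insensitive, so normalize before the lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    presented = normalized.get(API_KEY_HEADER, "")
    return hmac.compare_digest(presented.encode("utf-8"), expected_key.encode("utf-8"))


print(is_authorized({"X-API-Key": "s3cret"}, "s3cret"))
print(is_authorized({"X-API-Key": "wrong"}, "s3cret"))
```

In a real setup the expected key would come from a secret store or an environment variable rather than from the source code.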

OutSystems

With our REST API up and running, we can easily integrate with OutSystems. Unfortunately, we cannot use the OpenAPI specification generated by FastAPI because OutSystems still requires a Swagger version 2.0 specification file and FastAPI generates OpenAPI version 3.1.

But it is just a single endpoint that can be integrated manually quite quickly using the Test tab in the REST consume assistant.

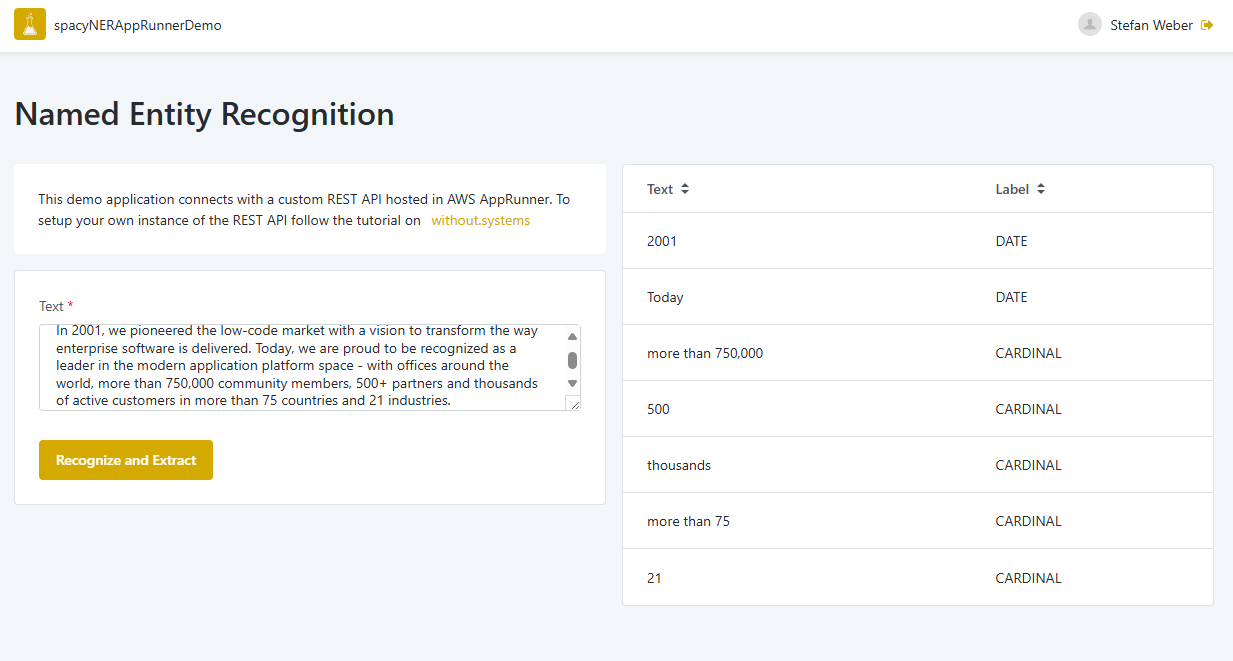

I created a sample application that includes the REST consumer, available on Forge for OutSystems 11.

Start the demo application and try it with the following text:

In 2001, we pioneered the low-code market with a vision to transform the way enterprise software is delivered. Today, we are proud to be recognized as a leader in the modern application platform space - with offices around the world, more than 750,000 community members, 500+ partners and thousands of active customers in more than 75 countries and 21 industries.

which should give you the following results:

Wow, you did it! At least, I hope so. You might wonder why I haven't explained how to consume the REST API in detail, but I trust that if you've made it this far, you already know how to integrate with any REST API in OutSystems.

The End?

You have reached the end, and I hope you successfully launched your own AppRunner REST API service connected with an OutSystems application. If any parts need more details or are unclear, please let me know.

As usual, this article is just the beginning. AppRunner has much more to explore. If it made sense to you, start by reading the official documentation and creating your own labs.

Follow me on LinkedIn to receive notifications whenever I publish something new.
