Introducing Snowflake Dynamic Tables: What you need to know

John Ryan

John Ryan

Having worked with over 50 Snowflake customers building out complex data transformation pipelines, I understand how complex Extract, Transform, and Load (ETL) systems can get. Spoiler Alert: Damn hard!

In this article, I'll explain the benefits of a revolutionary tool from Snowflake: Dynamic Tables.

In addition to the "how to" basics, including a discussion around the TARGET LAG and REFRESH MODE, I'll explain where you should use Dynamic Tables and discuss some of the drawbacks and the potential performance pitfalls of this incredible technology.

What is a Snowflake Dynamic Table?

Dynamic Tables are a special kind of Snowflake table that materializes the results of a query. They behave a little like a traditional Materialized View, but they can handle complex queries and are designed to compute the end-state of a table to simplify transformation pipelines.

If that all sounds full of jargon, consider the diagram below, which shows a simple data pipeline combining Sales and Finance data.

The diagram above illustrates the ETL processes that prepare, clean and join data for analysis and reporting. The challenges here include:

Dependencies: You need to ensure both Sales and Finance jobs have finished delivering data before you combine the results.

Failures: If either fails, you need to stop the processing until it's fixed.

Scheduling: You must decide on a regular schedule to execute the jobs.

Incremental Processing: Ideally, you need to process the changed records in the Sales and Finance tables; otherwise, you'll rebuild the entire data set each time, which won't scale. You may, therefore, need to deploy Snowflake streams to identify new inserts and then deploy a merge statement to deal with inserts or updates.

With Snowflake Dynamic Tables, you remove the need for orchestration, merge statements, and scheduled tasks, and simplify the entire pipeline, as shown below.

Each Dynamic Table includes the SQL needed to deliver the end result and the target lag (how fresh you need the data), and Snowflake takes care of the rest. It can even automatically identify and process incremental changes.

How to Create a Dynamic Table in Snowflake

The SQL statement below illustrates how to create a Dynamic Table.

create dynamic table sales_and_finance

target_lag = "10 minutes"

warehouse = sales_wh

as

select s.sale_id,

s.amount,

f.booking_type

from sales s

join finance f

on s.customer_id = f.customer_id;

The key points to note include:

The SQL Statement: Notice there's no merge statement here - that's entirely managed by Snowflake. We define the end state and let Snowflake work out the details.

The Target Lag: Indicates how fresh the results should be. There's no need to define a schedule. You only need to define the maximum time between updates in the source tables and the final result.

Warehouse: Indicates which warehouse to execute the SQL. If the source data is unchanged, the warehouse won't be resumed, which will help reduce transformation costs compared to regular scheduling.

What is the Dynamic Table Target Lag?

Instead of defining a scheduled task to refresh Dynamic Tables regularly, you need to set the TARGET_LAG. The Target Lag determines the acceptable time the data is allowed to be out of date compared to the source data. Consider the diagram below.

In the above example, the Sales and Finance data will be refreshed with hourly changes. If there are no changes, the refresh will be skipped. However, the DT to combine the data will only be refreshed daily.

Assuming the Finance and Sales analysts need to work on data that are at most an hour out of date, the above target lag would work fine. Equally, the aggregate reports of both Sales & Finance are only needed once per day.

What is an Incremental or Full Refresh?

The diagram below illustrates how rows in the SALES table are reformatted and enriched with additional values by the DT_SALES Dynamic Table. As you can see, both tables hold the entire history, including 30m rows.

By default, Snowflake will perform an incremental refresh, automatically identify the new and changed records in the table, and apply these to the Dynamic Table. This is incredibly fast for situations where few entries are modified, and Snowflake identifies changes automatically.

However, consider the following situation: the full set of Finance codes is delivered each time. In this case, it makes little sense to identify changes as the entire table is rebuilt each time.

The SQL below shows how to handle this situation. Unlike the default incremental refresh, the refresh_mode = FULL overwrites the Dynamic Table data, which is faster than attempting to identify differences each time.

COPY

COPY

create or replace dynamic table dt_finance

warehouse = bench_wh

target_lag = "1 hour"

refresh_mode = 'FULL'

as

select ...

Where Should I Deploy a Dynamic Table?

The diagram below illustrates a simple Data Warehouse Architecture in which data files are loaded from Cloud Storage into a Raw History zone before being cleaned and integrated in an Integration Zone before Delivery.

A common approach would involve building the transformation pipelines in the Integration Zone using dynamic tables. Normally, we'd build the "T" of ETL with lots of small jobs with complex dependencies. Dynamic Tables work well here because Snowflake handles the scheduling, orchestration, and dependencies.

Likewise, when we deliver the data to end-users, Dynamic Tables are useful as, unlike a Snowflake View, DTs store the result, which helps maximize end-user query performance.

How to View Dynamic Table Status?

The screenshot below shows how to monitor Dynamic Tables using the Snowflake Web User Interface. Click on Monitoring and Dynamic Tables to list the current status.

Then click on one of the tables to show the Graph, which shows a diagram like the screenshot below, which illustrates the dependencies and current status of each DT.

What about Dynamic Table Performance?

The sensible answer is: It depends. Since DTs save the results of a query in a table, it's likely that end-user queries will be faster against a dynamic table than querying a Snowflake View, as the view needs to re-execute the query every time.

You can also deploy Snowflake Cluster Keys and Search Optimization Service against Dynamic Tables, allowing you to further maximize query performance.

The performance of Refresh, however, is essentially the same for any query, and factors include:

Query Complexity: It's best practice to break down complex query pipelines into more manageable steps rather than attempting to execute multi-level sub-queries, and the same rule applies to DTs.

Warehouse Size: A Dynamic Table refresh on large data volumes will benefit from a larger warehouse, like any query. However, frequent incremental refreshes of small data volumes can lead to excessive costs.

Spilling to Storage: Queries with Window Functions or an

ORDER BYorGROUP BYclause can lead to memory spilling, and this can have a huge impact on refresh performance.

However, an often overlooked feature of Dynamic Tables is the REFRESH MODE:

Full Refresh: This will effectively truncate the data and re-load the entire data set from the source. This is most effective when all table contents are regenerated, or most micro-partitions are updated each time.

Incremental Refresh: Indicates that Snowflake should search through the source micro-partitions to identify changed rows and merge these into the target DT.

Unfortunately, once set, the REFRESH MODE cannot be changed, and there are hidden pitfalls, especially when combined with TARGET_LAG. For example:

If the

TARGET_LAGis set to refresh every minute on a table with a few small frequent updates, aREFRESH MODEofFULLre-loads the entire dynamic table each time. In this case,INCREMENTALmode would be much faster as it only processes the new or modified rows.If, however, the contents of a table are deleted and reloaded each time, then the default

REFRESH MODEofINCREMENTALwould be much slower. In this case, Snowflake is forced to identify every change between the source and target tables and aFULLrefresh would be much faster.

Finally, consider the diagram below, which illustrates updates to a few rows across every micro-partition in a huge table.

In the above example, since there were few updates made to the table, you'd probably assume that a REFRESH_MODE of INCREMENTAL would be faster. In reality, however, the number of rows changed is less critical than the number of modified micro-partitions; in this case, a FULL refresh would be faster.

To make this example more concrete, take a table of CUSTOMER TRANSACTIONS over the past 20 years. If we needed to update every row for a single CUSTOMER, we might modify just 10,000 rows in a table with billions of entries, but it's likely to impact the entire table. This would make the UPDATE statement and the Dynamic Table REFRESH a relatively slow process, needing a much larger warehouse than expected.

Conclusion

Dynamic Tables are the fastest growing but often most misunderstood feature in the Data Engineers toolkit. They have remarkable advantages over hand-coding Stored Procedures or using Orchestration systems like Airflow to coordinate complex dependencies between SQL scripts.

Dynamic Tables have the potential to hugely simplify the process of cleaning, transforming, and delivering data for end-user consumption. They manage the dependencies, scheduling, error handling, and incremental processing without needing more complex solutions like Streams and Tasks with complex merge operations.

They are, however, designed to simplify 80% of data transformation tasks, and they do have limitations, specifically around the REFRESH MODE which, if incorrectly deployed, can lead to both poor performance and excessive cost.

Therefore, you should consider Dynamic Tables as another tool in the box. They often simplify transformation pipelines but you need to be aware of the potential pitfalls.

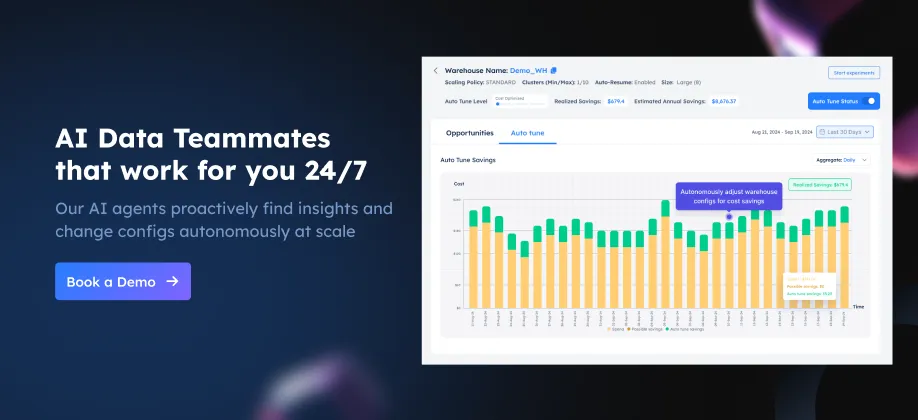

Deliver more performance and cost savings with Altimate AI's cutting-edge AI teammates. These intelligent teammates come pre-loaded with the insights discussed in this article, enabling you to implement our recommendations across millions of queries and tables effortlessly. Ready to see it in action? Request a recorded demo by just sending a chat message (here) and discover how AI teammates can transform your data teams.

Subscribe to my newsletter

Read articles from John Ryan directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

John Ryan

John Ryan

After 30 years of experience building multi-terabyte data warehouse systems, I spent five incredible years at Snowflake as a Senior Solution Architect, helping customers across Europe and the Middle East deliver lightning-fast insights from their data. In 2023, I joined Altimate.AI, which uses generative artificial intelligence to provide Snowflake performance and cost optimization insights and maximize customer return on investment.