Kubernetes Network Policies: Day 26 of 40daysofkubernetes

Shivam Gautam

Introduction

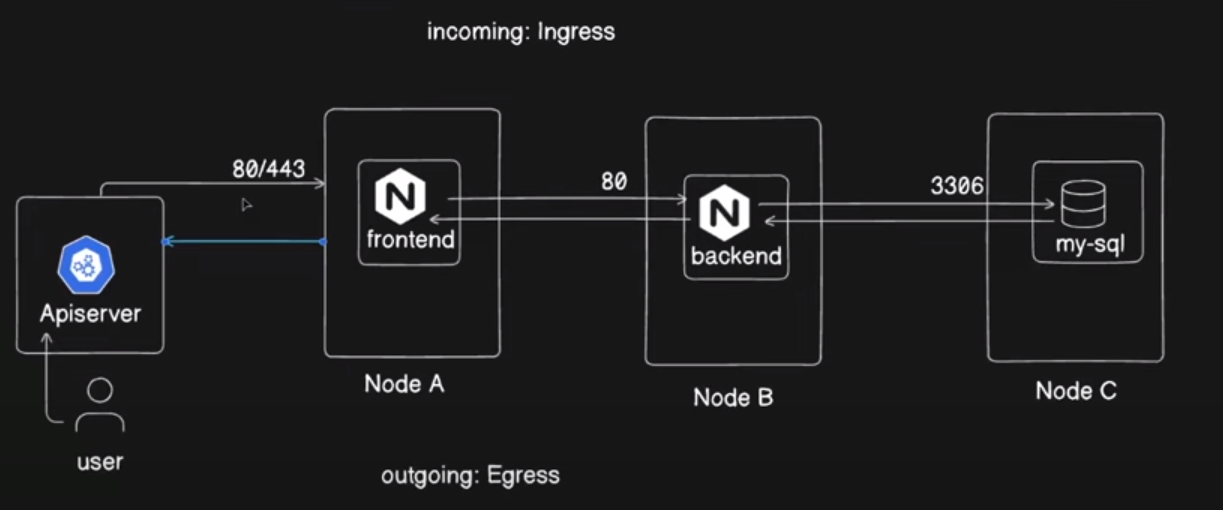

By default, pods in Kubernetes are non-isolated, meaning they can freely communicate with any other pod within the cluster. For example, a frontend pod can interact with both the backend and database pods, the backend pod can communicate with the frontend and database pods, and the database pod can exchange data with both the frontend and backend pods. While this open communication is convenient for many use cases, there are scenarios where you might want to restrict communication between certain pods for security or organizational reasons.

In this blog, we will explore how to use Kubernetes Network Policies to selectively restrict communication between pods. By implementing these policies, we can enforce fine-grained control over which pods can communicate with each other, enhancing the security and isolation of our applications.

Network Policies

Network Policies in Kubernetes are a set of rules that define how pods communicate with each other and with other network endpoints. They are used to control the traffic flow at the IP address or port level within the cluster, providing an additional layer of security by allowing or denying traffic to pods based on specified criteria.

Key Concepts

Pods: The smallest deployable units in Kubernetes that run containers.

Labels: Key-value pairs attached to objects like pods, used to select objects and organize them.

Ingress: Incoming traffic to a pod.

Egress: Outgoing traffic from a pod.

Namespaces: Virtual clusters backed by the same physical cluster, used for dividing cluster resources between multiple users.

How Network Policies Work

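Isolation: By default, pods in Kubernetes are non-isolated, meaning they accept traffic from any source. Once a network policy selects a pod, that pod becomes isolated for the direction the policy covers (ingress or egress), and only traffic that some policy explicitly allows gets through. This is how a policy limits a pod to communicating with specific pods or namespaces.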

Selectors: Network policies use label selectors to define which pods the policy applies to. The policy can control both ingress and egress traffic based on these selectors.
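To make the isolation rule concrete, here is a minimal sketch of a policy that selects every pod in a namespace and allows no ingress at all. It is not part of the walkthrough below, and the file name default-deny-ingress.yaml and the policy name are only illustrations. The snippet writes the file without applying it.

```shell
# Write a "default deny ingress" policy to a file. The file name is illustrative.
# An empty podSelector ({}) selects every pod in the namespace, and listing
# Ingress in policyTypes with no ingress rules means no inbound traffic is allowed.
cat > default-deny-ingress.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
spec:
  podSelector: {}
  policyTypes:
  - Ingress
EOF
```

Applying it with kubectl apply -f default-deny-ingress.yaml would isolate all pods in the current namespace for ingress, so hold off if you want to follow the connectivity checks later in this post.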

Prerequisite

We are using a kind cluster for this setup. kind ships with a default CNI (Container Network Interface) plugin called kindnet. In the setup used here, kindnet does not enforce Network Policies, so we disable it and install the Calico CNI instead.

Creating a kind cluster with disableDefaultCNI

Here’s a sample configuration for creating a kind cluster with Calico CNI support:

    kind: Cluster
    apiVersion: kind.x-k8s.io/v1alpha4
    nodes:
    - role: control-plane
      extraPortMappings:
      - containerPort: 30001
        hostPort: 30001
    - role: worker
    - role: worker
    networking:
      disableDefaultCNI: true
      podSubnet: 192.168.0.0/16

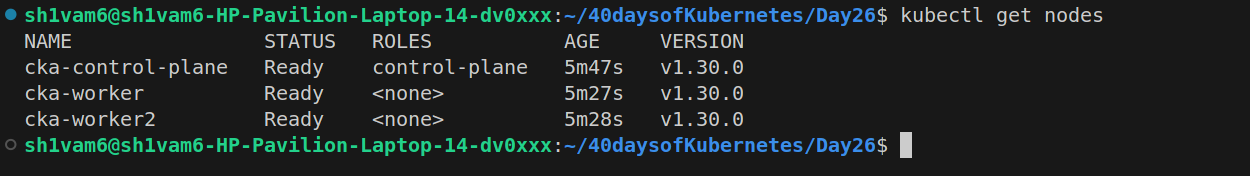

Create the cluster using the following command:

    kind create cluster --config kind-cluster.yaml --name cka --image kindest/node:v1.30.0

Install Calico CNI with the following command:

    kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.28.1/manifests/calico.yaml
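With the default CNI disabled, the nodes stay NotReady until a CNI plugin is running, so it helps to wait for Calico before creating any pods. The helper below is a sketch: the function name wait_for_calico is hypothetical, and it assumes the manifest above creates a DaemonSet named calico-node in the kube-system namespace.

```shell
# wait_for_calico: block until Calico's node agents are rolled out and
# every node reports Ready, then list the calico-node pods.
# Assumes the DaemonSet is called calico-node and lives in kube-system.
wait_for_calico() {
  kubectl -n kube-system rollout status daemonset/calico-node --timeout=180s &&
  kubectl wait --for=condition=Ready nodes --all --timeout=180s &&
  kubectl get pods -n kube-system -l k8s-app=calico-node -o wide
}

# Usage (run after the kubectl apply above):
#   wait_for_calico
```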

Example of Network Policy

Let’s create three pods: Frontend, Backend, and Database, along with three corresponding services. We will then demonstrate how these pods can initially communicate with each other and how this changes after applying Network Policies.

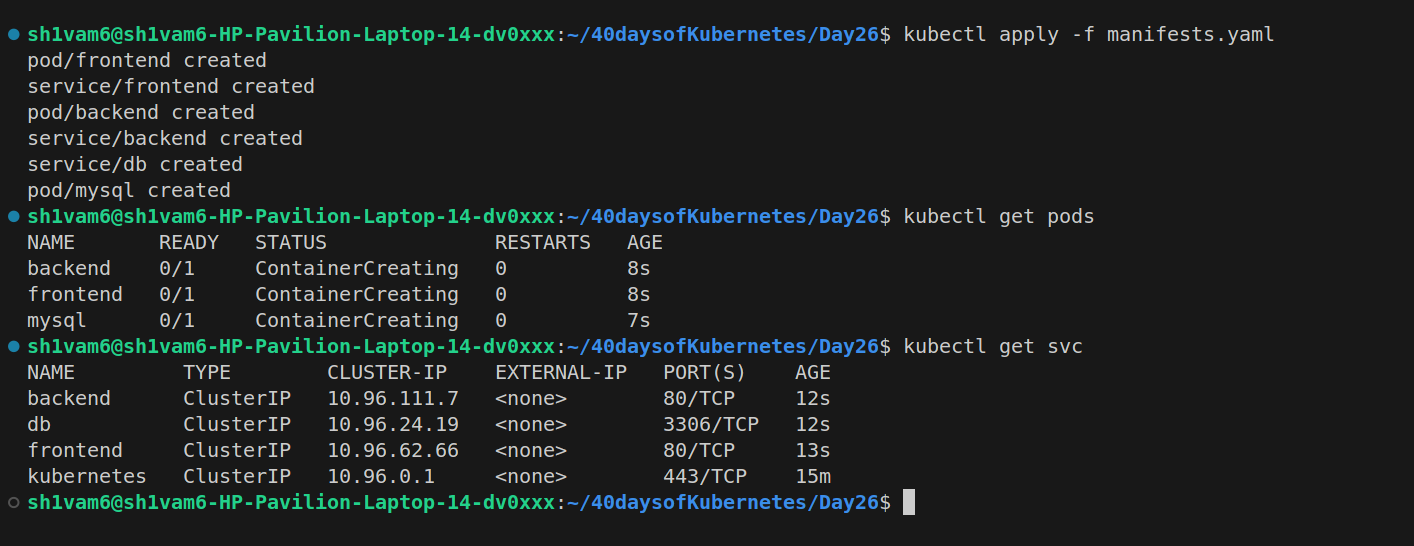

Apply the following manifests.yaml file to create the resources:

    apiVersion: v1
    kind: Pod
    metadata:
      name: frontend
      labels:
        role: frontend
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - name: http
          containerPort: 80
          protocol: TCP
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: frontend
      labels:
        role: frontend
    spec:
      selector:
        role: frontend
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: backend
      labels:
        role: backend
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - name: http
          containerPort: 80
          protocol: TCP
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: backend
      labels:
        role: backend
    spec:
      selector:
        role: backend
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: mysql
      labels:
        name: mysql
    spec:
      containers:
      - name: mysql
        image: mysql:latest
        env:
        - name: "MYSQL_USER"
          value: "mysql"
        - name: "MYSQL_PASSWORD"
          value: "mysql"
        - name: "MYSQL_DATABASE"
          value: "testdb"
        - name: "MYSQL_ROOT_PASSWORD"
          value: "verysecure"
        ports:
        - name: http
          containerPort: 3306
          protocol: TCP
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: db
      labels:
        name: mysql
    spec:
      selector:
        name: mysql
      ports:
      - protocol: TCP
        port: 3306
        targetPort: 3306
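A possible way to create these resources and confirm that the labels landed where the policy will later expect them is sketched below. The function name deploy_demo is hypothetical; the pod names and the manifests.yaml file name come from the manifest above.

```shell
# deploy_demo: apply the manifest, wait for the three pods to become Ready,
# then list pods and services together with their labels.
deploy_demo() {
  kubectl apply -f manifests.yaml &&
  kubectl wait --for=condition=Ready pod/frontend pod/backend pod/mysql --timeout=180s &&
  kubectl get pods,svc --show-labels
}

# Usage:
#   deploy_demo
```

The --show-labels column is worth a glance here, because Network Policies match pods purely by label.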

Verifying Pod Communication

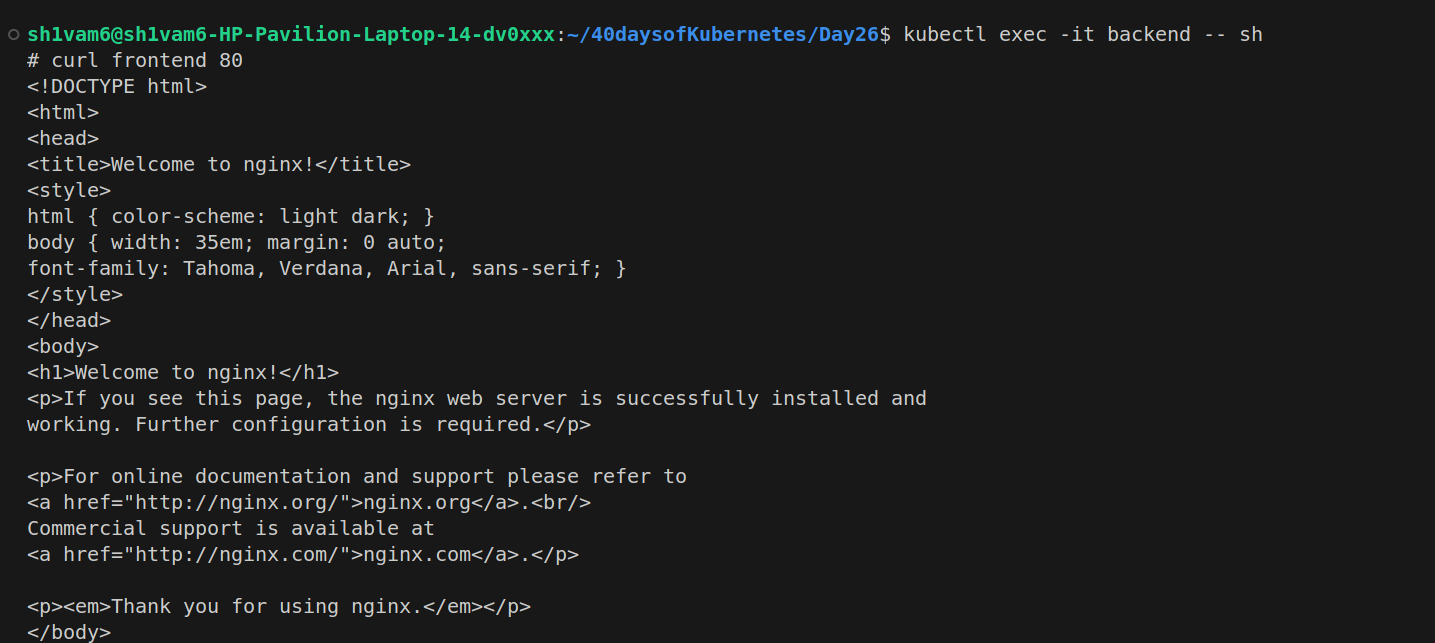

By default, all pods can communicate with each other. To verify this:

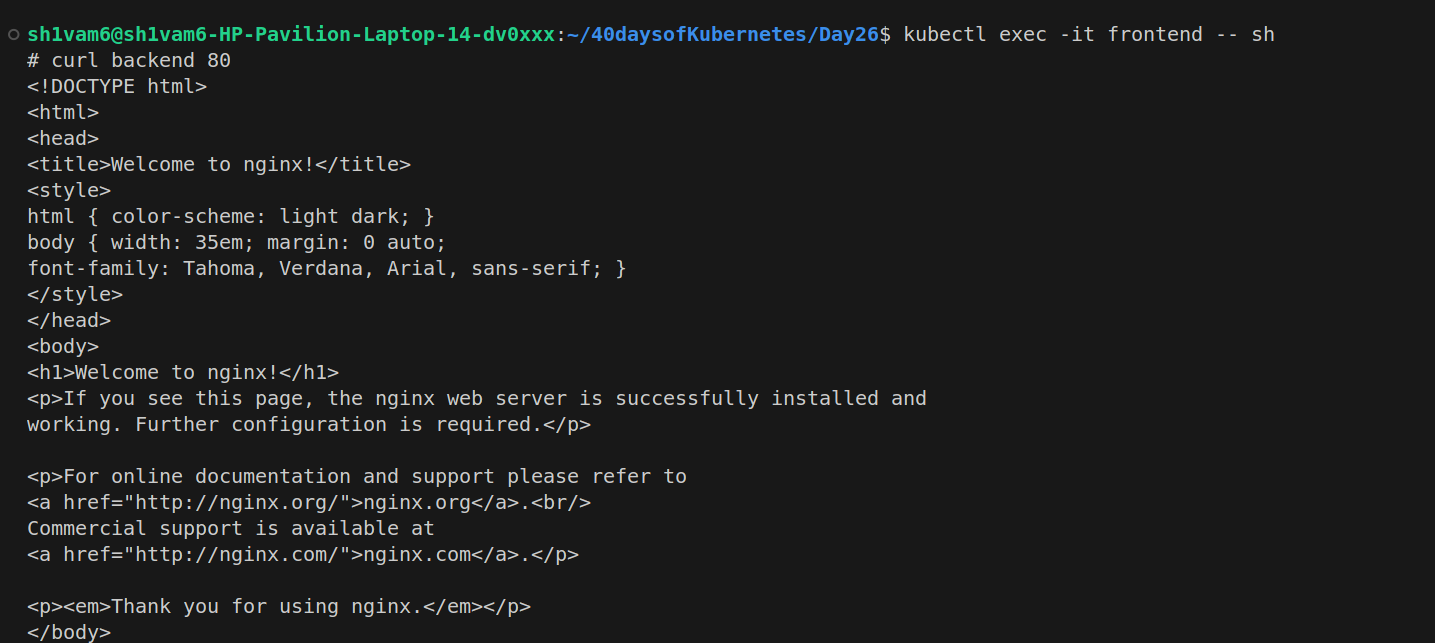

Accessing the Backend pod from the Frontend pod: You should be able to communicate freely.

Accessing the Frontend pod from the Backend pod: You should be able to communicate freely.

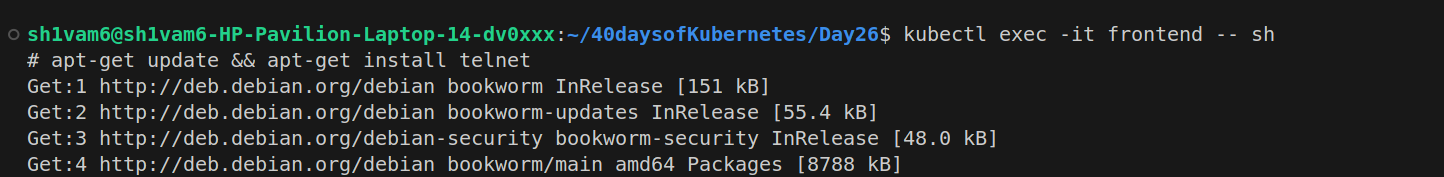

Accessing the Database pod from the Frontend pod: You should be able to communicate using port 3306.

To test, install telnet in the frontend pod and try to connect to the MySQL service:

We can see that all pods can communicate with each other.
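The checks above could be reproduced with commands along these lines. This is a sketch under a few assumptions: the helper names are hypothetical, the nginx image is assumed to be Debian-based (so apt-get works) and to ship curl, and the pods run in the current namespace.

```shell
# http_check FROM_POD SERVICE: request the nginx page served behind SERVICE
# from inside FROM_POD and print only the HTTP status code.
http_check() {
  kubectl exec "$1" -- curl -s -o /dev/null -w '%{http_code}\n' --max-time 5 "http://$2"
}

# install_telnet POD: install the telnet client inside POD.
# Assumes a Debian-based image where apt-get is available.
install_telnet() {
  kubectl exec "$1" -- sh -c 'apt-get update -qq && apt-get install -y -qq telnet'
}

# Usage:
#   http_check frontend backend      # frontend -> backend
#   http_check backend frontend      # backend -> frontend
#   install_telnet frontend
#   kubectl exec -it frontend -- telnet db 3306   # frontend -> database
```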

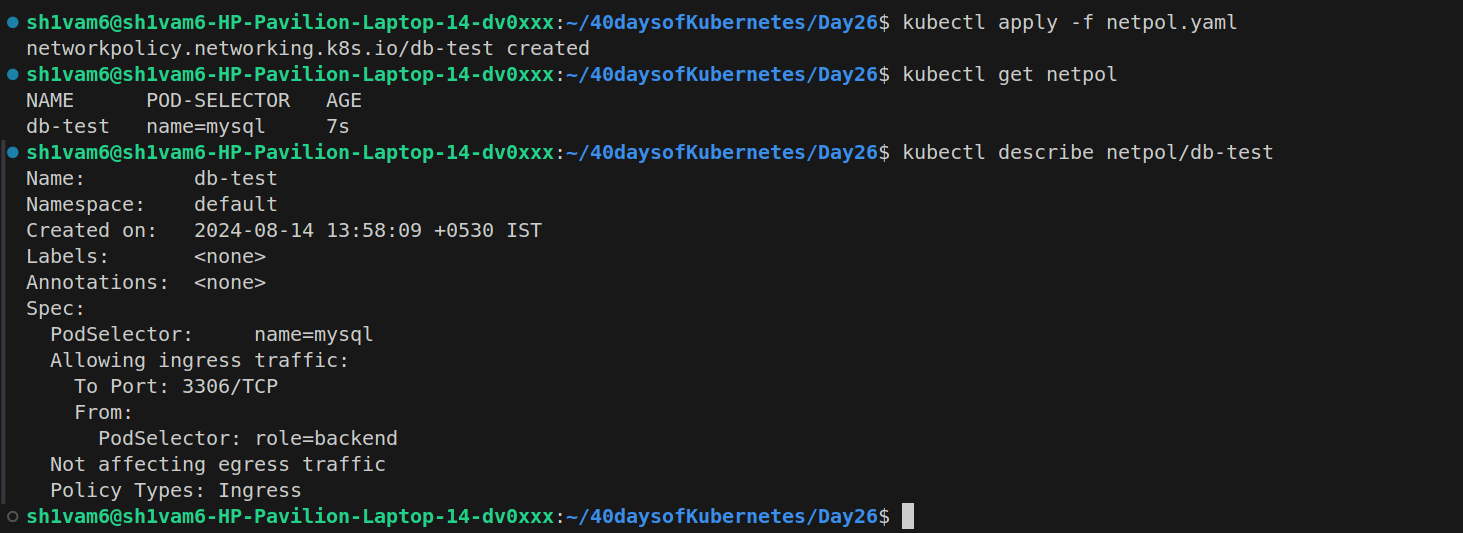

Applying a Network Policy

Now, let's apply a Network Policy to restrict communication:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: db-test
    spec:
      podSelector:
        matchLabels:
          name: mysql
      policyTypes:
      - Ingress
      ingress:
      - from:
        - podSelector:
            matchLabels:
              role: backend
        ports:
        - port: 3306

Explanation

podSelector: Selects the pods to which the network policy applies. In this case, it applies to pods with the label name: mysql, targeting the MySQL pod(s).

policyTypes: Specifies the type of traffic the policy controls. Here it is Ingress, controlling incoming traffic to the MySQL pod.

ingress: Defines the rules for incoming traffic.

from: Specifies that only pods with the label role: backend are allowed to send traffic to the MySQL pod.

ports: Restricts traffic to port 3306.

With this policy in place, only pods with the label role: backend can connect to the pod labeled name: mysql, and only on port 3306. All other incoming traffic to the MySQL pod will be denied.
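One way to apply the policy and double-check what it selects is sketched here. The function name apply_policy and the file name netpol.yaml in the usage line are hypothetical; the policy name db-test and the two labels come from the manifest above.

```shell
# apply_policy FILE: apply the NetworkPolicy in FILE, show how the API server
# interpreted it, and list the pods matched by each selector it uses.
apply_policy() {
  kubectl apply -f "$1" &&
  kubectl describe networkpolicy db-test &&
  kubectl get pods -l name=mysql &&     # pods the policy protects
  kubectl get pods -l role=backend      # pods allowed to reach them
}

# Usage (assuming the policy was saved as netpol.yaml):
#   apply_policy netpol.yaml
```

If either of the two pod listings comes back empty, the policy will not behave as described, since its selectors would match nothing.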

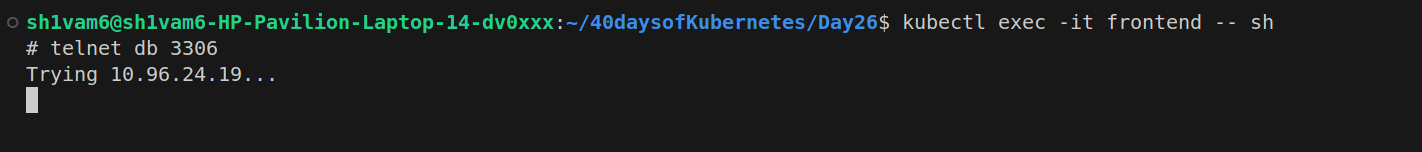

Verifying the Network Policy

Exec into the Frontend pod and attempt to telnet to db:3306. You should no longer be able to connect, demonstrating that the policy is working.

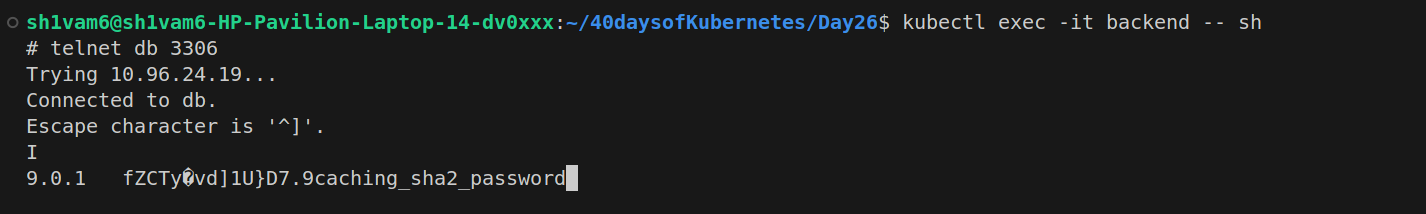

Exec into the Backend pod and attempt the same. You should be able to connect, confirming that the Backend pod can still communicate with the Database pod.
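A blocked connection usually hangs rather than failing right away, so a probe with a timeout can be more convenient than an interactive telnet session. The sketch below swaps telnet for bash's built-in /dev/tcp redirection; the function name probe is hypothetical, and it assumes the pod images include bash and the timeout utility.

```shell
# probe FROM_POD HOST PORT: try to open a TCP connection to HOST:PORT from
# inside FROM_POD, giving up after 5 seconds, and report the outcome.
# Any failure (timeout, DNS error, missing pod) is reported as blocked.
probe() {
  if kubectl exec "$1" -- timeout 5 bash -c "exec 3<>/dev/tcp/$2/$3" 2>/dev/null; then
    echo "$1 -> $2:$3 allowed"
  else
    echo "$1 -> $2:$3 blocked or unreachable"
  fi
}

# Usage:
#   probe frontend db 3306   # should be blocked once the policy is applied
#   probe backend db 3306    # should still be allowed
```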

Conclusion

Network policies are essential for securing Kubernetes clusters by controlling the traffic flow between pods and other network endpoints. By defining rules based on pod labels and namespaces, we can ensure that only authorized communication occurs within our cluster.
