Fine-Tuning the JVM for Enterprise Applications

Sandeep Choudhary

Java Virtual Machine (JVM) tuning is crucial for optimising the performance of enterprise applications. Proper tuning can lead to significant improvements in application responsiveness, throughput, and resource utilisation. Here’s a comprehensive guide to fine-tuning the JVM for enterprise applications.

Understanding JVM Components

A few components are central to JVM performance tuning. This guide examines each of them in turn and explains how to tune it.

1. Heap Memory

What is Heap Memory?

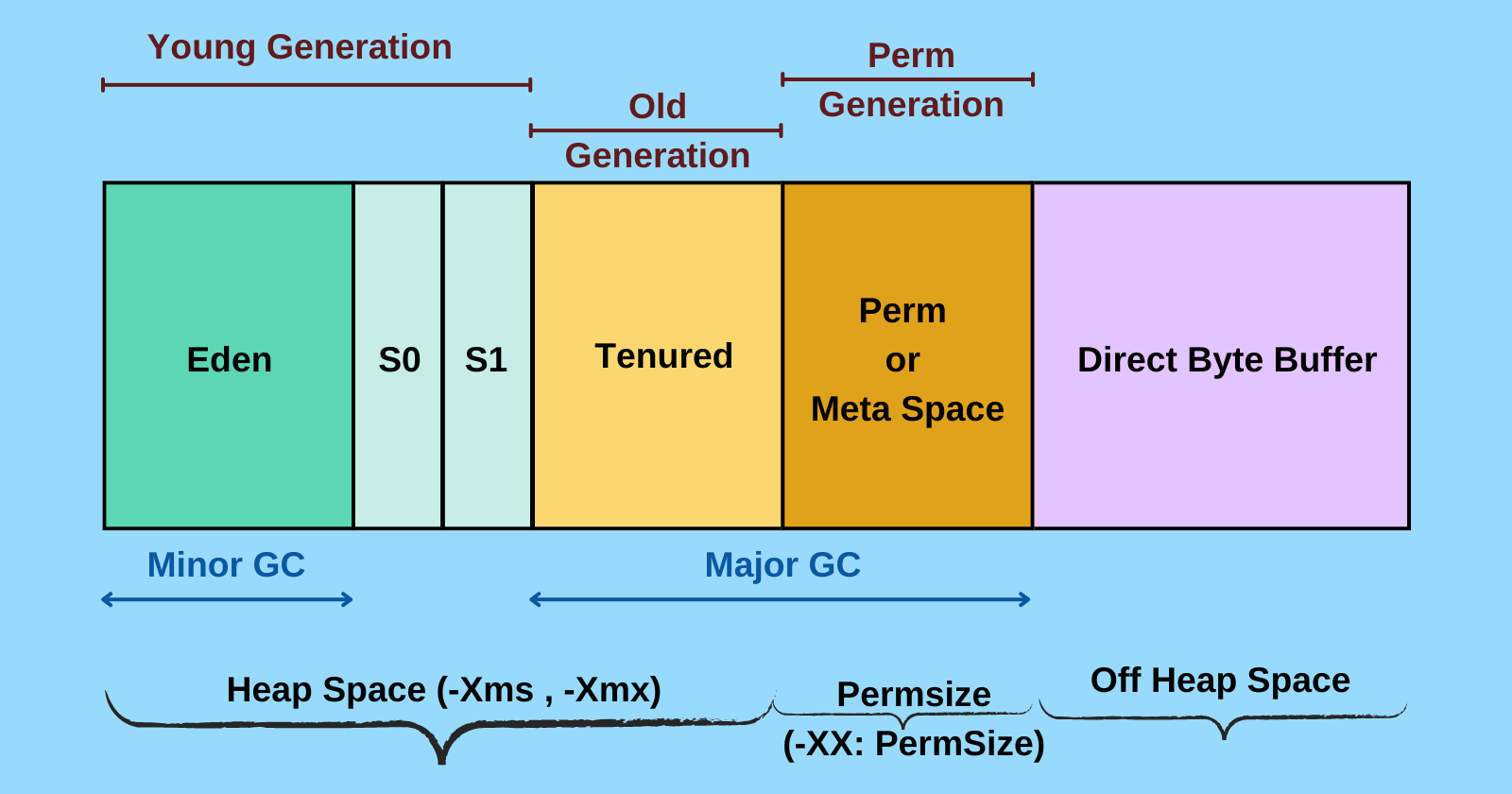

The JVM heap is the runtime data area from which memory for all class instances and arrays is allocated. It is created when the JVM starts and can dynamically grow or shrink based on the application’s needs. The heap is divided into several regions, each serving a specific purpose:

Young Generation (Young Gen) : The Young Generation is where all new objects are allocated and is further divided into three regions:

Eden Space: This is where new objects are initially allocated. When the Eden space fills up, a minor garbage collection (GC) event is triggered.

Survivor Spaces (S0 and S1): These are two equally sized spaces where objects that survive the garbage collection in the Eden space are moved. Objects are copied between these two spaces during minor GCs.

Key Points:

Minor GC: Occurs frequently and is usually fast. It collects objects in the Young Generation.

Promotion: Objects that survive multiple minor GCs are promoted to the Old Generation.

Old Generation (Old Gen): The Old Generation, also known as the Tenured Generation, is where long-lived objects are stored. Objects that survive multiple minor GCs are moved here. The Old Generation is typically larger than the Young Generation and is collected less frequently.

Key Points:

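Major GC: Collects the Old Generation. It occurs less frequently than a minor GC but is more time-consuming. The term is often used interchangeably with full GC, which collects the entire heap (both Young and Old Generations).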

Compaction: During major GC, the Old Generation may be compacted to reduce fragmentation.

Permanent Generation (Perm Gen) / Metaspace: In earlier versions of Java, the Permanent Generation (Perm Gen) was used to store metadata such as class definitions and method information. However, starting from Java 8, Perm Gen has been replaced by Metaspace, which is allocated in native memory rather than the heap.

Key Points:

Metaspace: Automatically expands as needed, reducing the risk of out-of-memory errors related to class metadata.

Configuration: Metaspace size can be controlled using the -XX:MetaspaceSize and -XX:MaxMetaspaceSize parameters.
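To see how these regions show up in a running JVM, the standard java.lang.management API can list the memory pools. The following is a minimal sketch; the class name MemoryPoolReport is illustrative, and the pool names it prints (for example an Eden, Survivor, or Metaspace pool) depend on the JVM vendor and the garbage collector in use.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;
import java.util.ArrayList;
import java.util.List;

// Illustrative helper (not part of the JDK): lists the JVM's memory pools.
class MemoryPoolReport {

    // Builds one line per pool: name, type (HEAP or NON_HEAP), used and max bytes.
    // A max of -1 means the pool has no defined upper limit.
    static List<String> describePools() {
        List<String> lines = new ArrayList<>();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage usage = pool.getUsage();
            if (usage == null) {
                continue; // the pool is no longer valid
            }
            lines.add(String.format("%-35s %-8s used=%d max=%d",
                    pool.getName(), pool.getType().name(),
                    usage.getUsed(), usage.getMax()));
        }
        return lines;
    }

    public static void main(String[] args) {
        describePools().forEach(System.out::println);
    }
}
```

On a typical HotSpot JVM the young and old generation pools are reported as HEAP, while Metaspace is reported as NON_HEAP, which matches the native-memory placement described above.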

DirectByteBuffer in Java is a type of buffer that allows for off-heap memory allocation. This means that the memory used by DirectByteBuffer is allocated outside the Java heap, which can help reduce the burden on the garbage collector and improve performance for applications that require large amounts of memory. When you use the ByteBuffer.allocateDirect method to create a DirectByteBuffer, the JVM allocates memory directly from the operating system, bypassing the Java heap. The garbage collector does not scan or move this off-heap memory, which can lead to more predictable performance, especially in applications with large memory requirements. The native memory is released only after the owning buffer object itself becomes unreachable and is collected, and the total amount is capped by -XX:MaxDirectMemorySize.

However, there are some trade-offs. Allocating and deallocating off-heap memory can be slower than on-heap memory because it involves native system calls. Additionally, managing off-heap memory requires careful handling to avoid memory leaks.
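As a small illustration of off-heap allocation, the sketch below allocates a direct buffer and then reads the JVM's buffer pool statistics. The class and method names are illustrative; the pool name "direct" is what HotSpot reports and is treated here as an assumption, so the helper returns -1 if no such pool is exposed.

```java
import java.lang.management.BufferPoolMXBean;
import java.lang.management.ManagementFactory;
import java.nio.ByteBuffer;

// Illustrative sketch of off-heap allocation with ByteBuffer.allocateDirect.
class DirectBufferDemo {

    // Allocates a buffer whose backing memory lives outside the Java heap.
    static ByteBuffer allocateOffHeap(int sizeBytes) {
        return ByteBuffer.allocateDirect(sizeBytes);
    }

    // Returns the bytes used by the "direct" buffer pool, or -1 if the JVM
    // does not expose a pool with that name (the name is an assumption).
    static long directPoolBytes() {
        for (BufferPoolMXBean pool
                : ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)) {
            if ("direct".equals(pool.getName())) {
                return pool.getMemoryUsed();
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        ByteBuffer buffer = allocateOffHeap(1024 * 1024); // 1 MiB, arbitrary size
        buffer.putLong(0, 42L);                           // absolute write at offset 0
        System.out.println("isDirect          = " + buffer.isDirect());
        System.out.println("value at offset 0 = " + buffer.getLong(0));
        System.out.println("direct pool bytes = " + directPoolBytes());
    }
}
```

Watching the direct pool over time is one way to notice off-heap growth that heap metrics alone would not reveal.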

Heap Memory Management

Heap memory tuning is one of the most critical aspects of JVM performance:

Initial and Maximum Heap Size: Set using the -Xms and -Xmx parameters. For enterprise applications, it’s common to set these values to the same size to avoid dynamic resizing.

New Generation Size: Controlled by -Xmn. A larger New Generation can reduce GC frequency but may increase pause times.

Survivor Ratio: Adjusted using -XX:SurvivorRatio. This parameter controls the size ratio between Eden space and Survivor spaces.

GC Algorithms: Choose the appropriate garbage collection algorithm (e.g., G1, CMS, Parallel GC) based on the application’s performance characteristics and requirements.
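After changing heap flags, it helps to confirm what the running JVM actually picked up. The sketch below reads the effective limits and the startup flags from inside the process; the class name and the launch command in the comment are illustrative, and the sizes shown there are placeholders rather than recommendations.

```java
import java.lang.management.ManagementFactory;
import java.util.List;

// Illustrative check of the heap settings the JVM is actually running with.
// Example launch (sizes are placeholders, not recommendations):
//   java -Xms2g -Xmx2g -XX:+UseG1GC HeapSettings
class HeapSettings {

    // Upper bound the heap may grow to (driven by -Xmx or the JVM default).
    static long maxHeapBytes() {
        return Runtime.getRuntime().maxMemory();
    }

    // Heap memory currently reserved by the JVM (starts near -Xms).
    static long committedHeapBytes() {
        return Runtime.getRuntime().totalMemory();
    }

    // Flags passed to the JVM at startup, such as -Xms, -Xmx, or -XX options.
    static List<String> jvmFlags() {
        return ManagementFactory.getRuntimeMXBean().getInputArguments();
    }

    public static void main(String[] args) {
        long mib = 1024L * 1024L;
        System.out.println("max heap (MiB)       = " + maxHeapBytes() / mib);
        System.out.println("committed heap (MiB) = " + committedHeapBytes() / mib);
        System.out.println("JVM flags            = " + jvmFlags());
    }
}
```

When -Xms and -Xmx are set to the same value, the committed and maximum figures should be close to each other from startup.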

2. Garbage Collection (GC)

Garbage Collection (GC) in Java is a crucial process that manages memory automatically. It reclaims memory from objects the application no longer uses, so developers do not have to free memory by hand.

What is Garbage Collection?

In Java, garbage collection is the process by which the Java Virtual Machine (JVM) automatically identifies and discards objects that are no longer needed by the application. This process helps in reclaiming memory and preventing memory leaks, allowing the application to use memory more efficiently.

Java programs compile to bytecode, which runs on the JVM. When the program runs, objects are created on the heap, a portion of memory dedicated to the program. Over time, some objects become unreachable, meaning no part of the program references them anymore. The garbage collector identifies these unused objects and deletes them to free up memory.

Types of Garbage Collection Activities

Minor or Incremental Garbage Collection: This occurs when unreachable objects in the young generation heap memory are removed. The young generation is where most new objects are allocated and is typically smaller and collected more frequently.

Major or Full Garbage Collection: This removes unreachable objects from the old generation, which holds the objects that survived enough minor collections to be promoted. The old generation is larger and collected less frequently than the young generation.

Important Concepts

Unreachable Objects: An object is considered unreachable if the running program can no longer reach it through any chain of references. For example, if an object is created and the only reference to it is then set to null, the object becomes unreachable and eligible for garbage collection.

Eligibility for Garbage Collection: An object is eligible for garbage collection if it is unreachable. The garbage collector will eventually reclaim the memory used by such objects.
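Reachability can be observed with a WeakReference, which does not keep its referent alive. The following is a minimal sketch with illustrative names. Note that System.gc() is only a hint to the JVM, so the second line of output is not guaranteed to report false.

```java
import java.lang.ref.WeakReference;

// Illustrative sketch: observing when an object stops being strongly reachable.
class ReachabilityDemo {

    // True while the referent has not yet been cleared by the garbage collector.
    static boolean isStillReachable(WeakReference<?> ref) {
        return ref.get() != null;
    }

    public static void main(String[] args) {
        Object payload = new byte[1024];
        WeakReference<Object> tracker = new WeakReference<>(payload);
        System.out.println("while referenced: " + isStillReachable(tracker));

        payload = null; // drop the only strong reference
        System.gc();    // a request, not a guarantee, that a collection runs
        System.out.println("after GC hint:    " + isStillReachable(tracker));
    }
}
```

The weak reference only tracks the object; it is the removal of the last strong reference that makes the object eligible for collection.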

Garbage Collection Algorithms

Java provides several garbage collection algorithms, each with its own advantages and use cases:

Serial Garbage Collector: Suitable for single-threaded environments, it uses a single thread to perform all garbage collection work.

Parallel Garbage Collector: Uses multiple threads to speed up the garbage collection process, making it suitable for multi-threaded applications.

CMS (Concurrent Mark-Sweep) Garbage Collector: Aims to minimise pause times by performing most of the garbage collection work concurrently with the application threads. CMS was deprecated in JDK 9 and removed in JDK 14, so it is only an option on older JVMs.

G1 (Garbage-First) Garbage Collector: Designed for applications that require large heaps and low pause times. It divides the heap into regions and prioritises garbage collection in the regions with the most garbage.

Garbage Collection Tuning

Monitoring garbage collection is essential for optimizing application performance. Tools like VisualVM and Java Mission Control can help developers monitor garbage collection activity and identify potential issues. Tuning garbage collection involves adjusting parameters such as heap size and garbage collector type to achieve the desired performance. Choosing the right GC algorithm and tuning its parameters can significantly impact performance:

GC Algorithms: Common algorithms include Serial GC, Parallel GC, CMS (Concurrent Mark-Sweep), and G1 (Garbage-First). Each has its pros and cons depending on the application’s needs.

GC Logging: Enable GC logging with -Xlog:gc* to monitor and analyze GC behaviour.

GC Tuning Parameters: Parameters like -XX:MaxGCPauseMillis, -XX:GCTimeRatio, and -XX:ParallelGCThreads can be adjusted to optimise GC performance.
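Alongside GC logs, collector activity can be read from inside the application through the standard GarbageCollectorMXBean interface. The sketch below is one possible way to take a snapshot; the class name is illustrative, and the collector names it prints vary with the GC algorithm selected.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

// Illustrative snapshot of cumulative GC activity since JVM start.
class GcStats {

    // One line per collector: its name, number of collections, and total time.
    // A value of -1 means the JVM does not report that figure.
    static List<String> snapshot() {
        List<String> lines = new ArrayList<>();
        for (GarbageCollectorMXBean gc
                : ManagementFactory.getGarbageCollectorMXBeans()) {
            lines.add(gc.getName()
                    + ": collections=" + gc.getCollectionCount()
                    + ", totalTimeMs=" + gc.getCollectionTime());
        }
        return lines;
    }

    public static void main(String[] args) {
        snapshot().forEach(System.out::println);
    }
}
```

Taking two snapshots a fixed interval apart and comparing the totals gives a rough view of how much time the application spends in GC under a given set of parameters.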

3. Thread Management

Thread management is a critical aspect of Java application performance. Applications that run many threads concurrently depend on efficient thread management to run smoothly and make the best use of system resources.

Understanding Thread Management in JVM

In Java, threads are the smallest units of execution. The JVM manages these threads to execute tasks concurrently, allowing for better utilisation of CPU resources. Each thread in Java is represented by an instance of the Thread class, and the work it runs is commonly supplied as a Runnable.

Key Concepts in Thread Management

Thread Lifecycle: A thread in Java goes through several states: New, Runnable, Blocked, Waiting, Timed Waiting, and Terminated. Understanding these states helps in managing thread behaviour effectively.

Thread Pools: Instead of creating new threads for every task, thread pools manage a pool of worker threads. This approach reduces the overhead of thread creation and destruction, leading to better performance.

Synchronisation: Synchronisation mechanisms like synchronized blocks and methods, ReentrantLock, and ReadWriteLock help manage access to shared resources, preventing race conditions and ensuring thread safety.

Concurrency Utilities: Java provides a rich set of concurrency utilities in the java.util.concurrent package, including executors, concurrent collections, and atomic variables, which simplify thread management and improve performance.
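The concepts above can be sketched with a shared counter that is updated in two thread-safe ways: one guarded by a ReentrantLock and one using an AtomicLong. The class and method names are illustrative, and the thread and iteration counts are arbitrary.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.locks.ReentrantLock;

// Illustrative sketch: two thread-safe ways to update a shared counter.
class SharedCounters {
    private final ReentrantLock lock = new ReentrantLock();
    private long lockedCount = 0;                       // guarded by lock
    private final AtomicLong atomicCount = new AtomicLong();

    void incrementWithLock() {
        lock.lock();
        try {
            lockedCount++;
        } finally {
            lock.unlock(); // always release, even if the body throws
        }
    }

    void incrementAtomically() {
        atomicCount.incrementAndGet(); // lock-free update
    }

    long lockedCount() {
        lock.lock();
        try {
            return lockedCount;
        } finally {
            lock.unlock();
        }
    }

    long atomicCount() {
        return atomicCount.get();
    }

    // Runs `threads` pool workers, each incrementing both counters `perThread` times.
    static SharedCounters run(int threads, int perThread) {
        SharedCounters counters = new SharedCounters();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            pool.submit(() -> {
                for (int i = 0; i < perThread; i++) {
                    counters.incrementWithLock();
                    counters.incrementAtomically();
                }
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(30, TimeUnit.SECONDS)) {
                throw new IllegalStateException("workers did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counters;
    }

    public static void main(String[] args) {
        SharedCounters result = run(4, 10_000);
        System.out.println("locked = " + result.lockedCount()); // prints 40000
        System.out.println("atomic = " + result.atomicCount()); // prints 40000
    }
}
```

Both counters end at the same value because every increment is either serialised by the lock or applied atomically; an unguarded long field would be free to lose updates under the same load.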

Fine-Tuning Thread Management

Minimise Contention: Contention occurs when multiple threads try to access shared resources simultaneously. Reducing contention involves minimising the use of synchronised blocks and using more granular locks or lock-free algorithms.

Reduce Context Switching: Context switching happens when the CPU switches from one thread to another, which can be costly. Reducing the number of threads or using thread pools can help minimise context switching.

Thread Pool Configuration: Properly configuring thread pools is crucial. The size of the thread pool should be based on the nature of the tasks and the available system resources. For CPU-bound tasks, the pool size should be around the number of available processors. For I/O-bound tasks, a larger pool size may be beneficial.

Use Executors: The Executors class provides factory methods for creating different types of thread pools, such as fixed thread pools, cached thread pools, and scheduled thread pools. Choosing the right executor based on the task type can improve performance.

Monitor and Profile: Monitoring and profiling tools like VisualVM, Java Mission Control, and JConsole can help identify bottlenecks and optimise thread management. Regular monitoring ensures that the application performs optimally under different loads.

GC Thread Count: Use -XX:ParallelGCThreads and -XX:ConcGCThreads to control the number of threads used by GC.

Thread Stack Size: Adjust with -Xss to optimise memory usage per thread.

Thread Priorities: The -XX:+UseThreadPriorities option allows the JVM to use native thread priorities, which can be useful for fine-tuning the performance of multi-threaded applications.

Compressed Object Pointers: The -XX:+UseCompressedOops option reduces memory usage by compressing object pointers. On 64-bit HotSpot JVMs it is enabled by default for heaps below roughly 32 GB.
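The pool sizing guidance above can be sketched as follows. The class and method names are illustrative. The I/O-bound formula, cores multiplied by (1 + wait time / compute time), is a commonly cited heuristic rather than something prescribed by the JVM, and the ratio passed to it is an assumption that should come from measurement.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Illustrative sketch of sizing and using a fixed thread pool.
class PoolSizing {

    // CPU-bound work: roughly one thread per available processor.
    static int cpuBoundPoolSize() {
        return Math.max(1, Runtime.getRuntime().availableProcessors());
    }

    // I/O-bound work: heuristic of cores * (1 + wait/compute).
    // waitToComputeRatio is a hypothetical, measured value for the workload.
    static int ioBoundPoolSize(double waitToComputeRatio) {
        return Math.max(1,
                (int) Math.round(cpuBoundPoolSize() * (1 + waitToComputeRatio)));
    }

    // Runs n small CPU-bound tasks on a fixed pool and sums their results.
    static long sumOfSquares(int n) {
        ExecutorService pool = Executors.newFixedThreadPool(cpuBoundPoolSize());
        try {
            List<Future<Long>> results = new ArrayList<>();
            for (int i = 1; i <= n; i++) {
                final long value = i;
                Callable<Long> task = () -> value * value;
                results.add(pool.submit(task));
            }
            long total = 0;
            for (Future<Long> result : results) {
                total += result.get(); // blocks until that task completes
            }
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown(); // release worker threads once the work is done
        }
    }

    public static void main(String[] args) {
        System.out.println("CPU-bound pool size = " + cpuBoundPoolSize());
        System.out.println("I/O-bound pool size = " + ioBoundPoolSize(4.0));
        System.out.println("sum of squares 1..10 = " + sumOfSquares(10)); // prints 385
    }
}
```

Reusing one pool for many tasks, instead of creating a thread per task, is what keeps thread creation overhead and context switching in check.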

4. Monitoring and Profiling

Monitoring and profiling are crucial practices for ensuring the performance, stability, and efficiency of Java applications. These techniques help developers understand the behaviour of their applications, identify bottlenecks, and optimise resource usage. Here’s an in-depth look at monitoring and profiling in Java applications.

What are Monitoring and Profiling?

Monitoring involves tracking the performance and health of an application in real-time. It provides insights into various metrics such as CPU usage, memory consumption, thread activity, and response times. Monitoring tools help detect issues early and ensure that the application runs smoothly.

Profiling, on the other hand, is a more detailed analysis of an application’s performance. It involves measuring specific aspects of the application’s execution, such as method execution times, object creation, and garbage collection. Profiling is typically used during development and testing to optimise code and improve performance.

Key JVM Monitoring Tools

Java Management Extensions (JMX): JMX is a standard API for monitoring and managing Java applications. It allows developers to monitor various aspects of the JVM, such as memory usage, thread activity, and garbage collection.

Java Flight Recorder (JFR): JFR is a powerful tool integrated into the JVM that collects detailed information about the application’s runtime behaviour. It has minimal performance overhead and can be used in production environments.

Java Mission Control (JMC): JMC is a suite of tools for monitoring, managing, and profiling Java applications. It works in conjunction with JFR to provide detailed insights into the application’s performance.

Prometheus and Grafana: Prometheus is an open-source monitoring system that collects metrics from applications and stores them in a time-series database. Grafana is a visualisation tool that can be used with Prometheus to create dashboards for monitoring application performance.
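The same platform MXBeans that JMX exposes to external tools can also be read in-process. The following is a minimal sketch with illustrative names, showing the kind of values a monitoring agent would typically export as metrics.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;
import java.lang.management.ThreadMXBean;

// Illustrative sketch: reading JVM health metrics through platform MXBeans.
class JmxSnapshot {

    private static final MemoryMXBean MEMORY = ManagementFactory.getMemoryMXBean();
    private static final ThreadMXBean THREADS = ManagementFactory.getThreadMXBean();

    // Bytes of heap currently in use.
    static long heapUsedBytes() {
        return MEMORY.getHeapMemoryUsage().getUsed();
    }

    // Bytes of non-heap memory (such as class metadata) currently in use.
    static long nonHeapUsedBytes() {
        return MEMORY.getNonHeapMemoryUsage().getUsed();
    }

    // Number of live threads, including daemon threads.
    static int liveThreads() {
        return THREADS.getThreadCount();
    }

    // Highest live thread count since JVM start or the last peak reset.
    static int peakThreads() {
        return THREADS.getPeakThreadCount();
    }

    public static void main(String[] args) {
        System.out.println("heap used (bytes)     = " + heapUsedBytes());
        System.out.println("non-heap used (bytes) = " + nonHeapUsedBytes());
        System.out.println("live threads          = " + liveThreads());
        System.out.println("peak threads          = " + peakThreads());
    }
}
```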

Key JVM Profiling Tools

Java VisualVM is a visual tool for monitoring and profiling Java applications. It provides features such as CPU and memory profiling, thread analysis, and garbage collection monitoring.

JProfiler is a commercial profiling tool that offers a wide range of features, including CPU profiling, memory profiling, thread profiling, and database profiling. It integrates with popular IDEs like Eclipse, NetBeans, and IntelliJ IDEA.

YourKit Java Profiler is another popular commercial profiler that provides detailed insights into CPU and memory usage, thread activity, and garbage collection. It supports remote profiling and integrates with various IDEs.

Apache SkyWalking is an open-source APM (Application Performance Management) tool that provides monitoring, tracing, and profiling capabilities for Java applications. It supports distributed tracing and can monitor applications across multiple services.

Techniques for Effective Monitoring and Profiling

Setting Up Alerts: Configure alerts for critical metrics such as CPU usage, memory consumption, and response times. This helps in detecting issues early and taking corrective actions.

Analysing Garbage Collection: Monitor garbage collection logs to understand the impact of GC on application performance. Tools like JFR and JMC can provide detailed insights into GC activity.

Thread Analysis: Use profiling tools to analyse thread activity and identify bottlenecks caused by thread contention or deadlocks. This can help in optimising multi-threaded applications.

Memory Leak Detection: Profiling tools can help detect memory leaks by analysing object creation and retention patterns. Identifying and fixing memory leaks is crucial for maintaining application stability.

Performance Tuning: Use profiling data to identify slow methods and optimise their performance. This may involve refactoring code, optimising algorithms, or improving database queries.
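For the thread analysis technique above, a lightweight check can run inside the application before reaching for a full profiler. This sketch, with illustrative names, counts threads by state and asks the JVM whether any threads are deadlocked. Many BLOCKED threads in the histogram would hint at lock contention worth investigating with a thread dump.

```java
import java.lang.management.ManagementFactory;
import java.util.EnumMap;
import java.util.Map;

// Illustrative sketch: a quick in-process look at thread states and deadlocks.
class ThreadAnalysis {

    // Counts live threads by their current state (RUNNABLE, BLOCKED, WAITING, ...).
    static Map<Thread.State, Integer> stateHistogram() {
        Map<Thread.State, Integer> counts = new EnumMap<>(Thread.State.class);
        for (Thread thread : Thread.getAllStackTraces().keySet()) {
            counts.merge(thread.getState(), 1, Integer::sum);
        }
        return counts;
    }

    // Number of threads the JVM reports as deadlocked; 0 when there are none.
    static int deadlockedThreadCount() {
        long[] ids = ManagementFactory.getThreadMXBean().findDeadlockedThreads();
        return ids == null ? 0 : ids.length;
    }

    public static void main(String[] args) {
        System.out.println("thread states      = " + stateHistogram());
        System.out.println("deadlocked threads = " + deadlockedThreadCount());
    }
}
```

A non-zero deadlock count is a natural candidate for an alert, since deadlocked threads never recover on their own.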

5. Best Practices

Benchmarking: Regularly benchmark your application under different loads to understand its performance characteristics.

Incremental Tuning: Make small, incremental changes and monitor their impact.

Documentation: Keep detailed records of all changes and their effects on performance.

Regular Monitoring: Continuously monitor heap usage and GC performance to identify and address issues proactively.

Application Profiling: Use profiling tools to identify memory leaks and optimise memory usage in the application code.

6. Common Pitfalls

Over-Tuning: Avoid excessive tuning that can lead to instability.

Ignoring Application Code: JVM tuning can only go so far. Ensure that the application code is optimised for performance.

Premature Optimisation: One of the most common pitfalls is optimising too early. Developers often try to tune performance before they have a clear understanding of where the bottlenecks are. This can lead to wasted effort and suboptimal results. It’s essential to profile the application first to identify the actual performance issues before making any changes.

Ignoring Garbage Collection Impact: Garbage collection is a crucial aspect of JVM performance. Ignoring its impact can lead to significant performance degradation. Common mistakes include:

Not monitoring GC logs: Failing to monitor GC logs can result in missing critical information about memory usage and GC pauses.

Improper GC tuning: Using default GC settings without considering the application’s specific needs can lead to inefficient memory management and long GC pauses.

Overlooking Thread Management: Thread management is vital for multi-threaded applications. Common pitfalls include:

Ignoring thread contention: High thread contention can lead to performance bottlenecks. It’s essential to analyse thread dumps and identify contention points.

Improper thread pool configuration: Using inappropriate thread pool sizes can lead to either resource exhaustion or under-utilisation.

Misconfiguring Heap Size: Heap size configuration is critical for JVM performance. Common mistakes include:

Setting heap size too large: A large heap size can lead to long GC pauses, affecting application responsiveness.

Setting heap size too small: A small heap size can cause frequent GC cycles, leading to high CPU usage and performance degradation.

Ignoring Native Memory Usage: Java applications can also use native memory, especially when using JNI (Java Native Interface) or off-heap memory. Ignoring native memory usage can lead to out-of-memory errors and crashes. It’s essential to monitor both heap and native memory usage.

Not Using Appropriate Tools: Relying solely on manual tuning without using appropriate tools can be inefficient. Common mistakes include:

Not using profiling tools: Profiling tools like Java VisualVM, JProfiler, and YourKit provide valuable insights into application performance.

Ignoring monitoring tools: Tools like JMX, Prometheus, and Grafana help monitor JVM metrics and detect issues early.

Overlooking Application-Specific Tuning: Every application has unique performance characteristics. Common pitfalls include:

Using generic tuning parameters: Applying generic tuning parameters without considering the application’s specific workload can lead to suboptimal performance.

Ignoring application-level optimisations: JVM tuning alone may not be sufficient. Optimising the application’s code, algorithms, and database queries is equally important.

Failing to Test in Production-Like Environments: Testing performance changes in non-production environments can lead to misleading results. Common mistakes include:

Not replicating production load: Failing to replicate the production load can result in tuning parameters that do not perform well in real-world scenarios.

Ignoring environmental differences: Differences in hardware, network, and other environmental factors can impact performance. It’s essential to test in environments that closely resemble production.

Conclusion

Fine-tuning the JVM is a complex but rewarding process that can lead to significant performance gains for enterprise applications. By understanding the JVM components, managing heap memory, optimising garbage collection, and efficiently handling threads, you can ensure your application runs smoothly and efficiently. By using the right tools and techniques, developers can gain valuable insights into their applications’ behaviour, identify performance bottlenecks, and optimise resource usage. Whether in development, testing, or production, these practices help ensure that Java applications run efficiently and effectively.
