What Is a Load Balancer? A Comprehensive Golang Tutorial for Building Your Own

Uttkarsh


Introduction

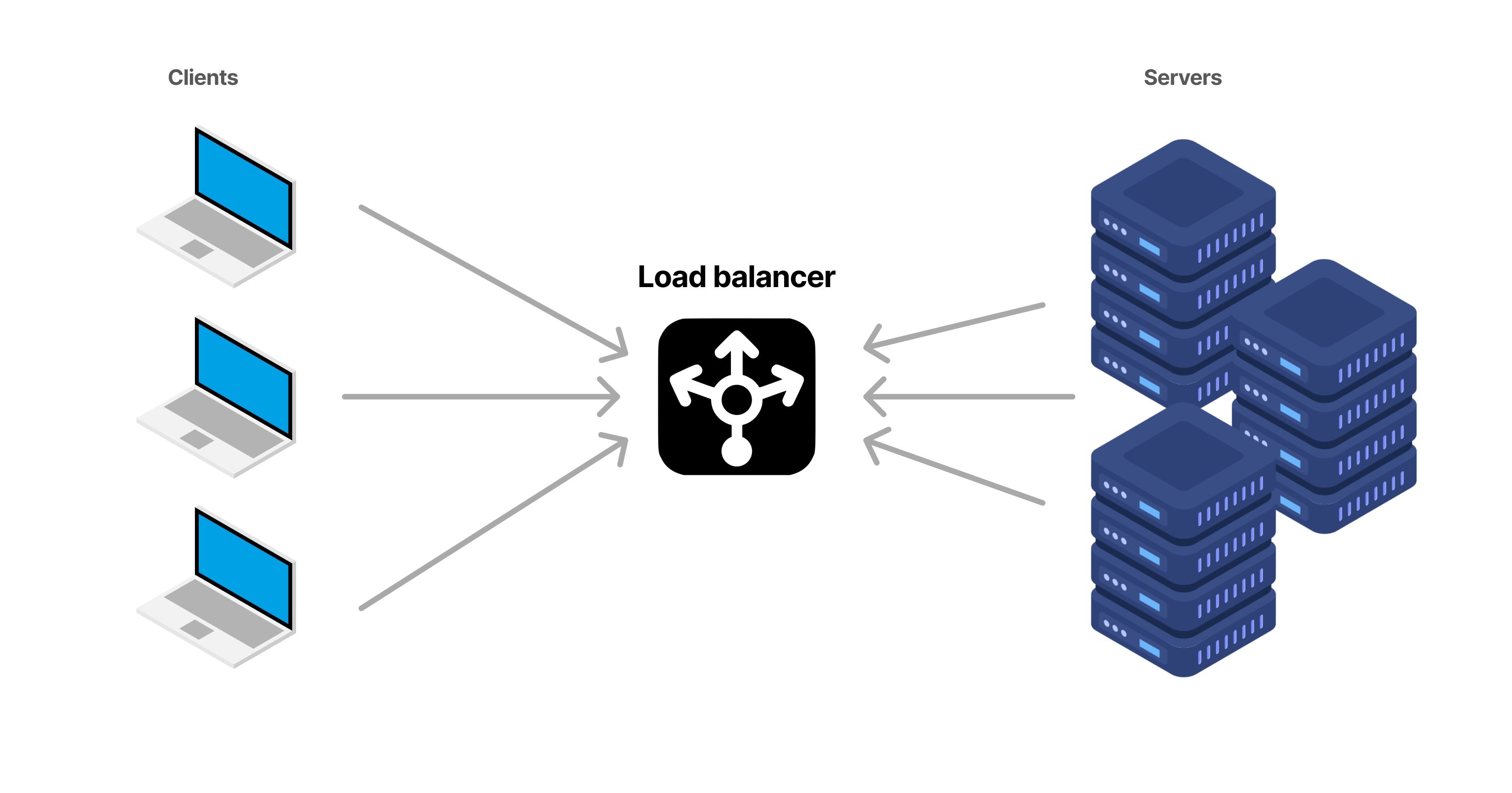

A load balancer is a crucial component of modern infrastructure. It acts as a traffic manager that distributes incoming network requests across multiple servers so that no single server gets overwhelmed. By routing traffic intelligently, load balancers help reduce latency, prevent server overload, and improve the overall performance and availability of applications. Whether you’re running a high-traffic website, an API, or a set of microservices, a load balancer helps keep your system responsive, scalable, and reliable by making good use of every available server.

How does it work?

In a typical setup without a load balancer, the user's browser sends its request directly to the web server's IP address. The web server processes the request and sends the website data straight back to the user. In a load-balanced setup, an additional layer sits in between.

A load balancer acts as an intermediary between users and your server cluster. It distributes incoming requests across multiple servers (also known as backend or target servers) to ensure that no single server is overwhelmed. Here’s how it works:

User Request: The user sends a request to access a website via their browser.

Request Received by Load Balancer: Instead of going directly to a web server, the request first reaches the load balancer.

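Traffic Distribution: The load balancer picks a backend server according to its algorithm. Simple algorithms just rotate through the servers. Smarter ones weigh factors such as current load, health, and response times to find the most suitable server. The load balancer then forwards the request to that server.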

Server Response: The selected server processes the request and sends the website data back to the load balancer.

Response Forwarded to User: Finally, the load balancer forwards the response back to the user.

By strategically distributing traffic, the load balancer optimizes resource usage, improves server efficiency, and enhances overall application performance.
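The flow above can be sketched with Go's standard library. `httputil.NewSingleHostReverseProxy` plays the intermediary: it receives the client's request, forwards it to a backend, and relays the response. This is a minimal illustration only. It assumes a single hypothetical backend started with `httptest`, so no balancing happens yet; the tutorial below builds its own forwarding logic instead of using `ReverseProxy`.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"net/http/httputil"
	"net/url"
)

// startDemo starts one backend and a proxy in front of it, sends a request
// through the proxy, and returns the body the client received.
func startDemo() (string, error) {
	// Step 4: the backend server does the actual work.
	backend := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintf(w, "hello from backend, path=%s", r.URL.Path)
	}))
	defer backend.Close()

	target, err := url.Parse(backend.URL)
	if err != nil {
		return "", err
	}
	// Steps 2, 3 and 5: the proxy receives the request, forwards it to the
	// backend, and relays the backend's response to the client.
	proxy := httptest.NewServer(httputil.NewSingleHostReverseProxy(target))
	defer proxy.Close()

	// Step 1: the client only ever talks to the proxy's address.
	resp, err := http.Get(proxy.URL + "/menu")
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

func main() {
	body, err := startDemo()
	if err != nil {
		panic(err)
	}
	fmt.Println(body) // hello from backend, path=/menu
}
```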

Understanding Load Balancers and Their Algorithms: A Restaurant Analogy

Load balancing algorithms are essential for managing traffic across servers, ensuring that no single server becomes overwhelmed. They can be categorized into static and dynamic types, each with its own approach to distributing requests. To make these concepts more relatable, let’s use a restaurant analogy where:

Customers are clients,

Orders are API requests,

Waiters are the request mediums,

Cooks are the servers,

The head chef is the load balancer.

Static Load Balancing Algorithms

Static algorithms follow predefined rules and do not adapt based on real-time server conditions:

Round Robin: Requests are distributed in a fixed rotation among servers. Each server receives requests in turn, regardless of its current load.

Restaurant Analogy: The head chef assigns each order to a different cook in a fixed sequence. For instance, Cook A handles the first order, Cook B the second, and so on, irrespective of how busy each cook is.

Weighted Round Robin: Servers are assigned weights based on their capacity. Requests are distributed in rotation, but servers with higher weights receive more traffic.

Restaurant Analogy: The head chef knows that Cook A is more experienced and can handle more orders efficiently. Thus, Cook A receives more orders compared to Cook B and Cook C, following a weighted rotation.
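A naive weighted round robin just repeats each server `weight` times in the rotation, which sends a heavy server its orders in bursts. The sketch below swaps in the "smooth" weighted round robin variant popularized by nginx, which interleaves the picks instead. The type and method names are hypothetical.

```go
package main

import "fmt"

// weightedServer tracks a server's static weight and its running score.
type weightedServer struct {
	name    string
	weight  int
	current int
}

// SmoothWeightedRR: over any window of sum(weights) picks, each server is
// chosen exactly `weight` times, and picks of heavy servers are spread out.
type SmoothWeightedRR struct {
	servers []*weightedServer
}

func (w *SmoothWeightedRR) Add(name string, weight int) {
	w.servers = append(w.servers, &weightedServer{name: name, weight: weight})
}

// Next raises every server's score by its weight, picks the highest score,
// then lowers the winner's score by the total weight.
func (w *SmoothWeightedRR) Next() string {
	total := 0
	var best *weightedServer
	for _, s := range w.servers {
		s.current += s.weight
		total += s.weight
		if best == nil || s.current > best.current {
			best = s
		}
	}
	if best == nil {
		return "" // no servers registered
	}
	best.current -= total
	return best.name
}

func main() {
	wrr := &SmoothWeightedRR{}
	wrr.Add("cook-A", 2) // more experienced: twice the share of orders
	wrr.Add("cook-B", 1)
	wrr.Add("cook-C", 1)
	var picks []string
	for i := 0; i < 8; i++ {
		picks = append(picks, wrr.Next())
	}
	fmt.Println(picks) // [cook-A cook-B cook-C cook-A cook-A cook-B cook-C cook-A]
}
```

With weights 2, 1 and 1, Cook A gets half of the orders, but never two in a row within one cycle.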

IP Hash: Requests are routed based on a hash of the client’s IP address. This ensures that requests from the same client consistently reach the same server.

Restaurant Analogy: Each customer’s table number (similar to their IP address) determines which cook will handle their order. Customers sitting at the same table always get their orders prepared by the same cook.
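IP hashing can be sketched as "hash the client IP, take it modulo the number of servers". This sketch makes two assumptions: it uses FNV-1a as the hash, and it strips the port from `ip:port` strings like the ones in `http.Request.RemoteAddr`. The function name is hypothetical. Simple modulo hashing remaps most clients whenever a server is added or removed, which is why production balancers often use consistent hashing instead.

```go
package main

import (
	"fmt"
	"hash/fnv"
	"net"
)

// pickByIPHash maps a client address to one of the servers. The same IP
// always lands on the same server as long as the server list is unchanged.
func pickByIPHash(remoteAddr string, servers []string) string {
	// Hash only the IP so that different connections (ports) from one
	// client stick to the same server.
	host, _, err := net.SplitHostPort(remoteAddr)
	if err != nil {
		host = remoteAddr // no port: treat the input as a bare IP
	}
	h := fnv.New32a()
	h.Write([]byte(host))
	return servers[h.Sum32()%uint32(len(servers))]
}

func main() {
	servers := []string{"cook-A", "cook-B", "cook-C"}
	fmt.Println(pickByIPHash("203.0.113.7:51000", servers))
	fmt.Println(pickByIPHash("203.0.113.7:51999", servers)) // same IP, so same server
}
```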

Dynamic Load Balancing Algorithms

Dynamic algorithms adjust distribution based on real-time conditions such as server load and response time:

Least Connection: Traffic is directed to the server with the fewest active connections, assuming that each connection requires similar processing power.

Restaurant Analogy: The head chef tracks how many orders each cook is currently handling. The next order is assigned to the cook who has the fewest orders in progress at that moment.

Weighted Least Connection: Servers are assigned weights reflecting their capacity. The load balancer routes each request to the server with the fewest active connections relative to its weight, that is, the lowest connections-to-weight ratio.

Restaurant Analogy: The head chef considers which cooks are currently handling the fewest orders and also takes into account each cook’s ability to handle more orders. The next order is assigned based on both current load and cook capacity.
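Weighted least connection can be sketched as "pick the lowest active/weight ratio". If every weight is 1, this reduces to the plain least-connection algorithm, which the tutorial implements later with a heap. The `backend` record and function name below are hypothetical. The ratios are compared by cross-multiplication so the comparison stays in integer arithmetic.

```go
package main

import "fmt"

// backend is a hypothetical server record.
type backend struct {
	name   string
	weight int // relative capacity; must be > 0
	active int // requests currently in flight
}

// pickWeightedLeastConn returns the backend with the lowest active/weight
// ratio, comparing a.active/a.weight < b.active/b.weight as
// a.active*b.weight < b.active*a.weight.
func pickWeightedLeastConn(backends []*backend) *backend {
	var best *backend
	for _, b := range backends {
		if best == nil || b.active*best.weight < best.active*b.weight {
			best = b
		}
	}
	return best
}

func main() {
	cooks := []*backend{
		{name: "cook-A", weight: 3, active: 4}, // 4/3 ≈ 1.33
		{name: "cook-B", weight: 1, active: 2}, // 2/1 = 2
		{name: "cook-C", weight: 1, active: 1}, // 1/1 = 1
	}
	b := pickWeightedLeastConn(cooks)
	b.active++          // the chosen cook takes the order
	fmt.Println(b.name) // cook-C
}
```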

Weighted Response Time: Considers both the server’s response time and current connections. Requests are sent to servers that are both fast and less busy.

Restaurant Analogy: The head chef checks how quickly each cook usually works and how many orders they are currently handling. Orders are sent to cooks who are both quick and not overloaded.

Resource-Based: Monitors server resources (like CPU and memory) and routes traffic to servers with the most available resources.

Restaurant Analogy: The head chef regularly checks how much energy or focus each cook has left. Orders are assigned to cooks who have the most resources available to handle new tasks efficiently.
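A resource-based balancer needs the backends to report their metrics, for example through a periodic health endpoint. That mechanism, the `metrics` fields, and the scoring rule below are all assumptions for illustration. The sketch scores each server by its scarcer resource, so a machine with idle CPU but almost no free memory still counts as a poor target.

```go
package main

import "fmt"

// metrics is a hypothetical snapshot reported by a backend.
type metrics struct {
	name    string
	cpuFree float64 // fraction of CPU idle, 0..1
	memFree float64 // fraction of memory free, 0..1
}

// headroom is the smaller of the two free fractions.
func headroom(m metrics) float64 {
	if m.cpuFree < m.memFree {
		return m.cpuFree
	}
	return m.memFree
}

// pickMostHeadroom returns the server with the most spare capacity.
func pickMostHeadroom(ms []metrics) string {
	best := ms[0]
	for _, m := range ms[1:] {
		if headroom(m) > headroom(best) {
			best = m
		}
	}
	return best.name
}

func main() {
	fmt.Println(pickMostHeadroom([]metrics{
		{name: "cook-A", cpuFree: 0.9, memFree: 0.1}, // headroom 0.1
		{name: "cook-B", cpuFree: 0.5, memFree: 0.6}, // headroom 0.5
		{name: "cook-C", cpuFree: 0.3, memFree: 0.8}, // headroom 0.3
	})) // cook-B
}
```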

Let's cut to the chase and dive into the code! 🚀

Step-by-Step Guide to Building a Load Balancer in Golang

In this section, we'll build a basic load balancer in Go. This guide assumes at least a basic knowledge of the language. Building our own load balancer gives us insight into how one works and how it can be customized to meet different requirements. Let’s dive in!

We’ll rely mostly on Go’s standard library, plus the Gin web framework for routing, to develop our load balancer. The focus will be on implementing two types of load balancing algorithms:

Static Load Balancer: We’ll start by creating a round-robin load balancer. This method distributes incoming requests sequentially across a set of servers, ensuring a balanced distribution without considering the current load of each server.

Dynamic Load Balancer: Next, we’ll implement a least-connection load balancer. This algorithm directs traffic to the server with the fewest active connections, allowing for more efficient handling of varying traffic loads.

Through this tutorial, you'll not only build these load balancing strategies but also understand their practical applications and how to integrate them into real-world scenarios. Let’s get coding!

First, to dynamically determine the server with the least connections, we need an efficient method to prioritize servers based on their connection counts. For this purpose, we'll utilize a priority queue. A priority queue is a data structure that allows us to efficiently retrieve and remove the element with the highest (or lowest) priority—in our case, the server with the fewest connections.

Go has no ready-made priority queue type. However, the standard library's container/heap package implements the heap algorithms for any type that satisfies heap.Interface, so we'll build our priority queue on top of it. For beginners, a priority queue works like a regular queue with an extra layer of ordering based on priority. Think of it as a line at a concert where VIPs get in before regular ticket holders.

To implement this, create a new file named priority_queue.go in the models directory and paste the following code. This will define our priority queue and its functionality, allowing us to manage server priorities effectively.

```go
package models

import (
	"container/heap"
)

// QueueItem represents one backend server inside the priority queue.
type QueueItem struct {
	id          int    // current index in the heap, kept up to date by Push, Swap and Pop
	Connections int    // in-flight requests; lower means higher priority
	ServerAddr  string // backend address, e.g. "http://localhost:8081"
}

func NewQueueItem(connections int, address string) *QueueItem {
	return &QueueItem{
		Connections: connections,
		ServerAddr:  address,
	}
}

// PriorityQueue is a min-heap of servers ordered by active connections.
// It implements heap.Interface; modify it through heap.Push, heap.Pop,
// heap.Fix and heap.Remove rather than calling Push/Pop directly.
type PriorityQueue []*QueueItem

func (pq *PriorityQueue) Push(x interface{}) {
	item := x.(*QueueItem)
	item.id = len(*pq)
	*pq = append(*pq, item)
}

func (pq *PriorityQueue) Len() int {
	return len(*pq)
}

func (pq *PriorityQueue) Pop() interface{} {
	old := *pq
	n := len(old)
	item := old[n-1]
	old[n-1] = nil // drop the reference so the item can be garbage-collected
	item.id = -1   // the item is no longer in the heap
	*pq = old[0 : n-1]
	return item
}

func (pq *PriorityQueue) Less(i, j int) bool {
	return (*pq)[i].Connections < (*pq)[j].Connections
}

func (pq *PriorityQueue) Swap(i, j int) {
	(*pq)[i], (*pq)[j] = (*pq)[j], (*pq)[i]
	(*pq)[i].id = i
	(*pq)[j].id = j
}

// Update modifies the number of connections of a specific QueueItem
// and restores the heap order around it.
func (pq *PriorityQueue) Update(item *QueueItem, connections int) {
	item.Connections = connections
	heap.Fix(pq, item.id)
}

// GetItemMinConnections returns, without removing it, the server with the
// fewest connections. In a min-heap that is always the element at index 0.
func (pq *PriorityQueue) GetItemMinConnections() *QueueItem {
	if pq.Len() == 0 {
		return nil
	}
	return (*pq)[0]
}

// RemoveByServerAddr removes the item with the given address, if present.
func (pq *PriorityQueue) RemoveByServerAddr(serverAddr string) *QueueItem {
	for i, item := range *pq {
		if item.ServerAddr == serverAddr {
			return heap.Remove(pq, i).(*QueueItem)
		}
	}
	return nil
}

// GetItemByServerAddr finds an item by address (linear scan) without removing it.
func (pq *PriorityQueue) GetItemByServerAddr(serverAddr string) *QueueItem {
	for _, item := range *pq {
		if item.ServerAddr == serverAddr {
			return item
		}
	}
	return nil
}
```

Next, we’ll define the Load Balancer model. It keeps track of the registered servers and their connection counts and forwards requests to them. Create a new file named load_balancer.go in the models directory. This single model implements both the Round Robin and the Least Connections strategies.

```go
package models

import (
	"container/heap"
	"fmt"
	"net/http"
	"net/url"
	"sync"
)

// LoadBalancingStrategy selects the algorithm used to pick a backend.
type LoadBalancingStrategy int

const (
	RoundRobin LoadBalancingStrategy = iota
	LeastConnections
)

type LoadBalancer struct {
	ServerAddr  []string              // list of server addresses in the load balancer
	Servers     map[string]int        // server address -> index in ServerAddr, for easy retrieval
	RRNext      int                   // index of the server returned last in round-robin mode
	Connections PriorityQueue         // min-heap giving the server with the least connections
	Type        LoadBalancingStrategy // RoundRobin or LeastConnections
	Mutex       sync.Mutex            // guards all of the fields above
}

func NewLoadBalancer() *LoadBalancer {
	lb := &LoadBalancer{
		ServerAddr:  make([]string, 0),
		Servers:     make(map[string]int),
		RRNext:      -1,
		Connections: make(PriorityQueue, 0),
		Type:        RoundRobin,
	}
	heap.Init(&lb.Connections)
	return lb
}

func NewRoundRobinLoadBalancer() *LoadBalancer {
	lb := NewLoadBalancer()
	lb.Type = RoundRobin
	return lb
}

func NewLeastConnectionLoadBalancer() *LoadBalancer {
	lb := NewLoadBalancer()
	lb.Type = LeastConnections
	return lb
}

// A single shared client reuses TCP connections to the backends.
var client = &http.Client{}

func (lb *LoadBalancer) AddServer(connections int, serverAdd string) error {
	lb.Mutex.Lock()
	defer lb.Mutex.Unlock()
	if _, contains := lb.Servers[serverAdd]; contains {
		return fmt.Errorf("error: This server already exists")
	}
	item := NewQueueItem(connections, serverAdd)
	lb.ServerAddr = append(lb.ServerAddr, serverAdd)
	lb.Servers[serverAdd] = len(lb.ServerAddr) - 1
	heap.Push(&lb.Connections, item)
	return nil
}

func (lb *LoadBalancer) DeleteServer(address string) error {
	lb.Mutex.Lock()
	defer lb.Mutex.Unlock()
	index, contains := lb.Servers[address]
	if !contains {
		return fmt.Errorf("error: The server does not exist")
	}
	lb.Connections.RemoveByServerAddr(address)
	lb.ServerAddr = append(lb.ServerAddr[:index], lb.ServerAddr[index+1:]...)
	delete(lb.Servers, address)
	// Re-index the remaining servers, since later entries shifted left.
	for i, addr := range lb.ServerAddr {
		lb.Servers[addr] = i
	}
	return nil
}

// GetServerWithMinimumServerAddr returns the server with the fewest active
// connections and counts the new request against it. Caller must hold lb.Mutex.
func (lb *LoadBalancer) GetServerWithMinimumServerAddr() string {
	item := lb.Connections.GetItemMinConnections()
	fmt.Printf("Forwarding request to %s.\n", item.ServerAddr)
	lb.Connections.Update(item, item.Connections+1)
	return item.ServerAddr
}

// GetRoundRobinNextServerAddr advances the rotation. Caller must hold lb.Mutex.
func (lb *LoadBalancer) GetRoundRobinNextServerAddr() string {
	lb.RRNext = (lb.RRNext + 1) % len(lb.ServerAddr)
	return lb.ServerAddr[lb.RRNext]
}

// releaseConnection decrements the connection count of serverAddr once a
// request to it has finished.
func (lb *LoadBalancer) releaseConnection(serverAddr string) {
	lb.Mutex.Lock()
	defer lb.Mutex.Unlock()
	// The server may have been deleted while the request was in flight.
	if item := lb.Connections.GetItemByServerAddr(serverAddr); item != nil {
		lb.Connections.Update(item, item.Connections-1)
	}
}

func (lb *LoadBalancer) ForwardRequest(request *http.Request) (*http.Response, error) {
	// Hold the lock only while choosing a server. Holding it during the
	// upstream call would serialize all traffic through the balancer.
	lb.Mutex.Lock()
	if len(lb.ServerAddr) == 0 {
		lb.Mutex.Unlock()
		return nil, fmt.Errorf("error: No servers available. Register a server")
	}
	var serverAddr string
	switch lb.Type {
	case RoundRobin:
		serverAddr = lb.GetRoundRobinNextServerAddr() // Round Robin implementation
	case LeastConnections:
		serverAddr = lb.GetServerWithMinimumServerAddr() // Least Connections implementation
	default:
		lb.Mutex.Unlock()
		return nil, fmt.Errorf("error: Unknown load balancing strategy")
	}
	lb.Mutex.Unlock()

	// Count the request as finished when this function returns (once the
	// response headers have arrived), whether the upstream call succeeded or not.
	if lb.Type == LeastConnections {
		defer lb.releaseConnection(serverAddr)
	}

	serverURL, err := url.Parse(serverAddr)
	if err != nil {
		return nil, err
	}

	// Create a new request to forward to the target server, keeping the
	// original path and query string and the client's cancellation context.
	proxyURL := serverURL.String() + request.URL.Path
	if request.URL.RawQuery != "" {
		proxyURL += "?" + request.URL.RawQuery
	}
	proxyReq, err := http.NewRequestWithContext(request.Context(), request.Method, proxyURL, request.Body)
	if err != nil {
		return nil, err
	}

	// Copy headers from the original request to the new request
	for key, values := range request.Header {
		for _, value := range values {
			proxyReq.Header.Add(key, value)
		}
	}

	return client.Do(proxyReq)
}
```

Next, create the controllers that handle incoming requests. Clients only ever talk to the load balancer, so the internal server addresses stay hidden. Create a new file named lb_controller.go in the controller directory and paste the following code.

```go
package controller

import (
	"encoding/json"
	"io"
	"net/http"

	"github.com/Uttkarsh-raj/go-load-balancer/models"
	"github.com/gin-gonic/gin"
)

// AddNewServer registers a backend. It expects a JSON body such as
// {"address": "http://localhost:8081"}.
func AddNewServer(loadBalancer *models.LoadBalancer) gin.HandlerFunc {
	return func(ctx *gin.Context) {
		var body map[string]string
		err := json.NewDecoder(ctx.Request.Body).Decode(&body)
		if err != nil {
			ctx.JSON(http.StatusBadRequest, gin.H{"success": false, "message": "Invalid JSON body"})
			return
		}
		addr := body["address"]
		if addr == "" {
			ctx.JSON(http.StatusBadRequest, gin.H{"success": false, "message": "Body missing the address field"})
			return
		}
		err = loadBalancer.AddServer(0, addr)
		if err != nil {
			ctx.JSON(http.StatusBadRequest, gin.H{"success": false, "message": err.Error()})
			return
		}
		ctx.JSON(http.StatusOK, gin.H{"success": true, "message": "Server added successfully"})
	}
}

// DeleteServer unregisters a backend. It expects the same JSON body as AddNewServer.
func DeleteServer(loadBalancer *models.LoadBalancer) gin.HandlerFunc {
	return func(ctx *gin.Context) {
		var body map[string]string
		err := json.NewDecoder(ctx.Request.Body).Decode(&body)
		if err != nil {
			ctx.JSON(http.StatusBadRequest, gin.H{"success": false, "message": "Invalid JSON body"})
			return
		}
		addr := body["address"]
		if addr == "" {
			ctx.JSON(http.StatusBadRequest, gin.H{"success": false, "message": "Body missing the address field"})
			return
		}
		err = loadBalancer.DeleteServer(addr)
		if err != nil {
			ctx.JSON(http.StatusBadRequest, gin.H{"success": false, "message": err.Error()})
			return
		}
		ctx.JSON(http.StatusOK, gin.H{"success": true, "message": "Server deleted successfully"})
	}
}

// ForwardRequest handles every incoming request: the two management
// endpoints are served locally, everything else is proxied to a backend.
func ForwardRequest(loadBalancer *models.LoadBalancer) gin.HandlerFunc {
	return func(ctx *gin.Context) {
		request := ctx.Request

		// if the Add Server request is called
		if request.Method == http.MethodPost && request.URL.Path == "/addServer" {
			AddNewServer(loadBalancer)(ctx)
			return
		}

		// if the Delete Server request is called
		if request.Method == http.MethodDelete && request.URL.Path == "/delete" {
			DeleteServer(loadBalancer)(ctx)
			return
		}

		resp, err := loadBalancer.ForwardRequest(request)
		if err != nil {
			ctx.JSON(http.StatusInternalServerError, gin.H{"success": false, "message": err.Error()})
			return
		}
		// Close the body on every path, including a failed read below.
		defer resp.Body.Close()

		body, err := io.ReadAll(resp.Body)
		if err != nil {
			ctx.JSON(http.StatusInternalServerError, gin.H{"success": false, "message": err.Error()})
			return
		}

		// Relay the backend's headers, status code and body to the client.
		for k, v := range resp.Header {
			for _, h := range v {
				ctx.Writer.Header().Add(k, h)
			}
		}
		ctx.Writer.WriteHeader(resp.StatusCode)
		ctx.Writer.Write(body)
	}
}
```

Next, set up the routes for handling incoming requests. Create a new file named routes.go in the routes folder and add the following code. Note that there will be only a single route, as all navigation is managed internally by the load balancer.

```go
package routes

import (
	"github.com/Uttkarsh-raj/go-load-balancer/controller"
	"github.com/Uttkarsh-raj/go-load-balancer/models"
	"github.com/gin-gonic/gin"
)

func SetRoutes(router *gin.Engine, loadBalancer *models.LoadBalancer) {
	router.Any("/*path", controller.ForwardRequest(loadBalancer)) // Single route for all the requests
}
```

Finally, create the main.go file in the root directory. This will be the entry point for the program. We’ll use the Gin framework for its simplicity and efficiency in handling HTTP requests and responses.

```go
package main

import (
	"fmt"
	"log"

	"github.com/Uttkarsh-raj/go-load-balancer/models"
	"github.com/Uttkarsh-raj/go-load-balancer/routes"
	"github.com/gin-gonic/gin"
)

func main() {
	fmt.Println("Starting Server.....")
	router := gin.New()
	router.Use(gin.Logger())

	// Swap the comments on the next two lines to use round robin instead.
	// balancer := models.NewRoundRobinLoadBalancer()
	balancer := models.NewLeastConnectionLoadBalancer()

	routes.SetRoutes(router, balancer)
	log.Fatal(router.Run(":3000"))
}
```

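To run the program, open a terminal in your project directory and start the load balancer with the following command:

```sh
go run main.go
```

The load balancer listens on port 3000. To register a backend server, send a POST request to `/addServer` with a JSON body such as `{"address": "http://localhost:8081"}`. To remove one, send a DELETE request to `/delete` with the same body. Include the scheme (`http://`) in the address, because the load balancer parses it with `url.Parse` and appends the request path to it. Every other request is forwarded to one of the registered servers.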

Summary

In this blog, we’ve explored load balancers and learned how to build our own in Go. We started by understanding the basics of load balancing and its role in distributing traffic efficiently across servers. Using a restaurant analogy, we made these concepts more relatable by comparing customers, orders, waiters, and cooks to clients, API requests, request mediums, and servers, with the head chef acting as the load balancer.

We then moved on to practical implementation, building a load balancer that incorporates both static (Round Robin) and dynamic (Least Connections) algorithms. We utilized a priority queue for managing server priorities and set up controllers and routes to handle incoming requests while keeping internal server IP addresses hidden.

Additionally, I’ve written tests for the load balancer and included demo videos in the project’s README on GitHub. Feel free to check them out, give the project a star, and follow me on GitHub to see more projects like this. Don’t forget to explore my other blogs for more insights and tutorials!

Repository: https://github.com/Uttkarsh-raj/Go-Load-Balancer

GitHub: https://github.com/Uttkarsh-raj

Twitter/X: Uttkarsh


Written by

Uttkarsh


Hi, I'm Uttkarsh, a tech enthusiast with a knack for building cool stuff. I enjoy translating ideas into code, whether it's crafting user interfaces or tackling new challenges. There's a certain satisfaction in creating things. I'm here to keep learning, coding, and having fun along the way. When not coding, you'll find me exploring different interests and trying to make the most out of every moment. Open to collaborations and always up for a tech chat. Feel free to connect!