Azure AKS Troubleshooting Hands-On - Connectivity Issues Between Pods and Services Within the Same Cluster

Francisco Souza

Table of contents

- 📝Introduction

- 📝Log in to the Azure Management Console

- 📝Prerequisites:

- 📝Setting an Azure Storage Account to Load Bash or PowerShell

- 📝Default settings for this Lab

- 📝Create log analytics workspace

- 📝Create custom AKS VNet and Subnet

- 📝Create an AKS Cluster using a custom VNet subnet

- 📝Connect to AKS Cluster

- 📝Switch to a new namespace

- 📝Enable AKS Diagnostics logging using CLI

- 📝Create Deployments and Services:

- 📝Simulate the Issue:

- 📝Troubleshoot the issue:

- 📝Solving the Issue:

- 📝Extra Troubleshooting Using Custom Domain Names

📝Introduction

In this hands-on lab, we will walk through troubleshooting a real-world scenario in Azure Kubernetes Service (AKS) involving a common issue: connectivity problems between Pods and Services within the same cluster.

Learning objectives:

In this module, you'll learn how to:

Identify the issue

Resolve the issue

📝Log in to the Azure Management Console

Sign in with your credentials and make sure you're using the right region. In my case, I am using the uksouth region in my Cloud Playground Sandbox.

📌Note: You can also use VS Code or your local terminal to run the Azure CLI.

More information on how to set it up is at the link.

📝Prerequisites:

- Update to PowerShell 5.1, if needed.

- Install .NET Framework 4.7.2 or later.

- Visual Studio Code

- Web browser (Chrome, Edge)

- Azure CLI installed

- Azure subscription

- Docker installed

📝Setting an Azure Storage Account to Load Bash or PowerShell

- Click the Cloud Shell icon (>_) at the top of the page.

- Click PowerShell.

- Click **Show advanced settings**. Use the drop-down under **Cloud Shell region** to select the region. Under **Resource group** and **Storage account** (the storage account name must be globally unique), enter a name for each. In the box under **File share**, enter a name. Click **Create storage** (if you don't have one yet).

📝Default settings for this Lab

- First, sign in, select your subscription, and register the following resource providers:

```powershell
az login
az account set --subscription <subscriptionID>
az provider register --namespace Microsoft.Storage
az provider register --namespace Microsoft.Compute
az provider register --namespace Microsoft.Network
az provider register --namespace Microsoft.Monitor
az provider register --namespace Microsoft.ManagedIdentity
az provider register --namespace Microsoft.OperationalInsights
az provider register --namespace Microsoft.OperationsManagement
az provider register --namespace Microsoft.KeyVault
az provider register --namespace Microsoft.ContainerService
az provider register --namespace Microsoft.Kubernetes
```

Let's check which resource providers are registered:

```powershell
az provider list --query "[?registrationState=='Registered'].namespace"
```
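To spot any provider that is still missing, you can compare that output against the list registered above. The following is a minimal Python sketch. It assumes you capture the previous command's output as a JSON array (for example with --output json). The function name missing_providers is hypothetical. The comparison is case-insensitive because Azure resource provider namespaces are case-insensitive.

```python
import json

# Namespaces registered in the previous step of this lab
REQUIRED = [
    "Microsoft.Storage",
    "Microsoft.Compute",
    "Microsoft.Network",
    "Microsoft.Monitor",
    "Microsoft.ManagedIdentity",
    "Microsoft.OperationalInsights",
    "Microsoft.OperationsManagement",
    "Microsoft.KeyVault",
    "Microsoft.ContainerService",
    "Microsoft.Kubernetes",
]


def missing_providers(az_json_output: str, required=REQUIRED) -> list:
    """Return the required namespaces absent from the az provider list JSON array."""
    registered = {ns.lower() for ns in json.loads(az_json_output)}
    return [ns for ns in required if ns.lower() not in registered]


if __name__ == "__main__":
    # Hypothetical sample; paste the real JSON array printed by az here
    sample = '["Microsoft.Storage", "microsoft.compute", "Microsoft.Network"]'
    print(missing_providers(sample))
```

Registration can take a few minutes, so an empty result right after registering is not guaranteed. Re-running the query later is expected.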

📝Create log analytics workspace

First, store some names in variables. Then create the Log Analytics workspace and save its resource ID for use with az aks create.

```powershell
$resource_group="akslabs"
$aks_name="akslabs"
$location="uksouth"
$aks_vnet="$aks_name-vnet"
$aks_subnet="$aks_name-subnet"
$log_analytics_workspace_name="$aks_name-log"
```

```powershell
# No --no-wait here, so the workspace exists before the show command below runs
az monitor log-analytics workspace create `
  --resource-group $resource_group `
  --workspace-name $log_analytics_workspace_name `
  --location $location

$log_analytics_workspace_resource_id=az monitor log-analytics workspace show `
  --resource-group $resource_group `
  --workspace-name $log_analytics_workspace_name `
  --query id --output tsv

echo $log_analytics_workspace_resource_id
```

📝Create custom AKS VNet and Subnet

Create the VNet and subnet, then save the subnet resource ID for use with az aks create.

```powershell
az network vnet create `
  --resource-group $resource_group `
  --name $aks_vnet `
  --address-prefixes 10.224.0.0/12 `
  --subnet-name $aks_subnet `
  --subnet-prefixes 10.224.0.0/16

$vnet_subnet_id=az network vnet subnet show `
  --resource-group $resource_group `
  --vnet-name $aks_vnet `
  --name $aks_subnet `
  --query id --output tsv

echo $vnet_subnet_id
```
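Before creating the cluster, it can help to sanity-check the address plan. The subnet must sit inside the VNet. With kubenet, the pod CIDR and service CIDR must not overlap the VNet. This is a minimal Python sketch using the standard ipaddress module. It assumes the AKS defaults 10.244.0.0/16 (pod CIDR) and 10.0.0.0/16 (service CIDR), because the az aks create command below passes neither --pod-cidr nor --service-cidr. The function name check_address_plan is hypothetical.

```python
import ipaddress

# Address ranges used by az network vnet create in this lab
VNET = ipaddress.ip_network("10.224.0.0/12")
SUBNET = ipaddress.ip_network("10.224.0.0/16")

# Assumed AKS defaults when --pod-cidr / --service-cidr are not passed
CLUSTER_RANGES = {
    "pod CIDR": ipaddress.ip_network("10.244.0.0/16"),
    "service CIDR": ipaddress.ip_network("10.0.0.0/16"),
}


def check_address_plan(vnet, subnet, cluster_ranges) -> list:
    """Return human-readable problems; an empty list means the plan looks consistent."""
    problems = []
    if not subnet.subnet_of(vnet):
        problems.append(f"subnet {subnet} is outside VNet {vnet}")
    for name, cidr in cluster_ranges.items():
        if cidr.overlaps(vnet):
            problems.append(f"{name} {cidr} overlaps VNet {vnet}")
    return problems


if __name__ == "__main__":
    print(check_address_plan(VNET, SUBNET, CLUSTER_RANGES) or "address plan OK")
```

Here 10.224.0.0/12 spans 10.224.0.0 to 10.239.255.255, so neither default range collides with it.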

📝Create an AKS Cluster using a custom VNet subnet

Create an AKS cluster using the az aks create command:

```powershell
az aks create `
  --resource-group $resource_group `
  --name $aks_name `
  --node-count 1 `
  --node-vm-size standard_ds2_v2 `
  --network-plugin kubenet `
  --vnet-subnet-id $vnet_subnet_id `
  --enable-managed-identity `
  --network-policy calico `
  --enable-addons monitoring `
  --generate-ssh-keys `
  --workspace-resource-id $log_analytics_workspace_resource_id
```

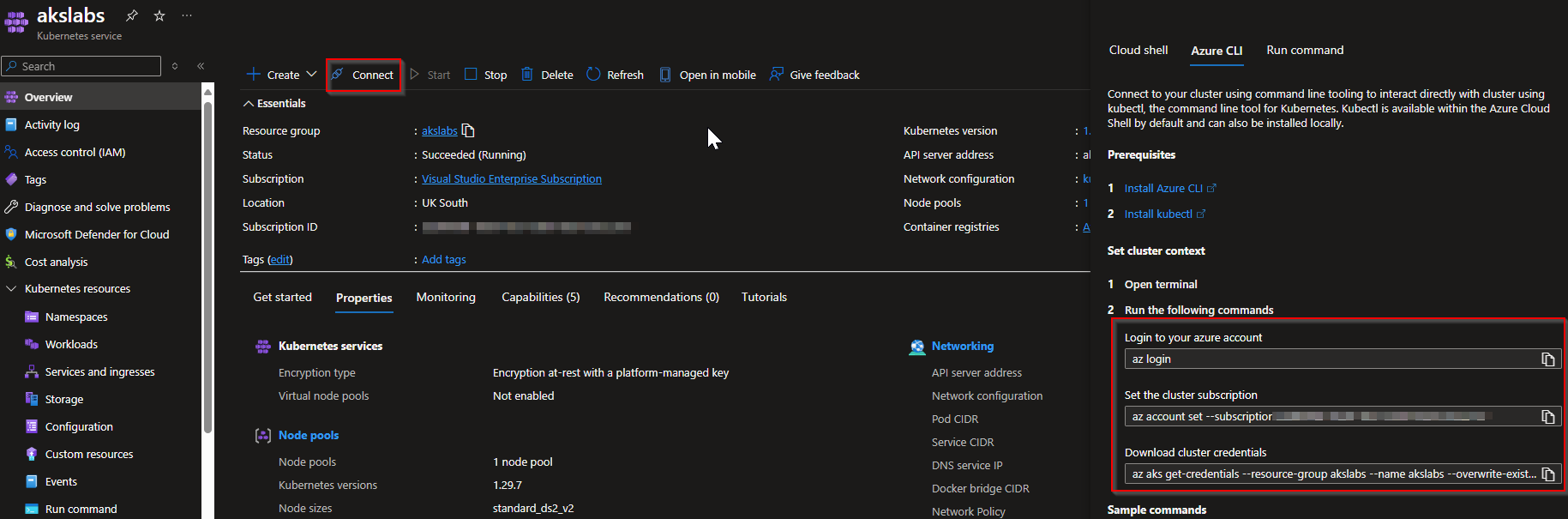

📝Connect to AKS Cluster

Use the Azure portal to check your AKS cluster resources by following the steps below:

Go to the Azure dashboard, open the resource group created for this lab, and select your AKS cluster resource.

On the **Overview** tab, click **Connect** to connect to your AKS cluster.

A pane opens that lists the commands to run. Open the Azure CLI and run them:

```powershell
az login
az account set --subscription <your-subscription-id>
az aks get-credentials -g $resource_group -n $aks_name --overwrite-existing
```

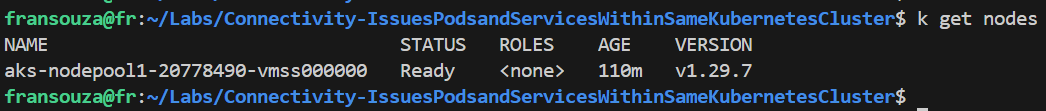

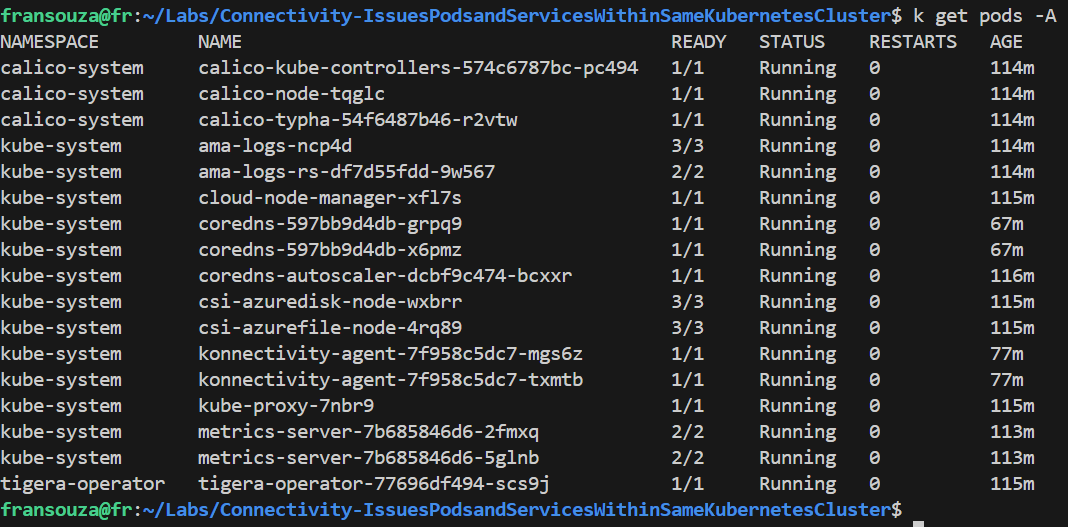

After that, you can run some kubectl commands to check the default AKS cluster resources.

📝Switch to a new namespace

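Create a new namespace and switch to it. Throughout this lab, k is used as an alias for kubectl (in PowerShell, for example: Set-Alias -Name k -Value kubectl).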

```shell
k create ns <name>
k config set-context --current --namespace=<name>
# Verify current namespace
k config view --minify --output 'jsonpath={..namespace}'
# Confirm ability to view
k get pods -A
```

📝Enable AKS Diagnostics logging using CLI

```powershell
$aks_resource_id=az aks show `
  --resource-group $resource_group `
  --name $aks_name `
  --query id `
  --output tsv

echo $aks_resource_id

az monitor diagnostic-settings create `
  --name aks_diagnostics `
  --resource $aks_resource_id `
  --logs '[{"category":"kube-apiserver","enabled":true},{"category":"kube-controller-manager","enabled":true},{"category":"kube-scheduler","enabled":true},{"category":"kube-audit","enabled":true},{"category":"cloud-controller-manager","enabled":true},{"category":"cluster-autoscaler","enabled":true},{"category":"kube-audit-admin","enabled":true}]' `
  --metrics '[{"category":"AllMetrics","enabled":true}]' `
  --workspace $log_analytics_workspace_resource_id
```
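Hand-typing the --logs JSON is error-prone, and inline JSON quoting behaves differently across shells. One option is to generate the value programmatically. This is a minimal Python sketch: the function name logs_argument is hypothetical, and the category list simply mirrors the command above.

```python
import json

# Log categories enabled in the diagnostic settings command above
CATEGORIES = [
    "kube-apiserver",
    "kube-controller-manager",
    "kube-scheduler",
    "kube-audit",
    "cloud-controller-manager",
    "cluster-autoscaler",
    "kube-audit-admin",
]


def logs_argument(categories) -> str:
    """Serialize categories into the compact JSON array used for --logs."""
    entries = [{"category": c, "enabled": True} for c in categories]
    return json.dumps(entries, separators=(",", ":"))


if __name__ == "__main__":
    print(logs_argument(CATEGORIES))
```

You could save the printed string to a file and pass it as --logs @<file>. The Azure CLI can load an argument value from a file with the @ prefix, which sidesteps shell-specific quoting of the inline JSON.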

📝Create Deployments and Services:

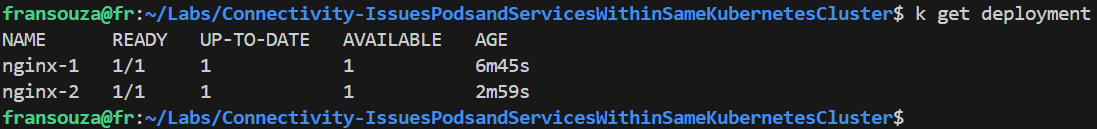

Create two deployments and their respective Services:

```shell
k create deployment <app-name-1> --image=nginx
k create deployment <app-name-2> --image=nginx
k expose deployment <app-name-1> --name <app-name-1>-svc --port=80 --target-port=80 --type=ClusterIP
k expose deployment <app-name-2> --name <app-name-2>-svc --port=80 --target-port=80 --type=ClusterIP
```

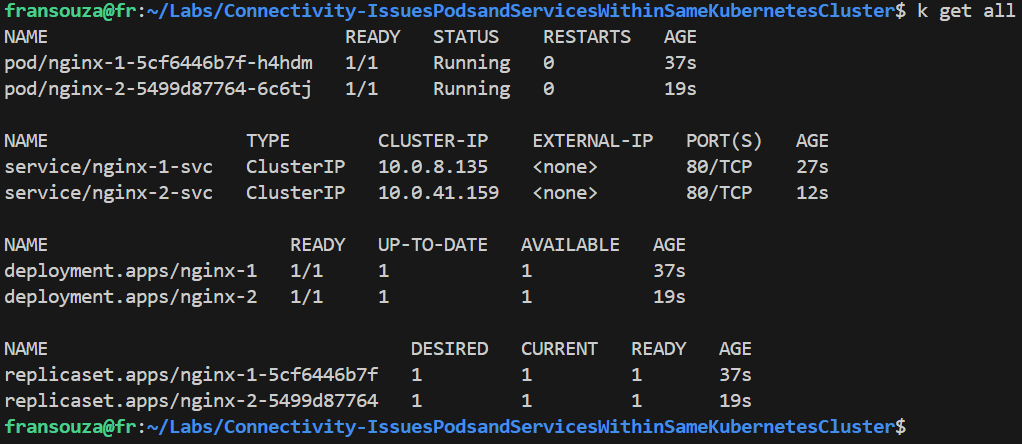

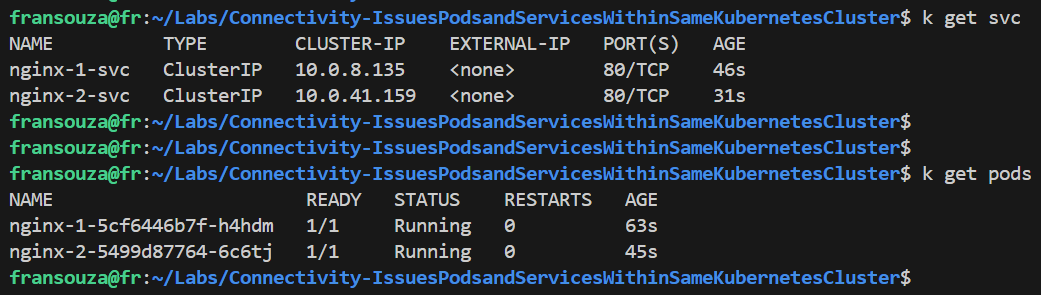

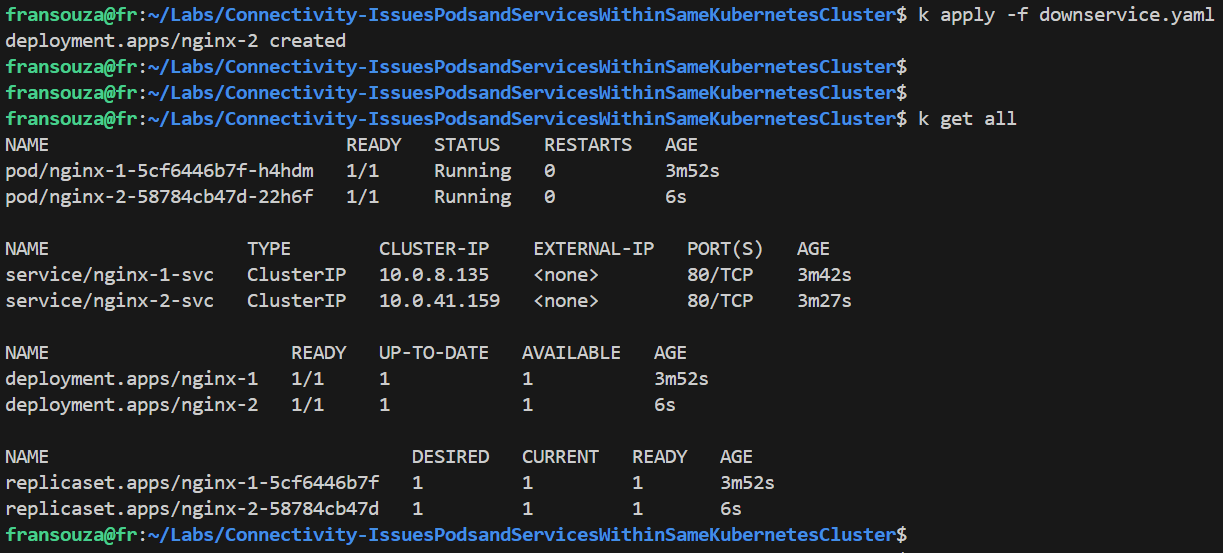

Confirm that the Deployments and Services are working: the Pods should be Running and the Services listening on port 80.

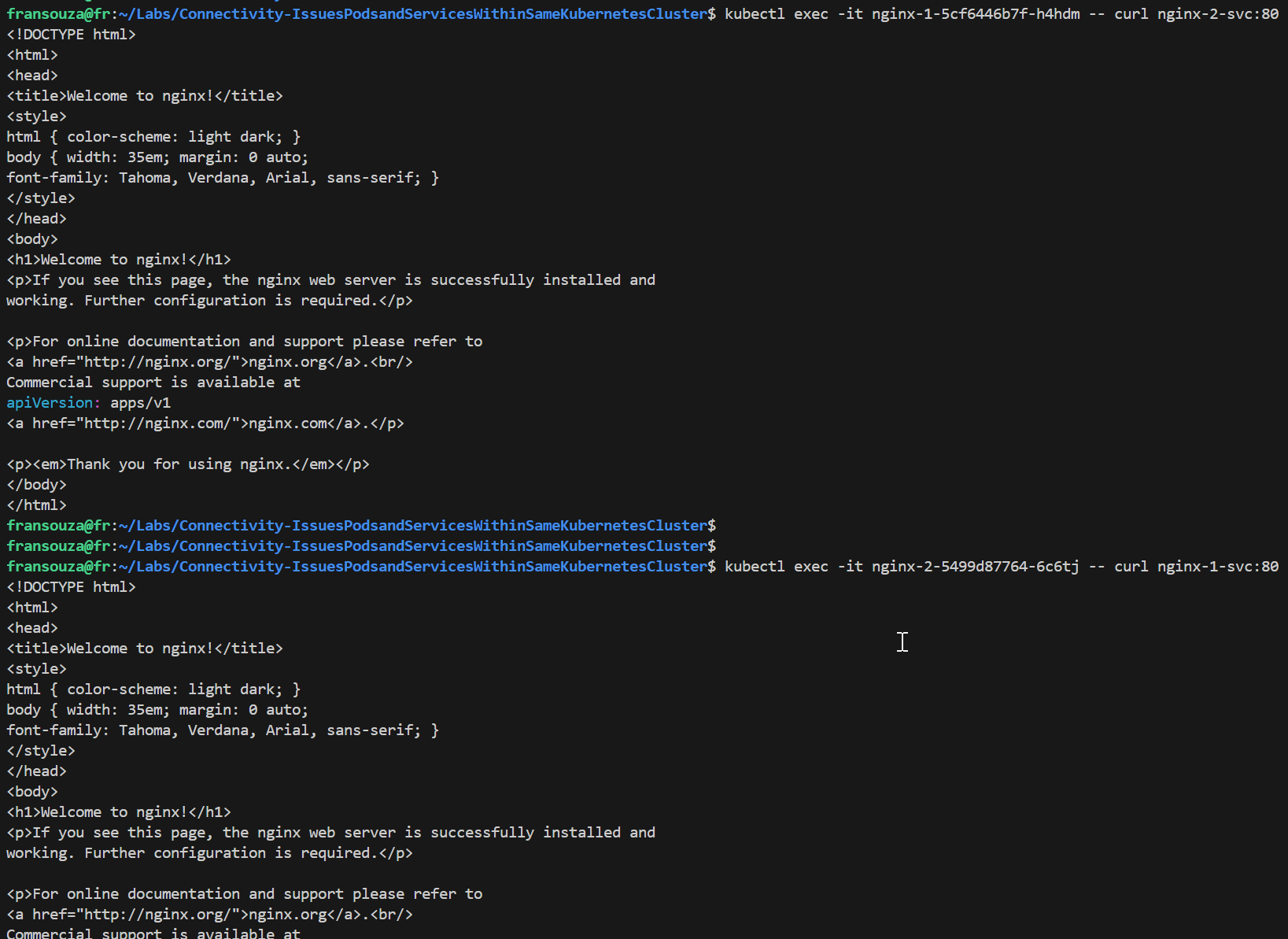

Verify that we can reach both ClusterIP Services from within the cluster, calling each one by name from inside a Pod:

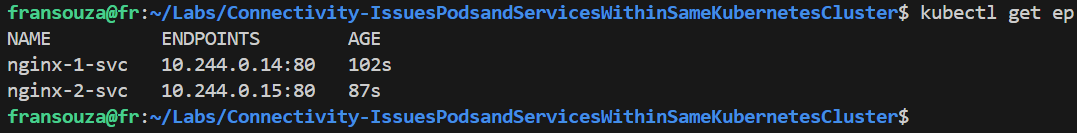

```shell
# List the Services
k get svc
# List the running Pods
k get pods
# We should see the NGINX HTML page through each Service
k exec -it <app-name-1-pod> -- curl <app-name-1>-svc:80
k exec -it <app-name-2-pod> -- curl <app-name-2>-svc:80
# Check endpoints for the Services
k get ep
```

Back up the existing deployments and services:

Let's back up the <app-name-2> deployment and service (nginx-2 in my case).

```shell
k get deployment.apps/<app-name-2> -o yaml > <app-name-2>-dep.yaml
k get service/<app-name-2>-svc -o yaml > <app-name-2>-svc.yaml
```

📝Simulate the Issue:

Let's delete the <app-name-2> deployment created earlier and recreate it from the following YAML file, which simulates a connectivity issue between Pods within the same cluster. I called this file downservice.yaml.

```shell
k delete -f <app-name-2>-dep.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  labels:
    app: <app-name-2>
  name: <app-name-2>
  namespace: <name>
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: <app-name-02>   # deliberately different from the Service selector (app: <app-name-2>)
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: <app-name-02>   # Pods get this label, so <app-name-2>-svc selects none of them
    spec:
      containers:
      - image: nginx
        imagePullPolicy: Always
        name: nginx
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
```

Apply downservice.yaml and confirm that all Pods are running:

```shell
k apply -f downservice.yaml
k get all
```

📝Troubleshoot the issue:

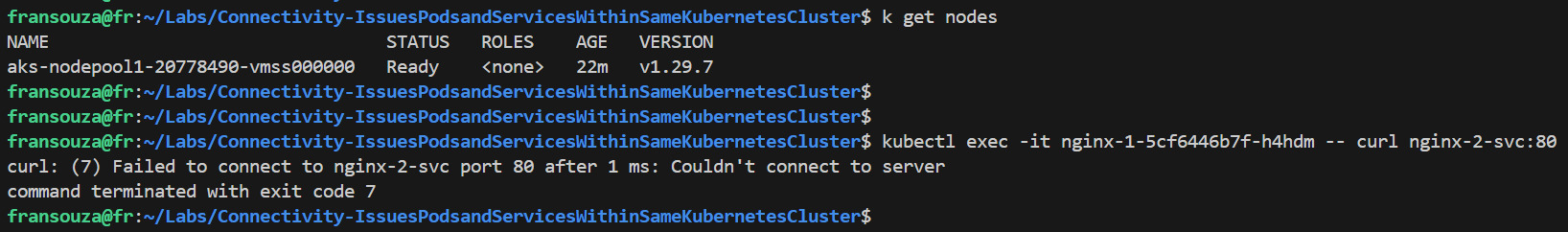

Check the health of the nodes in the cluster to see if there is a node issue:

```shell
k get nodes
```

Verify that we can no longer access <app-name-2>-svc from within the cluster:

```shell
k exec -it <app-name-1-pod> -- curl <app-name-2>-svc:80
# Failed to connect to nginx-2-svc port 80 after 1 ms: Couldn't connect to server
# command terminated with exit code 7
```
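The exit code already narrows the problem down. kubectl exec reports curl's own exit code. Code 7 means the Service name did resolve, but nothing accepted the TCP connection. That points at the Service's backends rather than at DNS. Code 6 would instead mean name resolution failed. The small Python lookup below is an illustrative helper (the name diagnose is hypothetical) that covers only a few codes from the curl man page.

```python
# A few curl exit codes relevant to this lab (curl man page, "EXIT CODES")
CURL_EXIT_CODES = {
    6: "could not resolve host: the DNS lookup failed",
    7: "failed to connect: the name resolved, but the TCP connection was not accepted",
    28: "operation timed out: no answer before the timeout",
}


def diagnose(exit_code: int) -> str:
    """Map the N in 'command terminated with exit code N' to a troubleshooting hint."""
    return CURL_EXIT_CODES.get(exit_code, f"unmapped curl exit code {exit_code}")


if __name__ == "__main__":
    print(diagnose(7))
```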

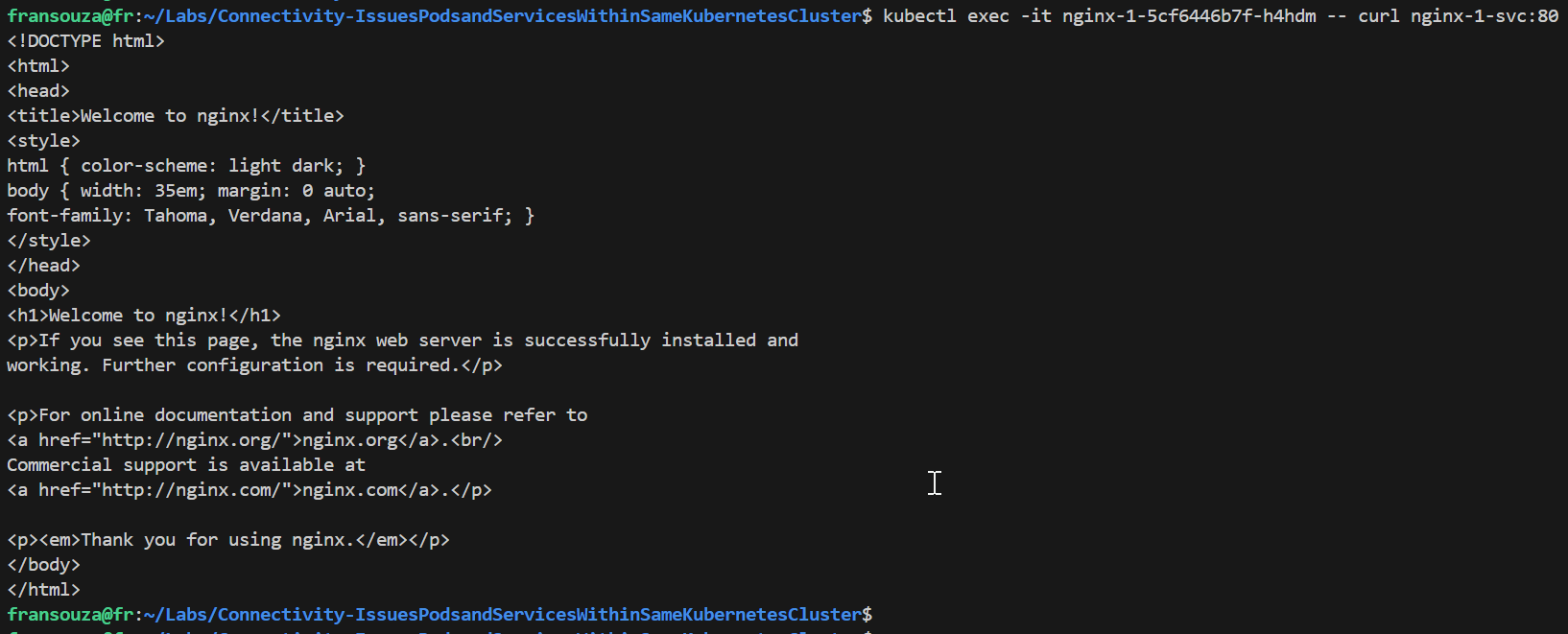

Verify that you can access <app-name-1>-svc from within the cluster:

```shell
# Displays the HTML page
k exec -it <app-name-1-pod> -- curl <app-name-1>-svc:80
```

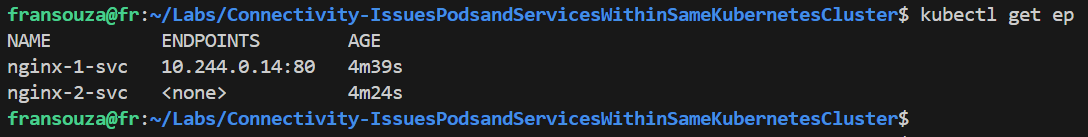

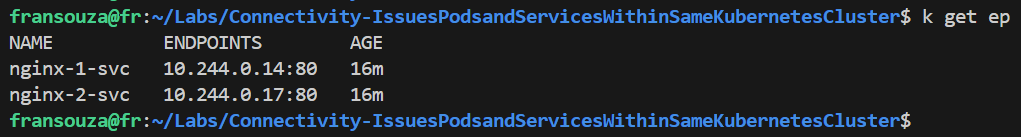

Verify that the Endpoints line up with their Services. <app-name-1>-svc should list at least one Pod IP as an endpoint, while <app-name-2>-svc should list none.

k get ep
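On a cluster with many Services, scanning the k get ep table by eye gets tedious. This is a minimal Python sketch that reads the JSON form (k get ep -o json) and lists Endpoints objects without any ready Pod address. The function name and the sample Service names are hypothetical.

```python
import json


def services_without_endpoints(ep_list_json: str) -> list:
    """Return the names of Endpoints objects that list no ready Pod addresses."""
    data = json.loads(ep_list_json)
    empty = []
    for ep in data.get("items", []):
        # A Service whose selector matches no Pods typically has no 'subsets' at all
        subsets = ep.get("subsets") or []
        ready = sum(len(s.get("addresses") or []) for s in subsets)
        if ready == 0:
            empty.append(ep["metadata"]["name"])
    return empty


if __name__ == "__main__":
    # Hypothetical, trimmed-down output of: k get ep -o json
    sample = json.dumps({
        "kind": "List",
        "items": [
            {"metadata": {"name": "nginx-1-svc"},
             "subsets": [{"addresses": [{"ip": "10.244.0.10"}],
                          "ports": [{"port": 80, "protocol": "TCP"}]}]},
            {"metadata": {"name": "nginx-2-svc"}},
        ],
    })
    print(services_without_endpoints(sample))
```

Pods that exist but are not Ready appear under notReadyAddresses, so the sketch deliberately counts only addresses.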

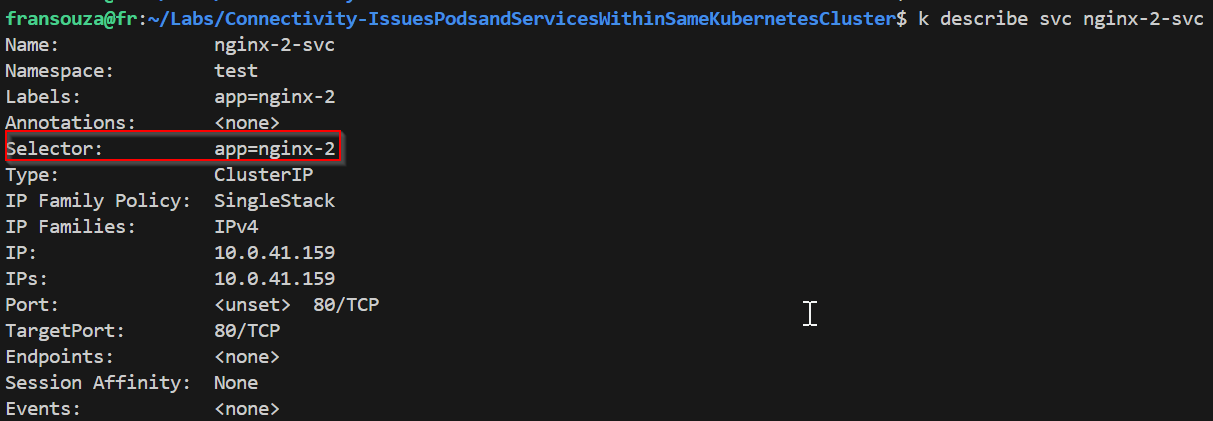

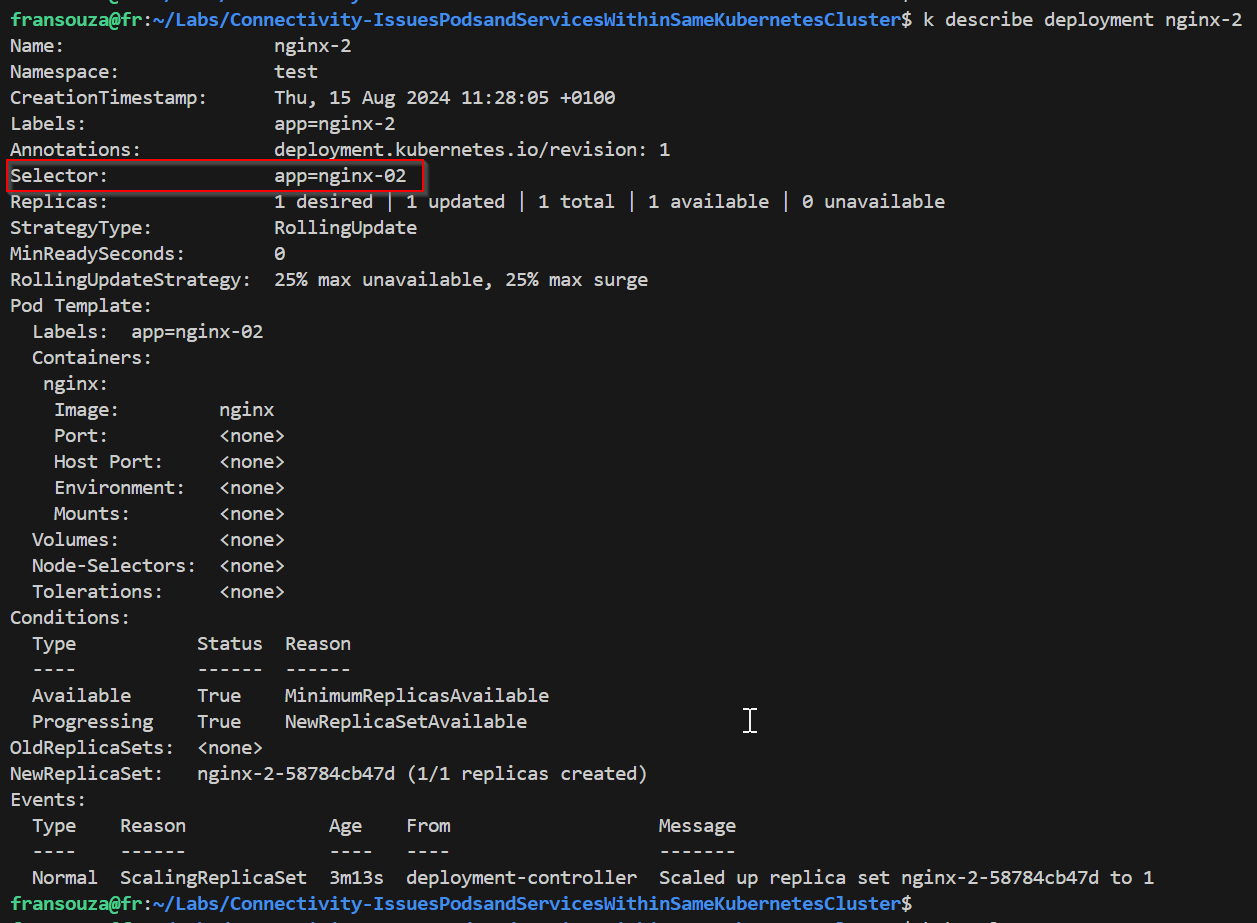

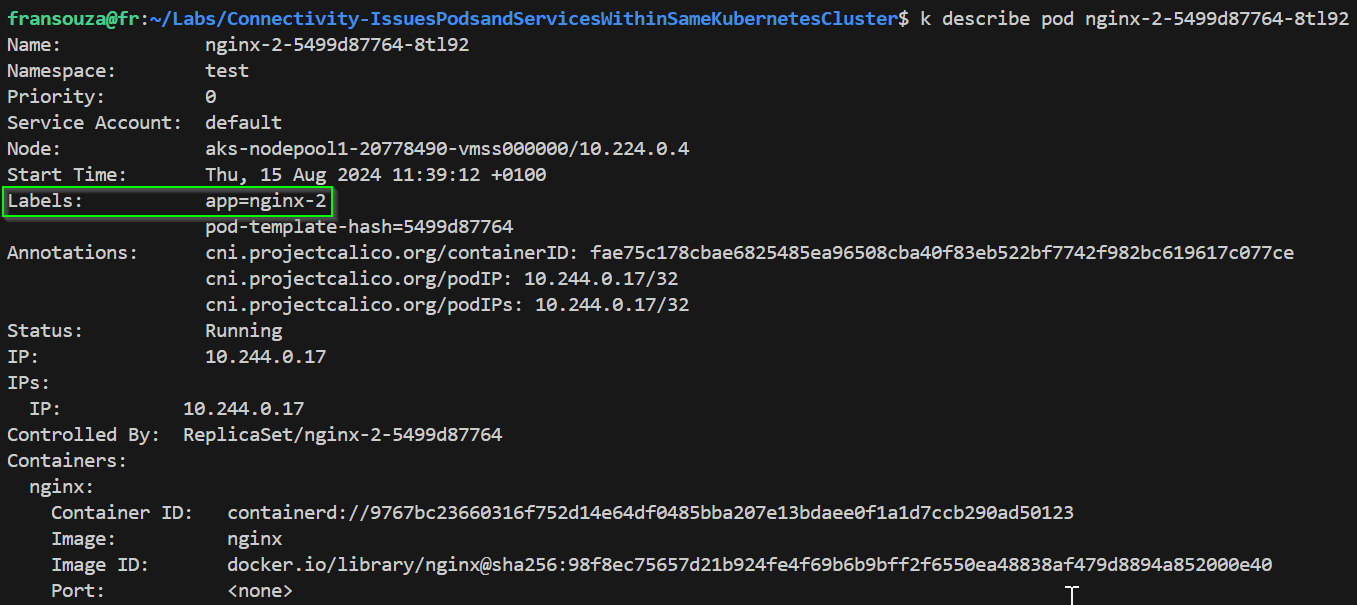

Check the label selector used by the Service experiencing the issue and make sure it matches the labels on the Pods created by its corresponding Deployment.

```shell
k describe service <service-name>
k describe deployment <deployment_name>
k get svc
k get deployment
```
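The comparison that matters is the Service's spec.selector against the Deployment's Pod template labels (spec.template.metadata.labels). This is a minimal Python sketch of that check. It assumes you feed it the JSON from k get svc <service-name> -o json and k get deployment <deployment_name> -o json. All function names are hypothetical.

```python
import json


def service_selector(svc_json: str) -> dict:
    """spec.selector from: k get svc <service-name> -o json"""
    return json.loads(svc_json)["spec"].get("selector") or {}


def pod_template_labels(deploy_json: str) -> dict:
    """spec.template.metadata.labels from: k get deployment <deployment_name> -o json"""
    return json.loads(deploy_json)["spec"]["template"]["metadata"].get("labels") or {}


def selector_mismatches(selector: dict, labels: dict) -> dict:
    """Map each selector key the Pod labels do not satisfy to (wanted, actual)."""
    return {k: (v, labels.get(k)) for k, v in selector.items() if labels.get(k) != v}


if __name__ == "__main__":
    # Hypothetical excerpts mirroring the broken lab setup
    svc = '{"spec": {"selector": {"app": "nginx-2"}}}'
    dep = '{"spec": {"template": {"metadata": {"labels": {"app": "nginx-02"}}}}}'
    print(selector_mismatches(service_selector(svc), pod_template_labels(dep)))
```

Extra labels on the Pods are harmless. Only selector keys that are missing or have a different value break the match.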

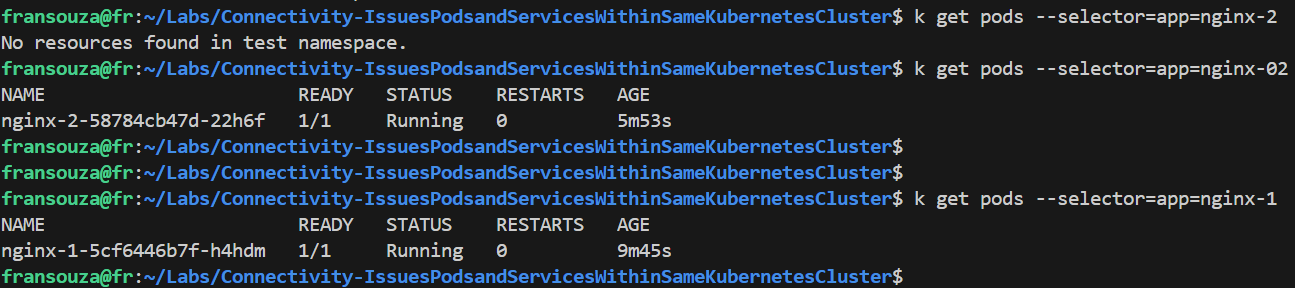

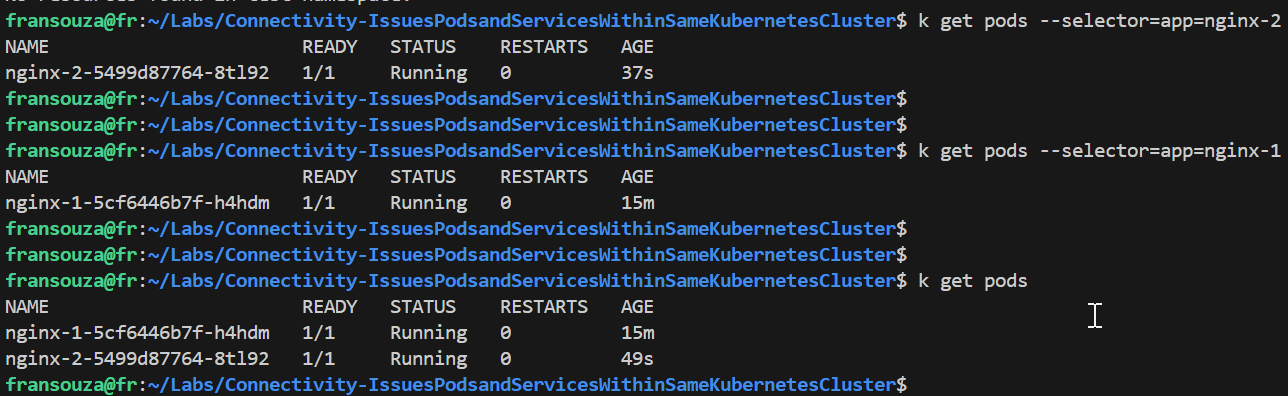

Using the Service label selector from the previous step, check that the Pods selected by the Service match the Pods created by the Deployment.

If no Pods are returned, there is a label selector mismatch. In the output below, the selector used by the Deployment returns Pods, but the selector used by the Service does not.

```shell
kubectl get pods --selector=<selector_used_by_service>
```
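For equality-based selectors such as app=nginx-2, the filtering that --selector performs can be sketched in a few lines of Python. This sketch handles only key=value pairs, not set-based expressions. It assumes input from k get pods -o json, and the function names are hypothetical.

```python
import json


def selector_string(selector: dict) -> str:
    """Format an equality-based selector for --selector, e.g. app=nginx-2."""
    return ",".join(f"{k}={v}" for k, v in sorted(selector.items()))


def pods_matching(pod_list_json: str, selector: dict) -> list:
    """Names of Pods whose labels contain every key=value pair of the selector."""
    names = []
    for pod in json.loads(pod_list_json).get("items", []):
        labels = pod["metadata"].get("labels") or {}
        # all() over an empty selector is True, so {} matches every Pod
        if all(labels.get(k) == v for k, v in selector.items()):
            names.append(pod["metadata"]["name"])
    return names


if __name__ == "__main__":
    # Hypothetical Pod list shaped like: k get pods -o json
    pods = '{"items": [{"metadata": {"name": "nginx-2-abc", "labels": {"app": "nginx-02"}}}]}'
    service_sel = {"app": "nginx-2"}
    print(selector_string(service_sel), pods_matching(pods, service_sel))
```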

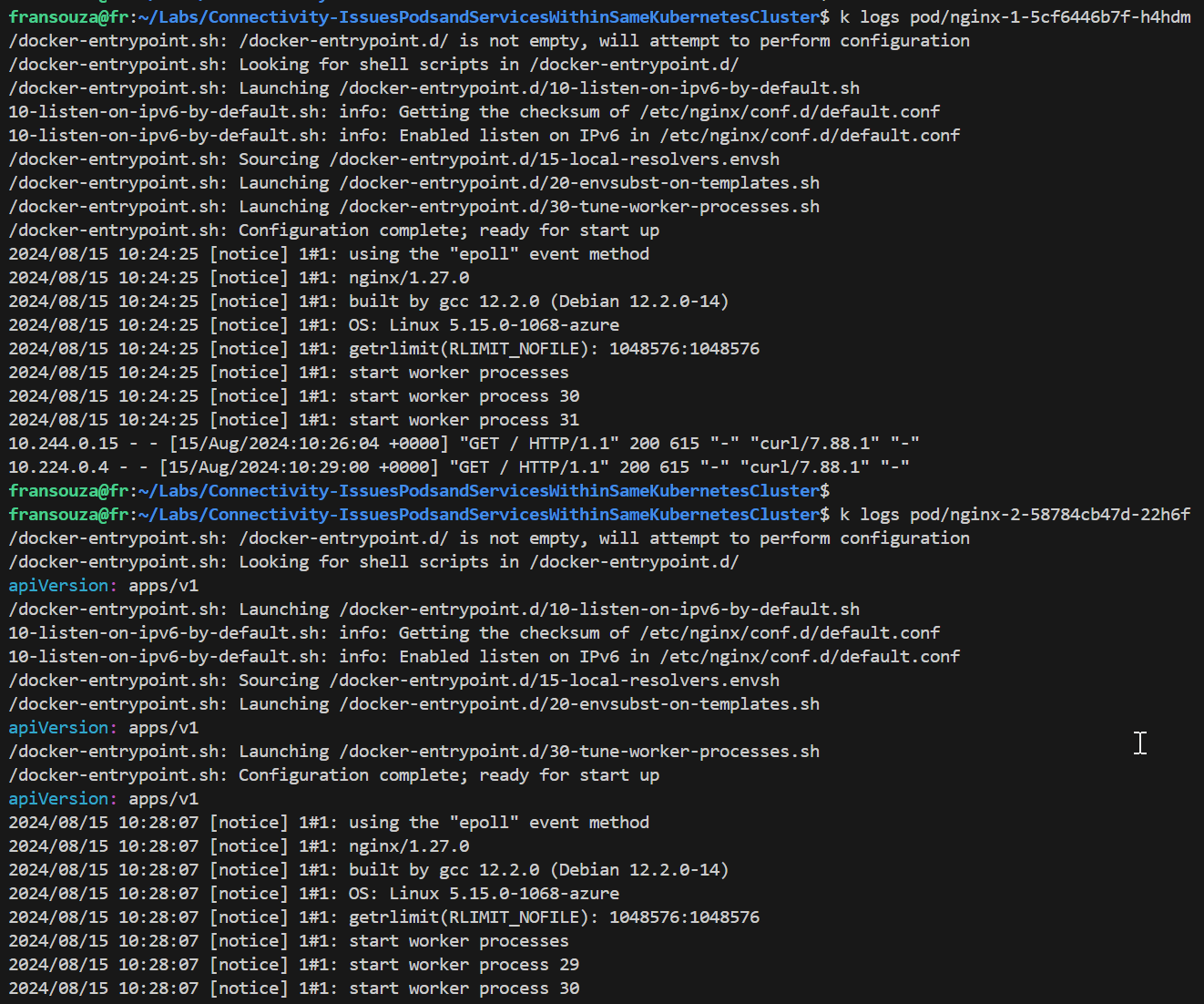

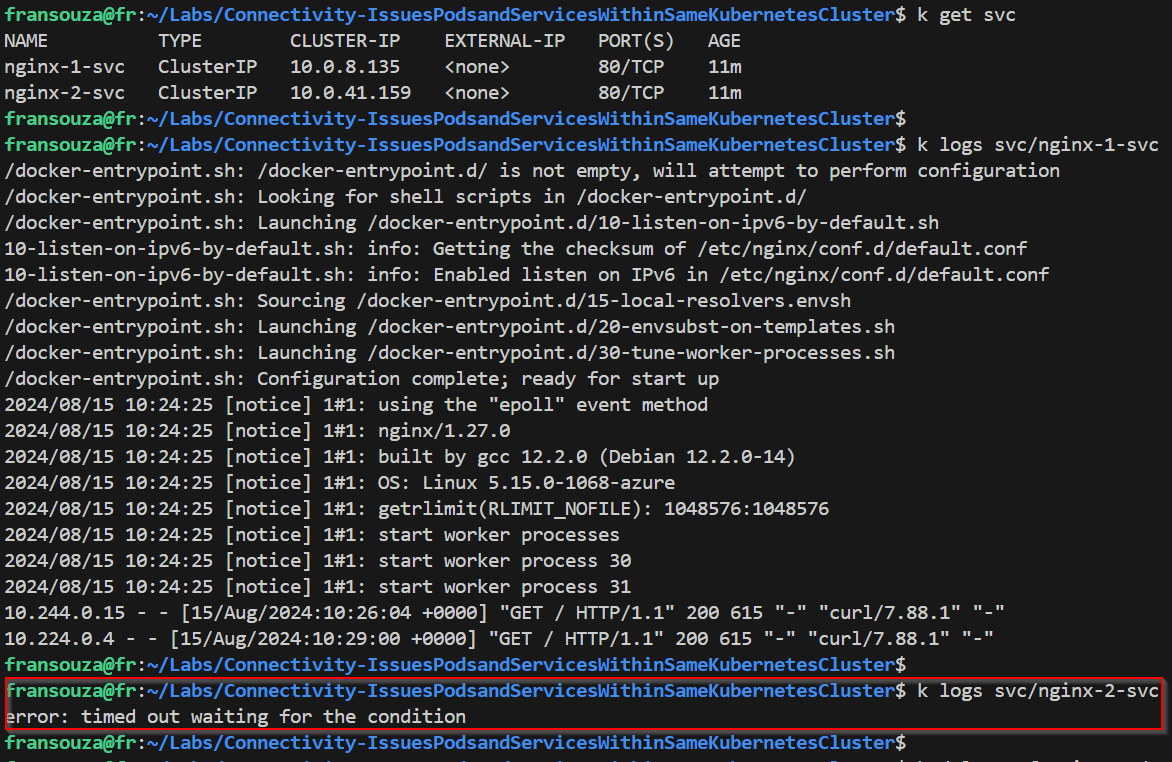

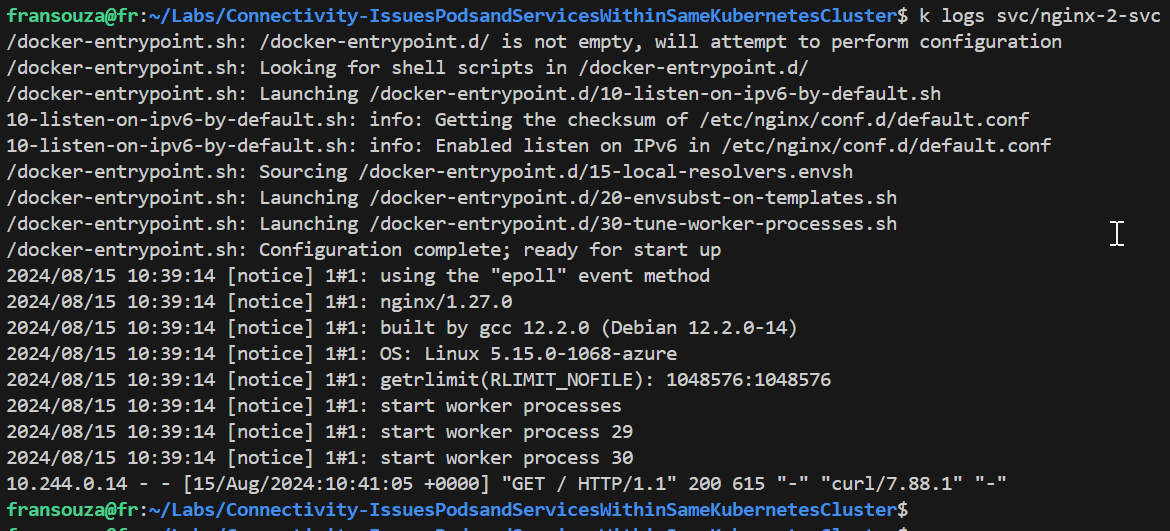

Check the Service and Pod logs to see whether HTTP traffic arrives. Compare the <app-name-1> logs with the <app-name-2> logs: before the fix, <app-name-2> shows no incoming requests.

```shell
k logs pod/<app-name-2-pod>
k logs pod/<app-name-1-pod>
k logs svc/<app-name-2>-svc
k logs svc/<app-name-1>-svc
```
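To compare the two sides quickly, you could save each log (for example k logs pod/<app-name-1-pod> > app1.log) and count the request lines. This is a minimal Python sketch. It assumes the default access-log format of the official nginx image, where each request line contains "$request" $status. The function name and the sample lines are made up for illustration.

```python
import re

# Matches the '"$request" $status ' part of an nginx access-log line,
# e.g. "GET / HTTP/1.1" 200 (assumed default nginx image log format)
REQUEST_RE = re.compile(r'"(?P<method>[A-Z]+) (?P<path>\S+) HTTP/[0-9.]+" (?P<status>\d{3}) ')


def http_requests(log_text: str) -> list:
    """Return (method, path, status) for every access-log line in the text."""
    found = []
    for line in log_text.splitlines():
        match = REQUEST_RE.search(line)
        if match:
            found.append((match["method"], match["path"], int(match["status"])))
    return found


if __name__ == "__main__":
    # Made-up lines: one startup notice and one request from curl
    sample = (
        "2025/01/10 10:00:00 [notice] 1#1: start worker processes\n"
        '10.244.0.12 - - [10/Jan/2025:10:00:05 +0000] "GET / HTTP/1.1" 200 615 "-" "curl/8.5.0" "-"\n'
    )
    print(http_requests(sample))
```

An empty list for <app-name-2> next to a non-empty one for <app-name-1> matches the "no incoming traffic" symptom.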

📝Solving the Issue:

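Check the label selector of the Service associated with the issue and list the Pods it is supposed to select:

```shell
k describe service <app-name-2>-svc
k describe pods -l app=<app-name-2>
```

Update the deployment and apply the changes. A Deployment's selector cannot be changed after creation, so delete the Deployment first. Then, in downservice.yaml, change both occurrences of app: <app-name-02> (under selector.matchLabels and template.metadata.labels) to app: <app-name-2>:

```shell
k delete -f <app-name-2>-dep.yaml
# In downservice.yaml, update labels: app: <app-name-02> -> app: <app-name-2>
k apply -f downservice.yaml
k describe pod <app-name-2>
k get ep
```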

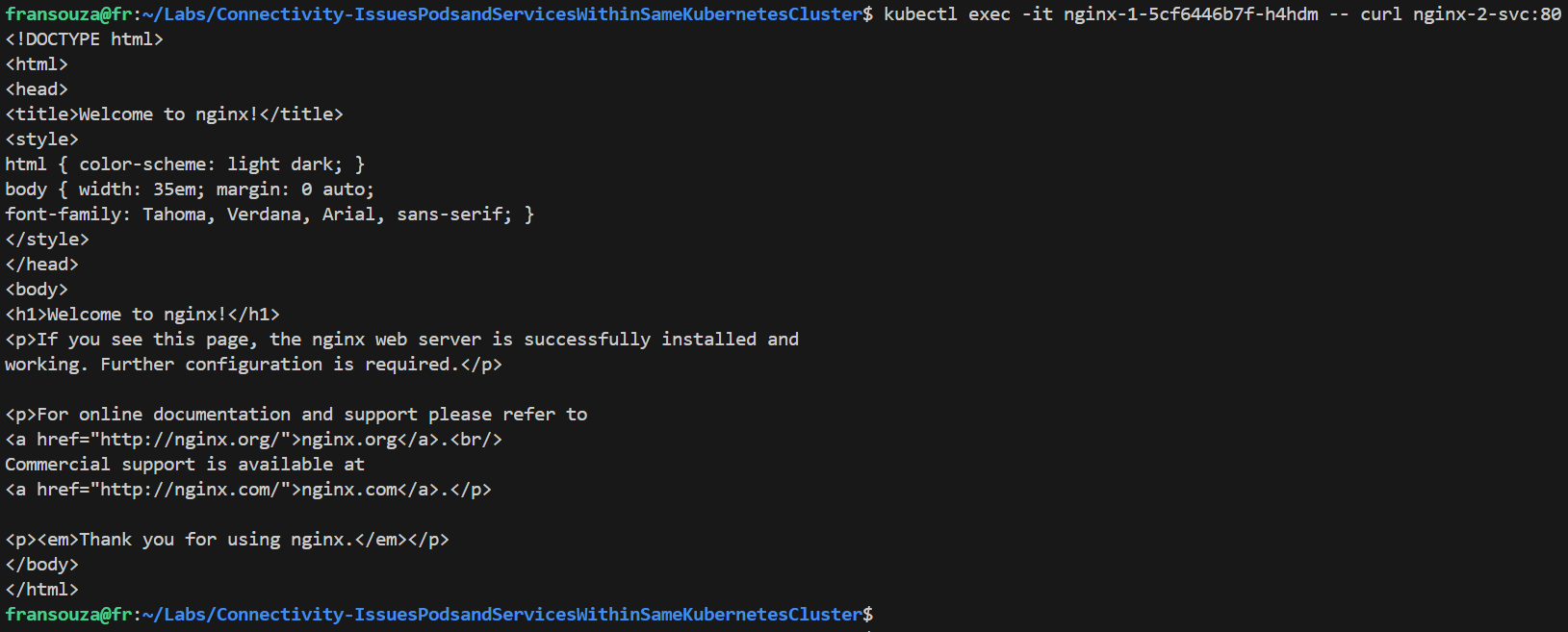

Verify that we can now reach <app-name-2>-svc from within the cluster:

```shell
# Should return the HTML page through <app-name-2>-svc
kubectl exec -it <app-name-1-pod> -- curl <app-name-2>-svc:80
# Confirm from the logs
k logs pod/<app-name-2-pod>
```

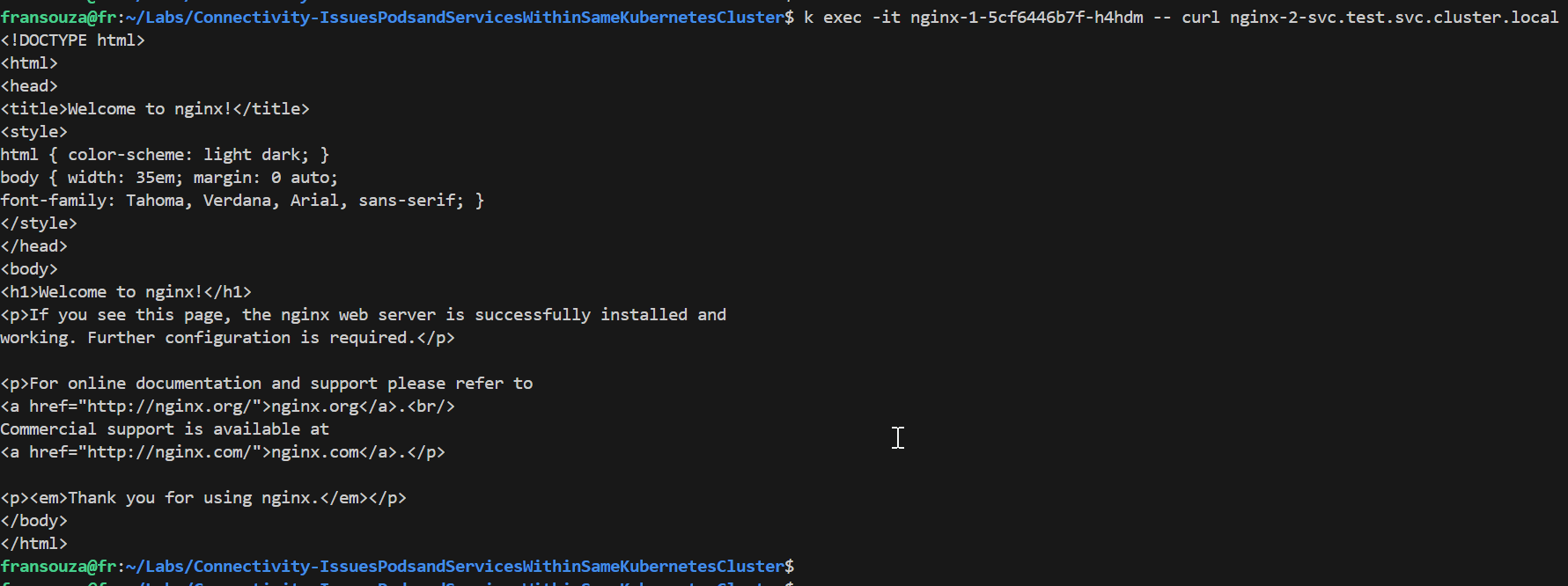

📝Extra Troubleshooting Using Custom Domain Names

Currently, Services in our namespace resolve as <service-name>.<namespace>.svc.cluster.local (here the namespace is test):

```shell
k exec -it <app-name-1-pod> -- curl <app-name-2>-svc.test.svc.cluster.local
```

Let's to create a custom

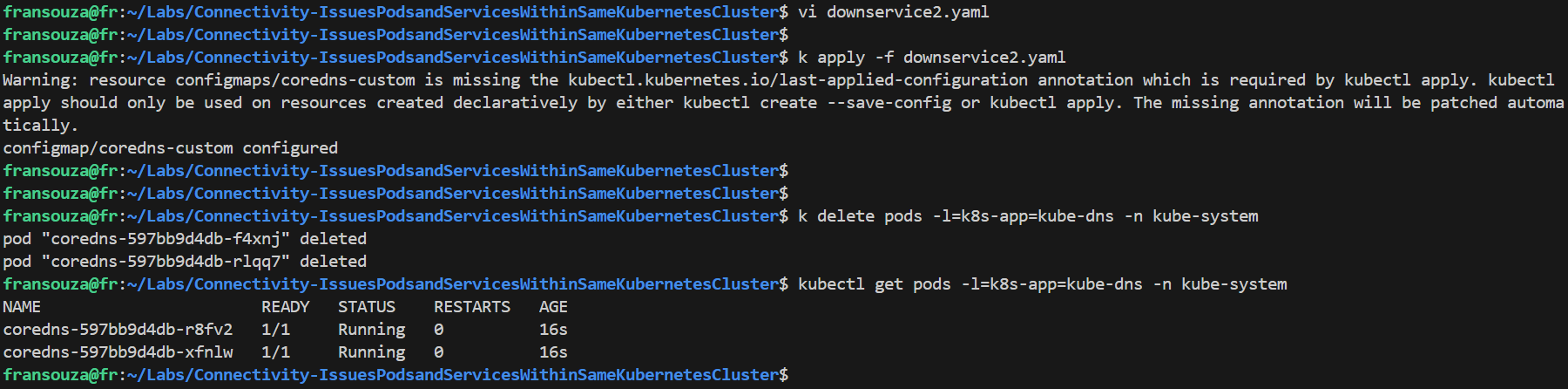

yamlfile calleddownservice2.yamlto break it:apiVersion: v1 kind: ConfigMap metadata: name: coredns-custom namespace: kube-system data: internal-custom.override: | # any name with .server extension rewrite stop { name regex (.*)\.svc\.cluster\.local {1}.bad.cluster.local. answer name (.*)\.bad\.cluster\.local {1}.svc.cluster.local. }Apply the

downservice2.yamlfile and and restartcoredns:k apply -f downservice2.yaml k delete pods -l=k8s-app=kube-dns -n kube-system # Monitor to ensure pods are running k get pods -l=k8s-app=kube-dns -n kube-system

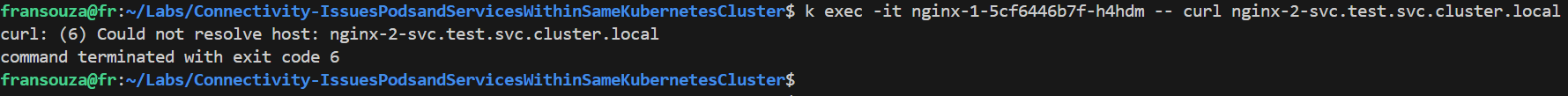

Check DNS resolution again; this time it should fail:

```shell
k exec -it <app-name-1-pod> -- curl <app-name-2>-svc.test.svc.cluster.local
```

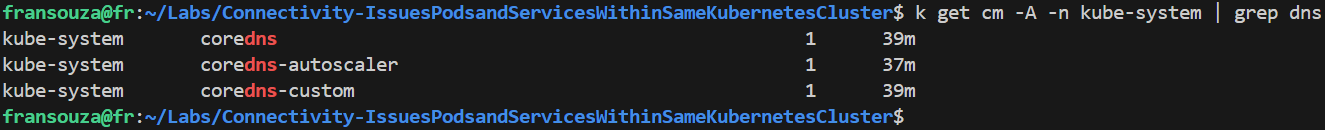
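Why it fails: the first rewrite rule turns every name ending in .svc.cluster.local into the same name under .bad.cluster.local before lookup, and no Service records exist there. The Python below only approximates that rule to show its effect. CoreDNS itself evaluates Go regular expressions against fully qualified names. The function name rewrite_query is hypothetical.

```python
import re

# Approximation of: name regex (.*)\.svc\.cluster\.local {1}.bad.cluster.local.
NAME_RULE = re.compile(r"^(.*)\.svc\.cluster\.local\.?$")


def rewrite_query(qname: str) -> str:
    """Return the name that would be looked up after the rewrite rule."""
    match = NAME_RULE.match(qname)
    # {1} in the CoreDNS rule refers to the first capture group
    return f"{match.group(1)}.bad.cluster.local." if match else qname


if __name__ == "__main__":
    print(rewrite_query("nginx-2-svc.test.svc.cluster.local."))
```

Names outside cluster.local, such as external domains, pass through unchanged, which is why only in-cluster Service lookups break.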

Check the DNS-related ConfigMaps in the kube-system namespace:

```shell
k get cm -n kube-system | grep dns
```

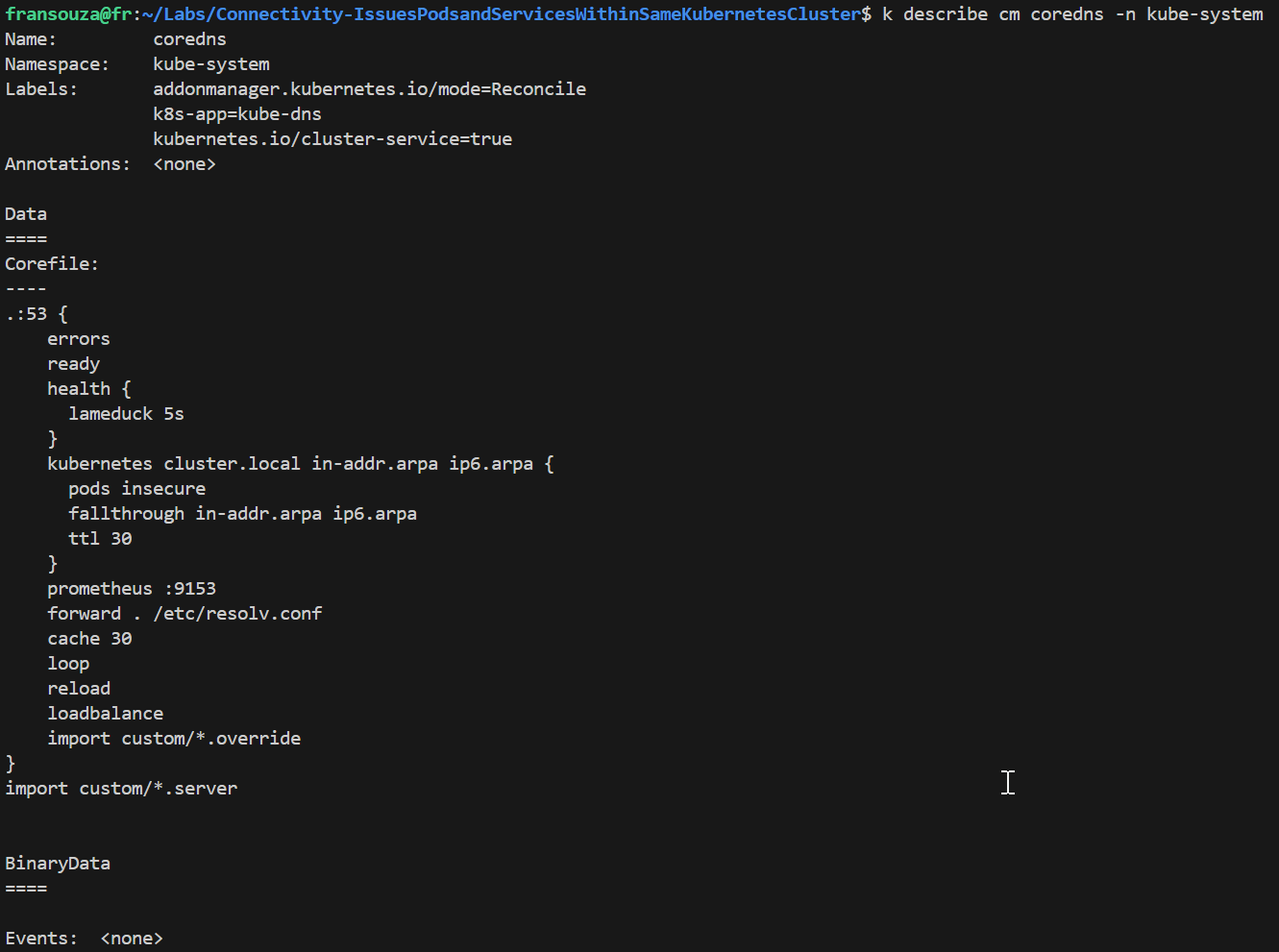

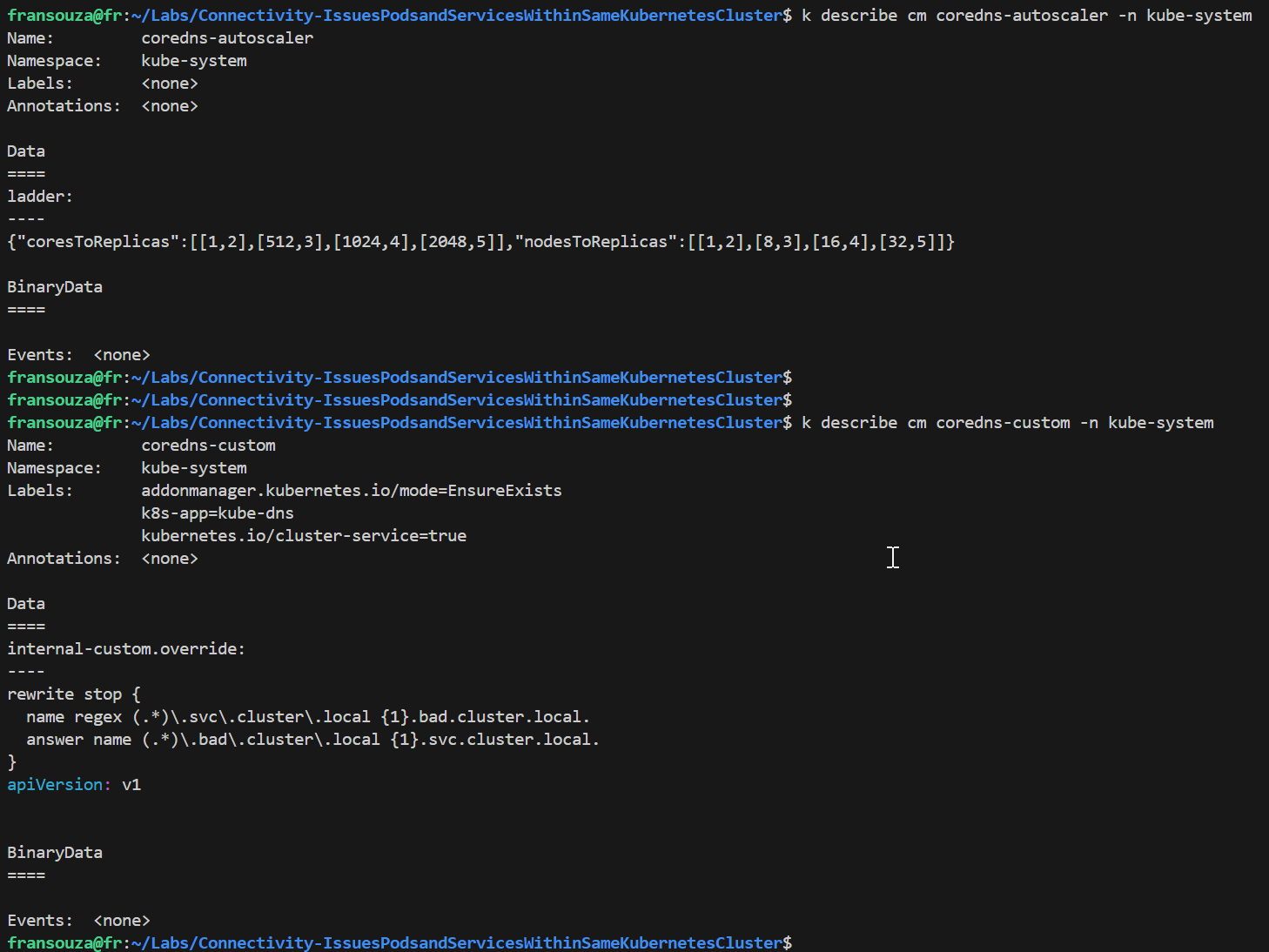

Describe each of the ones found above and look for inconsistencies:

```shell
k describe cm coredns -n kube-system
k describe cm coredns-autoscaler -n kube-system
k describe cm coredns-custom -n kube-system
```
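On a default AKS cluster, coredns-custom typically carries no data. Any directive that appears there is therefore a good first suspect. This minimal Python sketch lists lines in a ConfigMap's data that start with a few CoreDNS plugin names. It assumes input from k get cm coredns-custom -n kube-system -o json. The keyword list is illustrative rather than exhaustive, and the function name is hypothetical.

```python
import json

# CoreDNS plugins that change how queries are answered (illustrative subset)
KEYWORDS = ("rewrite", "forward", "hosts", "template")


def custom_directives(cm_json: str, keywords=KEYWORDS) -> list:
    """List (data key, line number, line) for ConfigMap lines that start with a keyword."""
    data = json.loads(cm_json).get("data") or {}
    hits = []
    for key, body in sorted(data.items()):
        for number, line in enumerate(body.splitlines(), start=1):
            stripped = line.strip()
            if stripped.split(" ", 1)[0] in keywords:
                hits.append((key, number, stripped))
    return hits


if __name__ == "__main__":
    # Hypothetical output shaped like the broken coredns-custom ConfigMap
    sample = json.dumps({"data": {"internal-custom.override": "rewrite stop {\n  name regex a b\n}\n"}})
    print(custom_directives(sample))
```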

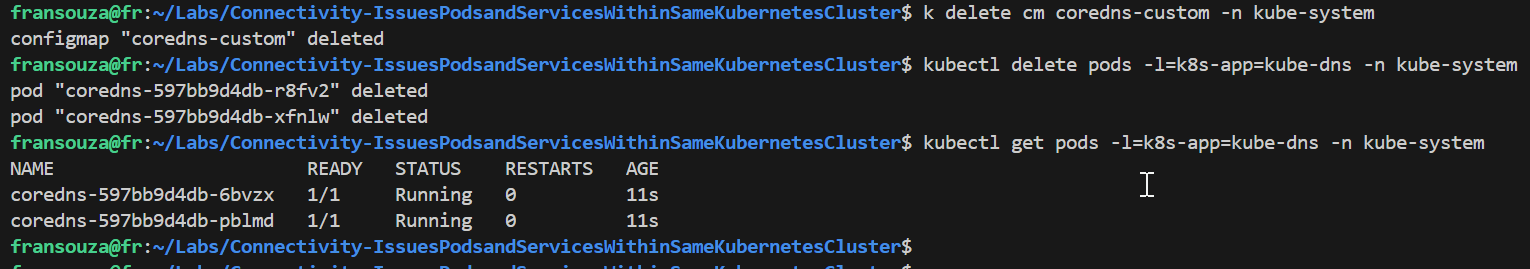

The custom DNS ConfigMap holds wrong parameters that break name resolution. To solve the issue, either edit coredns-custom (to remove the data section) or delete the ConfigMap, and then restart the DNS Pods:

```shell
k delete cm coredns-custom -n kube-system
kubectl delete pods -l=k8s-app=kube-dns -n kube-system
# Monitor to ensure pods are running
kubectl get pods -l=k8s-app=kube-dns -n kube-system
```

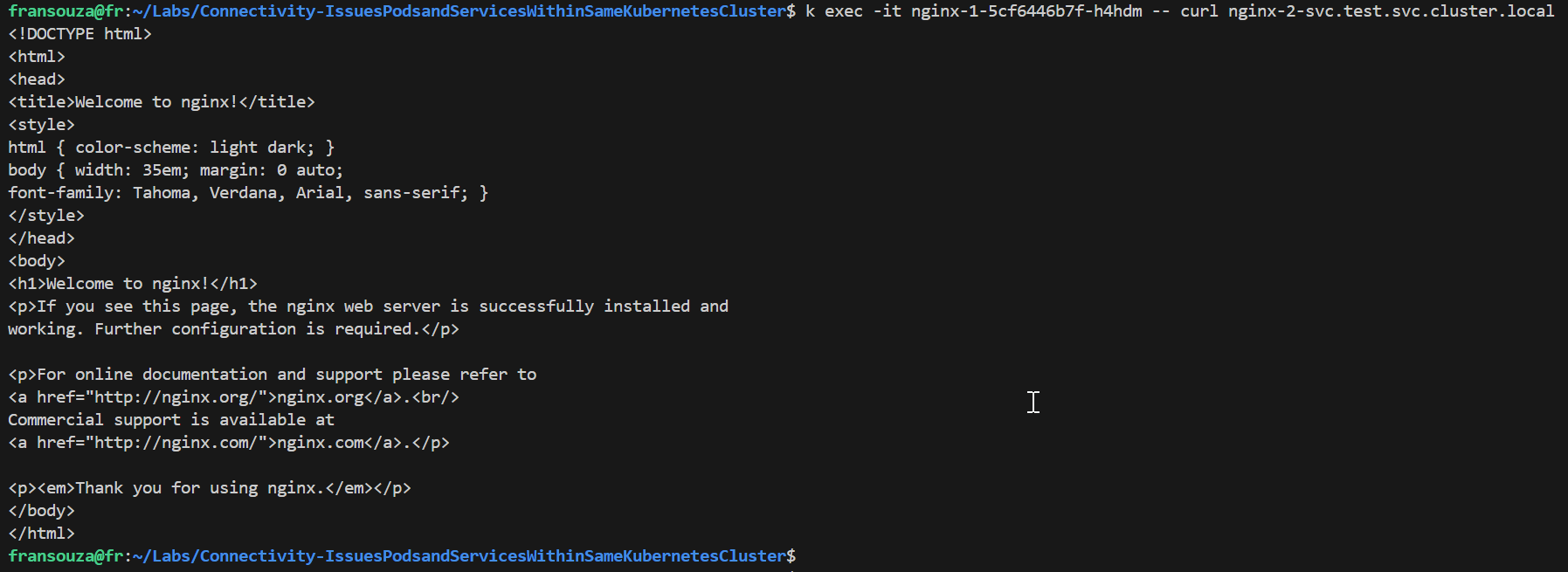

Confirm DNS resolution now works as before.

```shell
k exec -it <app-name-1-pod> -- curl <app-name-2>-svc.test.svc.cluster.local
```

📌Note - At the end of each hands-on Lab, always clean up all resources previously created to avoid being charged.

Congratulations! You have completed this hands-on lab covering the basics of troubleshooting AKS connectivity issues between Pods and Services within the same cluster.

Thank you for reading. I hope you understood and learned something helpful from my blog.

Please follow me on Cloud&DevOpsLearn and LinkedIn, franciscojblsouza


Written by

Francisco Souza


I have over 20 years of experience in IT Infrastructure and currently work at Microsoft as an Azure Kubernetes Support Engineer, where I support and manage the AKS, ACI, ACR, and ARO tools. Previously, I worked as a Fault Management Cloud Engineer at Nokia for 2.9 years, with expertise in OpenStack, Linux, Zabbix, Commvault, and other tools. In this role, I resolved critical technical incidents, ensured consistent uptime, and safeguarded against revenue loss from customers. Additionally, I briefly served as a Technical Team Lead for 3 months, where I distributed tasks, mentored a new team member, and managed technical requests and activities raised by our customers. Previously, I worked as an IT System Administrator at BNP Paribas Cardif Portugal and other significant companies in Brazil, including an affiliate of Rede Globo Television (Rede Bahia) and Petrobras SA. In these roles, I developed a robust skill set, acquired the ability to adapt to new processes, demonstrated excellent problem-solving and analytical skills, and managed ticket systems to enhance the customer service experience. My ability to thrive in high-pressure environments and meet tight deadlines is a testament to my organizational and proactive approach. By collaborating with colleagues and other teams, I ensure robust support and incident management, contributing to the consistent satisfaction of my customers and the reliability of the entire IT Infrastructure.