Day 10 Task: Log Analyzer and Report Generator

Rakshita Belwal

Challenge Title: Log Analyzer and Report Generator

Scenario

You are a system administrator responsible for managing a network of servers. Every day, each server generates a log file containing important system events and error messages. As part of your daily tasks, you need to analyze these log files, identify specific events, and generate a summary report.

Task

Write a Bash script that automates the process of analyzing log files and generating a daily summary report. The script should perform the following steps:

Input: The script should take the path to the log file as a command-line argument.

Error Count: Analyze the log file and count the number of error messages. An error message can be identified by a specific keyword (e.g., "ERROR" or "Failed"). Print the total error count.
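
One way to get this number is `grep -c`, which counts matching lines. The following is a minimal sketch. It assumes that a line containing either keyword counts as one error. The function name `count_errors` is hypothetical:

```bash
#!/bin/bash
# count_errors: print how many lines of a log file contain "ERROR" or "Failed".
# grep -c counts matching *lines*, so a line containing both keywords counts once.
count_errors() {
    local log_file=$1
    # -E enables extended regular expressions, so "|" means "either keyword"
    grep -cE 'ERROR|Failed' "$log_file"
}

# Example: count_errors /home/ubuntu/logs/log_file.log
```

When nothing matches, `grep` exits with status 1 but still prints `0`, so the captured count is correct either way.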

Critical Events: Search for lines containing the keyword "CRITICAL" and print those lines along with the line number.
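
`grep -n "CRITICAL"` already prefixes each match with its line number (for example `12:...`). For a more readable format, `awk` can do the same job with its built-in `NR` line counter. Here is a sketch using a hypothetical `list_critical` function:

```bash
#!/bin/bash
# list_critical: print every line containing "CRITICAL", prefixed with its line number.
# NR is awk's running record (line) number; $0 is the whole current line.
list_critical() {
    awk '/CRITICAL/ { printf "Line %d: %s\n", NR, $0 }' "$1"
}

# Example: list_critical /home/ubuntu/logs/log_file.log
```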

Top Error Messages: Identify the top 5 most common error messages and display them along with their occurrence count.
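
A common shell idiom for "top N" questions is `sort | uniq -c | sort -rn | head`. The sketch below assumes the message is the text from the first `ERROR` to the end of the line. This drops leading timestamps, so repeated messages group together. `top_errors` is a hypothetical name:

```bash
#!/bin/bash
# top_errors: print the 5 most frequent error messages, each prefixed by its count.
top_errors() {
    grep -o 'ERROR.*' "$1" |  # keep only the "ERROR ..." part of each matching line
        sort |                # put identical messages next to each other
        uniq -c |             # collapse duplicates, prefixing each with its count
        sort -rn |            # highest count first
        head -n 5             # keep the top 5
}
```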

Summary Report: Generate a summary report in a separate text file. The report should include:

- Date of analysis
- Log file name
- Total lines processed
- Total error count
- Top 5 error messages with their occurrence count
- List of critical events with line numbers
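
As a rough sketch, you can assemble the report by redirecting a single `{ ... }` group of commands into the output file. The function `write_report` and its argument order are hypothetical. The sketch assumes that error lines contain the keyword `ERROR` and that the message runs from `ERROR` to the end of the line:

```bash
#!/bin/bash
# write_report LOG_FILE REPORT_FILE: write a plain-text summary of LOG_FILE.
write_report() {
    local log_file=$1 report=$2
    {
        echo "Date of analysis: $(date '+%Y-%m-%d %H:%M:%S')"
        echo "Log file name: $(basename "$log_file")"
        echo "Total lines processed: $(wc -l < "$log_file")"
        echo "Total error count: $(grep -c 'ERROR' "$log_file")"
        echo "Top 5 error messages:"
        grep -o 'ERROR.*' "$log_file" | sort | uniq -c | sort -rn | head -n 5
        echo "Critical events:"
        # grep -n prints "LINE:text"; fall back to a placeholder when nothing matches
        grep -n 'CRITICAL' "$log_file" || echo "(none)"
    } > "$report"
}

# Example: write_report /home/ubuntu/logs/log_file.log "summary_$(date +%Y-%m-%d).txt"
```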

Optional Enhancement: Add a feature to automatically archive or move processed log files to a designated directory after analysis.
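
Here is a minimal sketch of the archive step. It assumes an archive directory named `processed_logs` (overridable through a second argument) and appends a timestamp suffix so that repeated runs don't overwrite earlier archives. The function name and naming scheme are illustrative:

```bash
#!/bin/bash
# archive_log LOG_FILE [ARCHIVE_DIR]: move a processed log into ARCHIVE_DIR
# as <name>.<YYYYmmdd-HHMMSS> and print the new path.
archive_log() {
    local log_file=$1
    local archive_dir=${2:-processed_logs}
    local dest

    [ -f "$log_file" ] || { echo "Error: '$log_file' not found" >&2; return 1; }
    mkdir -p "$archive_dir" || return 1

    # ${log_file##*/} strips the directory part, like basename
    dest="$archive_dir/${log_file##*/}.$(date +%Y%m%d-%H%M%S)"
    mv "$log_file" "$dest" || return 1
    echo "$dest"
}
```

Because the function prints the new path, a caller can capture it with `dest=$(archive_log "$log_file")`.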

Tips

Use grep, awk, and other command-line tools to process the log file.

Utilize arrays or associative arrays to keep track of error messages and their counts.
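
Here is a sketch of the associative-array approach in Bash. It assumes Bash 4 or later (macOS ships Bash 3.2 by default, which lacks `declare -A`). It treats the text after the first `ERROR` as the message. `count_error_messages` is a hypothetical name:

```bash
#!/bin/bash
# count_error_messages: tally identical error messages in an associative array
# and print the five most frequent as "<count><TAB><message>". Requires Bash 4+.
count_error_messages() {
    local -A counts=()
    local line msg n
    # Assumption: the message is whatever follows the first "ERROR",
    # minus any spaces or colons directly after the keyword.
    local re='ERROR[[:space:]:]*(.*)'

    # "|| [ -n "$line" ]" also handles a last line with no trailing newline
    while IFS= read -r line || [ -n "$line" ]; do
        if [[ $line =~ $re ]]; then
            msg=${BASH_REMATCH[1]:-"(no message)"}   # Bash rejects an empty key
            n=${counts["$msg"]:-0}
            counts["$msg"]=$((n + 1))
        fi
    done < "$1"

    for msg in "${!counts[@]}"; do
        printf '%d\t%s\n' "${counts["$msg"]}" "$msg"
    done | sort -t $'\t' -k1,1nr | head -n 5
}
```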

Add error handling for cases where the log file doesn't exist or other problems occur.
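
The following sketch wraps these checks in a hypothetical `check_log_file` function. It reports the specific problem on standard error and returns a non-zero status:

```bash
#!/bin/bash
# check_log_file PATH: succeed only if PATH names an existing, readable regular file.
check_log_file() {
    local f=$1
    if [ -z "$f" ]; then
        echo "Error: no log file given" >&2; return 1
    elif [ ! -e "$f" ]; then
        echo "Error: '$f' does not exist" >&2; return 1
    elif [ ! -f "$f" ]; then
        echo "Error: '$f' is not a regular file" >&2; return 1
    elif [ ! -r "$f" ]; then
        echo "Error: '$f' is not readable" >&2; return 1
    fi
}
```

A script could then start with `check_log_file "$1" || exit 1`.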

Solution:

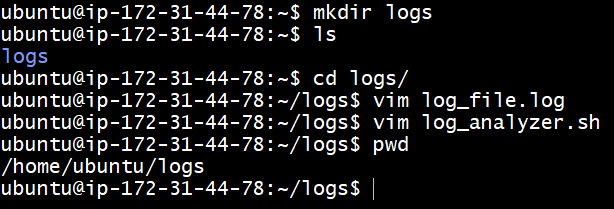

Create a folder, then create a log file inside it:
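
If you don't have a real log at hand, a small sample file is enough to exercise every step. The lines below are made-up sample data. The relative `logs` folder is an assumption (the post uses `/home/ubuntu/logs`):

```bash
#!/bin/bash
# Create a folder and a hypothetical sample log file to test the analyzer with.
log_dir="logs"
mkdir -p "$log_dir"

# Quoted 'EOF' keeps the here-document literal (no variable expansion)
cat > "$log_dir/log_file.log" <<'EOF'
2024-05-01 10:00:01 INFO Service started
2024-05-01 10:00:05 ERROR Database connection failed
2024-05-01 10:01:12 WARNING Disk usage at 85%
2024-05-01 10:02:30 ERROR Database connection failed
2024-05-01 10:03:44 CRITICAL Disk usage at 98%
2024-05-01 10:04:10 ERROR Timeout while calling payment API
2024-05-01 10:05:00 INFO Failed login attempt for user admin
EOF

wc -l "$log_dir/log_file.log"
```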

Create log_analyzer.sh and insert the following shell script:

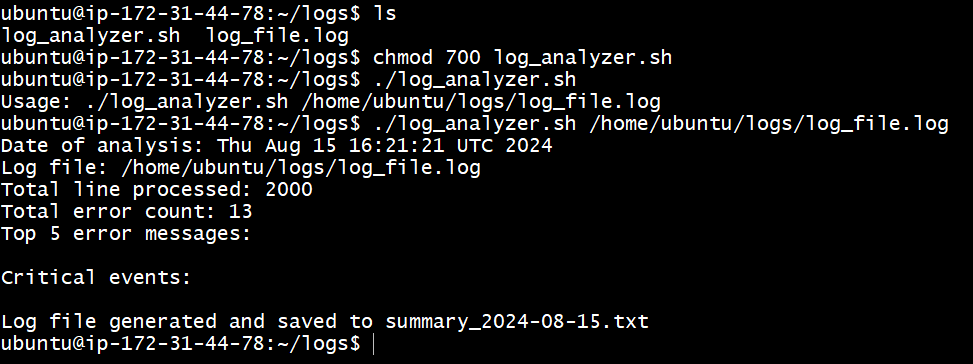

```bash
#!/bin/bash

# Expect exactly one argument: the path to the log file
if [ $# -ne 1 ]; then
    echo "Usage: $0 <path_to_log_file>  (e.g. $0 /home/ubuntu/logs/log_file.log)"
    exit 1
fi

log_file=$1
summary_report="summary_$(date +%Y-%m-%d).txt"

# Check if the log file exists
if [ ! -f "$log_file" ]; then
    echo "Error: Log file '$log_file' not found!"
    exit 1
fi

# Count the total number of lines in the log file
total_lines=$(wc -l < "$log_file")

# Count the number of error messages
error_count=$(grep -c "ERROR" "$log_file")

# Search for critical events (grep -n prefixes each match with its line number)
critical_events=$(grep -n "CRITICAL" "$log_file")

# Identify the top 5 most common error messages.
# Assumes the message is whatever follows the first "ERROR" on the line.
declare -A error_messages
error_regex='ERROR[[:space:]:]*(.*)'
while IFS= read -r line; do
    if [[ "$line" =~ $error_regex ]]; then
        message="${BASH_REMATCH[1]:-(no message)}"
        count=${error_messages["$message"]:-0}
        error_messages["$message"]=$((count + 1))
    fi
done < "$log_file"

top_errors=$(for msg in "${!error_messages[@]}"; do
    echo "${error_messages["$msg"]}: $msg"
done | sort -nr | head -5)

# Generate summary report
{
    echo "Date of analysis: $(date)"
    echo "Log file: $log_file"
    echo "Total lines processed: $total_lines"
    echo "Total error count: $error_count"
    echo "Top 5 error messages:"
    echo "$top_errors"
    echo "Critical events:"
    echo "$critical_events"
} > "$summary_report"

# Print the summary report to the console
cat "$summary_report"

# Optional: archive the processed log file (the report stays where it is)
archive_dir="processed_logs"
mkdir -p "$archive_dir"
mv "$log_file" "$archive_dir/"

echo "Summary report saved to $summary_report; log file moved to $archive_dir/"
```

Change the file permission to make it executable and execute:

Check the summary report:
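
The report file is named `summary_YYYY-MM-DD.txt`. Depending on how the archive step is written, it ends up either in the current directory or in `processed_logs/`. This hypothetical helper checks both locations and prints the most recent report:

```bash
#!/bin/bash
# show_latest_report: print the newest summary_*.txt found in the current
# directory or in processed_logs/. Returns 1 if there is none.
show_latest_report() {
    local latest
    # ls -t sorts newest first; 2>/dev/null hides errors for patterns that match nothing
    latest=$(ls -t summary_*.txt processed_logs/summary_*.txt 2>/dev/null | head -n 1)
    if [ -z "$latest" ]; then
        echo "No summary report found" >&2
        return 1
    fi
    echo "== $latest =="
    cat -- "$latest"
}
```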

HAPPY SCRIPTING!🚀

Written by

Rakshita Belwal

Hi there! I'm Rakshita Belwal, an enthusiastic and aspiring DevOps engineer and Cloud engineer with a passion for integrating development and operations to create seamless, efficient, and automated workflows. I'm driven by the challenges of modern software development and am dedicated to continuous learning and improvement in the DevOps field.